English text plagiarism identifier

This project aims to use a neural network that analyses a text according to the writing style of each phrase. If a phrase's style differs from the style of the text as a whole by more than a certain level, the neural network flags that phrase and warns that it might be plagiarized. A webapp was built on top of this neural network so that users can check for plagiarism more easily.
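The idea of flagging phrases whose style deviates from the rest of the text can be illustrated with a toy sketch. This is not the project's neural network: the 2-D "style vectors", the cosine-distance measure, the `flag_outliers` helper, and the `0.5` threshold are all hypothetical choices made only for illustration.

```python
import math


def cosine_distance(a, b):
    """Return 1 - cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 1.0
    return 1.0 - dot / (norm_a * norm_b)


def flag_outliers(phrase_vectors, threshold=0.5):
    """Return indices of phrases whose vector is far from the document mean."""
    if not phrase_vectors:
        return []
    dim = len(phrase_vectors[0])
    count = len(phrase_vectors)
    # The mean vector stands in for "the style of the text as a whole".
    mean = [sum(v[i] for v in phrase_vectors) / count for i in range(dim)]
    return [
        i for i, v in enumerate(phrase_vectors)
        if cosine_distance(v, mean) > threshold
    ]


# Hypothetical style vectors: three similar phrases and one outlier.
vectors = [[1.0, 0.1], [0.9, 0.2], [1.0, 0.0], [-0.2, 1.0]]
suspicious = flag_outliers(vectors, threshold=0.5)
print(suspicious)  # indices of phrases that would be flagged
```

In this toy data only the last phrase points in a clearly different direction from the mean, so it is the only one flagged.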

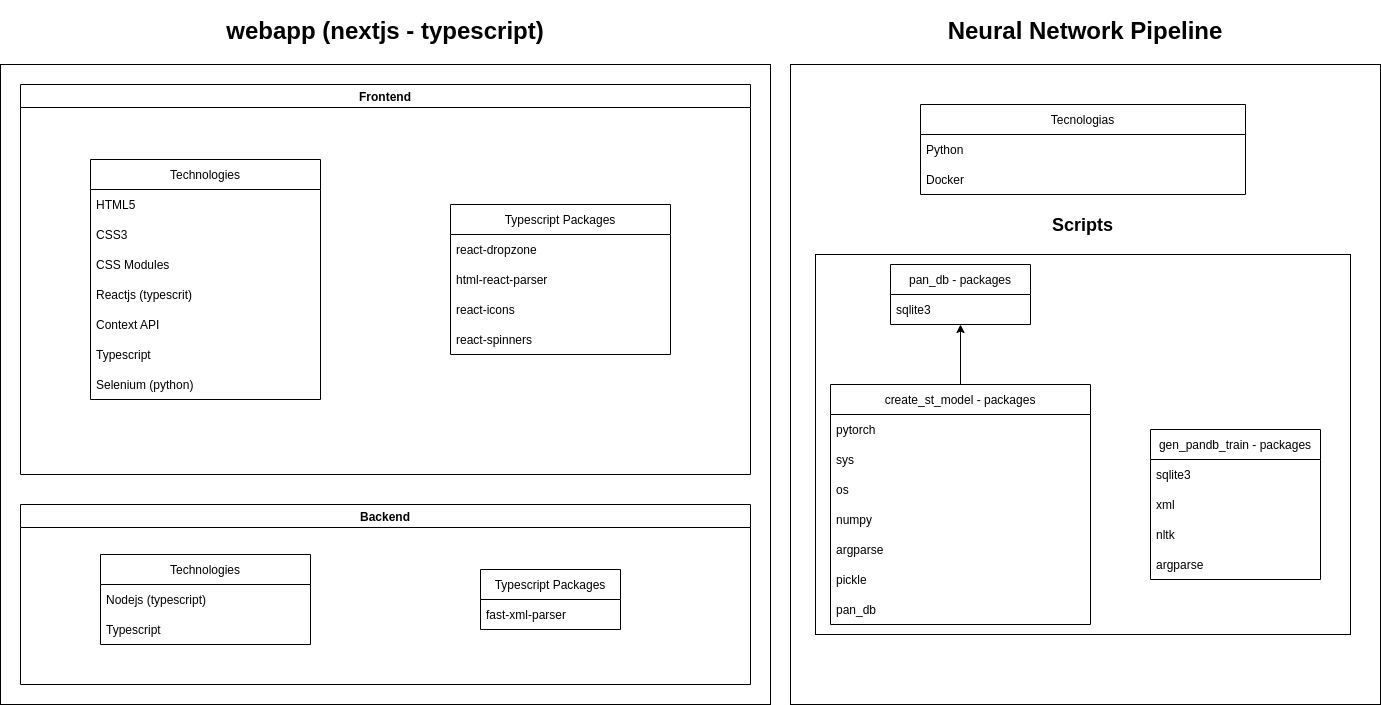

This project has two modules: the neural network pipeline and the webapp.

This module contains the pipeline to model and train the neural network. Run the commands below to reproduce the neural network. The neural network output is an XML file containing the offset and the length of each plagiarized stretch. See an example below:

<?xml version="1.0" encoding="UTF-8"?>

<document xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:noNamespaceSchemaLocation="http://www.uni-weimar.de/medien/webis/research/corpora/pan-pc-09/document.xsd" reference="suspicious-document00001.txt">

<feature name="project-gutenberg" etext_number="4699" url="http://www.gutenberg.org/dirs/etext03/wenev11.txt" />

<feature name="language" value="en" />

<feature name="detected-plagiarism" this_offset="50" this_length="1000" />

<!-- Use tags like the one below to annotate plagiarism you detected. -->

</document>

The commands in this section must be run from the following directory:

./neural_net_pipeline/

./pancorpus/

The train directory stores the texts used to train the neural network.

Docker and docker-compose are required for the following steps of this section. To see exactly what each of these commands does, check the makefile (open it with any text editor).

Download the skipthoughts files needed to generate stvecs:

$ mkdir -p ./data/skipthoughts ./data/pkl

$ cd ./data/skipthoughts

$ wget http://www.cs.toronto.edu/~rkiros/models/dictionary.txt http://www.cs.toronto.edu/~rkiros/models/utable.npy http://www.cs.toronto.edu/~rkiros/models/btable.npy http://www.cs.toronto.edu/~rkiros/models/uni_skip.npz http://www.cs.toronto.edu/~rkiros/models/uni_skip.npz.pkl http://www.cs.toronto.edu/~rkiros/models/bi_skip.npz http://www.cs.toronto.edu/~rkiros/models/bi_skip.npz.pkl

$ cd ../..
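Because the download involves several large files, it can help to confirm that all of them arrived before building the container. The following is a minimal sketch, assuming it is run from the pipeline directory after the commands above; the file names come from the `wget` command, while the helper name `missing_skipthoughts_files` is hypothetical.

```python
from pathlib import Path

# File names taken from the wget command above.
REQUIRED_FILES = [
    "dictionary.txt",
    "utable.npy",
    "btable.npy",
    "uni_skip.npz",
    "uni_skip.npz.pkl",
    "bi_skip.npz",
    "bi_skip.npz.pkl",
]


def missing_skipthoughts_files(directory="./data/skipthoughts"):
    """Return the required files that are absent or empty in `directory`."""
    base = Path(directory)
    missing = []
    for name in REQUIRED_FILES:
        path = base / name
        # An empty file usually means the download was interrupted.
        if not path.is_file() or path.stat().st_size == 0:
            missing.append(name)
    return missing


if __name__ == "__main__":
    missing = missing_skipthoughts_files()
    if missing:
        print("Missing or empty files:", ", ".join(missing))
    else:
        print("All skipthoughts files are present.")
```

The check only looks at presence and size; it does not verify file contents.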

Build the Docker container:

$ make build

Run docker-compose:

$ make dev

The following scripts must be run inside the container. To start the container, see the previous section.

$ python ./src/gen_pandb_train.py --srcdir ./pancorpus/train --destfile ./plag_train.db

$ python ./src/create_st_model.py --pandb plag_train.db --stdir ./data/skipthoughts/ --destdir ./data/pkl --vocab ./data/skipthoughts/dictionary.txt --start 0
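The XML output format shown earlier in this section can be read back with Python's standard library. The sketch below collects the offset and length of each `detected-plagiarism` feature and slices the matching stretch out of the document text. The helper names are hypothetical, the sample XML is a shortened version of the example above, and the code assumes that offsets and lengths count characters.

```python
import xml.etree.ElementTree as ET


def read_detected_stretches(xml_bytes):
    """Return (offset, length) pairs for each detected-plagiarism feature."""
    root = ET.fromstring(xml_bytes)
    stretches = []
    for feature in root.iter("feature"):
        if feature.get("name") == "detected-plagiarism":
            offset = int(feature.get("this_offset"))
            length = int(feature.get("this_length"))
            stretches.append((offset, length))
    return stretches


def extract_stretch(document_text, offset, length):
    """Return the flagged stretch (assumes character-based offsets)."""
    return document_text[offset:offset + length]


# Shortened version of the example output shown earlier.
sample = b"""<?xml version="1.0" encoding="UTF-8"?>
<document reference="suspicious-document00001.txt">
<feature name="language" value="en" />
<feature name="detected-plagiarism" this_offset="50" this_length="1000" />
</document>"""

for offset, length in read_detected_stretches(sample):
    print(f"Suspicious stretch at offset {offset}, length {length}")
```

To inspect the flagged text itself, pass the contents of the file named in the `reference` attribute to `extract_stretch` together with each pair.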

This module contains the webapp, written in Next.js (TypeScript). It runs on Node v16.14.2. To run the app, run the following commands within the webapp directory:

Running with npm:

$ npm install

$ npm run dev

Running with yarn:

$ yarn

$ yarn dev

$ pip install -r ./test/requirements.txt

To run this test, the application must be running and a Chromium-based browser must be installed on your machine.

$ yarn test_ui
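Since the UI test needs the application to be up, a quick reachability check before launching it can save a failed run. This is a minimal sketch using only the standard library. The helper `is_app_running` is hypothetical, and the URL `http://localhost:3000` is an assumption based on the default port of the Next.js development server; adjust it if the webapp is configured differently.

```python
import urllib.error
import urllib.request


def is_app_running(url="http://localhost:3000", timeout=3.0):
    """Return True if an HTTP server answers at `url`, whatever the status."""
    # Bypass any configured proxy: this is a check against a local server.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    try:
        with opener.open(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        # The server answered with an error status, so it is running.
        return True
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, or name resolution failure.
        return False


if __name__ == "__main__":
    if is_app_running():
        print("Application is reachable; the UI test can be started.")
    else:
        print("Application is not reachable; start it before running the test.")
```

The check only confirms that something answers HTTP requests at that address; it does not verify that a Chromium-based browser is installed.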