DietGrail Website Scraping using Python

This script uses the following applications and Python libraries to scrape data from the DietGrail GI and GL of Foods website:

- macOS Monterey (12.2.1)

- Firefox 99.0

- pyenv

- Python 3.9.11

- Geckodriver

- pip

- Beautiful Soup 4

- Requests

- Pandas

- Selenium 4.1.3

- PyAutoGUI

- Install pyenv (Python version management) and Geckodriver: `brew install pyenv` and `brew install geckodriver`

- Install Python 3.9.11, set it as the global version, and check the version: `pyenv install 3.9.11`, `pyenv global 3.9.11`, `pyenv exec python -V`

- Install pip (Python package installer): `pyenv exec python3 get-pip.py` (download `get-pip.py` first, from https://bootstrap.pypa.io/get-pip.py)

- Create the `.pyenvrc` file: `echo 'eval "$(pyenv init -)"' > ~/.pyenvrc`

- Install the Python packages: `pyenv exec pip install beautifulsoup4 requests selenium pyautogui pandas`
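To confirm that the packages installed above are visible to the pyenv interpreter, a small check like the following can help. This is a sketch, not part of the project; the file name `check_packages.py` is hypothetical. Note that the pip package `beautifulsoup4` is imported as `bs4`.

```python
import importlib.util

# Import names of the required packages (pip "beautifulsoup4" is imported as "bs4").
REQUIRED_MODULES = ["bs4", "requests", "selenium", "pyautogui", "pandas"]


def missing_modules(names):
    """Return the module names the current interpreter cannot find (without importing them)."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules(REQUIRED_MODULES)
    if missing:
        print("Missing packages:", ", ".join(missing))
    else:
        print("All required packages are installed.")
```

Run it with `pyenv exec python3 check_packages.py` so the same interpreter that runs the scraper is checked.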

Script files: `scrape_selenium_10.py` (the scraper) and `setting_scrape.txt` (its settings file).

- Edit the `setting_scrape.txt` file. Refer to the Script Settings section.

- Source the pyenv environment file: `source ~/.pyenvrc`

- Run the script: `pyenv exec python3 scrape_selenium_10.py`

- To run Firefox in headless mode (without a visible browser window): `MOZ_HEADLESS=1 pyenv exec python3 scrape_selenium_10.py`

- Output files and folders:
  - Webpages are saved in the `offline_pages` folder.
  - The output `.csv` file is saved in the `csv` folder.
  - Chart files are saved in the `charts` folder.
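The sketch below illustrates the general idea of turning saved webpages in `offline_pages` into a CSV file in `csv`. It is not the script's actual code: it assumes each page is saved as an `.html` file and that each food is a `<tr>` row of `<td>` cells, and the output name `foods.csv` is hypothetical. It uses the standard-library `html.parser` instead of Beautiful Soup so it runs without extra packages.

```python
import csv
from html.parser import HTMLParser
from pathlib import Path


class TableRowParser(HTMLParser):
    """Collect the text of every <td> cell, grouped by <tr> row."""

    def __init__(self):
        super().__init__()
        self.rows = []
        self._row = None   # cells of the row being read
        self._cell = None  # text fragments of the cell being read

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag == "td" and self._row is not None:
            self._cell = []

    def handle_data(self, data):
        if self._cell is not None:
            self._cell.append(data)

    def handle_endtag(self, tag):
        if tag == "td" and self._cell is not None:
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            if self._row:  # skip header rows made only of <th> cells
                self.rows.append(self._row)
            self._row = None


def offline_pages_to_csv(pages_dir="offline_pages", csv_dir="csv", csv_name="foods.csv"):
    """Parse every saved .html page and write all table rows to one CSV file."""
    rows = []
    for page in sorted(Path(pages_dir).glob("*.html")):
        parser = TableRowParser()
        parser.feed(page.read_text(encoding="utf-8"))
        rows.extend(parser.rows)
    out_dir = Path(csv_dir)
    out_dir.mkdir(parents=True, exist_ok=True)
    out_path = out_dir / csv_name
    with out_path.open("w", newline="", encoding="utf-8") as f:
        csv.writer(f).writerows(rows)
    return out_path, len(rows)
```

Pages are processed in sorted filename order, so zero-padded names such as `page_001.html` (hypothetical) keep the rows in page sequence.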

Script Settings (parameters in `setting_scrape.txt`):

| Parameter | Default value | Unit | Description |
|---|---|---|---|
| WEBPAGE_TIMEOUT | 15 | sec | Wait time for the first webpage to load |
| WEBPAGE_LOAD | 2.7 | sec | Wait time between webpages |
| WEBPAGE_PAUSE | 10 | page | Number of pages between pauses |
| WEBPAGE_PAUSE_TIME | 90 | sec | Length of each pause between pages |
| WEBPAGE_CHART_ON | 0 | - | Enable chart scraping (0: OFF, 1: ON) |
| WEBPAGE_OFFLINE_PARSE | 0 | - | Process saved offline pages only (0: OFF, 1: ON) |
| GI_START_PAGE | 1 | page | Page at which scraping starts |
| GI_STOP_PAGE | 219 | page | Page at which scraping stops |
| GI_LAST_PAGE | 219 | page | Last page; must be greater than or equal to GI_STOP_PAGE |
| GI_ROW_NUM | 14 | row | Number of rows per page |
| GI_ROW_NUM_LAST | 4 | row | Number of rows on the last page |
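The settings interact: the page range and the per-page row counts determine how many rows a full run should produce. Below is a minimal sketch of loading the settings and checking that total. The `setting_scrape.txt` file format is not documented here, so the `NAME=value` lines with `#` comments are a hypothetical format, and `load_settings` and `expected_rows` are illustrative helpers, not functions from `scrape_selenium_10.py`. It also assumes that only the page numbered `GI_LAST_PAGE` is short.

```python
from pathlib import Path

# Defaults copied from the Script Settings table.
DEFAULTS = {
    "WEBPAGE_TIMEOUT": 15,
    "WEBPAGE_LOAD": 2.7,
    "WEBPAGE_PAUSE": 10,
    "WEBPAGE_PAUSE_TIME": 90,
    "WEBPAGE_CHART_ON": 0,
    "WEBPAGE_OFFLINE_PARSE": 0,
    "GI_START_PAGE": 1,
    "GI_STOP_PAGE": 219,
    "GI_LAST_PAGE": 219,
    "GI_ROW_NUM": 14,
    "GI_ROW_NUM_LAST": 4,
}


def load_settings(path="setting_scrape.txt"):
    """Overlay NAME=value lines (hypothetical format) from path onto DEFAULTS."""
    settings = dict(DEFAULTS)
    file = Path(path)
    if not file.is_file():
        return settings
    for raw in file.read_text(encoding="utf-8").splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and surrounding spaces
        if "=" not in line:
            continue
        name, value = (part.strip() for part in line.split("=", 1))
        if name in DEFAULTS:
            # Convert with the default's type: float for WEBPAGE_LOAD, int otherwise.
            settings[name] = type(DEFAULTS[name])(value)
    return settings


def expected_rows(settings):
    """Number of table rows expected from GI_START_PAGE to GI_STOP_PAGE."""
    start = settings["GI_START_PAGE"]
    stop = settings["GI_STOP_PAGE"]
    last = settings["GI_LAST_PAGE"]
    if not 1 <= start <= stop <= last:
        raise ValueError("need 1 <= GI_START_PAGE <= GI_STOP_PAGE <= GI_LAST_PAGE")
    pages = stop - start + 1
    if stop == last:
        # Assumption: only the last page is short, holding GI_ROW_NUM_LAST rows.
        return (pages - 1) * settings["GI_ROW_NUM"] + settings["GI_ROW_NUM_LAST"]
    return pages * settings["GI_ROW_NUM"]


if __name__ == "__main__":
    print(expected_rows(load_settings()))
```

With the default values, `expected_rows(DEFAULTS)` returns 3056 (218 full pages × 14 rows + 4 rows on the last page), which can be compared against the row count of the output CSV.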

- Sometimes the DietGrail GI and GL of Foods website does not respond in the remote-controlled Firefox window; click the page manually when this happens.

- After about 50 clicks (about 50 pages downloaded and saved), the website stops responding; wait about 30 seconds to 1 minute until it responds again.
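Pausing periodically, as `WEBPAGE_PAUSE` and `WEBPAGE_PAUSE_TIME` suggest, keeps the request rate below the point where the website stops responding. The following is a minimal sketch of that pacing, assuming those parameters mean "rest for `WEBPAGE_PAUSE_TIME` seconds after every `WEBPAGE_PAUSE` pages". `scrape_with_pauses` and its `fetch_page` callback are hypothetical, not the script's own code; `sleep` is injectable so the schedule can be tested without waiting.

```python
import time


def scrape_with_pauses(fetch_page, start_page=1, stop_page=219,
                       load_wait=2.7, pause_every=10, pause_time=90,
                       sleep=time.sleep):
    """Call fetch_page(page) for each page in range, resting between requests.

    load_wait, pause_every and pause_time mirror WEBPAGE_LOAD,
    WEBPAGE_PAUSE and WEBPAGE_PAUSE_TIME (assumed meaning).
    """
    results = []
    for count, page in enumerate(range(start_page, stop_page + 1), start=1):
        results.append(fetch_page(page))
        if page == stop_page:
            break  # no wait is needed after the final page
        if pause_every > 0 and count % pause_every == 0:
            sleep(pause_time)  # long rest so the website keeps responding
        else:
            sleep(load_wait)  # short wait between consecutive pages
    return results
```

With the defaults, this rests 90 seconds after every 10 pages, well before the roughly 50-page point at which the website stops responding.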

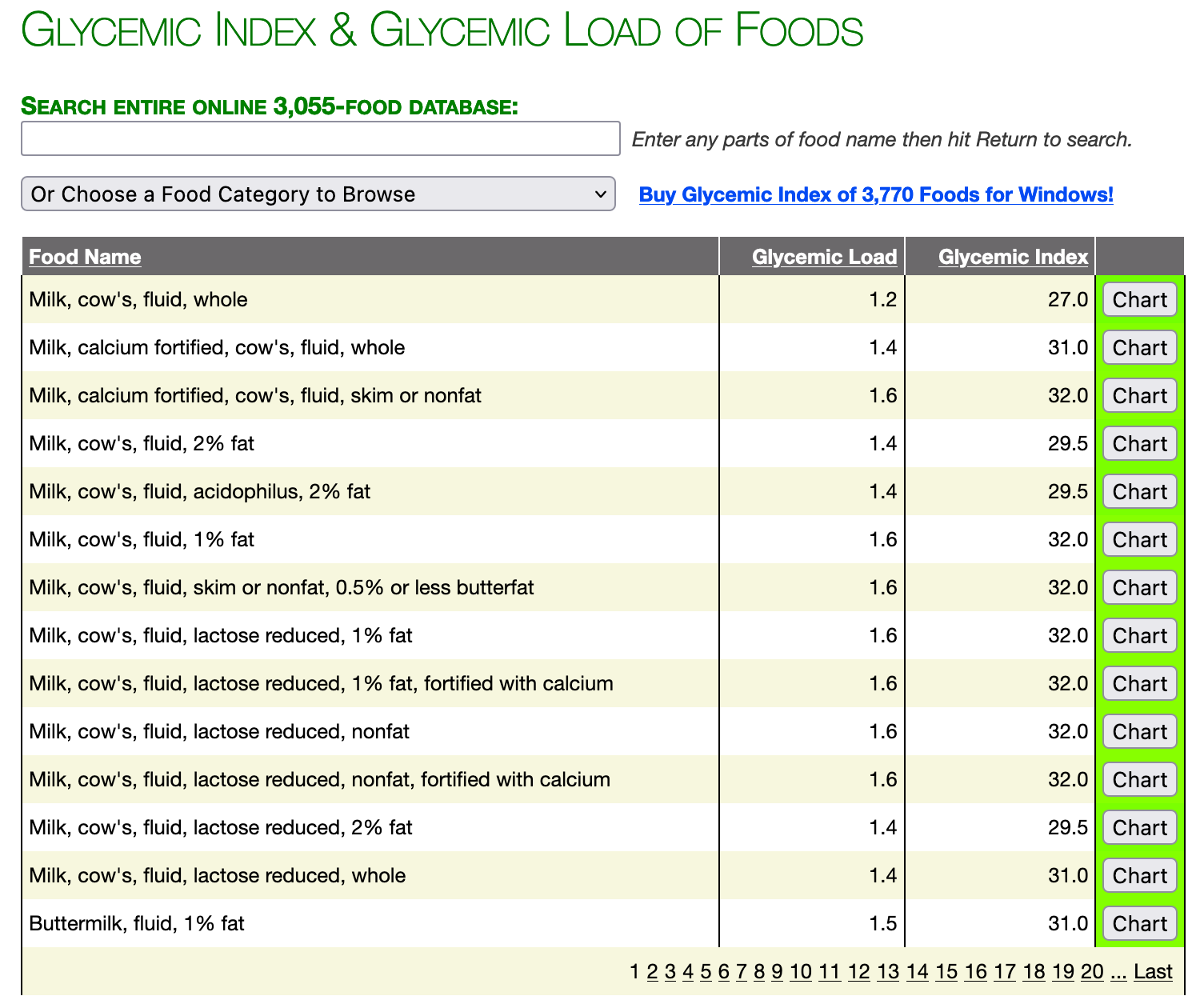

DietGrail GI and GL of Foods first page

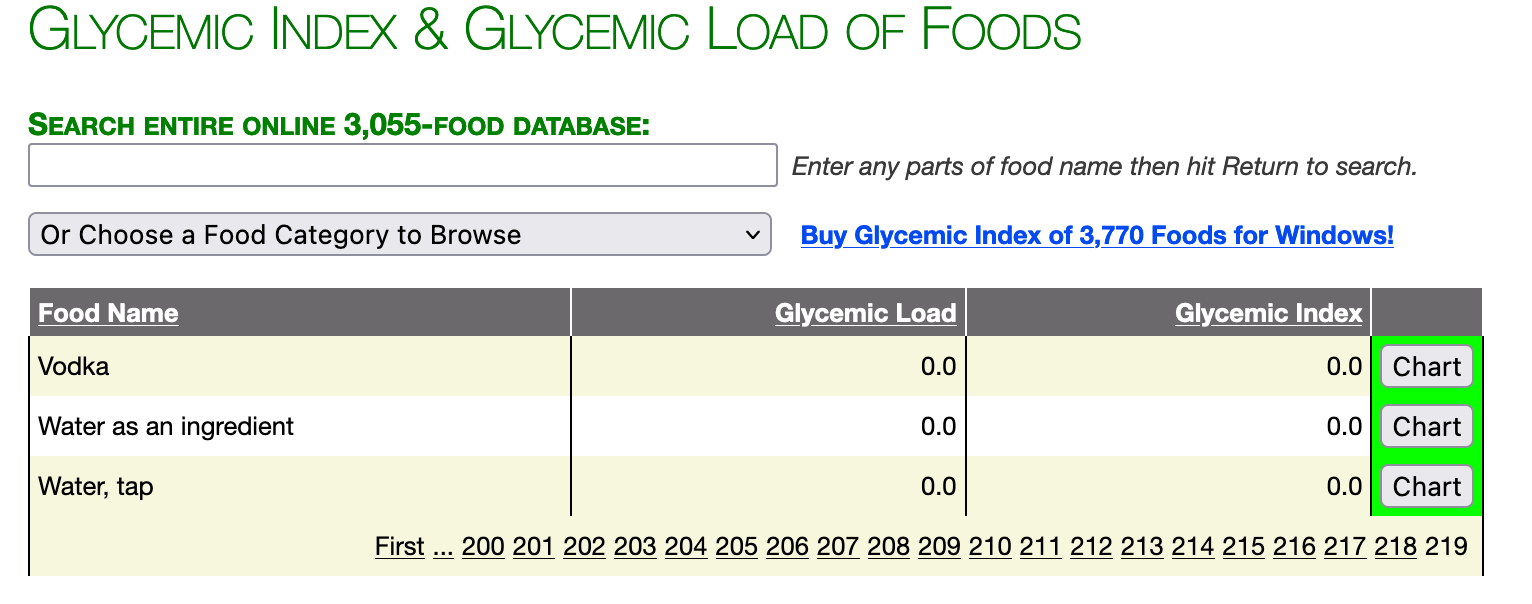

DietGrail GI and GL of Foods last page

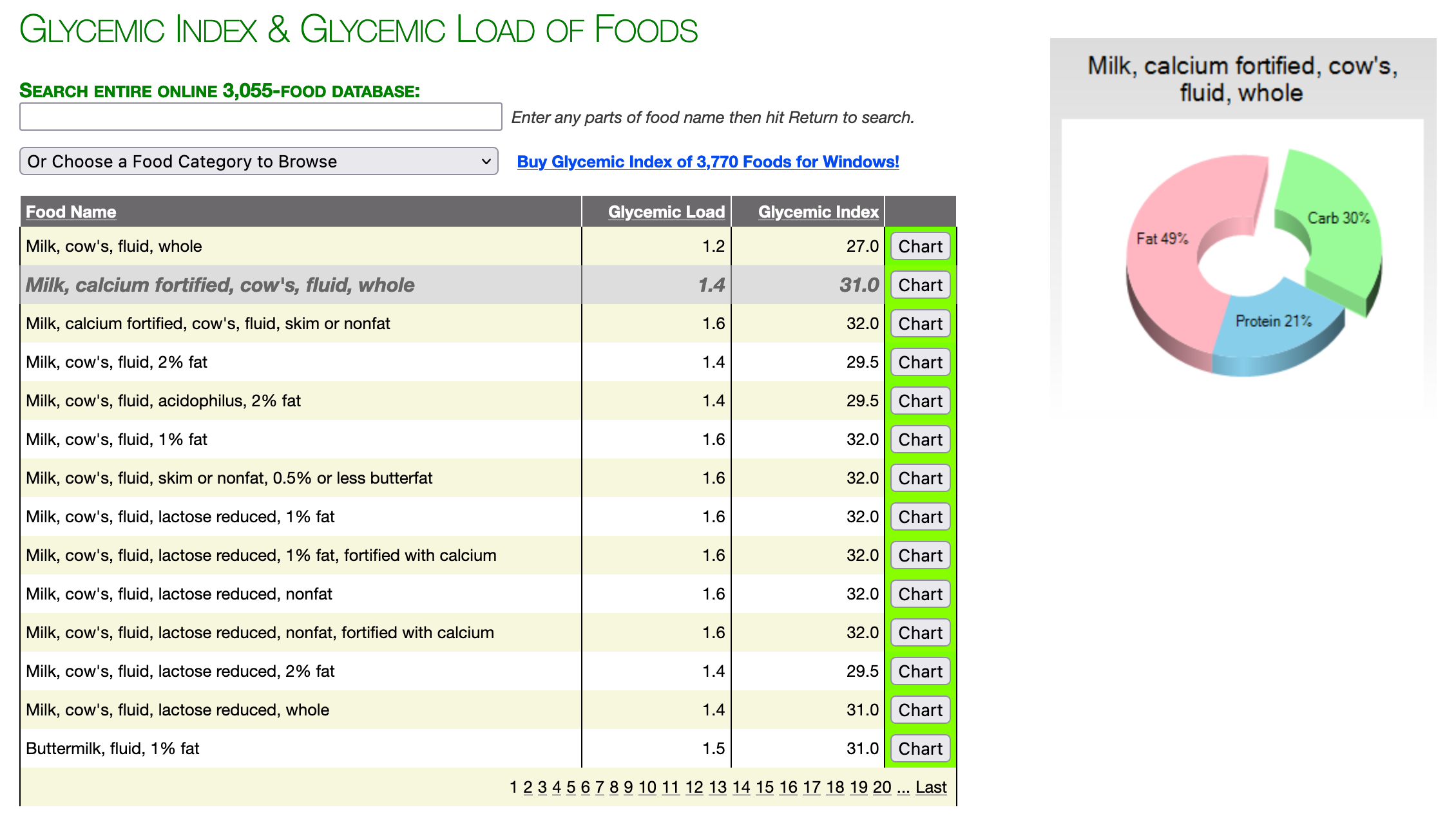

DietGrail GI and GL of Foods with chart
