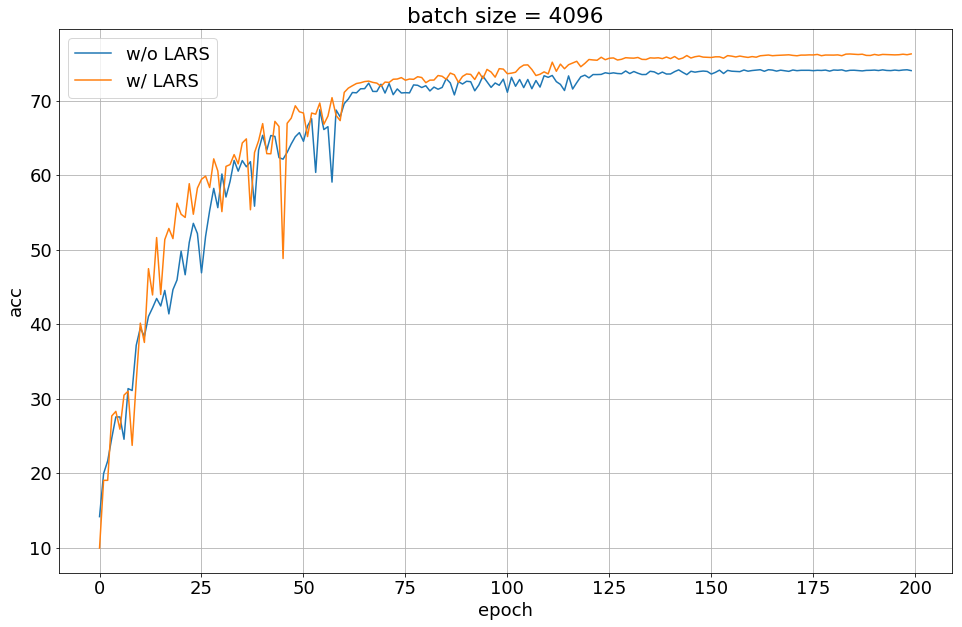

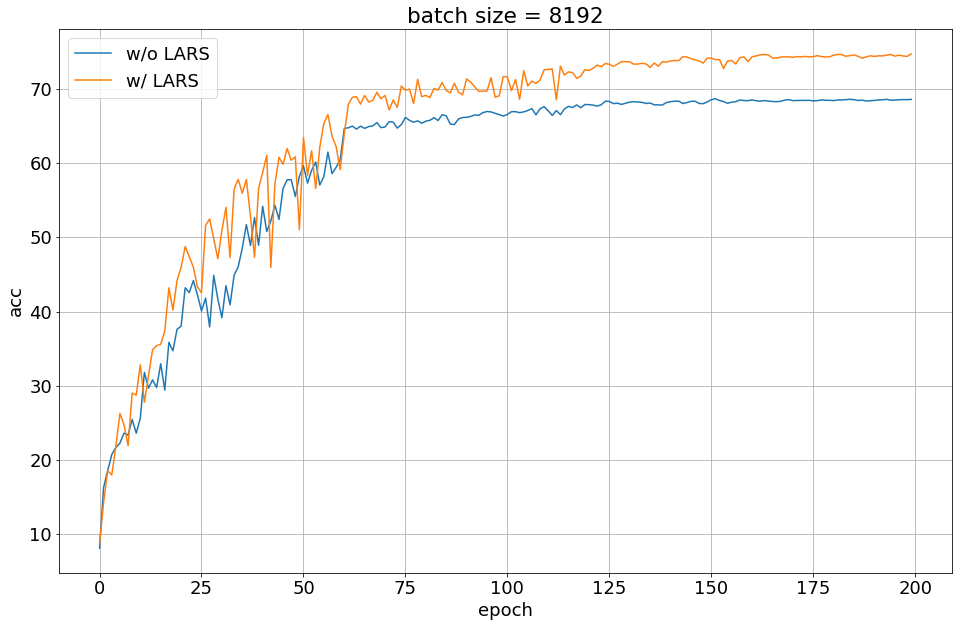

This repository contains code for LARS (Layer-wise Adaptive Rate Scaling) based on Large Batch Training of Convolutional Networks implemented in PyTorch.

- Python 3.6

- PyTorch 1.0

from lars import LARS

optimizer = torch.optim.LARS(model.parameters(), lr=0.1, momentum=0.9)

optimizer.zero_grad()

loss_fn(model(input), target).backward()

optimizer.step()