Welcome to the VirtuaLearn3D (VL3D) framework for artificial intelligence applied to point-wise tasks in 3D point clouds.

Because the VL3D framework is Python-based, installation is simple. It consists of three steps (four if you are using conda/miniconda):

- Clone the repository.

      git clone https://github.com/3dgeo-heidelberg/virtualearn3d

- Change the working directory to the framework's folder.

      cd virtualearn3d

- Install the requirements with pip.

      pip install -r requirements.txt

- Alternatively, install the requirements with conda/miniconda by creating the environment for your operating system.

  - In Windows:

        conda env create -f vl3d_win.yml

  - In Linux:

        conda env create -f vl3d_lin.yml

- If you are using conda/miniconda, activate the environment.

      conda activate vl3d
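After installing, you may want to confirm that the active environment actually contains the packages named in `requirements.txt`. The sketch below is not part of the framework. It uses only the standard library, and the helper names `requirement_names`, `missing_packages`, and `report` are hypothetical. It assumes each requirement line starts with a plain package name, which is a simplification of the full requirements syntax.

```python
import re
from importlib import metadata


def requirement_names(lines):
    """Extract bare package names from requirements-style lines."""
    names = []
    for line in lines:
        line = line.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line or line.startswith("-"):  # skip blanks and pip options (-r, -e, ...)
            continue
        match = re.match(r"[A-Za-z0-9][A-Za-z0-9._-]*", line)
        if match:
            names.append(match.group(0))
    return names


def missing_packages(names):
    """Return the names that are not installed in the active environment."""
    missing = []
    for name in names:
        try:
            metadata.version(name)
        except metadata.PackageNotFoundError:
            missing.append(name)
    return missing


def report(path="requirements.txt"):
    """Print which packages listed in the given file are not installed."""
    with open(path, encoding="utf-8") as handle:
        names = requirement_names(handle)
    print("Missing packages:", missing_packages(names) or "none")
```

Calling `report()` from the framework's folder prints the packages that are still missing. Version constraints are not checked, only whether each package is present.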

If you are interested in deep learning, you will need some extra steps to use the GPU with TensorFlow+Keras. See the TensorFlow documentation on how to meet the hardware requirements when installing with pip. The steps below summarize what works in the general case:

- Check that your graphics card supports CUDA (NVIDIA publishes a list of CUDA-enabled GPUs).

- Install the drivers of your graphics card.

- Install CUDA. See either the Linux documentation or the Windows documentation on how to install CUDA.

- Install cuDNN. See the cuDNN installation guide.
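Once the drivers, CUDA, and cuDNN are in place, a quick way to see whether TensorFlow detects the GPU is to ask it for its physical devices. The following is a minimal sketch, not part of the framework. `visible_gpus` and `describe` are hypothetical helper names, and `tf.config.list_physical_devices` is assumed to be available (TensorFlow 2.1 or later).

```python
def visible_gpus():
    """Return the names of the GPUs TensorFlow can see, or None if
    TensorFlow cannot be imported in this environment."""
    try:
        import tensorflow as tf
    except ImportError:
        return None
    return [device.name for device in tf.config.list_physical_devices("GPU")]


def describe(gpus):
    """Turn the result of visible_gpus() into a one-line message."""
    if gpus is None:
        return "TensorFlow is not installed in this environment."
    if not gpus:
        return "TensorFlow is installed but sees no GPU."
    return f"TensorFlow sees {len(gpus)} GPU(s): " + ", ".join(gpus)
```

Running `print(describe(visible_gpus()))` inside the environment gives a quick answer. An empty result usually points to a driver, CUDA, or cuDNN mismatch rather than to the framework itself.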

In the VL3D framework, you can define pipelines with many components that handle the different steps of a typical artificial intelligence workflow. These components can compute point-wise features, train models, predict with trained models, evaluate the predictions, write the results, apply data transformations, define imputation strategies, and tune hyperparameters automatically.

For example, a pipeline could start by computing some geometric features for a point-wise characterization, then train a model, evaluate its performance with k-folding, and export it as a predictive pipeline that can later be used to classify other point clouds. Pipelines are specified in a JSON file, and they can be executed with the command below:

    python vl3d.py --pipeline pipeline_spec.json

You can find information about pipelines and the many available components in our documentation. As the first step, we recommend understanding how pipelines work, i.e., reading the documentation on pipelines.
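Because a pipeline specification is a JSON file, a syntax slip such as a trailing comma can be caught before launching a long run. The sketch below is an illustration, not part of the framework. It assumes the specification is strict JSON and knows nothing about which keys the framework expects. `parse_spec` and `pipeline_command` are hypothetical helper names; the only framework detail used is the `--pipeline` option of `vl3d.py`.

```python
import json
import sys


def parse_spec(text, name="<spec>"):
    """Decode the text of a pipeline specification, raising a ValueError
    that points at the offending line and column if it is not valid JSON."""
    try:
        return json.loads(text)
    except json.JSONDecodeError as err:
        raise ValueError(
            f"{name} is not valid JSON "
            f"(line {err.lineno}, column {err.colno}): {err.msg}"
        ) from err


def pipeline_command(spec_path, script="vl3d.py"):
    """Build the argument list for running a pipeline with the current
    Python interpreter."""
    return [sys.executable, script, "--pipeline", str(spec_path)]
```

A typical use would be to read the file, pass its text to `parse_spec`, and only then hand `pipeline_command("pipeline_spec.json")` to `subprocess.run` from the framework's directory.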

If you are interested in the deep learning components of the VL3D framework, check that your TensorFlow+Keras installation is correctly linked to the GPU. Otherwise, deep learning will be infeasible. To check this, run the following command:

    python vl3d.py --test

You should see that all tests pass, especially the Keras, TensorFlow, GPU test and the Receptive field test.

You can get a taste of the framework by running some of our demo cases. For example, you can try a random forest for point-wise leaf-wood segmentation.

To train the model, run (from the framework's directory):

python vl3d.py --pipeline spec/demo/mine_transform_and_train_pipeline_pca_from_url.jsonTo compute a leaf-wood segmentation with the trained model on a previously unseen tree and evalute it run:

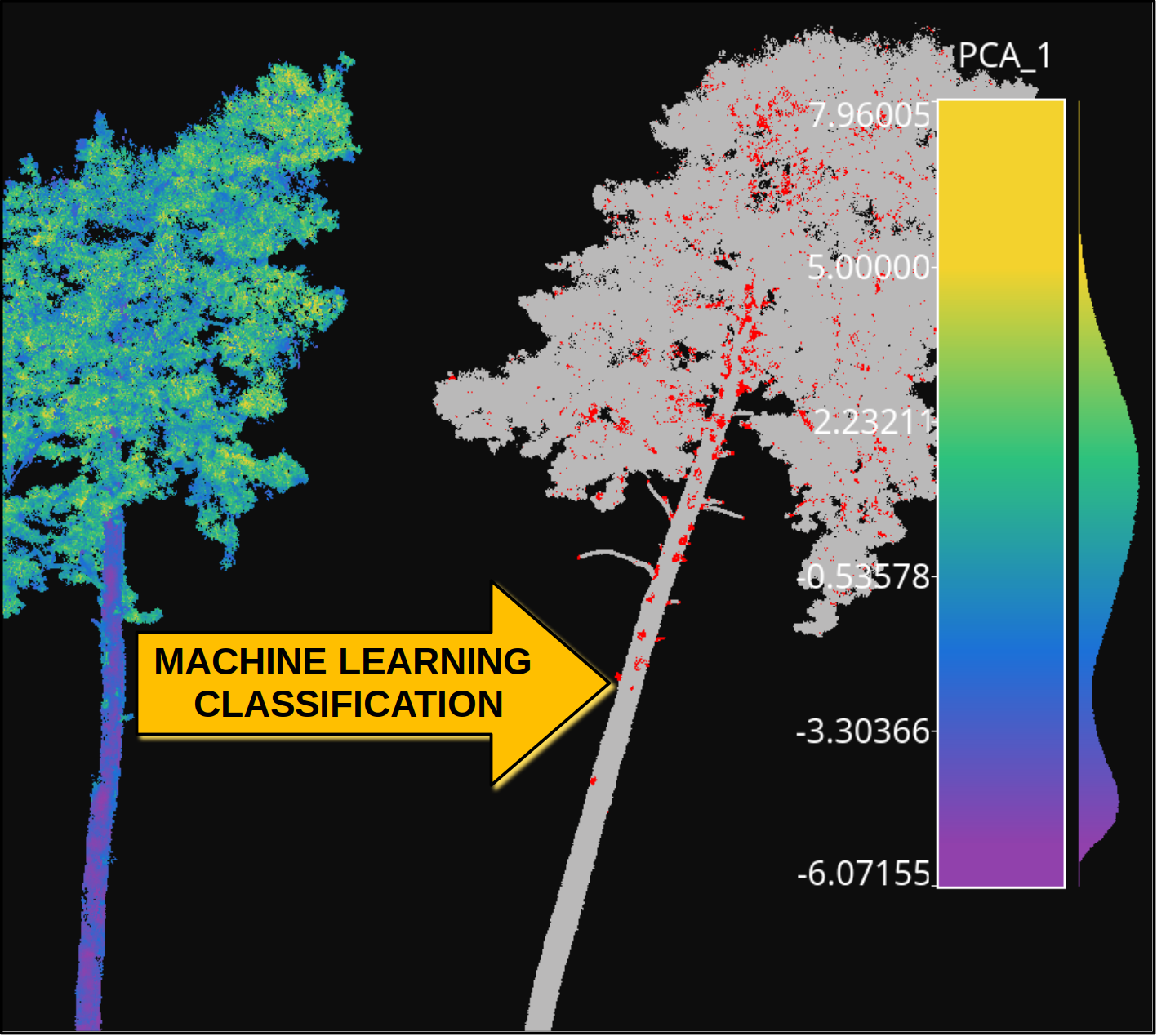

python vl3d.py --pipeline spec/demo/predict_and_eval_pipeline_from_url.jsonMore details about this demo can be read in the documentation's introduction. The image below represents some steps of the demo. The tree on the left side represents the PCA-transformed feature that explains the highest variance ratio on the training point cloud while the tree on the right side represents the leaf-wood segmentation on a previously unseen tree (gray classification, red misclassification).
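The two demo commands are meant to run one after the other, since the second pipeline uses the model trained by the first. A small driver script can enforce that order and stop if training fails. This is only a sketch: `run_in_order` is a hypothetical helper, and it assumes that `vl3d.py` returns a non-zero exit code on failure, which the documentation above does not state.

```python
import subprocess
import sys

# The two demo specifications, in the order given above.
DEMO_SPECS = [
    "spec/demo/mine_transform_and_train_pipeline_pca_from_url.json",
    "spec/demo/predict_and_eval_pipeline_from_url.json",
]


def run_in_order(specs, run=subprocess.run):
    """Run each pipeline in turn and stop at the first failure.

    Returns the list of specifications that completed successfully.
    The `run` argument exists so the launcher can be replaced in tests.
    """
    completed = []
    for spec in specs:
        result = run([sys.executable, "vl3d.py", "--pipeline", spec])
        if result.returncode != 0:  # assumed failure signal
            break
        completed.append(spec)
    return completed
```

Calling `run_in_order(DEMO_SPECS)` from the framework's directory trains the model and then runs the prediction and evaluation only if training succeeded.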