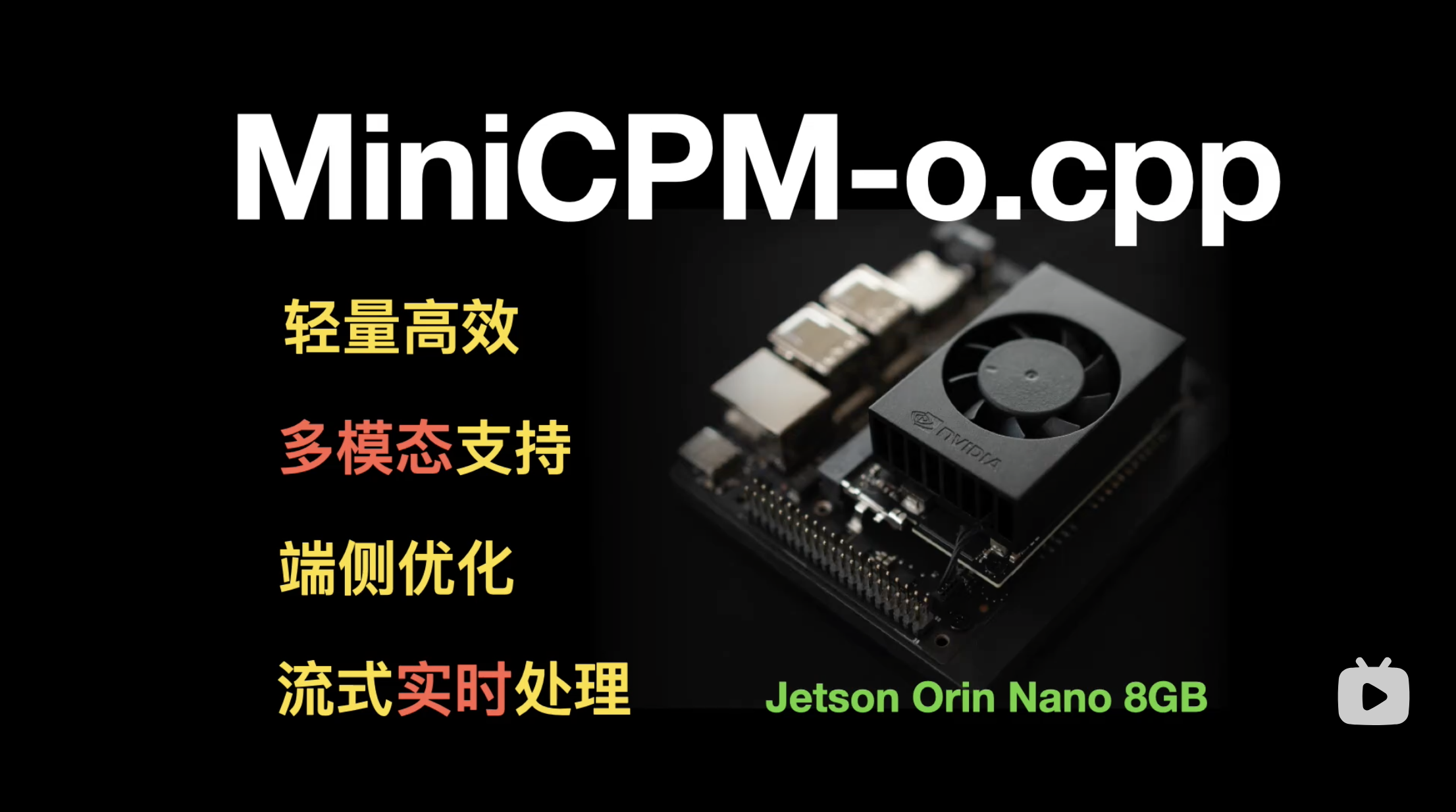

Inference of MiniCPM-o 2.6 in plain C/C++

- Plain C/C++ implementation based on ggml.

- Requires only 8GB of VRAM for inference.

- Supports streaming processing for both audio and video inputs.

- Optimized for real-time video streaming on NVIDIA Jetson Orin Nano Super.

- Provides Python bindings, a web demo, and additional integration possibilities.

Clone and initialize the repository.

# Clone the repository

git clone https://github.com/360CVGroup/MiniCPM-o.cpp.git

cd MiniCPM-o.cpp

# Initialize and update submodules

git submodule update --init --recursive

Set up the Python environment and install the package:

# We recommend using uv for Python environment and package management

pip install uv

# Create and activate a virtual environment

uv venv

source .venv/bin/activate

# For fish shell, use: source .venv/bin/activate.fish

# Install the package in editable mode

uv pip install -e . --verbose

For detailed installation steps, please refer to the installation guide.

Use the pre-converted and quantized GGUF models (recommended). Download links: Google Drive or ModelScope.

Download and place all models in the models/ directory.
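Before running anything, it can help to confirm that the downloads landed where the later commands expect them. The following is a minimal sketch, not part of the project: the helper `missing_models` is hypothetical, and the file names are taken from the example command in this README, so adjust them if you downloaded a different quantization.

```python
from pathlib import Path

# File names copied from the example command in this README (assumption:
# you downloaded exactly these quantized variants).
EXPECTED_MODELS = (
    "minicpmo-audio-encoder_Q4_K.gguf",
    "minicpmo-image-encoder_Q4_1.gguf",
    "Model-7.6B-Q4_K_M.gguf",
)


def missing_models(models_dir="models", names=EXPECTED_MODELS):
    """Return the expected model files that are absent from models_dir."""
    root = Path(models_dir)
    return [name for name in names if not (root / name).is_file()]


if __name__ == "__main__":
    # Run from the project root so that "models" resolves correctly.
    missing = missing_models()
    if missing:
        print("Missing model files:", ", ".join(missing))
    else:
        print("All expected model files are present.")
```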

For ease of integration, we provide a Python binding. Run the script:

# in project root path

python test/test_minicpmo.py --apm-path models/minicpmo-audio-encoder_Q4_K.gguf --vpm-path models/minicpmo-image-encoder_Q4_1.gguf --llm-path models/Model-7.6B-Q4_K_M.gguf --video-path assets/Skiing.mp4

We also provide a C/C++ interface. For details, please refer to the C++ Interface Documentation.
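If you want to launch the Python test script shown above from another program, or swap in your own video, the command can be assembled in code. This is only a sketch: `build_command` is a hypothetical helper, while the script path, flags, and model file names come from the command above. It does not use the binding's own API, which this README does not describe.

```python
import subprocess
import sys


def build_command(models_dir="models", video_path="assets/Skiing.mp4"):
    """Assemble the argument list for test/test_minicpmo.py."""
    return [
        sys.executable, "test/test_minicpmo.py",
        "--apm-path", f"{models_dir}/minicpmo-audio-encoder_Q4_K.gguf",
        "--vpm-path", f"{models_dir}/minicpmo-image-encoder_Q4_1.gguf",
        "--llm-path", f"{models_dir}/Model-7.6B-Q4_K_M.gguf",
        "--video-path", video_path,
    ]


if __name__ == "__main__":
    # Run from the project root so the relative paths resolve.
    # check=True raises CalledProcessError if the script exits non-zero.
    subprocess.run(build_command(), check=True)
```

Passing the arguments as a list avoids shell quoting problems when a path contains spaces.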

Real-time video interaction demo:

# in project root path

uv pip install -r web_demos/minicpm-o_2.6/requirements.txt

python web_demos/minicpm-o_2.6/model_server.py

# Make sure Node.js and pnpm are installed.

sudo apt-get update

sudo apt-get install nodejs npm

npm install -g pnpm

cd web_demos/minicpm-o_2.6/web_server

# Create an SSL certificate. HTTPS is required to request camera and microphone permissions.

bash ./make_ssl_cert.sh # output key.pem and cert.pem

pnpm install # install requirements

pnpm run dev # start server

Open https://localhost:8088/ in your browser for real-time video calls.
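If the web server steps above fail, a quick preflight check can narrow down the cause. The sketch below is hypothetical and not part of the project. It assumes that `make_ssl_cert.sh` writes `key.pem` and `cert.pem` into the `web_server` directory, and that the tools are invoked as `node` and `pnpm`.

```python
import shutil
from pathlib import Path


def preflight(web_server_dir="web_demos/minicpm-o_2.6/web_server",
              tools=("node", "pnpm"),
              cert_files=("key.pem", "cert.pem")):
    """Return a list of problems found; an empty list means ready to start."""
    # shutil.which returns None when the executable is not on PATH.
    problems = [f"{tool} not found on PATH" for tool in tools
                if shutil.which(tool) is None]
    root = Path(web_server_dir)
    # Assumption: the certificate files sit directly in the web_server directory.
    problems += [f"{name} missing in {root}" for name in cert_files
                 if not (root / name).is_file()]
    return problems


if __name__ == "__main__":
    # Run from the project root so the default directory resolves.
    for problem in preflight():
        print("Problem:", problem)
```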

We have deployed the MiniCPM-o 2.6 model on the NVIDIA Jetson Orin Nano Super 8GB embedded device.

This project supports real-time inference on the NVIDIA Jetson Orin Nano Super 8GB in MAXN SUPER mode.

If your embedded device is not running the Super system package, please refer to the installation manual for instructions on installing the system package on your board.

We recorded a video of the model running on the Jetson device in real time, with no speed-up applied.

For NVIDIA Jetson Orin Nano Super performance, including inference time and first-token latency data, see Inference Performance Optimization.

This project is licensed under the Apache 2.0 License. For model usage and distribution, please comply with the official model license.

- llama.cpp: LLM inference in C/C++

- whisper.cpp: Port of OpenAI's Whisper model in C/C++

- transformers: State-of-the-art Machine Learning for PyTorch, TensorFlow, and JAX.

- MiniCPM-o: A GPT-4o Level MLLM for Vision, Speech and Multimodal Live Streaming on Your Phone.