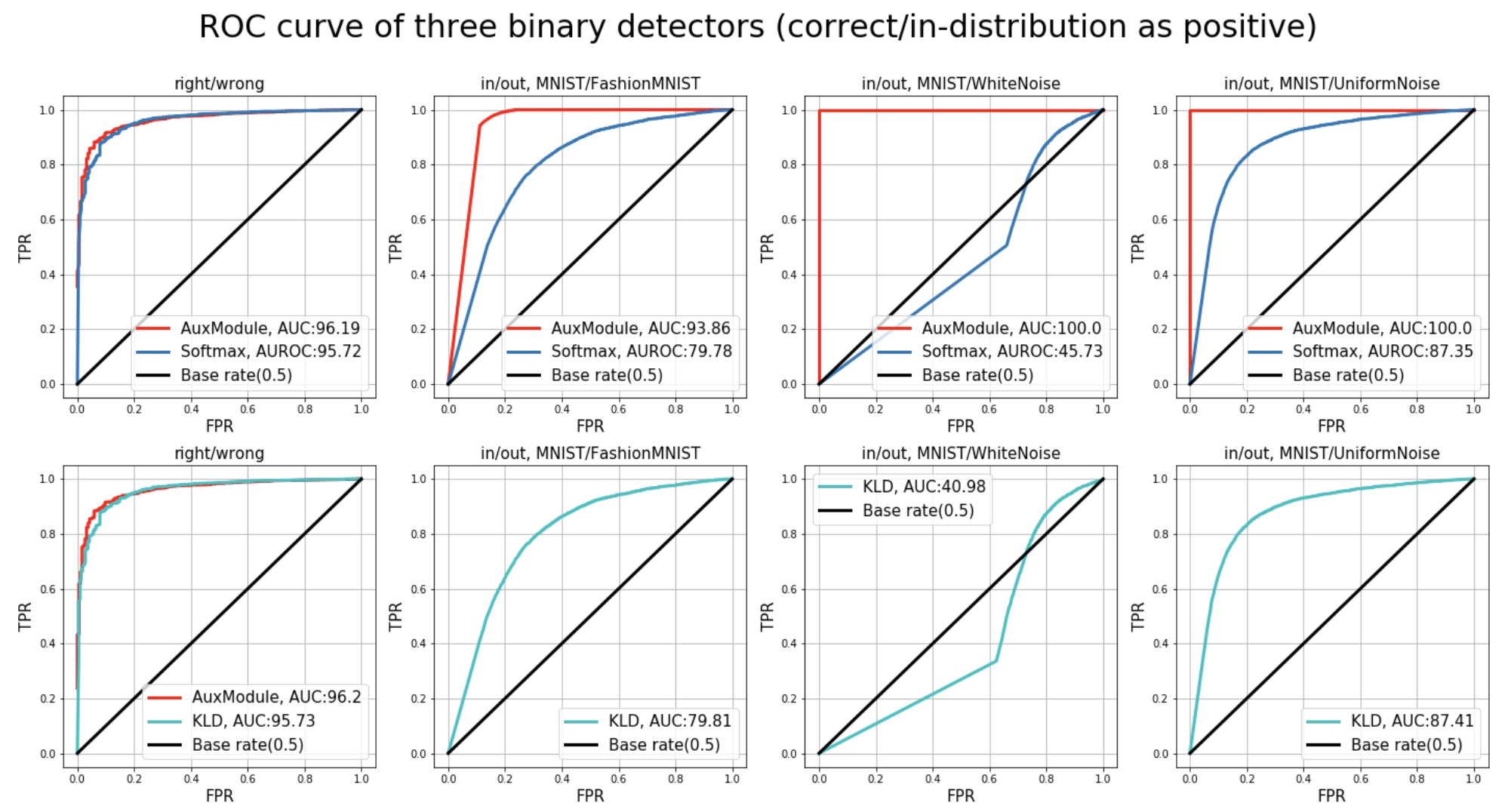

It is known that neural networks cannot reliably distinguish anomalous data (e.g. letters of the alphabet fed to an MNIST classifier) using only their softmax output scores.

This repository reproduces the vision experiments from the paper 'A Baseline for Detecting Misclassified and Out-of-Distribution Examples in Neural Networks' (Hendrycks et al.), which discusses this topic in depth.

It uses the TensorFlow Keras API to build the model blocks.

For references (papers, original code), see the resources below.

Any suggestions or corrections are welcome.
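To make the idea of a softmax-score detector concrete, here is a minimal NumPy sketch, not the repository's code. It scores each input by its maximum softmax probability and measures how well that score separates in-distribution from out-of-distribution inputs with AUROC. The helper names (`max_softmax_score`, `auroc`) and the synthetic logits are assumptions made for illustration only.

```python
import numpy as np


def softmax(logits):
    """Row-wise softmax, shifted by the row max for numerical stability."""
    shifted = logits - logits.max(axis=1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=1, keepdims=True)


def max_softmax_score(logits):
    """Confidence score: the largest softmax probability for each example."""
    return softmax(logits).max(axis=1)


def auroc(pos_scores, neg_scores):
    """Probability that a random positive outscores a random negative.

    Ties count as one half. Pairwise O(n*m) comparison, fine for a small demo.
    """
    pos = np.asarray(pos_scores)[:, None]
    neg = np.asarray(neg_scores)[None, :]
    return float((pos > neg).mean() + 0.5 * (pos == neg).mean())


# Hypothetical logits (not real model outputs): in-distribution rows get one
# dominant logit, out-of-distribution rows are noisier with no boosted class.
rng = np.random.default_rng(0)
in_logits = rng.normal(size=(500, 10))
in_logits[np.arange(500), rng.integers(0, 10, size=500)] += 6.0
out_logits = rng.normal(size=(500, 10)) * 2.0

in_scores = max_softmax_score(in_logits)
out_scores = max_softmax_score(out_logits)
print("AUROC (in-distribution as positive):", auroc(in_scores, out_scores))
```

With real model outputs, `sklearn.metrics.roc_auc_score` would normally replace the hand-written `auroc` helper; it is written out here only to keep the sketch dependency-light.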

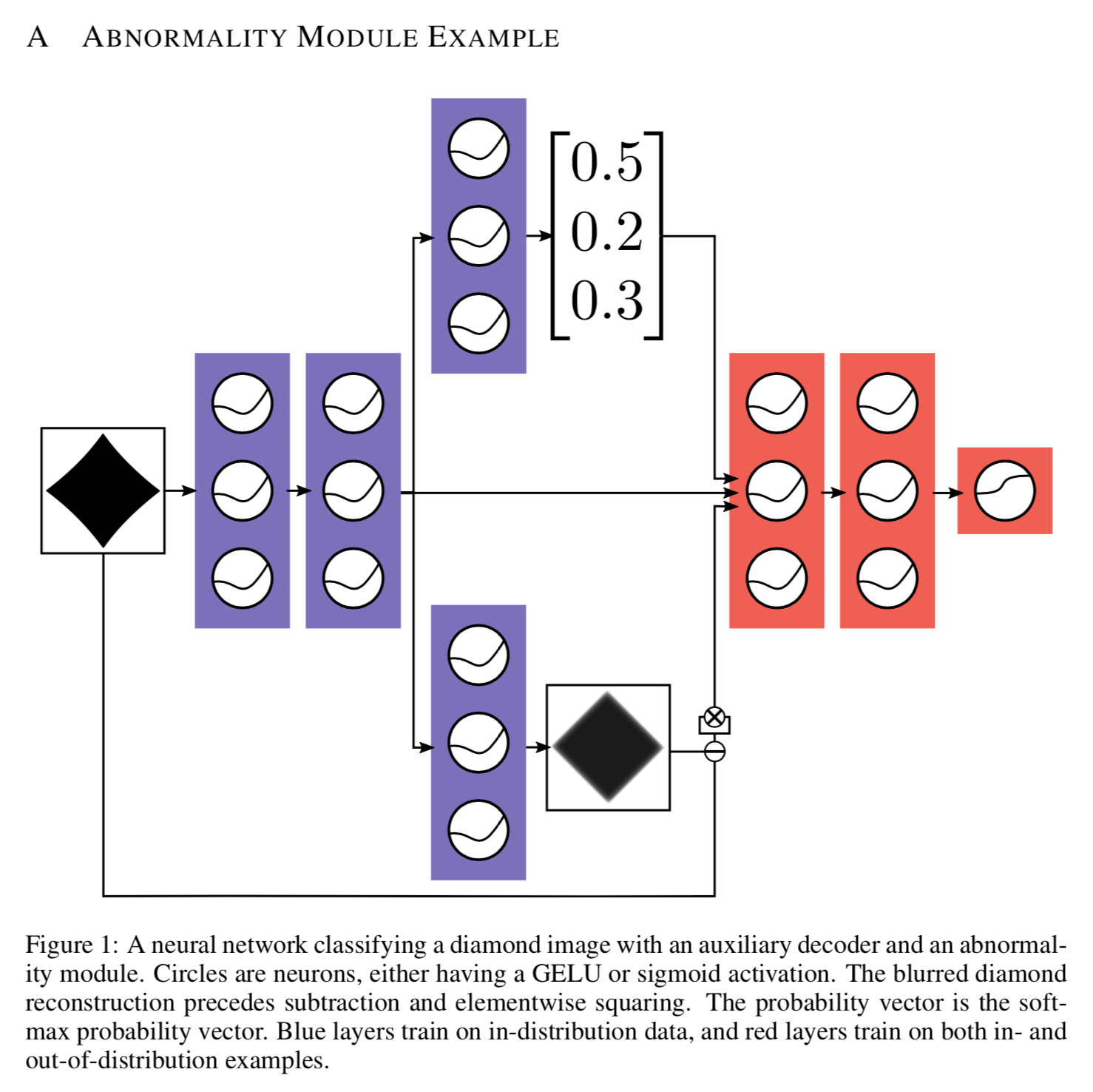

The paper suggests adding an abnormality module to improve detection performance over the plain softmax baseline.
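As a rough illustration of what such a module consumes, the sketch below assembles one plausible feature vector (classifier activations, softmax probabilities, and the elementwise squared error between the input and an auxiliary decoder's reconstruction) and passes it through a single sigmoid unit. This is a simplified reading of the paper's architecture figure, not this repository's implementation: the function names, shapes, and the random untrained weights are all assumptions.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def abnormality_features(x, reconstruction, probs, hidden):
    """Concatenate the signals an abnormality module could look at.

    x, reconstruction: (batch, n_pixels) input and auxiliary-decoder output
    probs:             (batch, n_classes) softmax probabilities
    hidden:            (batch, n_hidden) penultimate classifier activations
    """
    sq_error = (x - reconstruction) ** 2  # elementwise squared reconstruction error
    return np.concatenate([hidden, probs, sq_error], axis=1)


def abnormality_score(features, w, b):
    """Single sigmoid unit standing in for a small trained scorer network."""
    return sigmoid(features @ w + b)


# Hypothetical shapes and random (untrained) values, for illustration only.
rng = np.random.default_rng(0)
batch, n_pixels, n_classes, n_hidden = 4, 784, 10, 128
x = rng.random((batch, n_pixels))
reconstruction = rng.random((batch, n_pixels))
probs = np.full((batch, n_classes), 1.0 / n_classes)
hidden = rng.normal(size=(batch, n_hidden))

features = abnormality_features(x, reconstruction, probs, hidden)
w = rng.normal(scale=0.01, size=features.shape[1])
b = 0.0
scores = abnormality_score(features, w, b)
print(features.shape, scores.shape)
```

In an actual setup the scorer weights would be trained on both clean and distorted examples while the classifier and decoder stay frozen; the random weights here only demonstrate the data flow.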

- python>=3.4

- tensorflow>=1.8

- numpy

- scikit-learn

- h5py (for saving/loading Keras model)

- jupyter, matplotlib (optional, for visualization)

python3 mnist_softmax.py # Pure softmax detector

python3 mnist_abnormality_module.py # Anomaly detector with auxiliary decoder

- Apply moving average to trained parameters, using tf.train.ExponentialMovingAverage()

- GELU nonlinearity
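For readers unfamiliar with the two items above, here is a small standalone sketch of each, written in plain Python/NumPy rather than TensorFlow. The GELU functions follow the standard definition and its common tanh approximation. The `ParameterEMA` class is a hypothetical helper that mirrors the basic shadow-variable update rule used by `tf.train.ExponentialMovingAverage`, without its optional `num_updates` decay schedule.

```python
import math

import numpy as np


def gelu(x):
    """Exact GELU: x * Phi(x), with Phi the standard normal CDF."""
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


def gelu_tanh(x):
    """Widely used tanh approximation of GELU."""
    inner = math.sqrt(2.0 / math.pi) * (x + 0.044715 * x ** 3)
    return 0.5 * x * (1.0 + math.tanh(inner))


class ParameterEMA:
    """Shadow copies updated as shadow = decay * shadow + (1 - decay) * value."""

    def __init__(self, params, decay=0.999):
        self.decay = decay
        # np.array copies, so the shadows do not alias the live parameters.
        self.shadow = {name: np.array(value, dtype=float)
                       for name, value in params.items()}

    def update(self, params):
        for name, value in params.items():
            self.shadow[name] = (self.decay * self.shadow[name]
                                 + (1.0 - self.decay) * value)


# Hypothetical one-tensor "model" whose weights jump from 0 to 1 and stay there.
params = {"w": np.zeros(3)}
ema = ParameterEMA(params, decay=0.9)
params["w"] = np.ones(3)
for _ in range(10):
    ema.update(params)

print(gelu(1.0), gelu_tanh(1.0))
print(ema.shadow["w"])
```

The averaged (shadow) values lag behind the live parameters and smooth out step-to-step noise, which is why they are typically the ones used at evaluation time.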

- https://arxiv.org/abs/1610.02136

- https://github.com/hendrycks/error-detection (Original repository)

@inproceedings{hendrycks17baseline,

author = {Dan Hendrycks and Kevin Gimpel},

title = {A Baseline for Detecting Misclassified and Out-of-Distribution Examples in Neural Networks},

booktitle = {Proceedings of International Conference on Learning Representations},

year = {2017},

}