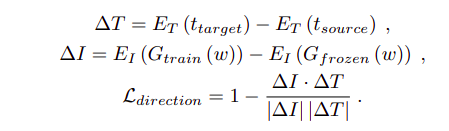

StyleGAN-NADA adapts a StyleGAN2 generator to a new domain. It does so by minimizing the directional CLIP loss:

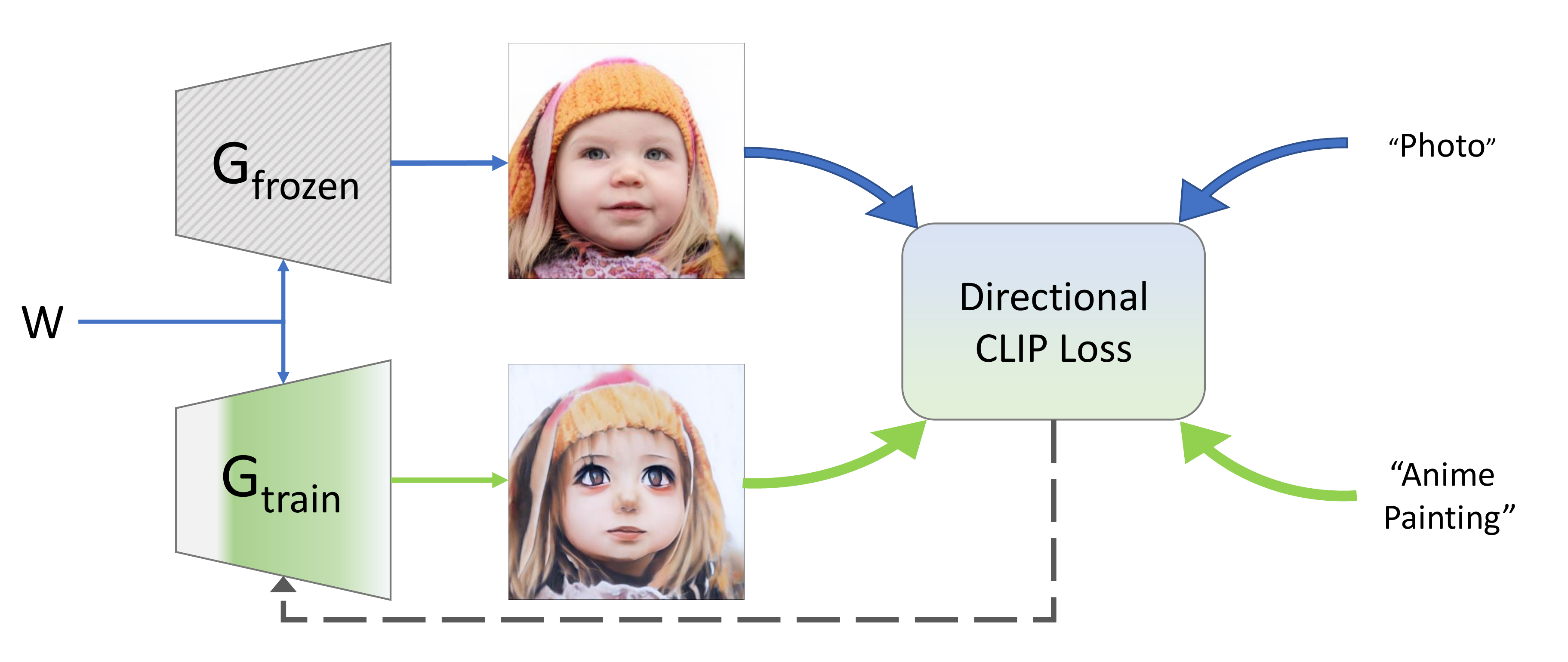

where E_T and E_I are the text and image encoders provided by the CLIP model. G_train is the new generator produced by StyleGAN-NADA, while G_frozen is the original generator, which is kept frozen (not trained).

Conceptually, it computes a direction in CLIP space from a source and a target text prompt, then trains the generator so that its outputs shift along that direction in CLIP space.
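As a rough illustration, the directional loss can be written in plain Python on precomputed embedding vectors. With ΔT = E_T(target prompt) − E_T(source prompt) and ΔI = E_I(G_train(w)) − E_I(G_frozen(w)), the loss is 1 minus the cosine similarity of ΔI and ΔT. This is a minimal sketch, not the project's implementation: it assumes the CLIP embeddings are already computed, works on single vectors instead of batched tensors, and the function names are hypothetical.

```python
import math
from typing import List, Sequence


def _difference(a: Sequence[float], b: Sequence[float]) -> List[float]:
    """Element-wise a - b."""
    return [x - y for x, y in zip(a, b)]


def cosine_similarity(a: Sequence[float], b: Sequence[float], eps: float = 1e-8) -> float:
    """Cosine similarity; eps avoids division by zero for zero-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / max(norm_a * norm_b, eps)


def directional_clip_loss(
    img_frozen: Sequence[float],  # E_I(G_frozen(w)), assumed precomputed
    img_train: Sequence[float],   # E_I(G_train(w)), assumed precomputed
    txt_source: Sequence[float],  # E_T(source prompt), assumed precomputed
    txt_target: Sequence[float],  # E_T(target prompt), assumed precomputed
) -> float:
    """1 - cos(delta_I, delta_T): zero when the image shift follows the text direction."""
    delta_i = _difference(img_train, img_frozen)   # shift in CLIP image space
    delta_t = _difference(txt_target, txt_source)  # direction in CLIP text space
    return 1.0 - cosine_similarity(delta_i, delta_t)


# Toy 2-D "embeddings": the trained image moved exactly along the text direction.
loss = directional_clip_loss([1.0, 0.0], [1.0, 1.0], [0.0, 0.0], [0.0, 2.0])
print(loss)  # 0.0
```

The loss ranges from 0 (image shift aligned with the text direction) to 2 (opposite direction). Only the direction of the shift matters, not its magnitude.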

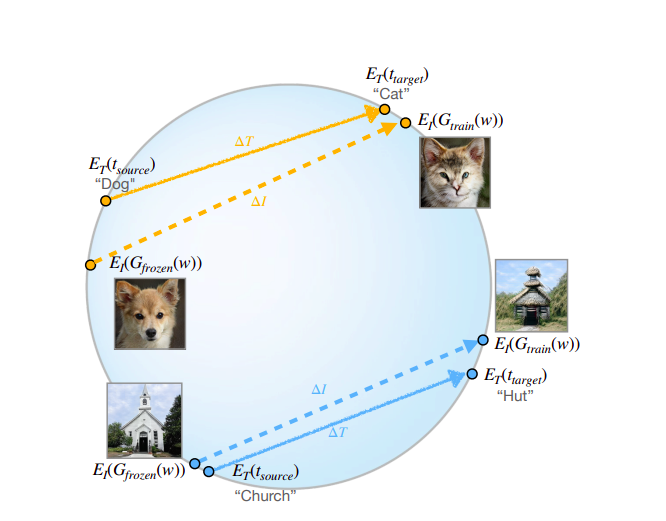

Not all layers of the G_train network are trained. A subset of layers is chosen based on how strongly each one affects the output. This is called adaptive layer freezing.
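A minimal sketch of the selection step, assuming each layer already has a score measuring its influence on the output. In the original paper, as we understand it, this score reflects how much each layer's latent code changes during a few optimization steps toward the target. The names `select_trainable_layers` and `freeze_mask` are hypothetical: the k layers with the highest scores are trained and the rest stay frozen.

```python
from typing import List, Sequence


def select_trainable_layers(layer_scores: Sequence[float], k: int) -> List[int]:
    """Return the indices of the k highest-scoring layers, in ascending index order."""
    if k <= 0:
        return []
    ranked = sorted(range(len(layer_scores)), key=lambda i: layer_scores[i], reverse=True)
    return sorted(ranked[:k])


def freeze_mask(num_layers: int, trainable: Sequence[int]) -> List[bool]:
    """True means the layer stays frozen for this round of training."""
    chosen = set(trainable)
    return [i not in chosen for i in range(num_layers)]


layer_scores = [0.1, 0.9, 0.3, 0.7, 0.2]  # hypothetical per-layer scores
trainable = select_trainable_layers(layer_scores, k=2)
print(trainable)                                  # [1, 3]
print(freeze_mask(len(layer_scores), trainable))  # [True, False, True, False, True]
```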

For more details, the original paper is available here.

A publicly accessible Colab notebook for training and running the adapted network is available here. It lets you select a model to adapt, enter the source and target domains, train the network, and use it to generate any number of images.

Some implementation details were changed. Here we present the changes, some results, and a comparison with the original model.

- The adaptive layer freezing approach was made more scalable: instead of computing the best `k` layers to train at every iteration, the selection is recomputed only every `auto_layer_interval` iterations. In addition, every `auto_layer_falloff` iterations the number of trained layers decreases, allowing for finer tuning.

- The global CLIP loss was reintroduced. The loss is now computed as a weighted sum of the directional and global CLIP losses, and the weighting can be adjusted with a slider in the Colab.

- The original paper uses a set of prompts generated from templates applied to the input prompts. I experimented without this feature and found no major differences, so templates are now disabled by default, but they can still be enabled.
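The scalable layer-freezing schedule described in the first item can be sketched as follows. `auto_layer_interval` and `auto_layer_falloff` are the parameters named above; `k_initial`, `k_min`, the helper names, and the assumption that the number of trained layers drops by exactly one per falloff period are hypothetical choices for illustration, not necessarily what the Colab does.

```python
from typing import List, Tuple


def should_recompute_layers(iteration: int, auto_layer_interval: int) -> bool:
    """Run the (expensive) layer selection only every auto_layer_interval iterations."""
    return iteration % auto_layer_interval == 0


def layers_to_train(iteration: int, k_initial: int, auto_layer_falloff: int, k_min: int = 1) -> int:
    """Assumed rule: one fewer trainable layer per auto_layer_falloff iterations, never below k_min."""
    return max(k_min, k_initial - iteration // auto_layer_falloff)


def recompute_schedule(
    num_iters: int,
    auto_layer_interval: int,
    auto_layer_falloff: int,
    k_initial: int,
    k_min: int = 1,
) -> List[Tuple[int, int]]:
    """(iteration, k) pairs at which the set of trainable layers is refreshed."""
    return [
        (it, layers_to_train(it, k_initial, auto_layer_falloff, k_min))
        for it in range(num_iters)
        if should_recompute_layers(it, auto_layer_interval)
    ]


schedule = recompute_schedule(400, auto_layer_interval=50, auto_layer_falloff=100, k_initial=6)
print(schedule)
# [(0, 6), (50, 6), (100, 5), (150, 5), (200, 4), (250, 4), (300, 3), (350, 3)]
```

With these toy settings the layer selection runs 8 times in 400 iterations instead of 400, while the layer budget shrinks from 6 to 3. Because the trainable set is only refreshed at recompute points, a decrease in k takes effect at the next recompute.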

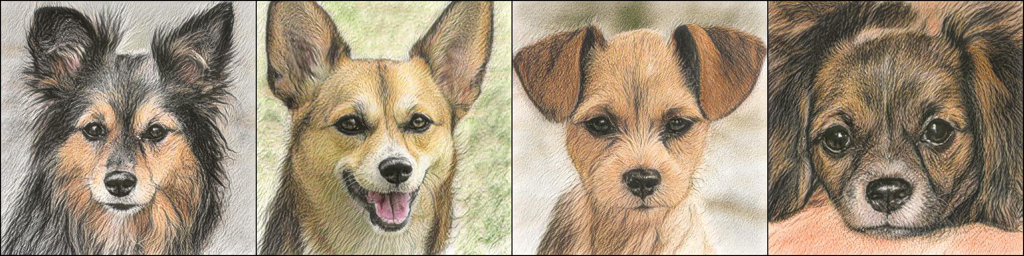

Starting / Original / Ours. Improvement: 5x faster training and fewer artifacts.

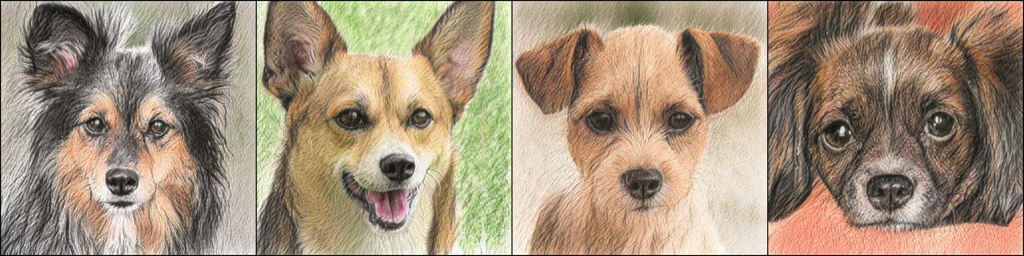

Starting / Original / Ours. Improvement: 4x speedup.

Starting / Original / Ours. Improvement: 3x speedup.

Starting / Original / Ours. Improvement: 3x speedup (note the reduced artifacts!).

Starting / Original / Ours. Improvement: 5x speedup, and colors are preserved (note the reduced artifacts!).

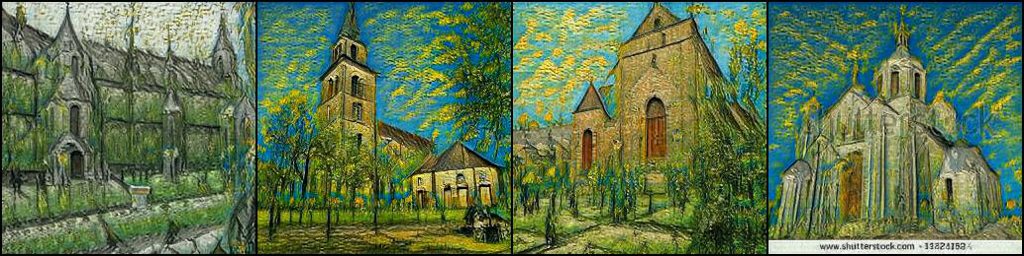

Starting / Original / Ours. Improvement: loss weighted 80% directional, 20% global.
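The 80/20 split corresponds to a weighted sum of the two CLIP losses. A minimal sketch, assuming the slider sets a single weight (here the hypothetical `directional_weight`) for the directional term and the global term receives the remainder. The global loss is taken as 1 minus the cosine similarity between E_I(G_train(w)) and E_T(target prompt). The function names are hypothetical and the inputs are precomputed embeddings or loss values.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float], eps: float = 1e-8) -> float:
    """Cosine similarity; eps avoids division by zero for zero-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / max(norm_a * norm_b, eps)


def global_clip_loss(img_train: Sequence[float], txt_target: Sequence[float]) -> float:
    """1 - cos(E_I(G_train(w)), E_T(target)): pulls the image toward the target text."""
    return 1.0 - cosine_similarity(img_train, txt_target)


def combined_clip_loss(directional_loss: float, global_loss: float, directional_weight: float = 0.8) -> float:
    """Weighted sum: directional_weight for the directional term, the rest for the global term."""
    if not 0.0 <= directional_weight <= 1.0:
        raise ValueError("directional_weight must be in [0, 1]")
    return directional_weight * directional_loss + (1.0 - directional_weight) * global_loss


g = global_clip_loss([1.0, 0.0], [0.0, 1.0])  # orthogonal toy vectors -> 1.0
total = combined_clip_loss(0.5, g)            # 0.8 * 0.5 + 0.2 * 1.0, approximately 0.6
```

Setting `directional_weight=1.0` recovers the purely directional objective.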