A zero-to-master API built with Node.js, Express, MongoDB, and AWS.

- Simple CRUD operations for Users REST API using Express and Mongoose

- Secure the API (Authentication and Authorization concerns)

- Research and apply good practices on top of a NodeJS Express API

- Apply some resilience layer on top of database connection (maybe using some sort of circuit breaker)*¹

- Migrate the MongoDB workload to AWS, setting up a Multi-AZ infrastructure to provide High Availability

- Configure an OpenAPI Specification work environment

- Migrate the Users' microservice to AWS on small Linux machines

- Configure Continuous Deployment with AWS CodeDeploy

- Set up a High Available and Fault-Tolerant environment for the API, by using at least 3 AZ in a Region/VPC (ELB)

- Integrate CodeDeploy Blue/Green Deployment with Auto-Scaling and Application Load Balancer

- Set up Observability in the application at general, using AWS X-Ray, CloudTrail, VPC Flow Logs, and (maybe) ELK Stack.

- Provide infrastructure as code by creating a CloudFormation Stack of all the stuff

At this point we have a simple REST API with basic settings and CRUD operations using Mongo running in Docker.

A lot of improvement is still needed: we have some security vulnerabilities (e.g. CSRF, CORS), some layers that could be separated to avoid code duplication as the application grows (e.g. the code in userController), and room for a better architectural organization before we reach a production-ready stage.

We will address microservices concerns later on.

We need to improve our REST implementation as well to reach level 3 of Richardson's Maturity Model (RMM), and implement some OpenAPI specification in some way (eg. swagger docs).

Instructions:

- Execute `docker-compose up -d` at the root level to run the `mongo` and `mongo-express` services.

- Then you can hit `http://localhost:8081` in your browser to perform all the MongoDB management tasks needed for this project.

- Run the app with `npm start` =P

Note: There is a Postman collection (with all the endpoints used) at the root level that can be imported.

We have a common user authentication flow built on JWT, which may be used in production for small apps. User management and authentication tasks usually come with a lot of undesirable effort and complexity, so it isn't worth spending too much time on a sample implementation. It's always a good idea to consider an "Authentication as a Service" provider like Auth0, Firebase, or AWS Cognito and read their docs; this way we can focus on our business goals.

But a solid understanding of the OAuth2 and OpenID specifications will be very helpful in your life...

Instructions:

All the instructions from section 1 apply here; you should also import the Postman collection again, because it was updated with the new register and login endpoints.

- You need to call `register`, then `login`, take the `token` from the response, and use it in the `Authorization: JWT <token>` HTTP header for all the endpoints, which are all secured now.
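As a rough illustration of the header format described above, here is a minimal sketch of how an Express-style middleware could read an `Authorization: JWT <token>` header. The names `extractJwt` and `requireToken` are hypothetical and are not taken from this repository, and verifying the token signature (normally done with a JWT library) is deliberately left out.

```javascript
// Sketch only: `extractJwt` and `requireToken` are hypothetical helpers,
// not functions from this repository.

// Returns the token from an "Authorization: JWT <token>" header, or null.
function extractJwt(headerValue) {
  if (typeof headerValue !== 'string') return null;
  const [scheme, token, ...rest] = headerValue.trim().split(/\s+/);
  if (scheme !== 'JWT' || !token || rest.length > 0) return null;
  return token;
}

// Express-style middleware: rejects requests without a well-formed header.
// Signature verification of the token is intentionally not shown here.
function requireToken(req, res, next) {
  const token = extractJwt(req.headers.authorization);
  if (!token) {
    res.statusCode = 401;
    res.end(JSON.stringify({ message: 'Unauthorized' }));
    return;
  }
  req.token = token;
  next();
}
```

A client would call `register`, then `login`, and send the returned token in that header on every subsequent request.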

Since it is a huge topic, I split it up into several releases.

Since Node.js runs our JavaScript on a single thread and works by delegating tasks to the Event Loop, we need to take care with slow operations (e.g. API and database calls) to avoid blocking our app, so we need to use asynchronous methods wherever possible.

Therefore we're now invoking the async versions of all the Mongoose and bcrypt methods, and properly handling application errors, whether sync or async, through an error-handling middleware.
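To make the idea of routing both sync and async errors through one middleware more concrete, here is a minimal sketch. The names `asyncHandler`, `HttpError`, and `handleErrorSketch` are assumptions made for illustration; the project's real `handleError` middleware may look quite different.

```javascript
// Sketch only: these helpers are hypothetical, not the repository's code.

// Wraps a route handler so that thrown errors and rejected promises
// both end up in next(err), where the error middleware can see them.
function asyncHandler(handler) {
  return function wrapped(req, res, next) {
    return Promise.resolve()
      .then(() => handler(req, res, next))
      .catch(next);
  };
}

// An error that carries the HTTP status code to answer with.
class HttpError extends Error {
  constructor(statusCode, message) {
    super(message);
    this.statusCode = statusCode;
  }
}

// Express-style error middleware (four arguments).
// Unknown errors become a generic 500 so internals are not leaked.
function handleErrorSketch(err, req, res, next) {
  const statusCode = err.statusCode || 500;
  const message = statusCode === 500 ? 'Internal Server Error' : err.message;
  res.statusCode = statusCode;
  res.setHeader('Content-Type', 'application/json');
  res.end(JSON.stringify({ status: 'error', statusCode, message }));
}
```

With Express, a route would be registered as `router.get('/users/:id', asyncHandler(getUser))` and the error middleware added last with `app.use(handleErrorSketch)`.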

We still need to run in cluster mode and fork processes to make use of the cores available to us (we'll do it later using PM2).

We can now consider this API REST level 2 of the RMM: we are properly representing resources and working with HTTP status codes, so at this point we have a solid API that can work fine inside an organization.

But if your goal is to build a public API, we need to think about a way to make the API self-documenting and to provide a consistent response format across the entire API.

It is exactly at this point that level 3 of the RMM helps, introducing the concepts of hypermedia and MIME types.

We still need to work with an OpenAPI spec and, still talking about REST APIs, provide a response cache layer too.
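The hypermedia idea behind level 3 can be sketched as a response that tells the client what it can do next. The following is only an illustration: the `toUserResource` helper, the `/v1/users` paths, and the loosely HAL-style `_links` shape are assumptions, not something this API implements today.

```javascript
// Sketch only: `toUserResource` and the link layout are hypothetical.

// Decorates a plain user object with links to the related actions,
// so a client can discover them instead of hard-coding URLs.
function toUserResource(user, baseUrl = '/v1') {
  const self = `${baseUrl}/users/${user.id}`;
  return {
    ...user,
    _links: {
      self: { href: self, method: 'GET' },
      update: { href: self, method: 'PUT' },
      delete: { href: self, method: 'DELETE' },
      collection: { href: `${baseUrl}/users`, method: 'GET' },
    },
  };
}
```

A controller could then answer with `res.json(toUserResource(user))` instead of returning the bare document.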

Note: The API has versioning now.

Instructions:

Just a note here, you'll need to import the updated POSTMAN collection again.

Important security aspects, such as protection against Cross-Site Request Forgery (CSRF) attacks and the CORS settings, are OK now.

We'll need to come back here when a UI has to integrate with this API.

Some common performance settings, such as gzip compression, were applied, but we need to keep in mind that a lot in this area can (and probably will) be done in the reverse proxy later.

The configuration of the API was reorganized for better readability.

Instructions:

For configuration, this project uses a combination of dotenv (through dotenv-safe) and convict:

the values that should be stored as environment variables (such as keys, secrets, and passwords) are listed in the `.env.example` file,

and the other values, which can safely be shared with other team members, can be stored in files like `development.json` and `production.json` under the `config` directory.
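The split between secrets and shareable settings can be sketched without any library. Note that the project itself relies on dotenv-safe and convict for this; the sketch below does not use them, and the names `buildConfig`, `JWT_SECRET`, and `MONGO_URI` are hypothetical.

```javascript
// Sketch only: a library-free illustration of the layering.
// The variable names below are assumptions, not the project's real keys.
const REQUIRED_SECRETS = ['JWT_SECRET', 'MONGO_URI'];

// `env` holds the secrets (normally process.env);
// `fileValues` holds the non-secret settings (normally a JSON file
// such as config/development.json).
function buildConfig(env, fileValues) {
  const missing = REQUIRED_SECRETS.filter((key) => !env[key]);
  if (missing.length > 0) {
    // Fail fast at startup instead of at the first request.
    throw new Error(`Missing environment variables: ${missing.join(', ')}`);
  }
  return Object.freeze({
    ...fileValues,
    jwtSecret: env.JWT_SECRET,
    mongoUri: env.MONGO_URI,
  });
}
```

Usage would look like `buildConfig(process.env, require('./config/development.json'))`, so secrets never need to be committed alongside the JSON files.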

TL;DR: Node.js does not rely on a multi-threading model for your application code, so you need to scale your app early with the Node.js Cluster model. You don't need to do it on your own, though; you may use a Node process manager like PM2 instead.

As I said earlier, Node.js runs our code on a single thread on top of the Event Loop model, which greatly simplifies development: we avoid most of the multi-threading and race-condition concerns that we need to handle in languages like C# and Java to perform well on a multi-core server.

In Node.js we don't need to worry about that kind of thing, but on the other hand we need to be concerned with the Node.js Cluster module: how it handles worker processes, how they are spawned according to the number of cores on the host machine, how to fork a new child worker, and so on.

To help us with this task, we can use a Node process manager; the most popular nowadays is PM2.

PM2 will fork processes according to the host's capacity (for example, one worker per CPU core); besides that, it handles restarts, errors, and monitoring, among other tasks.

If needed, PM2 can also handle things like deploying to production directly on the destination host, but I prefer to limit PM2 to cluster management. One reason is that, when working with AWS, deployments to EC2 hosts are faster (and better organized) through a "Golden AMI" strategy; this way we have the PM2 package installed globally and a symlink to it at the project level.

We need to close the database connection whenever the PM2 process is killed.

I'll reorganize the database access later into some kind of repository within a hexagonal architecture (maybe Onion architecture).

I'll show how to work with "Golden AMI" + ASG + Placement Groups + Blue/Green Deployments in AWS later.

To speed up build time and app initialization we may globally install the main packages, like `express` and `body-parser`.

Instructions:

Install the pm2 package globally and create an npm link to it in this project.

Notice that the `npm start` script will now run `pm2 start ./pm2.config.js`. I chose to keep using nodemon for development, so I created a new npm script, `dev`, for it.

- All PM2 settings are in the `pm2.config.js` file

- All scripts needed for day-to-day tasks are included in the `package.json` scripts section.

I'm using the main settings needed for a ready-to-production app.

- Health Checks - To signal to your Load Balancer that an instance is healthy and does not need to be restarted, by checking whatever you want, e.g. your DB connections.

- Graceful Shutdown - To have a chance to dispose of all the resources that Node.js does not manage for you, e.g. DB connections, before your app is killed.
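The two items above can be sketched with nothing but the Node.js standard library. This is an illustration under assumptions: the function names are hypothetical, the `/healthcheck` path and port `3000` simply mirror the values mentioned later for the load balancer, and `isHealthy` / `closeResources` stand in for whatever checks and cleanup your app needs (for example, checking that the Mongoose connection is open and closing it).

```javascript
const http = require('node:http');

// Sketch only: hypothetical helpers, not the repository's implementation.

// Builds a request handler that answers 200 when the app is healthy
// and 503 otherwise, so a load balancer can take the instance out.
function createHealthHandler(isHealthy) {
  return function healthHandler(req, res) {
    if (req.url !== '/healthcheck') {
      res.statusCode = 404;
      res.end();
      return;
    }
    let healthy = false;
    try {
      healthy = Boolean(isHealthy());
    } catch (err) {
      healthy = false; // a failing check counts as unhealthy
    }
    res.statusCode = healthy ? 200 : 503;
    res.setHeader('Content-Type', 'application/json');
    res.end(JSON.stringify({ status: healthy ? 'ok' : 'unavailable' }));
  };
}

// Starts a small dedicated health check server (port is an assumption).
function startHealthServer(isHealthy, port = 3000) {
  return http.createServer(createHealthHandler(isHealthy)).listen(port);
}

// Stops accepting connections, releases resources, then exits.
// `proc` is injectable so the function can be exercised without real signals.
function registerGracefulShutdown(server, closeResources, proc = process) {
  const shutdown = () => {
    server.close(async () => {
      try {
        await closeResources();
        proc.exit(0);
      } catch (err) {
        proc.exit(1);
      }
    });
  };
  proc.once('SIGINT', shutdown);
  proc.once('SIGTERM', shutdown);
}
```

PM2 sends `SIGINT` when it stops or restarts a process and force-kills it after its kill timeout, so the cleanup has to finish within that window.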

There are many ways to handle errors in a Node.js API; here I chose to work closely with middleware and HTTP status codes.

I'm just mentioning it here to reinforce the importance of handling API errors; to understand it better, start by looking at the handleError middleware.

We're still using console.log for logging, but since it can block depending on where the output goes, we need to change it to a non-blocking library like Winston with some kind of request-correlation strategy.

Winston also integrates well with other APM solutions through its transports and formats, but we may still end up redoing some work when integrating our logging strategy with our final APM solution and the cloud provider's logging tools.

AWS offers great solutions for logging, Azure has its own solutions too, and so on.

Plus, in some cases, we may still need a third-party APM solution like Elastic APM, Dynatrace, or Datadog, among others.

Therefore we need to keep all of this in mind before either choosing and configuring a non-blocking library or spreading lots of console.log calls across our app.
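A request-correlation strategy can be sketched with the standard library alone. The sketch below only illustrates the correlation part (every log line carries the ID of the request that produced it); it is not a replacement for Winston, it does not address the blocking concern, and the names `correlationMiddleware`, `formatLog`, and the `X-Request-Id` header are assumptions.

```javascript
const { AsyncLocalStorage } = require('node:async_hooks');
const { randomUUID } = require('node:crypto');

// Sketch only: hypothetical helpers, not the repository's logging code.
const requestContext = new AsyncLocalStorage();

// Express-style middleware: reuses an incoming request ID or creates one,
// and makes it available to everything that runs for this request.
function correlationMiddleware(req, res, next) {
  const requestId = req.headers['x-request-id'] || randomUUID();
  res.setHeader('X-Request-Id', requestId);
  requestContext.run({ requestId }, next);
}

// Builds one structured (JSON) log line, tagged with the current request ID
// when called inside a request.
function formatLog(level, message, extra = {}) {
  const store = requestContext.getStore();
  return JSON.stringify({
    timestamp: new Date().toISOString(),
    level,
    message,
    requestId: store ? store.requestId : undefined,
    ...extra,
  });
}

function log(level, message, extra) {
  process.stdout.write(`${formatLog(level, message, extra)}\n`);
}
```

Because the ID travels with the asynchronous context, a call such as `log('info', 'user created')` deep inside a controller does not need the request object passed down to it.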

I won't make any application architecture decisions here; the better way to do that is in another repository focused on the architecture itself.

Apply some resilience layer on top of database connection (maybe using some sort of circuit breaker)

There is no need for us to handle this ourselves: mongoose already takes care of it, though you may adjust some advanced options.

Migrate the MongoDB workload to AWS, setting up a Multi-AZ infrastructure to provide High Availability

Prefer moving towards a fully managed service for "ready-to-production" databases, AWS does not offer a service like this for MongoDB, and the most popular way to achieve this in AWS today is through MongoDB Atlas.

MongoDB Atlas has a free tier, but you should not use it for production workloads at all.

To serve a reliable and highly scalable production workload, our database infrastructure would generally need at minimum a primary and two secondary hosts, each in a separate AZ, with Multi-AZ failover enabled (and sometimes Read Replicas). We would also get a significant performance improvement by reaching our database through VPC Endpoints (for VPC-internal communication) instead of going over the public internet.

To reach the goal above in MongoDB Atlas we would need an M10 Dedicated Cluster, which offers 2 GB RAM, 10 GB of storage, and 100 IOPS, running in an isolated VPC with VPC Peering (and VPC Endpoint) enabled.

The estimated price for this 3-node replica set is 57 USD per month. Although cost is not the focus of this project, I can't avoid some comparison with other AWS database-as-a-service solutions.

- Atlas vs. AWS DynamoDB - We can reach much more capacity in terms of data storage, operations per second, and scalability in DynamoDB for a much lower price, but I believe that MongoDB offers better ORM options, like `mongoose`, that help us in terms of development effort.

  We may end up using a Redis cache layer to avoid hitting our database all the time. It's a very common pattern regardless of the database solution, but it increases costs and development effort. With DynamoDB we can use DynamoDB Accelerator (DAX), a cache layer for DynamoDB that can improve query latency from milliseconds to microseconds without requiring changes to application logic, at half the price of a minimal production MongoDB Atlas setup.

- Atlas vs. AWS DocumentDB - AWS DocumentDB is a fully managed, MongoDB-compatible database-as-a-service product, but its initial costs are so high that it isn't worth comparing; it's a product for Big Data solutions. I'm only mentioning it here because of its MongoDB compatibility.

- Atlas vs. Amazon Aurora Serverless - If you're willing to go with the `sequelize` relational ORM, Amazon Aurora is your best option, mainly for early-stage projects or MVPs whose workload you don't know yet. You'll have a fully managed, highly available database with a serverless pricing model; in short, you pay only for the resources consumed while your DB is active, not for underutilized provisioned hosts that need to be up and running all the time. Plus, once your solution has evolved and you know your workload, you can decrease your costs by purchasing Reserved Instances.

- Atlas vs. custom MongoDB cluster - You may build your Replica Set with minimal effort by using a `CloudFormation` template. AWS offers a good one, and you can find great options in the community, including `Terraform` templates; you can also use a MongoDB Certified by Bitnami AMI as the host instead of a fresh EC2 in these templates. Optionally, you can set up AWS Backup for your EC2 hosts and then update the `CloudFormation` templates you've used if it is not already included. The downside, of course, is that you won't have a fully managed service, although the cluster itself is configured by a template. You can start with an infrastructure-as-code template and later move to a fully managed product like MongoDB Atlas.

For the sake of simplicity, I'll use the MongoDB Atlas free tier in this project.

A rock-solid API must start with design, team collaboration, mocking, and approval; only then can we start the hands-on work. Engaging the team in this approach is beneficial in many ways, and to enable it I like to use a GUI editor. My favorite is Apicurio Studio, a web-based OpenAPI editor that enables team collaboration and mocking of your API.

Apicurio - How an OpenAPI GUI can help us

Now, we can check and test our API through the link http://localhost:4000/v1/api-docs/

Going from scratch to a production-ready, highly available, and elastic infrastructure is a journey. I can't go deep into it here (I'll cover it in some articles later), so I'll focus on the pieces that matter for the purpose of this project, while still showing the main details that we may come across while setting up our application.

Before thinking about scalability, load-balancing, high-availability, and disaster recovery concerns, we need to have our app up and running in a single AWS EC2 instance.

Launch your EC2 instance, install everything needed, copy your app to it, and make sure the app is up and running.

You may create a public directory at the root level with `mkdir -m777 public` so you can copy files from your machine with the `scp` command as a non-root user, like so: `scp -i "MyKeyPair.pem" /path/SampleFile.txt ec2-user@ec2-54-56-251-246.compute-1.amazonaws.com:public/`

Tip: Prefer using an Amazon Linux 2 AMI to launch your EC2

Don't worry too much about security here; just focus on getting your app into a working state as soon as possible.

Create a resource role for your EC2 (later we'll add some policies to it).

For this kind of app I like to use the general-purpose t3 instance family (`micro`, to be more specific), because it's cheap yet network-efficient and burstable.

Now imagine you need to recreate your EC2: think about everything you'll need to install and set up again. Plus, imagine all of that being done by tons of scripts in the UserData section whenever a new EC2 is launched by an Auto Scaling activity.

Think about how long it takes to create a new EC2 instance...

The proper way to handle this is a strategy called Golden AMI, which consists of a prebuilt application stored inside an AMI to save time whenever a new instance needs to be provisioned.

To save you time I've created a Golden AMI for Node.js apps, 1-Click Ready Node.js LTS on Amazon Linux 2. By using this AMI you'll have a "ready-to-production" Node.js server already configured.

As the AMI name suggests, you just need to launch a fresh EC2 from this AMI. You will have an EC2 up and running with Node.js LTS (stable version) and the PM2 package installed globally.

You should consider treating some of the packages you use locally in your app as global packages. Think about how you use them in other apps, and how your devs/colleagues have those packages installed on their machines too. Take a look at the Node.js packages that we install as local packages but that are constantly installed with the same version and code for each new app we create: npm common packages list.

I'm listing cors, bcrypt, helmet, pm2, convict, and body-parser. You'll notice that their releases don't occur weekly; updates are closer to monthly. Also, for some packages, such as helmet (a package that handles security concerns in your app), as soon as a version with a breaking change is released you'll probably want to update it in all apps across your organization as soon as possible, app by app, of course.

If you think I'm going crazy, just notice that pm2 is usually installed globally (check their docs and google it). So can't packages like convict, cors, or even helmet be shared across our apps in the same way as pm2?

This is a controversial topic with pros and cons; I'm just pointing it out as something to be considered. Personally, I like to have pm2 and helmet installed globally, for security/compliance reasons, and the others locally.

TL;DR: You may save costs by moving some of your local packages to global installs, because you'll decrease the overall deployment time of your app; this way you can update the ASG `scale-out` policy to be triggered when it reaches, for example, 85% `CPU Utilization` instead of 70%.

Once you have your Node.js app running from a Golden AMI, I recommend setting up continuous deployment via CodeDeploy. Once your single instance with CodeDeploy is working properly, move on to the next topic in your infrastructure setup, Auto-Scaling + ELB.

Note: You'll need to go back to CodeDeploy later to configure your Blue-Green Deployment over ASG.

AWS CodeDeploy is a powerful service for automating deployments to Amazon EC2, AWS Lambda, and on-premises servers. However, it can take some effort to get complex deployments up and running or to identify the error in your application when something goes wrong.

The proper way to deal with CodeDeploy is to test and debug it locally and, once everything is working properly, fire your deployment process in AWS CodeDeploy.

However, getting a CodeDeploy environment up and running locally is not so trivial, at least until now! 🍻

You can use Docker to run an Amazon Linux 2 instance along with the CodeDeploy Agent (and other stuff); all the instructions needed can be found at: richardsilveira/amazonlinux2-codedeploy

Note: Follow the instructions that I've prepared there and take a deeper look at my appspec.yml and the scripts files in this repository.

I'll show some detailed points that are not so easy to find out, and which you may come across while setting up your CodeDeploy environment.

You'll need to attach a Service Role to your Deployment Group. To integrate it with your Auto Scaling Group environment, create one (it can be named CodeDeployServiceRole) and attach to it the AWS managed policies AWSCodeDeployRole and AutoScalingFullAccess, plus a custom policy like the one in my gist: CodeDeployAdditionalPermissionsForEC2ASG.

Tagging your EC2 instances properly will help you in many ways. With CodeDeploy, I always try to have two Deployment Groups for a single Application, like so: ProductionInstances-SingleMachine / ProductionInstances, and tagging my instances lets CodeDeploy identify them by tags.

You can install the CodeDeploy agent either as part of your Golden AMI or through User Data scripts. AWS doesn't recommend installing it as part of your Golden AMI, because you'll need to update the agent manually and you may face issues at some point (when you find out the agent needs updating). On the other hand, you'll get a considerable reduction in deployment time, so it is not such a bad practice.

See more about it in the Ordering execution of launch script section of the AWS docs Under the Hood: AWS CodeDeploy and Auto Scaling Integration

Install script: EC2 User Data to Install the Code Deploy Agent

Set up a High Available and Fault-Tolerant environment for the API, by using at least 3 AZ in a Region/VPC (ELB) - v7.0.0

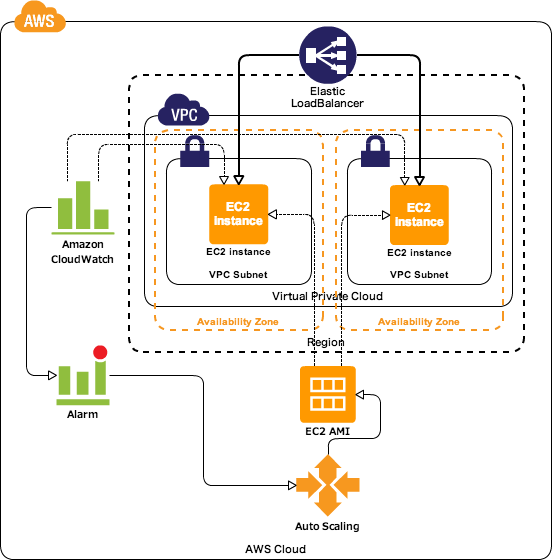

We'll build an elastic and highly available environment in AWS like the one in the image, but instead of two AZs, try to work with at least 3; it's a common best practice for many infrastructure scaling scenarios.

Since I don't want to bore you, I'll move quickly through the key points of ASG + ELB, in sequence.

I'll only comment on properties where you may face issues or where I feel the need to share some personal experience.

- You can go with almost all the default settings. You'll need to use `/healthcheck` for the `Health check path` and override the `Port` to use `3000` instead of the default `80`, according to what was done in the Health Check and Graceful Shutdown section.

- Go with an Application Load Balancer with port 80 as your Listener

- Select at least three Availability Zones for your Load Balancer; you must also use them in your Auto Scaling group later on.

- In your Security Group settings, enable ports `80` and `3000` (the health check server) from the internet (0.0.0.0/0 and ::/0, the IPv4 and IPv6 CIDRs) in `Inbound rules`.

- Update the `Inbound rules` of the Security Group associated with your EC2 to allow ONLY HTTP traffic (port 80) coming from the Security Group you're using in the ELB.

- You may enable AWS WAF (Web Application Firewall) to protect your website from common attack techniques like SQL injection and Cross-Site Scripting (XSS)

- You may enable AWS Config for compliance (you can define rules about AWS resource configurations and AWS Config will help you evaluate them and take action)

- To be happy, you should reference the Golden AMI for Node.js apps that I've created (and give me a review): 1-Click Ready Node.js LTS on Amazon Linux 2. By using this AMI you'll have a "ready-to-production" Node.js server already configured.

- You must select the Security Group you've created for the EC2 (not the one for the ALB)

- Tag your instances properly here (I like to use `Name` and `Stage` tags).

- Select a Placement Group from the beginning of your project. For startups and small projects at early stages it is common to use the `Spread` Placement Group; for large apps, `Partition`; and the `Cluster` strategy for Big Data/Machine Learning batch processes, because of its low network latency and high network throughput.

  It's a summarized and opinionated point of view; I recommend taking a deeper look at Placement Groups and how they work.

- To integrate your ASG with CodeDeploy, you need to install the CodeDeploy agent; therefore, in the `User data` section use the following script: EC2 User Data to Install the Code Deploy Agent

- Select the same AZs that you selected in the ALB settings.

- For `Health Check Type` select `ELB`.

- Create a scale-out and a scale-in policy to add and remove instances according to some CloudWatch threshold, e.g. CPU (for memory you'll need to install the CloudWatch Agent)

  Pay attention to the cooldown period for your scale-in policy; usually it should be shorter than the one for the scale-out policy, to help you reduce costs significantly.

- You can create a Scheduled scaling policy for predictable load changes, e.g. if your app has a traffic spike every day at lunchtime, it's a good idea to scale out some extra machines to handle the incoming load properly.

- Creating a notification for launch, terminate, and failure activities sounds professional, but to do so you'll need to create a Lambda Function subscribed to it and code this function to notify someone via Slack, Telegram, or other channels.

You've already done the hard work in the "Configure Continuous Deployment with AWS CodeDeploy" section (or at least read about it); now you'll just update some settings to deploy your application through a "Blue/Green" deployment strategy.

Don't forget to test your deployment locally with Docker using: richardsilveira/amazonlinux2-codedeploy

Replaces the instances in the deployment group with new instances and deploys the latest application revision to them. After instances in the replacement environment are registered with a load balancer, instances from the original environment are deregistered and can be terminated. -by AWS.

I'll quickly show the common settings for production apps.

- Deployment type: `Blue/green`

- Environment configuration: `Automatically copy Amazon EC2 Auto Scaling group`

- Traffic rerouting: `Reroute traffic immediately`

- Terminate the original instances in the deployment group: `1 hour`.

- Deployment configuration: `CodeDeployDefault.OneAtATime`

- Load balancer: Enable and select your ALB and its Target Group

- Rollbacks: `Roll back when a deployment fails`

Set up Observability in the application at general, using AWS X-Ray, CloudTrail, VPC Flow Logs, and (maybe) ELK Stack - v9.0.0

Observability is a term related to logging, tracing, and analysis of microservice inter-communication and of user request interactions with your application. To promote observability across your microservices, the best strategy nowadays is adopting an APM tool such as AWS X-Ray, Elastic APM, Dynatrace, or Datadog. An APM tool provides an easy way to instrument your app by offering an easy-to-use SDK.

Instrumentation means measuring the product's performance, diagnosing errors, and writing trace information.

Instead of coding here, it sounds like a better idea to have one dedicated repository about AWS X-Ray and another about Elasticsearch.

You need to trace and audit not only your application user interactions, but everything that is changed in your AWS account. To accomplish it, AWS CloudTrail should be your first choice. CloudTrail offers:

- Internal monitoring of API calls being made

- Audit changes to AWS Resources by your users

- Visibility into user and resource activity

AWS CloudTrail is enabled by default and you can either create a new trail or check for recent events that happened in your AWS account.

VPC Flow Logs is an AWS feature that enables network monitoring. Building a network-monitored environment in AWS is a must for the security and compliance of your infrastructure, and it is also a huge topic. I have written an article about it, published by the codeburst.io editors on Medium: Network monitoring with AWS VPC Flow Logs and Amazon Athena.

In this article you'll learn how to set up a monitored network environment in your VPC in a professional way.

You will learn how to use and integrate AWS VPC Flow Logs, Amazon Athena, Amazon CloudWatch, and S3 to help analyze network traffic and get notified of threats.

We've already built a highly available environment for this User API earlier, in the Set up a High Available and Fault-Tolerant environment for the API section; now we'll reproduce that environment as infrastructure-as-code using CloudFormation.

That is another huge topic, so I'll write some sort of how-to article about it later. For now, I'll move through the key points about CloudFormation.

Forget about the CloudFormation Designer; it's not that helpful even for beginners. A better option is working with a good tooling setup instead.

I'm a big fan of the IntelliJ IDEA family and don't like VSCode that much, but its extension ecosystem and popularity can be awesome sometimes, and that is the case for building CloudFormation templates.

AWS created a linter which validates issues in our CloudFormation YAML/JSON files in real time. This way you can catch errors in your template while you're writing it. You can also use it as a pre-commit hook: AWS cfn-lint

First, install it with pip, `pip install cfn-lint`, then install the VSCode extension.

There are many options for code completion in CloudFormation templates and, although it is not so popular, I really liked CloudFormation YAML Snippets for VS Code.

Write down every resource that you need to add to your Stack before building the template and build it in baby steps - That's it!

Deploy your CloudFormation template at the end of each step.

I've built this template in 6 steps; take a look at each of them for a better understanding.

Extra step to add all the mappings needed, parameter descriptions, typo fixes, and so on.

Stack Policies should be part of your day-to-day work with CloudFormation, protecting your stacks against unintentional resource updates/deletions and unauthorized template updates. Plus, don't forget the principle of least privilege.

To know more about it take a look at Prevent updates to stack resources

```json
{
  "Statement" : [
    {
      "Effect" : "Allow",
      "Action" : "Update:*",
      "Principal": "*",
      "Resource" : "*"
    },
    {
      "Effect" : "Deny",
      "Action" : "Update:*",
      "Principal": "*",
      "Resource" : "LogicalResourceId/WebServerRole"
    }
  ]
}
```

It's a sample of how I'm handling stack resource update protection in our scenario.

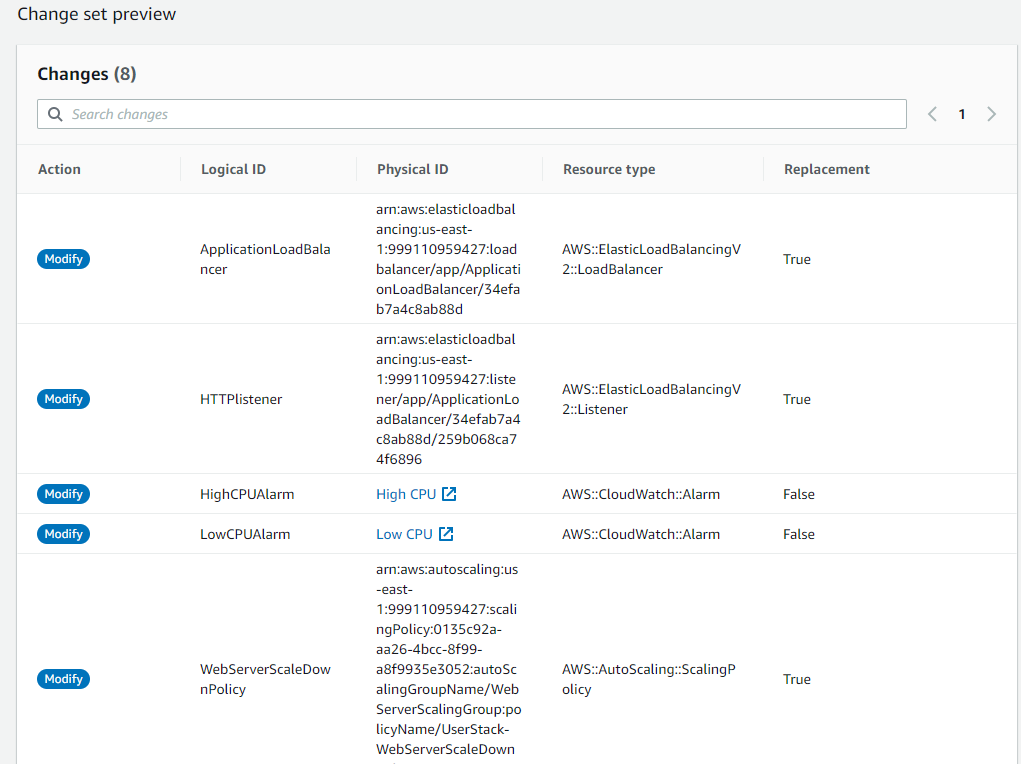

We must be aware of the Update Behaviors of our stack resources to understand the downtime of our application.

If you have some experience with CloudFormation you should be familiar with the AWS resource and property types reference; if not, bookmark it, I use it for every new template that I build. For every resource property you'll notice an Update requires field that indicates how an update to that property may affect your end users in terms of application downtime; the values can be Replacement, Some Interruption, and No Interruption.

Still talking about application downtime, another great ally is the Change Sets feature. Whenever you update your Stack you can review what is being updated and whether a Replacement of a resource is required.

CloudFormation is infrastructure as code and like in a programming language we need to leverage code reuse.

There is no problem with the CloudFormation template that we built for this project. However, taking a deeper look at it, you'll notice there are many concerns mixed together: Networking (VPC, Subnets), Security (Security Groups), and Elasticity and High Availability (ALB, ASG), and all of it was done by one single person. In a small-to-medium company you'll probably have a Network team, an Architecture team, a Security specialist, and the Product teams, and that separation of responsibilities can be reflected in CloudFormation through Cross-stack references. Cross-stack references let you use a layered or service-oriented architecture. Instead of including all resources in a single stack, you create related AWS resources in separate stacks; then you can refer to required resource outputs from other stacks. By restricting cross-stack references to outputs, you control the parts of a stack that are referenced by other stacks.

In our scenario we could have a network stack, a stack for Elasticity with ASG + ALB included, and another for the Node.js Application Server itself. This way each team remains focused on its specialties and goals.

To know more about CloudFormation Cross-stack references take a look at Walkthrough: Refer to resource outputs in another AWS CloudFormation stack

There are blocks of code in this template that you'd repeat often in day-to-day work, like the WebServerTargetGroup and WebServerScalingGroup, always using them together and changing only other settings like scaling policies. You should group this kind of code in Nested Stacks and reference them from other stacks, making code reuse easy.

Instead of coding here, it sounds like a better idea to have a dedicated repository to demonstrate both the cross-stack and nested stack features.

Whenever a resource is changed directly rather than in the CloudFormation template you've built, e.g. an Ingress Rule in a Security Group, we have a Drift scenario. A Drift occurs when a CloudFormation stack has changed from its original configuration and no longer matches the template which built it.

A Drift is something to be avoided: just think about Disaster Recovery scenarios, when we need to recreate our stack in another region. If there is drift, we can't faithfully reproduce the current state of our stack's resources.

Drift Detection can be run very quickly to determine whether drift has occurred, and steps can then be taken to correct the issue. You can run it directly from the Console. We can also leverage AWS Config for monitoring and notification whenever drift happens in your infrastructure.

A good resource about Drift Detection is AWS - CloudFormation Drift Detection

You can create a stack from its template and make some changes to the Security Group of the Application Server to play around with Drift detection and remediation.

There is so much to say about CloudFormation, even just about its advanced and lesser-known features, that I could never cover it all here. I'll point out some of those topics and you may google them.

- Custom Resources

- Continuous Delivery with CloudFormation

- Stack Sets

- CloudFormation Best Practices

- Serverless Application Model

But still, I've made a deep dive into CloudFormation here, focused on everything you need to know to work confidently with CloudFormation.