Shaopeng Guo, Yujie Wang, Quanquan Li, and Junjie Yan.

Our paper is available on arXiv.

This repository was implemented by Zeren Chen during his internship at SenseTime Research.

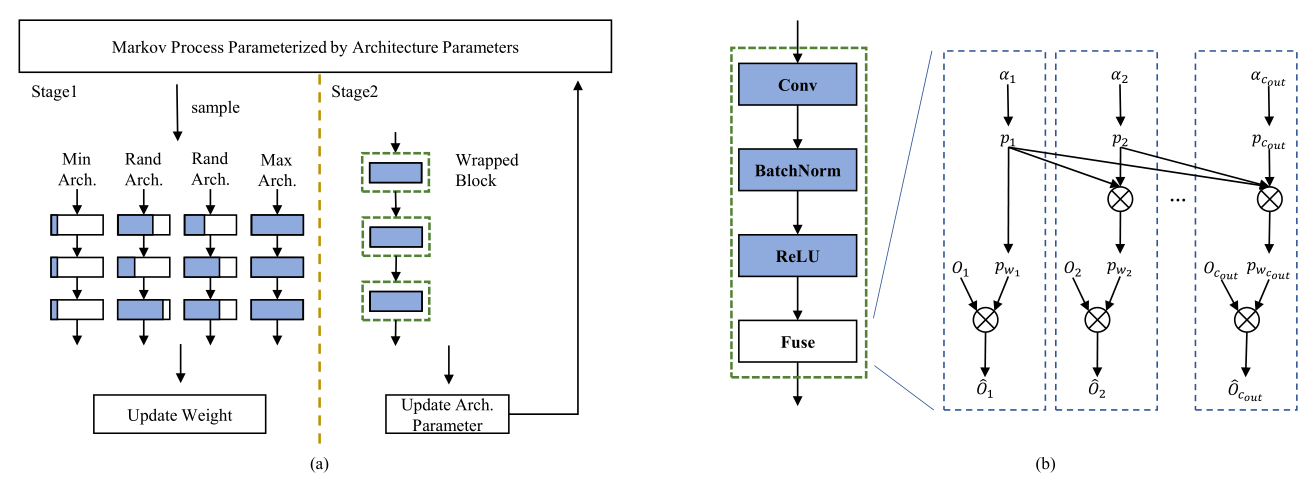

We propose a differentiable channel pruning method named Differentiable Markov Channel Pruning (DMCP). Channel pruning is modeled as a Markov process, which eliminates the duplicated solutions that arise from straightforward modeling (e.g. independent Bernoulli variables). See our paper for more details.
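The following minimal sketch illustrates why a Markov formulation removes duplicated solutions. It assumes that channels in a layer are retained in order, so that channel k can be kept only if channel k-1 is kept. The names `marginal_keep_probs`, `expected_channels` and `alphas`, as well as the sigmoid parameterization of the transition probabilities, are hypothetical choices for illustration and are not taken from this repository.

```python
import math


def sigmoid(x):
    """Map an unconstrained parameter to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def marginal_keep_probs(alphas):
    """Marginal probability of retaining each channel.

    alphas[k] parameterizes the (assumed) transition probability
    P(keep channel k | channel k-1 is kept). Because a channel can only
    be kept if all earlier channels are kept, its marginal probability
    is the running product of the transition probabilities.
    """
    probs = []
    running = 1.0
    for a in alphas:
        running *= sigmoid(a)
        probs.append(running)
    return probs


def expected_channels(alphas):
    """Expected layer width: the sum of the marginal keep probabilities."""
    return sum(marginal_keep_probs(alphas))


# Hypothetical parameters for a layer with four channels.
alphas = [4.0, 2.0, 0.0, -2.0]
marginals = marginal_keep_probs(alphas)
print(marginals)
print(expected_channels(alphas))
```

With independent Bernoulli variables, keeping channels {1, 3} and keeping channels {1, 2} would be two distinct solutions with the same width. In the chain above, a width of 2 always means the first two channels, so each width corresponds to exactly one solution.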

The code is tested with Python 3.6, PyTorch 1.1 and CUDA 9.0. Other requirements can be installed via:

    pip install -r requirements.txt

The general form of the command line is:

    python main.py --mode <M> --data <D> --config <C> --flops <F> [--chcfg <H>]

-M, --mode: running mode, one of train | eval | calc_flops | calc_params | sample (default: eval).

-D, --data: path to the ImageNet dataset folder (required).

-C, --config: config file (required).

-F, --flops: target FLOPs (required).

--chcfg: channel config file, used when retraining a pruned model from scratch.

One example to train mbv2-300m:

    # run dmcp process
    # result in `results/DMCP_MobileNetV2_43_MMDDHH/`
    python main.py --mode train --data path-to-data --config config/mbv2/dmcp.yaml --flops 43

    # use above pruned channel config to retrain the model from scratch
    # result in `results/Adaptive_MobileNetV2_43_MMDDHH`
    python main.py --mode train --data path-to-data --config config/mbv2/retrain.yaml \
        --flops 43 --chcfg ./results/DMCP_MobileNetV2_43_MMDDHH/model_sample/expected_ch

We provide pretrained models, including:

- dmcp-stage1 checkpoint (e.g. stage1/res18-stage1.pth)
- dmcp-stage2 checkpoint (pruned model after the dmcp process, e.g. res18-1.04g/checkpoints/pruned.pth)
- retrained models (e.g. res18-1.04g/checkpoints/retrain.pth)

You can download them from the releases and change the recovery setting in the config file accordingly.

Note: The original implementation is based on an internal version of synchronized batch normalization. This release uses torch.nn.BatchNorm2d instead, so the accuracy may differ somewhat from the results reported in the paper.
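As a toy illustration of why the two normalization variants can give different results in multi-device training, the sketch below compares batch statistics computed per device with statistics computed over the whole batch, which is what a synchronized variant uses. The activation values and the two-device split are made up for illustration; this is not code from the repository.

```python
from statistics import mean, pvariance

# Toy activations of one channel, split across two hypothetical devices.
shards = [[0.0, 1.0, 2.0, 3.0], [10.0, 11.0, 12.0, 13.0]]

# Non-synchronized batch norm: each device normalizes with its own statistics.
local_stats = [(mean(s), pvariance(s)) for s in shards]

# Synchronized batch norm: statistics are computed over the full global batch.
full_batch = [x for s in shards for x in s]
global_stats = (mean(full_batch), pvariance(full_batch))

print("per-device (mean, var):", local_stats)
print("global (mean, var):", global_stats)
```

When the per-device batches are small or differ in distribution, the local statistics deviate from the global ones, so the normalized activations, and therefore training, are not identical between the two variants.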

| model | target FLOPs | top-1 (%) |
|---|---|---|
| mobilenet-v2 | 43M | 59.0 |
| mobilenet-v2 | 59M | 62.4 |
| mobilenet-v2 | 87M | 66.2 |
| mobilenet-v2 | 97M | 67.0 |
| mobilenet-v2 | 211M | 71.6 |
| mobilenet-v2 | 300M | 73.5 |
| resnet-18 | 1.04G | 69.0 |
| resnet-50 | 279M | 68.3 |
| resnet-50 | 1.1G | 74.1 |
| resnet-50 | 2.2G | 76.5 |
| resnet-50 | 2.8G | 76.6 |

If you find DMCP useful in your research, please consider citing:

    @inproceedings{guo2020dmcp,
      title = {DMCP: Differentiable Markov Channel Pruning for Neural Networks},
      author = {Guo, Shaopeng and Wang, Yujie and Li, Quanquan and Yan, Junjie},
      booktitle = {IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},
      year = {2020},
    }

This software is released under the CC-BY-4.0 license.

We thank Yujing Shen for his exploration at the beginning of this project.