Rotate-and-Render: Unsupervised Photorealistic Face Rotation from Single-View Images (CVPR 2020)

Hang Zhou*, Jihao Liu*, Ziwei Liu, Yu Liu, and Xiaogang Wang.

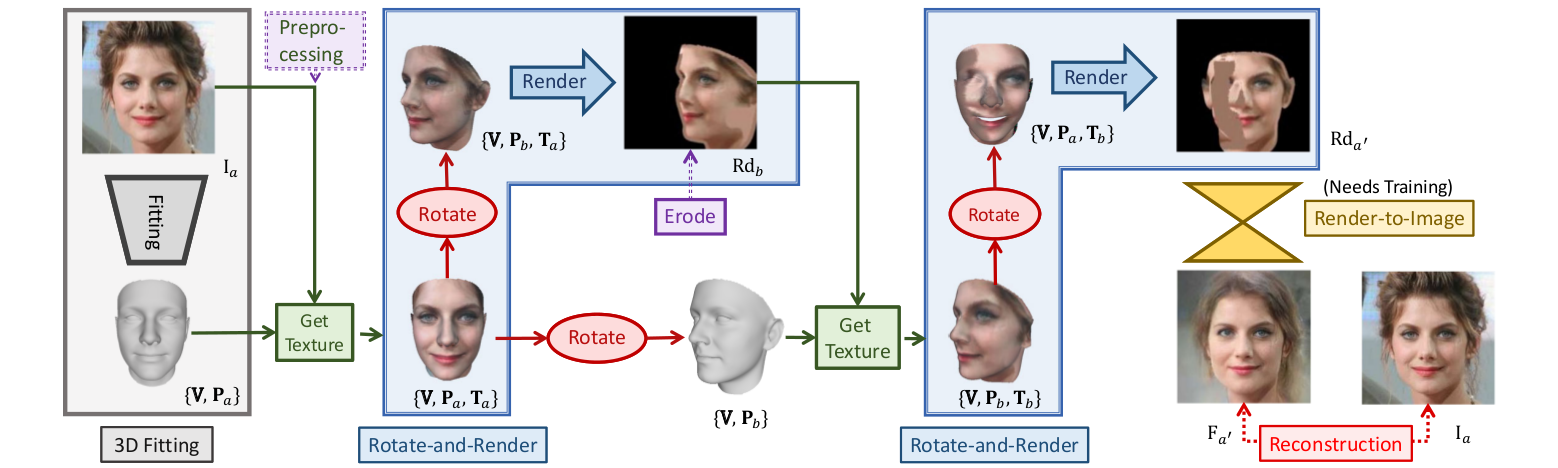

We propose a novel unsupervised framework that can synthesize photorealistic rotated faces using only single-view image collections in the wild. Our key insight is that rotating faces in 3D space back and forth, and re-rendering them to the 2D plane, can serve as strong self-supervision.
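The geometric core of the "back and forth" idea can be sketched in a few lines. This is a minimal NumPy illustration, not code from this repo. It assumes a face is an N×3 vertex array, that the rotation is about the vertical axis (yaw), and that projection is orthographic. The helper names `yaw_rotation`, `project_orthographic` and `rotate_and_back` are hypothetical. It only checks geometry: after rotating by θ and back by −θ, the projected vertices land exactly where they started, which is why the original image can act as the target for the re-rendered one. Texture sampling, occlusion and the neural renderer are not modeled.

```python
import numpy as np


def yaw_rotation(degrees):
    """3x3 rotation matrix about the vertical (y) axis (assumed yaw convention)."""
    t = np.deg2rad(degrees)
    c, s = np.cos(t), np.sin(t)
    return np.array([[c, 0.0, s],
                     [0.0, 1.0, 0.0],
                     [-s, 0.0, c]])


def project_orthographic(vertices):
    """Drop the depth (z) coordinate: orthographic projection to the 2D plane."""
    return vertices[:, :2]


def rotate_and_back(vertices, degrees):
    """Rotate row-vector vertices to a new pose, then back, projecting both to 2D."""
    rotated = vertices @ yaw_rotation(degrees).T    # novel pose
    restored = rotated @ yaw_rotation(-degrees).T   # back to the input pose
    return project_orthographic(rotated), project_orthographic(restored)


# Toy "face": random 3D points standing in for a fitted mesh (hypothetical data).
rng = np.random.default_rng(0)
verts = rng.normal(size=(100, 3))
novel_2d, cycle_2d = rotate_and_back(verts, 45.0)

# The back-rotated projection coincides with the original view.
assert np.allclose(cycle_2d, project_orthographic(verts))
```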

Requirements

- Python 3.6 is used. Basic requirements are listed in `requirements.txt`.

pip install -r requirements.txt

- Install the Neural_Renderer following its instructions.
- Download the checkpoint and BFM model from ckpt_and_bfm.zip, put it in `3ddfa`, and unzip it. Our 3D models are borrowed from 3DDFA.

DEMO

Rotate faces with the provided examples:

- Download the checkpoint and put it in `./checkpoints/rs_model`.
- Run a simple Rotate-and-Render demo; the inputs are stored at `3ddfa/example`.
  - Modify `experiments/v100_test.sh`: the `--poses` are the desired rotation angles in degrees (range -90 to 90), with 0 giving a frontal face.
  - Run `bash experiments/v100_test.sh`; results will be saved at `./results/`.
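When trying several views at once, a small helper can generate and check angle values before they are pasted into `experiments/v100_test.sh`. This is a hypothetical sketch: `pose_sweep` and `format_poses` are not part of the repo, and it assumes `--poses` accepts space-separated integer degrees. Check the script itself for the exact syntax.

```python
from typing import List


def pose_sweep(step: int = 15) -> List[int]:
    """Angles from -90 to 90 degrees (inclusive) in fixed steps; 0 is the frontal face."""
    if step <= 0 or 90 % step != 0:
        raise ValueError("step must be a positive divisor of 90")
    return list(range(-90, 91, step))


def format_poses(poses: List[int]) -> str:
    """Validate angles against the supported range and join them with spaces."""
    for p in poses:
        if not -90 <= p <= 90:
            raise ValueError("pose {} is outside the supported range [-90, 90]".format(p))
    return " ".join(str(p) for p in poses)


print(format_poses(pose_sweep(30)))  # -90 -60 -30 0 30 60 90
```

Validating early means an out-of-range angle fails in Python rather than partway through a test run.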

DEVELOP

Prepare your own dataset for testing and training.
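The preprocessing step passes an image list (`--img_list`) and an image folder (`--img_prefix`) to `3ddfa/inference.py`. For a new dataset, such a list can be generated with a short script. This is a sketch under an assumption: it writes one image path per line, relative to the image folder, and `write_file_list` is a hypothetical helper. Compare its output with the provided `example/file_list.txt` before relying on it.

```python
from pathlib import Path
from typing import List

# Image extensions to include (case-insensitive).
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}


def write_file_list(img_prefix: str, list_path: str) -> List[str]:
    """Write every image under img_prefix to list_path, one relative path per line."""
    root = Path(img_prefix)
    names = sorted(
        p.relative_to(root).as_posix()
        for p in root.rglob("*")
        if p.is_file() and p.suffix.lower() in IMAGE_SUFFIXES
    )
    Path(list_path).write_text("\n".join(names) + ("\n" if names else ""))
    return names
```

For example, `write_file_list("my_faces/Images", "my_faces/file_list.txt")` (hypothetical paths) would produce a list to pass as `--img_list`, with `my_faces/Images` as `--img_prefix`.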

Preprocessing

- Save the 3D params of human faces to `3ddfa/results` using 3DDFA:

cd 3ddfa
python inference.py --img_list example/file_list.txt --img_prefix example/Images --save_dir results
cd ..

Data Preparation

Modify the `dataset_info()` class inside `data/__init__.py`, then prepare your dataset following the pattern of the existing example.

You can add information about a new dataset to each instance variable of the class.

| Attribute | Description |
| --- | --- |
| `prefix` | The absolute path to the dataset. |
| `file_list` | The list of all images. The absolute paths in it may be incorrect, since the dataset location is defined by `prefix`. |
| `land_mark_list` | The list that stores the landmarks of all images. |
| `params_dir` | The path that stores all the 3D params produced during preprocessing. |
| `dataset_names` | The dictionary that maps dataset NAMEs to their information. It is used in the parsers as `--dataset NAME`. |
| `folder_level` | The number of folder levels from `prefix` to the images (`.jpg` files). For example, `folder_level` is 2 if an image is stored as `prefix/label/image.jpg`. |
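The attributes above can be pictured as parallel lists, one entry per dataset, plus a NAME lookup. The sketch below is a hypothetical stand-in (`DatasetInfoSketch`, `folder_level_of` and all paths are illustrative), not the class in `data/__init__.py`. It assumes `dataset_names` maps each NAME to that dataset's position in the lists; check the real class before copying the pattern.

```python
from pathlib import PurePosixPath


def folder_level_of(image_path, prefix):
    """Count path components from prefix to the image: prefix/label/image.jpg -> 2."""
    return len(PurePosixPath(image_path).relative_to(prefix).parts)


class DatasetInfoSketch:
    """Hypothetical stand-in for dataset_info: one list entry per dataset."""

    def __init__(self):
        self.prefix = []
        self.file_list = []
        self.land_mark_list = []
        self.params_dir = []
        self.folder_level = []
        # Assumption: NAME maps to the position of that dataset in the lists above.
        self.dataset_names = {}

    def add(self, name, prefix, file_list, land_mark_list, params_dir, folder_level):
        """Register a new dataset by appending one value to every attribute."""
        if name in self.dataset_names:
            raise ValueError("dataset {!r} is already registered".format(name))
        self.dataset_names[name] = len(self.prefix)
        self.prefix.append(prefix)
        self.file_list.append(file_list)
        self.land_mark_list.append(land_mark_list)
        self.params_dir.append(params_dir)
        self.folder_level.append(folder_level)

    def get(self, name):
        """Return everything stored for the NAME given as --dataset NAME."""
        i = self.dataset_names[name]
        return {
            "prefix": self.prefix[i],
            "file_list": self.file_list[i],
            "land_mark_list": self.land_mark_list[i],
            "params_dir": self.params_dir[i],
            "folder_level": self.folder_level[i],
        }


info = DatasetInfoSketch()
info.add(
    "mydata",                                   # hypothetical dataset NAME
    prefix="/data/mydata",                      # hypothetical paths below
    file_list="/data/mydata/file_list.txt",
    land_mark_list="/data/mydata/landmarks.txt",
    params_dir="3ddfa/results",
    folder_level=folder_level_of("/data/mydata/label/image.jpg", "/data/mydata"),
)
print(info.get("mydata")["folder_level"])  # 2
```

Keeping every attribute as a list of the same length is what makes a single index per NAME sufficient.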

Training and Inference

- Modify `experiments/train.sh` and use `bash experiments/train.sh` for training on new datasets.
- Visualization is supported during training with TensorBoard.
- Please see the DEMO section for inference.

Details of the Models

We provide two models with trainers in this repo, namely `rotate` and `rotatespade`. The `rotatespade` model is an upgraded version that differs from the one described in our paper: it adds a conditional batch normalization module driven by landmarks predicted from the 3D face. Our checkpoint is trained with this model, which is briefly described in our supplementary materials.
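Conditional batch normalization in general can be sketched as below. This is a generic NumPy illustration, not the module used by `rotatespade`. It assumes the condition is one flattened landmark vector per sample (68 (x, y) points is an illustrative choice) and predicts per-channel scale and shift with a linear map; the real layer may condition differently, for example with spatially varying, SPADE-style modulation. It also uses batch statistics only (training mode), with no running averages.

```python
import numpy as np


def conditional_batch_norm(x, cond, w_gamma, b_gamma, w_beta, b_beta, eps=1e-5):
    """Batch norm whose per-channel scale and shift are predicted from a condition.

    x:       feature maps, shape (N, C, H, W)
    cond:    one condition vector per sample, shape (N, D), e.g. flattened landmarks
    w_gamma, w_beta: projection weights, shape (D, C) (learned in a real model)
    b_gamma, b_beta: biases, shape (C,)
    """
    # Plain batch-norm statistics per channel, over batch and spatial dimensions.
    mean = x.mean(axis=(0, 2, 3), keepdims=True)
    var = x.var(axis=(0, 2, 3), keepdims=True)
    x_hat = (x - mean) / np.sqrt(var + eps)

    # Instead of fixed affine parameters, predict them from the condition.
    gamma = cond @ w_gamma + b_gamma  # (N, C)
    beta = cond @ w_beta + b_beta     # (N, C)

    # (1 + gamma) makes the layer equal to plain (non-affine) batch norm when gamma and beta are 0.
    return x_hat * (1.0 + gamma[:, :, None, None]) + beta[:, :, None, None]


rng = np.random.default_rng(0)
features = rng.normal(2.0, 3.0, size=(4, 8, 16, 16))
landmarks = rng.normal(size=(4, 68 * 2))  # hypothetical: 68 (x, y) points per face
D, C = landmarks.shape[1], features.shape[1]
out = conditional_batch_norm(
    features, landmarks,
    w_gamma=rng.normal(scale=0.01, size=(D, C)), b_gamma=np.zeros(C),
    w_beta=rng.normal(scale=0.01, size=(D, C)), b_beta=np.zeros(C),
)
print(out.shape)  # (4, 8, 16, 16)
```

Because the scale and shift differ per sample, two faces with different landmarks receive different modulation of the same normalized features.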

License and Citation

This software is licensed under CC-BY-4.0.

@inproceedings{zhou2020rotate,
  title     = {Rotate-and-Render: Unsupervised Photorealistic Face Rotation from Single-View Images},
  author    = {Zhou, Hang and Liu, Jihao and Liu, Ziwei and Liu, Yu and Wang, Xiaogang},
  booktitle = {IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},
  year      = {2020},
}