This repository implements Butterfly Net (for vertebra localization) in PyTorch 1.0 or higher.

- Error Correction: The original paper (Sekuboyina A, et al.) contains some mistakes in its description of the Btrfly Net structure. After corresponding with the author several times, we corrected Figure 2 of the paper and built the correct structure in PyTorch.

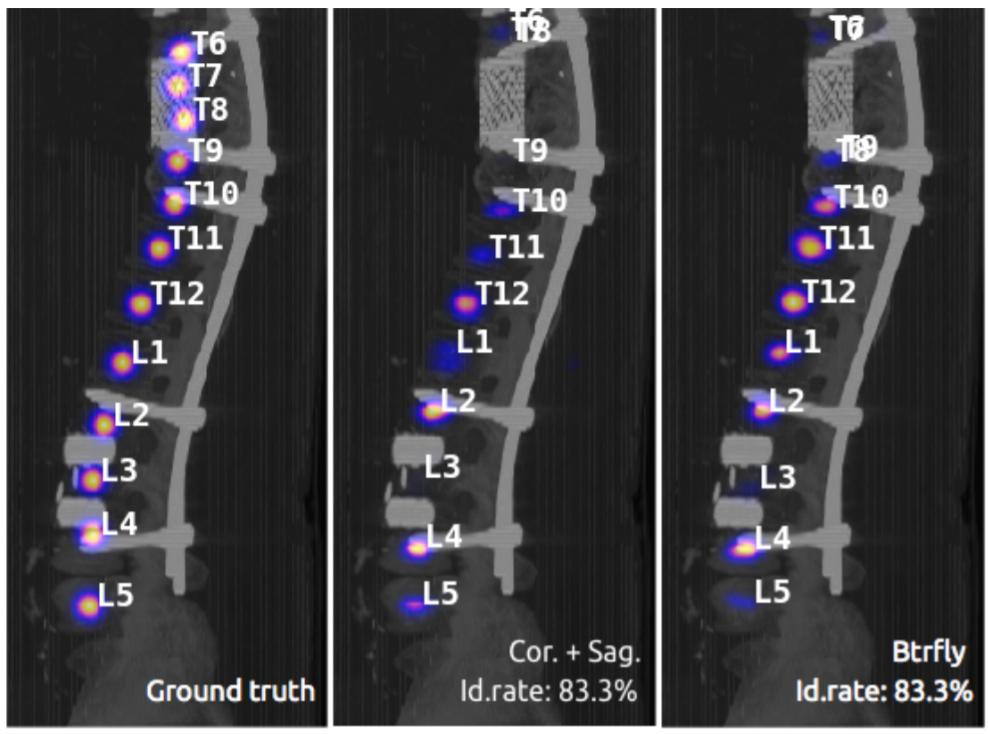

- Method Improvement: We propose a new position-inference method to improve the model's performance. During training, you can now see two kinds of identification rate (id rate) results: one using the paper's method and one using ours. Roughly speaking, instead of taking the direct outer product of the two 2D heat maps, we compute a weighted average of the positions inferred from each heat map to obtain the final vertebra positions. In our experiments, this improved the id rate by nearly 5%.
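The two inference strategies, and the id rate used to compare them, can be illustrated with a small NumPy sketch. It assumes (this is not stated above) that for each vertebra the sagittal heat map is indexed (X, Y) and the coronal heat map (X, Z), so both share the S->I axis X, following the centroid convention in the dataset notes. The weighted-average variant here, which averages the two X estimates weighted by their peak values, is a hypothetical reading of "weighted average", not necessarily the exact rule in this repository. The `id_rate` helper uses the common criterion (a prediction counts if it lies within 20 mm of its ground truth and is closer to it than to any other vertebra), which is also an assumption about how the id rate is computed here. All function names are illustrative.

```python
import math

import numpy as np


def outer_product_position(sag, cor):
    """Paper-style inference: argmax of the 3D map built from both views.

    sag has shape (X, Y) and cor has shape (X, Z); they share the X axis.
    """
    # volume[x, y, z] = sag[x, y] * cor[x, z]
    volume = sag[:, :, None] * cor[:, None, :]
    x, y, z = np.unravel_index(np.argmax(volume), volume.shape)
    return int(x), int(y), int(z)


def weighted_average_position(sag, cor):
    """Hypothetical variant: locate each 2D peak separately, then average X."""
    xs, y = np.unravel_index(np.argmax(sag), sag.shape)
    xc, z = np.unravel_index(np.argmax(cor), cor.shape)
    ws, wc = float(sag[xs, y]), float(cor[xc, z])
    if ws + wc == 0:
        raise ValueError("both heat maps are empty")
    # Each view votes for X, weighted by the strength of its own peak.
    x = (ws * xs + wc * xc) / (ws + wc)
    return float(x), int(y), int(z)


def id_rate(pred, truth, max_dist_mm=20.0):
    """Fraction of ground-truth vertebrae that are identified correctly.

    pred and truth map label -> (x, y, z) in mm. A hit needs the prediction
    within max_dist_mm of its own ground truth AND closer to it than to any
    other ground-truth centroid (assumed criterion).
    """
    hits = 0
    for label, gt in truth.items():
        if label not in pred:
            continue  # a missed vertebra counts as a failure
        p = pred[label]
        nearest = min(truth, key=lambda other: math.dist(p, truth[other]))
        if nearest == label and math.dist(p, gt) <= max_dist_mm:
            hits += 1
    return hits / len(truth)
```

When both views agree on X the two strategies return the same point; when they disagree, the outer product can only score positions where both maps are non-zero at the same X, whereas the weighted average always yields a compromise X between the two peaks.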

- Configs: We use YACS to manage the parameters, including device settings, model hyperparameters, and directory paths. See `configs/btrfly.yaml`; you can change them freely.

- Smooth and Enjoyable Training Procedure: We save the states of the model, optimizer, scheduler, and training iteration, so you can stop training and later resume exactly from the saved point without changing your training command.

- TensorboardX: We support tensorboardX, and the log directory is `outputs/tf_logs`. If you don't want to use it, just set the parameter `--use_tensorboard` to `0`, as described in the "Train Using Your Parameters" section.

- Evaluating during training: The model is evaluated every `eval_step` iterations so you can check whether its performance is improving.
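Exact resumption works because every stateful object is saved together with the iteration counter. The sketch below relies on the `state_dict()` / `load_state_dict()` pair that PyTorch modules, optimizers, and learning-rate schedulers all expose. `Stub` is a hypothetical stand-in so the example runs without PyTorch, and `pickle` replaces `torch.save` / `torch.load` for the same reason; `save_checkpoint`, `load_checkpoint`, and the checkpoint keys are illustrative, not this repository's own code.

```python
import os
import pickle


def save_checkpoint(path, model, optimizer, scheduler, iteration):
    """Bundle all training state into one file."""
    state = {
        "model": model.state_dict(),
        "optimizer": optimizer.state_dict(),
        "scheduler": scheduler.state_dict(),
        "iteration": iteration,
    }
    # Write to a temporary file first, then atomically replace the old
    # checkpoint, so a crash mid-write never leaves a truncated file.
    tmp = path + ".tmp"
    with open(tmp, "wb") as f:
        pickle.dump(state, f)
    os.replace(tmp, path)


def load_checkpoint(path, model, optimizer, scheduler):
    """Restore all states in place; return the iteration to resume from."""
    if not os.path.exists(path):
        return 0  # no checkpoint yet: start from scratch
    with open(path, "rb") as f:
        state = pickle.load(f)
    model.load_state_dict(state["model"])
    optimizer.load_state_dict(state["optimizer"])
    scheduler.load_state_dict(state["scheduler"])
    return state["iteration"]


class Stub:
    """Hypothetical stand-in exposing the state_dict protocol."""

    def __init__(self, value=0):
        self.value = value

    def state_dict(self):
        return {"value": self.value}

    def load_state_dict(self, state):
        self.value = state["value"]
```

Because `load_checkpoint` returns 0 when no file exists, the same training command works for both a fresh run and a resumed one.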

See the VerSe 2019 challenge for more information about the dataset.

The original data directory should have the following structure:

    OriginalPath
    |__ raw
    |   |_ 001.nii
    |   |_ 002.nii
    |   |_ ...
    |__ seg
    |   |_ 001_seg.nii
    |   |_ 002_seg.nii
    |   |_ ...
    |__ pos
        |_ 001_ctd.json
        |_ 002_ctd.json
        |_ ...
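A data loader has to pair each scan with its mask and centroid file by case ID. The following is a minimal sketch, assuming the exact naming pattern in the tree above (`<id>.nii`, `<id>_seg.nii`, `<id>_ctd.json`); `collect_samples` is a hypothetical helper, not part of the repository.

```python
from pathlib import Path


def collect_samples(root):
    """Pair raw/<id>.nii with seg/<id>_seg.nii and pos/<id>_ctd.json.

    Returns (case_id, image, mask, centroids) tuples sorted by case ID and
    raises FileNotFoundError if a scan is missing its mask or centroid file.
    """
    root = Path(root)
    samples = []
    for image in sorted((root / "raw").glob("*.nii")):
        case_id = image.stem  # "001.nii" -> "001"
        mask = root / "seg" / f"{case_id}_seg.nii"
        centroids = root / "pos" / f"{case_id}_ctd.json"
        for required in (mask, centroids):
            if not required.is_file():
                raise FileNotFoundError(f"case {case_id}: missing {required}")
        samples.append((case_id, image, mask, centroids))
    return samples
```

Failing early on a missing file surfaces an incomplete dataset before training starts rather than partway through an epoch.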

- The dataset has three files corresponding to one data sample, plus a PNG snapshot, structured as follows:
  - verse.nii.gz - Image
  - verse_seg.nii.gz - Segmentation mask
  - verse_ctd.json - Centroid annotations
  - verse_snapshot - A PNG overview of the annotations

- The images need NOT be in the same orientation, and their spacing need NOT be the same. However, an image and its corresponding mask are always in the same orientation.

- In both masks and centroid annotations, the label values 1-24 correspond to the vertebrae C1-L5. Some cases may also contain label 25, corresponding to L6.

- The centroid annotations are given with respect to a coordinate system fixed on an isotropic (1 mm) scan in (P, I, R) or (A, S, L) orientation, described as follows:
  - Origin at Superior (S) - Right (R) - Anterior (A)
  - 'X' corresponds to the S -> I direction
  - 'Y' corresponds to the A -> P direction
  - 'Z' corresponds to the R -> L direction
  - 'label' corresponds to the vertebral label
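The label convention fits in a few lines: 1-7 are C1-C7, 8-19 are T1-T12, 20-24 are L1-L5, and 25 is L6. The sketch also parses a centroid file, assuming (this JSON layout is an assumption, not stated above) that it is a list of objects with `label`, `X`, `Y`, and `Z` keys; entries without a `label` key are skipped. `vertebra_name` and `load_centroids` are hypothetical helpers.

```python
import json


def vertebra_name(label):
    """Map a label value to its vertebra name (25 -> L6)."""
    if 1 <= label <= 7:
        return f"C{label}"
    if 8 <= label <= 19:
        return f"T{label - 7}"
    if 20 <= label <= 25:
        return f"L{label - 19}"
    raise ValueError(f"unexpected vertebra label: {label}")


def load_centroids(text):
    """Parse centroid JSON text into {name: (X, Y, Z)} in mm.

    X runs S->I, Y runs A->P, Z runs R->L, with the origin at S-R-A.
    """
    centroids = {}
    for entry in json.loads(text):
        if "label" not in entry:
            continue  # skip non-centroid metadata entries, if any
        name = vertebra_name(int(entry["label"]))
        centroids[name] = (float(entry["X"]), float(entry["Y"]), float(entry["Z"]))
    return centroids
```

For a file on disk, pass its contents, e.g. `load_centroids(Path(p).read_text())`.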

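To train with the default parameters, run:

    python train.py

To train using your own parameters, pass them on the command line. For example, you can change the `save_step` by running:

    python train.py --save_step 10

See `train.py` for more adjustable parameters.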
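Command-line overrides of this kind are typically implemented with `argparse`. A minimal sketch for the flags named in this README (`--save_step`, `--eval_step`, `--use_tensorboard`) follows; the default values are hypothetical placeholders, and the real `train.py` may define these flags differently or merge them into the YACS config. `scheduled_iterations` is a hypothetical helper showing when a periodic action such as saving fires.

```python
import argparse

# Hypothetical defaults; the real ones come from train.py / configs/btrfly.yaml.
DEFAULTS = {"save_step": 1000, "eval_step": 500, "use_tensorboard": 1}


def parse_args(argv=None):
    """Parse training flags; anything not given keeps its default."""
    parser = argparse.ArgumentParser(description="Train Btrfly Net (sketch)")
    parser.add_argument("--save_step", type=int, default=DEFAULTS["save_step"],
                        help="save a checkpoint every N iterations")
    parser.add_argument("--eval_step", type=int, default=DEFAULTS["eval_step"],
                        help="evaluate the model every N iterations")
    parser.add_argument("--use_tensorboard", type=int, choices=(0, 1),
                        default=DEFAULTS["use_tensorboard"],
                        help="1 to write tensorboardX logs, 0 to disable")
    return parser.parse_args(argv)


def scheduled_iterations(max_iter, step):
    """Iterations (1-based) at which a periodic action fires."""
    return [i for i in range(1, max_iter + 1) if i % step == 0]
```

With `--save_step 10` and 30 training iterations, checkpoints would be written at iterations 10, 20, and 30.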

The prediction results are saved as a `.pth` file in the `pred_list` directory. Set the parameter `is_test` in `test.py` to `0` to run the trained model on the validation set, or to `1` to run it on the test set.