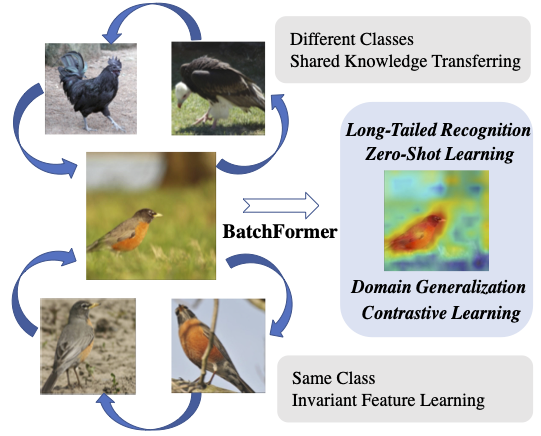

This is the official PyTorch implementation of BatchFormer for Long-Tailed Recognition, Domain Generalization, Compositional Zero-Shot Learning, and Contrastive Learning.

Sample Relationship Exploration for Robust Representation Learning

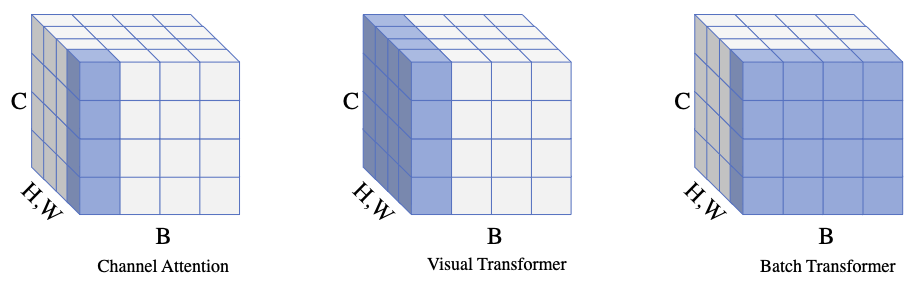

Please also refer to BatchFormerV2, in which we introduce a BatchFormerV2 module for vision Transformers.

| Method | All(R10) | Many(R10) | Med(R10) | Few(R10) | All(R50) | Many(R50) | Med(R50) | Few(R50) |
|---|---|---|---|---|---|---|---|---|
| RIDE(3 experts)[1] | 44.7 | 57.0 | 40.3 | 25.5 | 53.6 | 64.9 | 50.4 | 33.2 |
| +BatchFormer | 45.7 | 56.3 | 42.1 | 28.3 | 54.1 | 64.3 | 51.4 | 35.1 |
| PaCo[2] | - | - | - | - | 57.0 | 64.8 | 55.9 | 39.1 |
| +BatchFormer | - | - | - | - | 57.4 | 62.7 | 56.7 | 42.1 |

Here, we report results for one-stage RIDE (ResNeXt-50).

| Method | All | Many | Medium | Few |
|---|---|---|---|---|
| RIDE(3 experts)* | 55.9 | 67.3 | 52.8 | 34.6 |
| +BatchFormer | 56.5 | 66.6 | 54.2 | 36.0 |

| Method | All | Many | Medium | Few |
|---|---|---|---|---|
| RIDE(3 experts) | 72.5 | 68.1 | 72.7 | 73.2 |
| +BatchFormer | 74.1 | 65.5 | 74.5 | 75.8 |

| Method | AP | AP50 | AP75 | APS | APM | APL | Model |
|---|---|---|---|---|---|---|---|
| DETR | 34.8 | 55.6 | 35.8 | 14.0 | 37.2 | 54.6 | |
| +BatchFormerV2 | 36.9 | 57.9 | 38.5 | 15.6 | 40.0 | 55.9 | download |
| Conditional DETR | 40.9 | 61.8 | 43.3 | 20.8 | 44.6 | 59.2 | |
| +BatchFormerV2 | 42.3 | 63.2 | 45.1 | 21.9 | 46.0 | 60.7 | download |
| Deformable DETR | 43.8 | 62.6 | 47.7 | 26.4 | 47.1 | 58.0 | |
| +BatchFormerV2 | 45.5 | 64.3 | 49.8 | 28.3 | 48.6 | 59.4 | download |

The backbone is ResNet-50, and all models are trained for 50 epochs.

| Method | PQ | SQ | RQ | PQ(th) | SQ(th) | RQ(th) | PQ(st) | SQ(st) | RQ(st) | AP |
|---|---|---|---|---|---|---|---|---|---|---|
| DETR | 43.4 | 79.3 | 53.8 | 48.2 | 79.8 | 59.5 | 36.3 | 78.5 | 45.3 | 31.1 |
| +BatchFormerV2 | 45.1 | 80.3 | 55.3 | 50.5 | 81.1 | 61.5 | 37.1 | 79.1 | 46.0 | 33.4 |

| Method | Epochs | Top-1 | Pretrained |
|---|---|---|---|
| MoCo-v2[3] | 200 | 67.5 | |
| +BatchFormer | 200 | 68.4 | download |
| MoCo-v3[4] | 100 | 68.9 | |
| +BatchFormer | 100 | 70.1 | download |

Here, we provide the pretrained MoCo-V3 model corresponding to this strategy.

| Method | PACS | VLCS | OfficeHome | Terra |
|---|---|---|---|---|
| SWAD[5] | 82.9 | 76.3 | 62.1 | 42.1 |
| +BatchFormer | 83.7 | 76.9 | 64.3 | 44.8 |

| Method | MIT-States(AUC) | MIT-States(HM) | UT-Zap50K(AUC) | UT-Zap50K(HM) | C-GQA(AUC) | C-GQA(HM) |
|---|---|---|---|---|---|---|
| CGE*[6] | 6.3 | 20.0 | 31.5 | 46.5 | 3.7 | 14.9 |
| +BatchFormer | 6.7 | 20.6 | 34.6 | 49.0 | 3.8 | 15.5 |

Experiments on CUB.

| Method | Unseen | Seen | Harmonic mean |
|---|---|---|---|
| CUB[7]* | 67.5 | 65.1 | 66.3 |
| +BatchFormer | 68.2 | 65.8 | 67.0 |
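The harmonic-mean column can be checked from the other two columns. The short sketch below assumes the column is the standard harmonic mean of unseen and seen accuracy, `2 * U * S / (U + S)`; the helper name `harmonic_mean` is introduced only for illustration and is not part of the repository.

```python
def harmonic_mean(unseen, seen):
    """Harmonic mean of unseen and seen accuracy (assumed definition)."""
    return 2 * unseen * seen / (unseen + seen)


# (unseen, seen) accuracies copied from the CUB table above.
rows = {
    "CUB": (67.5, 65.1),
    "+BatchFormer": (68.2, 65.8),
}

for name, (unseen, seen) in rows.items():
    print(f"{name}: {harmonic_mean(unseen, seen):.1f}")
# prints:
# CUB: 66.3
# +BatchFormer: 67.0
```

Under that assumption, both rows reproduce the reported values after rounding to one decimal place.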

| Method | Top-1 | Top-5 |
|---|---|---|
| DeiT-T | 72.2 | 91.1 |
| +BatchFormerV2 | 72.7 | 91.5 |
| DeiT-S | 79.8 | 95.0 |
| +BatchFormerV2 | 80.4 | 95.2 |
| DeiT-B | 81.7 | 95.5 |
| +BatchFormerV2 | 82.2 | 95.8 |

1. Long-tailed recognition by routing diverse distribution-aware experts. In ICLR, 2021.
2. Parametric contrastive learning. In ICCV, 2021.
3. Improved baselines with momentum contrastive learning.
4. An empirical study of training self-supervised vision transformers. In CVPR, 2021.
5. Domain generalization by seeking flat minima. In NeurIPS, 2021.
6. Learning graph embeddings for compositional zero-shot learning. In CVPR, 2021.
7. Contrastive learning based hybrid networks for long-tailed image classification. In CVPR, 2021.

The proposed BatchFormer can be implemented in a few lines:

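```python
import torch


def BatchFormer(x, y, encoder, is_training):
    # x: input features with the shape [N, C]
    # y: labels for the N samples
    # encoder: TransformerEncoderLayer(C,4,C,0.5)
    if not is_training:
        # At inference time the features pass through unchanged.
        return x, y
    pre_x = x
    # Treat the batch as a sequence of length N so attention runs across samples.
    x = encoder(x.unsqueeze(1)).squeeze(1)
    # Keep both the original and the encoded features, and duplicate the labels to match.
    x = torch.cat([pre_x, x], dim=0)
    y = torch.cat([y, y], dim=0)
    return x, y
```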
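The following is a minimal usage sketch of the function above, not the repository's training code. The tensor sizes, the random features standing in for backbone outputs, and the linear `classifier` head are all assumptions made for illustration. The encoder is built with the arguments given in the comment (`d_model=C`, 4 heads, feed-forward width `C`, dropout 0.5).

```python
import torch
import torch.nn as nn


def BatchFormer(x, y, encoder, is_training):
    # Repeated from the snippet above so this example runs on its own.
    if not is_training:
        return x, y
    pre_x = x
    x = encoder(x.unsqueeze(1)).squeeze(1)
    x = torch.cat([pre_x, x], dim=0)
    y = torch.cat([y, y], dim=0)
    return x, y


torch.manual_seed(0)
N, C, num_classes = 8, 64, 10  # hypothetical batch size, feature width, class count

encoder = nn.TransformerEncoderLayer(C, 4, C, 0.5)
classifier = nn.Linear(C, num_classes)  # hypothetical classifier head

features = torch.randn(N, C)  # stand-in for backbone features
labels = torch.randint(0, num_classes, (N,))

# Training: the batch doubles to 2N (original features followed by encoded ones),
# and the same classifier is applied to both halves.
train_x, train_y = BatchFormer(features, labels, encoder, is_training=True)
loss = nn.functional.cross_entropy(classifier(train_x), train_y)
loss.backward()
print(train_x.shape, train_y.shape)

# Inference: features and labels are returned unchanged, so the encoder is not used.
test_x, test_y = BatchFormer(features, labels, encoder, is_training=False)
print(test_x.shape, test_y.shape)
```

Because the first half of the training batch is the untouched input, the classifier in this sketch is also trained on features that never passed through the encoder, which is the form it sees when `is_training` is false.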

If you find this repository helpful, please consider citing:

```bibtex
@inproceedings{hou2022batch,
  title={BatchFormer: Learning to Explore Sample Relationships for Robust Representation Learning},
  author={Hou, Zhi and Yu, Baosheng and Tao, Dacheng},
  booktitle={CVPR},
  year={2022}
}

@article{hou2022batchformerv2,
  title={BatchFormerV2: Exploring Sample Relationships for Dense Representation Learning},
  author={Hou, Zhi and Yu, Baosheng and Wang, Chaoyue and Zhan, Yibing and Tao, Dacheng},
  journal={arXiv preprint arXiv:2204.01254},
  year={2022}
}
```

Feel free to contact "zhou9878 at uni dot sydney dot edu dot au" if you have any questions.