training speed is slow

Tangzy7 opened this issue

Using a GPU, training takes 4 s/iteration with a batch size of 64, which is quite slow.

I want to know your training speed.

The training speed is 2.x sec/iter while using GTX 1080.

Any suggestions for speeding up training are welcome in the comments below.

@ywk991112 Hi, which parameters did you use to get 2.x sec/iter? I get 4.x sec/iter with the default settings `-la 1 -hi 512 -lr 0.0001 -it 50000 -b 64 -p 500 -s 1000` and `MAX_LENGTH=15` on an NVIDIA P100.

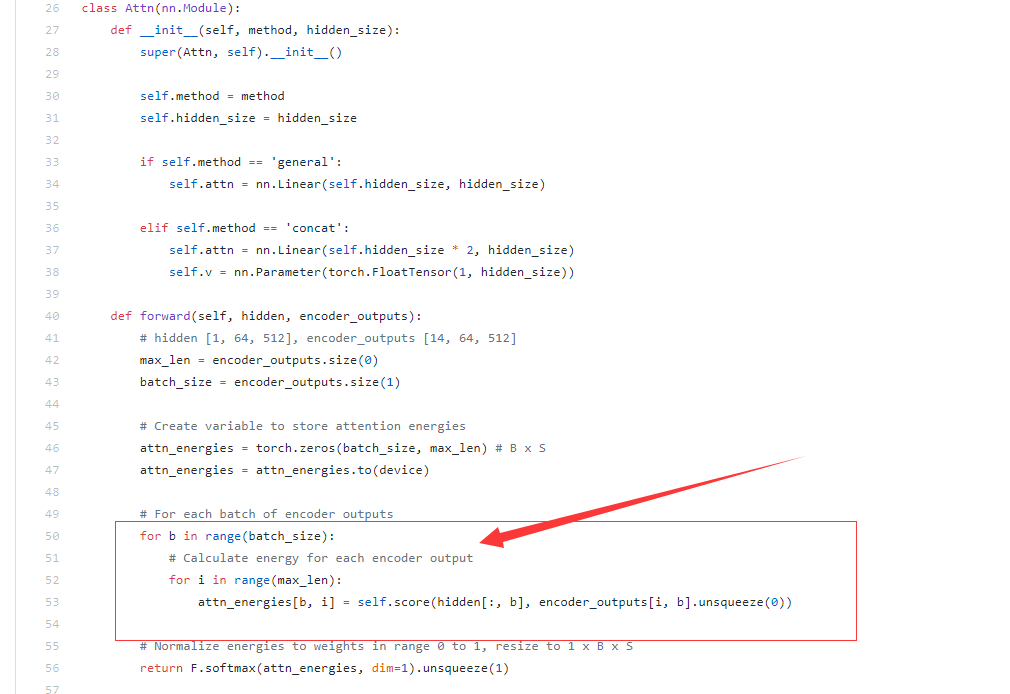

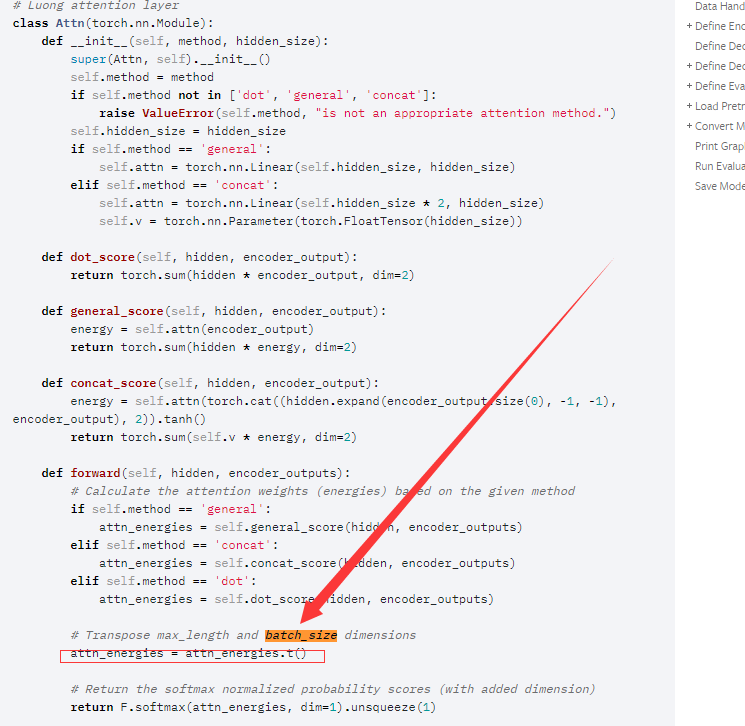

I just found that a Python for-loop in PyTorch slows down both the forward and backward passes, like the for-loop here:

    for t in range(max_target_len):
        decoder_output, decoder_hidden, decoder_attn = decoder(
            decoder_input, decoder_hidden, encoder_outputs
        )

I vectorized the decoder input and used teacher forcing only, and now get 21 it/s with a batch size of 256. Previously it was 16 s/it.
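For anyone who wants to try the same idea, here is a minimal sketch of what vectorizing the decoder input can look like. It is not the repo's actual decoder: the layer names, sizes, the SOS index of 0, and the zero initial hidden state are all assumptions for illustration, and attention is left out (whether attention can be batched over time steps depends on the variant used). The point is only that when every step is teacher-forced, all decoder inputs are known in advance, so a single GRU call over the whole sequence gives the same result as the step-by-step loop.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical sizes, chosen only for illustration.
batch_size, max_target_len, hidden_size, vocab_size = 4, 15, 32, 100

# Stand-in decoder layers (assumed names, no attention).
embedding = nn.Embedding(vocab_size, hidden_size)
gru = nn.GRU(hidden_size, hidden_size)  # expects (seq_len, batch, features)
out = nn.Linear(hidden_size, vocab_size)

# Ground-truth target tokens, shape (max_target_len, batch_size).
targets = torch.randint(0, vocab_size, (max_target_len, batch_size))

# With teacher forcing, the input at step t is the true token from step t - 1.
sos = torch.zeros(1, batch_size, dtype=torch.long)  # assumption: index 0 is SOS
decoder_inputs = torch.cat([sos, targets[:-1]], dim=0)

# Stand-in for the encoder's final hidden state.
hidden0 = torch.zeros(1, batch_size, hidden_size)


def decode_loop(inputs, hidden):
    """One GRU call per time step, as in the for-loop quoted above."""
    logits = []
    for t in range(inputs.size(0)):
        step_out, hidden = gru(embedding(inputs[t:t + 1]), hidden)
        logits.append(out(step_out))
    return torch.cat(logits, dim=0)


def decode_vectorized(inputs, hidden):
    """A single GRU call over the whole teacher-forced sequence."""
    outputs, _ = gru(embedding(inputs), hidden)
    return out(outputs)


with torch.no_grad():
    loop_logits = decode_loop(decoder_inputs, hidden0)
    vec_logits = decode_vectorized(decoder_inputs, hidden0)

# Both have shape (max_target_len, batch_size, vocab_size).
same = torch.allclose(loop_logits, vec_logits, atol=1e-5)
```

This only applies while every step is teacher-forced. At inference time, or when sampling from the model's own predictions, each input depends on the previous output, so the loop is still needed.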