01/22/2023 We are currently updating our codebase, you may see inconsistent code versions

This is the official codebase for paper DC-BENCH: Dataset Condensation Benchmark.

It covers the following:

- Condensed Dataset

- How to set up

- Ran an evaluation

- How to integrate

- Commands to reproduce all the SOTA methods

The corresponding leaderboard can be found here: DC Leaderboard

A quick Youtube demo for adding new methods

Condensed Data

We provide all condensed data here, this will be needed to reproduce the benchmark results. Please see the following sections for details on how to use the data.

How to set up

Step 1

Run the following command to download the benchmark.

git clone git@github.com:justincui03/dc_benchmark.git

Step 2

Download all(or part) of the data from this shared link and put them under distilled_results folder in the project root directory.

The project structure should look like the following:

- dc_benchmark

- distilled_results

- DC

- DSA

- ...

- ...

- distilled_results

Step 3

Run the following command to create a conda environment

cd dc_benchmark

conda env create -f environment.yml

Run an evaluation

Running an evaluation is very simple with DCBench.

Simply run the following command from the project root director you just downloaded.

bash scripts/eval.sh --dataset CIFAR10 --ipc 1 --model convnet --aug autoaug

Here are the configurable parameters

- dataset: choose between CIFAR10, CIFAR100 and tinyimagenet

- ipc: choose between 1, 10 and 50

- model: choose between convnet, convnet4, mlp, resnet18, resnet152, etc

- aug: choose between autoaug, randaug, imagenet_aug, dsa,etc

Neural Architecture Search

We incorparete the standard NAS-Bench-201 library into our codebase. You can start a simple NAS experiment by running the following command

cd darts-pt

bash darts-201.sh --dc_method tm

- dc_method: which dataset condensation method to test, choose between random, kmeans-emb, dc, dsa, dm, tm

For the detailed setup, please refer to DARTS-PT

How to integrate

DC-Bench is designed to be extensible. In the following sections, we will introduce how to integrate new methods and introduce new datasets which can be as simple as a few lines of code.

Integrate a new method

A new method can be introduced in the following 3 steps:

- Define a new dataloader(if not already exist)

- Config the code to use the new dataloader

- Run the eval command same as all other methods

A detailed tutorial can be found new method integration

Introduce a new dataset

Introducing a new dataset is even easier, it can be done in the following 2 steps:

- Extend the code to read from the new dataset(if the dataset is not already included in pytorch)

- Run the eval command by specifying the new dataset

A detailed tutorial can be found new dataset integration

Introduce new augmentations

Adding new augmentations can be done with one line of code, e.g.

data_transforms = transforms.Compose([transforms.RandAugment(num_ops=1)])

More details can be found at new aug integration

Introduce new models

With DC-Bench's modularized design, it's also easy to introduce new models for evaluation.

As long as the new model follows the standard PyTorch interfacenn.Module, you can intergare with model with the following example code

if model_name == 'mlp':

return MLP(channel, num_classes, im_size)

Detailed instructions can be found at new model integration

SOTA commands

We collected all the commands to reproduce the SOTA synthetic results in our codebase. All the parameters are provided by the original authors.

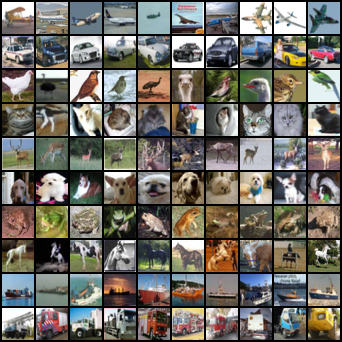

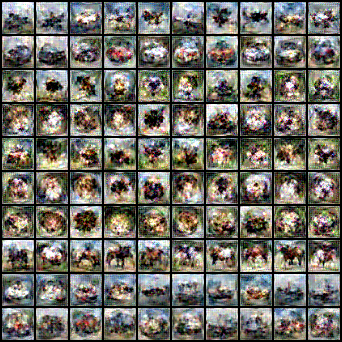

Example condensed datasets

|

|

|---|---|

| Randomly selected images | Kmeans-emb selected images |

|

|

| Synthetic images by DC | Synthetic images by DSA |

|

|

| Synthetic images by DM | Synthetic images by TM |

Acknowledgments

Part of our code are adpoted from the following github repositories with license

Citation

@article{cui2022dc,

title={DC-BENCH: Dataset Condensation Benchmark},

author={Cui, Justin and Wang, Ruochen and Si, Si and Hsieh, Cho-Jui},

journal={arXiv preprint arXiv:2207.09639},

year={2022}

}