Yuxin Jin, Ming Qian, Jincheng Xiong, Nan Xue, Gui-Song Xia

Accepted by IEEE International Conference on Multimedia and Expo (ICME) 2023

This is a PyTorch implementation of D-DFFNet, which detects defocus blur regions using a depth prior and DOF cues. D-DFFNet achieves SOTA results on public benchmarks (e.g., DUT, CUHK, and CTCUG) under different splits. In addition, we collect a new benchmark, the EBD dataset, for analyzing DBD models under more DOF settings.

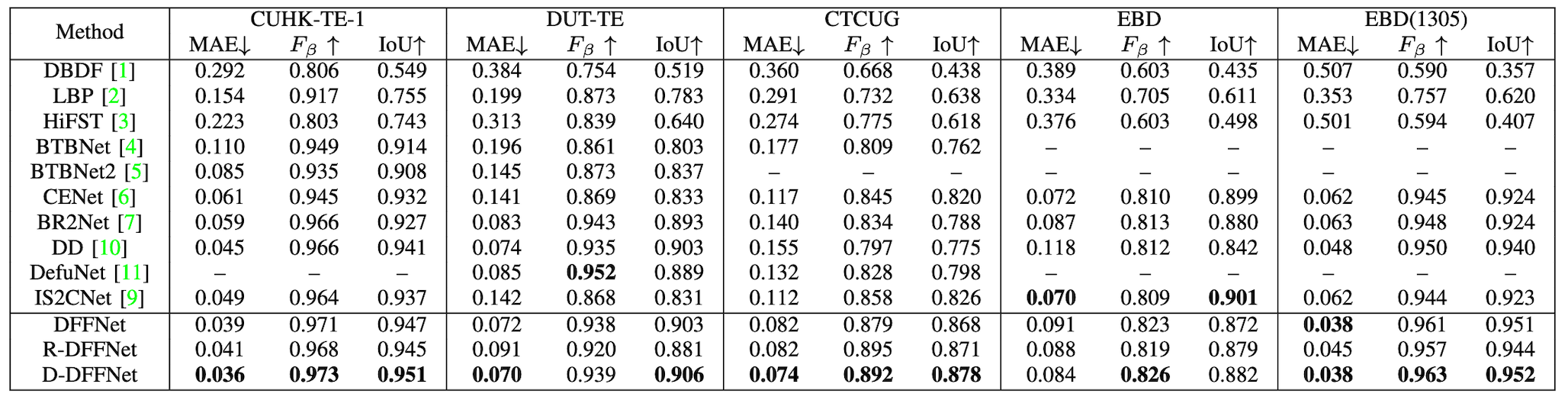

We compare our method with 14 recent methods, including DBDF, LBP, HiFST, BTBNet and its later version BTBNet2, CENet, BR2Net, AENet, EFENet, DD, DefusionNet2 (results: CTCUG (extraction code: kwpd), DUT (extraction code: ui5e)), IS2CNet, LOCAL, and MA-GANet. We retrained the BR2Net model and used the numbers reported in the papers for LOCAL and MA-GANet, as their authors did not provide code or results. For the other methods, we downloaded their results or pre-trained models and evaluated them with our test1.py and test1_iou.py scripts.
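As an illustration of the kind of scores such a comparison relies on, the following is a minimal sketch of three metrics commonly reported for defocus blur detection: MAE, F-measure, and IoU. It is not the code in test1.py or test1_iou.py. The function name `dbd_metrics`, the 0.5 binarization threshold, and the weight `beta_sq=0.3` are assumptions chosen for illustration.

```python
import numpy as np


def dbd_metrics(pred, gt, threshold=0.5, beta_sq=0.3):
    """Compare a predicted blur map with a ground-truth mask.

    pred: array of scores in [0, 1]; gt: array where values >= 0.5 mark
    the positive region. threshold and beta_sq are illustrative defaults,
    not values taken from the repository.
    """
    pred = np.asarray(pred, dtype=np.float64)
    gt_mask = np.asarray(gt, dtype=np.float64) >= 0.5

    # MAE is computed on the continuous prediction.
    mae = float(np.mean(np.abs(pred - gt_mask.astype(np.float64))))

    # F-measure and IoU are computed on the binarized prediction.
    pred_mask = pred >= threshold
    tp = np.logical_and(pred_mask, gt_mask).sum()
    union = np.logical_or(pred_mask, gt_mask).sum()

    eps = 1e-8  # avoids division by zero on empty masks
    precision = tp / (pred_mask.sum() + eps)
    recall = tp / (gt_mask.sum() + eps)
    f_beta = (1 + beta_sq) * precision * recall / (beta_sq * precision + recall + eps)
    iou = tp / (union + eps)

    return {"mae": mae, "f_beta": float(f_beta), "iou": float(iou)}
```

Averaging the returned values over every image in a test set gives one number per metric. Reported scores can differ with the thresholding strategy, so the repository's own scripts remain the reference for reproducing the published numbers.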


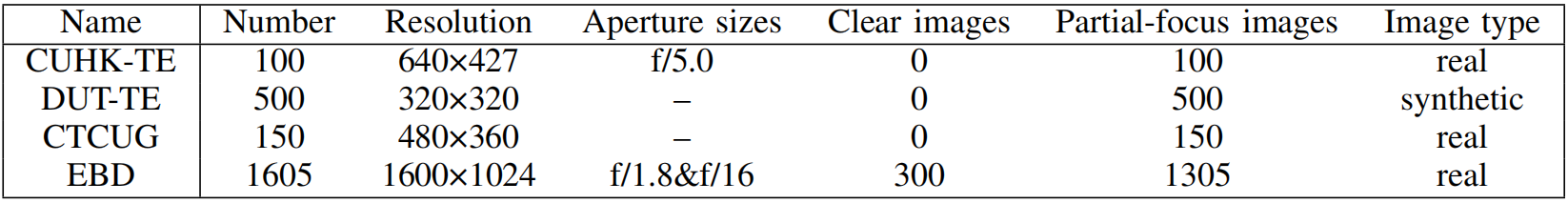

We collect a new dataset, EBD, for testing. If you plan to use it, please download it from this link: EBD dataset. Comparisons with existing DBD test datasets are as follows.

- We provide pre-trained models of our DFFNet and D-DFFNet trained on three different training sets.

| Training Datasets | CUHK-TR-1 | CUHK-TR-1&DUT-TR | CUHK-TR-2&DUT-TR |
|---|---|---|---|
| DFFNet | DFFNet | DFFNet | DFFNet |
| D-DFFNet | D-DFFNet | D-DFFNet | D-DFFNet |

- Pre-trained model for depth model: midas_v21-f6b98070.pt

We provide results on four test datasets. Since we use three different training sets for a fair comparison with previous works, we provide the results for each of them.

| Training Datasets | CUHK-TR-1 | CUHK-TR-1&DUT-TR | CUHK-TR-2&DUT-TR |
|---|---|---|---|
| Results | Results | Results | Results |

- PyTorch 1.12.0

- OpenCV 4.4.0

- Numpy 1.21.2

- PIL

- glob

- imageio

- matplotlib
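To check an environment against the pinned versions listed above, a small script along these lines may help. It is a sketch, not part of the repository. The pip distribution names (`torch`, `opencv-python`, `numpy`) and the helper name `check_versions` are assumptions, and a prefix match is used so that local builds such as `1.12.0+cu113` still count as matching.

```python
from importlib import metadata

# Assumed pip distribution names for the pinned requirements above.
EXPECTED = {"torch": "1.12.0", "opencv-python": "4.4.0", "numpy": "1.21.2"}


def check_versions(expected=EXPECTED, lookup=metadata.version):
    """Return {name: (wanted, found, ok)} for each expected package.

    found is None when the package is not installed. ok is True when the
    installed version string starts with the wanted version.
    """
    report = {}
    for name, wanted in expected.items():
        try:
            found = lookup(name)
        except metadata.PackageNotFoundError:
            found = None
        ok = found is not None and found.startswith(wanted)
        report[name] = (wanted, found, ok)
    return report


if __name__ == "__main__":
    for name, (wanted, found, ok) in check_versions().items():
        status = "ok" if ok else "MISMATCH"
        print(f"{name}: wanted {wanted}, found {found} [{status}]")
```

Other versions may also work. A mismatch reported here is a hint for debugging, not proof that the code will fail.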

- Download the related datasets and put them in /D-DFFNet/data/.

- Download the depth pre-trained model and put it in /D-DFFNet/depth_pretrained/.

- Training in stage 1: run `python train_single.py`. The checkpoint is saved to /D-DFFNet/checkpoint/ as DFFNet.pth.

- Training in stage 2: run `python train_kd.py`. The checkpoint is saved to /D-DFFNet/checkpoint/ as D-DFFNet.pth.
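Before starting either training stage, it can be useful to confirm that the downloads from the steps above are in place. The sketch below is not part of the repository. The helper `missing_paths` is hypothetical, and the exact location `depth_pretrained/midas_v21-f6b98070.pt` is an assumption that combines the depth model filename with the target directory named above.

```python
from pathlib import Path

# Paths relative to the repository root. The depth model location is
# an assumption inferred from the setup steps, not read from the code.
REQUIRED = [
    "data",
    "depth_pretrained/midas_v21-f6b98070.pt",
]


def missing_paths(repo_root, required=REQUIRED):
    """Return the required relative paths that do not exist under repo_root."""
    root = Path(repo_root)
    return [rel for rel in required if not (root / rel).exists()]


if __name__ == "__main__":
    missing = missing_paths(".")
    if missing:
        print("Missing before training:", ", ".join(missing))
    else:
        print("Setup looks complete; stage 1 can be started.")
```

Run it from the repository root. An empty result only means the paths exist. It does not validate the dataset contents or the model file.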

bash test.sh

Codes for the depth model and depth pre-trained model are from MiDaS.

Part of our code is based upon EaNet and CPD.

If you find our code & paper useful, please cite us:

@inproceedings{jin2023depth,

title={Depth and DOF Cues Make A Better Defocus Blur Detector},

author={Jin, Yuxin and Qian, Ming and Xiong, Jincheng and Xue, Nan and Xia, Gui-song},

booktitle={IEEE International Conference on Multimedia and Expo},

year={2023},

}

If you have any questions or suggestions, please contact Yuxin Jin (jinyuxin@whu.edu.cn).