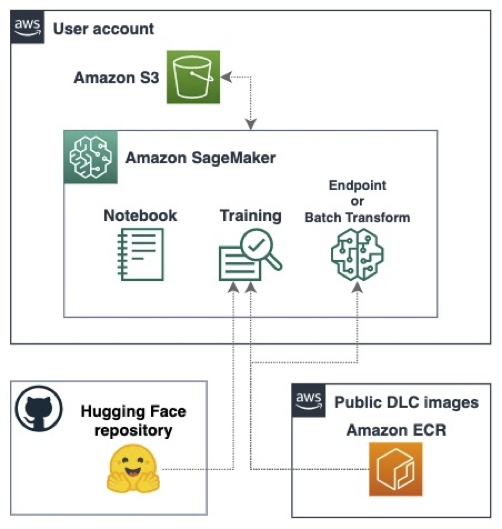

This example notebook shows how to bring your own data to a text classification task by fine-tuning and deploying state-of-the-art (SOTA) models with Amazon SageMaker, the Hugging Face containers, and the SageMaker Python SDK.

We also rely on the library of pre-trained models available in Hugging Face. We demonstrate how to bring your own custom data to fine-tune the models, and how to use the processing scripts available in the Hugging Face Hub to speed up tasks such as tokenization and data loading. Finally, we deploy the model to a SageMaker endpoint and perform real-time inference with sample phrases for our text classification use case.
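As a rough illustration of the real-time inference step, the sketch below builds a request body and reads back a response using only the standard library. The `{"inputs": ...}` request shape and the list of `label`/`score` dictionaries in the response are assumptions about how the Hugging Face inference container handles text classification; the helper names, the sample phrase, and the sample response are hypothetical and written by hand, not produced by a model.

```python
import json


def build_payload(text):
    """Serialize one phrase in the assumed {"inputs": ...} request shape."""
    return json.dumps({"inputs": text})


def top_prediction(response_body):
    """Return (label, score) for the highest-scoring entry of the response."""
    predictions = json.loads(response_body)
    best = max(predictions, key=lambda p: p["score"])
    return best["label"], best["score"]


# Hand-written placeholder response for illustration only (not real model output).
sample_response = json.dumps(
    [{"label": "Business", "score": 0.9}, {"label": "World", "score": 0.1}]
)

payload = build_payload("Stocks rallied after the quarterly earnings report.")
label, score = top_prediction(sample_response)
print(payload)
print(label, score)
```

With a deployed endpoint, the payload would be sent through the predictor returned by the SageMaker SDK instead of being compared against a hand-written response.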

In this example, the Amazon SageMaker Estimator points directly to the entry point script `run_glue.py` located in the Hugging Face repository on GitHub, so there is no need to copy this script manually into our environment. You can rely on this script to bring any custom data in CSV or JSON format for your text classification tasks, as long as it includes the classification label and text fields. Equivalent scripts are also available in the Transformers repository for other text processing tasks, such as summarization and text generation. In our news classification example, this script, together with the SageMaker and Hugging Face integration, will automatically:

- Pre-process our input data, e.g., encoding text labels and the like.

- Perform the relevant tokenization of the text.

- Prepare the data for training our BERT model for text classification.
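To make the input requirement and the label-encoding step more concrete, here is a minimal sketch that reshapes raw rows into a CSV with `label` and `text` columns and then maps the text labels to integer ids. It assumes the raw data comes as (class index, title, description) rows with 1-based class indices and the four AG News class names; the sorted-unique-label encoding is an approximation of what the script does, not a copy of it. All function names and the placeholder rows are hypothetical.

```python
import csv
import io

# Assumed AG News class names, in the order of their 1-based class indices.
CLASS_NAMES = ["World", "Sports", "Business", "Sci/Tech"]


def to_label_text_rows(raw_rows, class_names):
    """Map (class_index, title, description) rows to {"label", "text"} dicts."""
    rows = []
    for class_index, title, description in raw_rows:
        rows.append(
            {
                "label": class_names[int(class_index) - 1],
                "text": f"{title} {description}".strip(),
            }
        )
    return rows


def encode_labels(rows):
    """Assign an integer id to each distinct label, in sorted order."""
    labels = sorted({row["label"] for row in rows})
    return {label: index for index, label in enumerate(labels)}


def write_csv(rows, handle):
    """Write rows with a header so the label and text columns are named."""
    writer = csv.DictWriter(handle, fieldnames=["label", "text"])
    writer.writeheader()
    writer.writerows(rows)


# Placeholder rows for illustration only (not taken from the dataset).
raw_rows = [
    ("3", "Placeholder title A", "Placeholder description A"),
    ("2", "Placeholder title B", "Placeholder description B"),
]

rows = to_label_text_rows(raw_rows, CLASS_NAMES)
label_to_id = encode_labels(rows)

buffer = io.StringIO()
write_csv(rows, buffer)
csv_text = buffer.getvalue()
print(csv_text)
print(label_to_id)
```

In the actual workflow only the CSV preparation is your responsibility; the label encoding is shown here purely to illustrate what the script takes care of.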

This saves considerable development time and improves operational efficiency compared to developing and performing these tasks manually in an equivalent PyTorch implementation.
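The way the Estimator can point at `run_glue.py` in the GitHub repository can be sketched as follows. This is a sketch under stated assumptions, not the notebook's exact configuration: the repository branch, the source directory, the framework versions, the instance type, the `bert-base-cased` checkpoint, the channel file names, and the role ARN are all illustrative choices. The `sagemaker` import is deferred so the configuration can be inspected without the SDK installed.

```python
def build_estimator_kwargs(role):
    """Collect keyword arguments for a Hugging Face estimator (illustrative values)."""
    # Assumed: the script is pulled from a tagged release of the Transformers repo.
    git_config = {
        "repo": "https://github.com/huggingface/transformers.git",
        "branch": "v4.6.1",
    }
    # Assumed hyperparameters; the file names under the channels are hypothetical.
    hyperparameters = {
        "model_name_or_path": "bert-base-cased",
        "train_file": "/opt/ml/input/data/train/train.csv",
        "validation_file": "/opt/ml/input/data/validation/validation.csv",
        "output_dir": "/opt/ml/model",
        "do_train": True,
        "do_eval": True,
        "num_train_epochs": 1,
    }
    return {
        "entry_point": "run_glue.py",
        "source_dir": "./examples/pytorch/text-classification",
        "git_config": git_config,
        "instance_type": "ml.p3.2xlarge",
        "instance_count": 1,
        "role": role,
        "transformers_version": "4.6.1",
        "pytorch_version": "1.7.1",
        "py_version": "py36",
        "hyperparameters": hyperparameters,
    }


def make_estimator(kwargs):
    """Create the estimator; requires the sagemaker package and AWS credentials."""
    from sagemaker.huggingface import HuggingFace

    return HuggingFace(**kwargs)


# Hypothetical role ARN, used only to show the shape of the configuration.
kwargs = build_estimator_kwargs("arn:aws:iam::123456789012:role/HypotheticalSageMakerRole")
print(kwargs["entry_point"], kwargs["git_config"]["repo"])
```

Calling `make_estimator(kwargs)` and then `fit` with the S3 locations of your own `train` and `validation` channels would start the training job; because of `git_config`, the script is fetched from GitHub rather than from a local copy.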

For more details on this example, see the accompanying AWS ML Blog post.

Note that this example uses the AG News dataset, cited in the paper "Character-level Convolutional Networks for Text Classification" by Xiang Zhang, Junbo Zhao, and Yann LeCun. This dataset is available on the AWS Open Data Registry.

See CONTRIBUTING for more information.

This library is licensed under the MIT-0 License. See the LICENSE file.