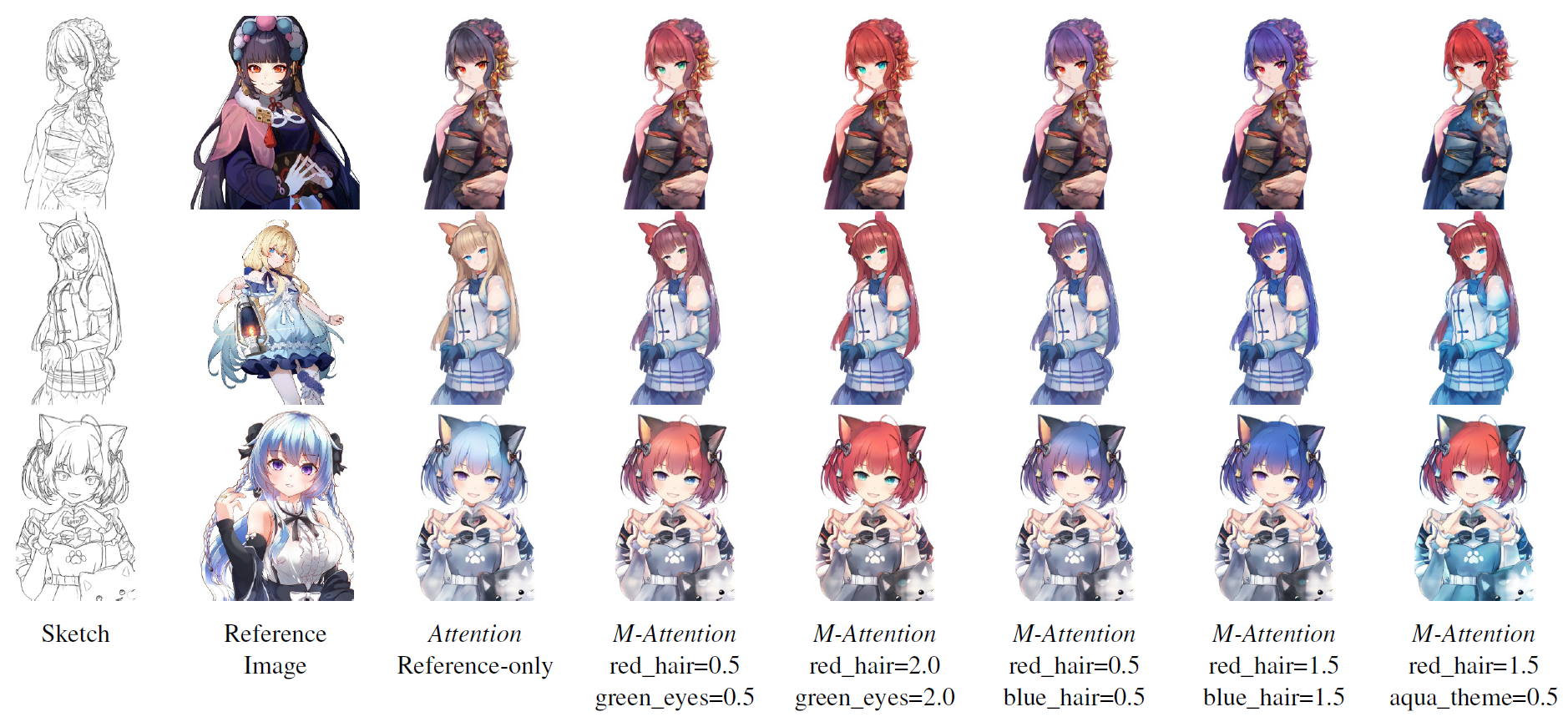

This repository is the official implementation of the paper *Two-step Training: Adjustable Sketch Colorization via Reference Image and Text Tag*.

To run our code, install the required libraries with:

```bash
pip install -r requirements.txt
```

The training dataset can be downloaded from Danbooru2019 figures, or with the following rsync command:

```bash
rsync --verbose --recursive rsync://176.9.41.242:873/biggan/danbooru2019-figures ./danbooru2019-figures/
```

Generate the sketch and reference images with any method you like, or use `data/data_augmentation.py` in this repository for the reference images and sketchKeras for the sketch images.

Please organize the training/validation/testing dataset as:

```
dataroot
├─ color
├─ reference
└─ sketch
```
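Before training, it may help to sanity-check this layout. The following is a minimal sketch that is not part of this repository: the helper name `check_dataroot` is hypothetical, and it assumes (our assumption, not stated above) that a color image, its reference, and its sketch share the same filename across the three folders.

```python
from pathlib import Path

# Subfolders expected under dataroot, per the layout above.
REQUIRED_SUBDIRS = ("color", "reference", "sketch")


def check_dataroot(dataroot):
    """Hypothetical helper: verify the dataroot layout.

    Returns the sorted list of filenames present in every subfolder.
    ASSUMPTION: matching images share the same filename in
    color/, reference/ and sketch/.
    """
    root = Path(dataroot)
    names_per_dir = {}
    for sub in REQUIRED_SUBDIRS:
        folder = root / sub
        if not folder.is_dir():
            raise FileNotFoundError(f"missing folder: {folder}")
        names_per_dir[sub] = {p.name for p in folder.iterdir() if p.is_file()}

    # Filenames that appear in all three folders form complete triplets.
    common = set.intersection(*names_per_dir.values())
    for sub, names in names_per_dir.items():
        unmatched = names - common
        if unmatched:
            print(f"warning: {len(unmatched)} file(s) in {sub}/ have no counterpart")
    return sorted(common)
```

For example, `check_dataroot("./dataroot")` returns the filenames usable as training triplets and warns about any orphaned files.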

If you don't want to generate reference images for training (which is somewhat time-consuming), you can instead use the latent shuffle function in `models/modules.py` during training by uncommenting it in the `forward` function in `draft.py`. Note that this only works when the reference encoder is semantically aware (for example, pre-trained for classification or segmentation), and it results in approximately 10%-20% deterioration.
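Our reading (an assumption, not confirmed by the code) is that the shuffle lets the ground-truth color image act as its own reference: permuting spatial positions keeps the semantic content of the encoder features but removes the pixel-aligned correspondence that the generator could otherwise copy, which is also why the encoder must be semantically aware. The sketch below is our own illustration, not the implementation in `models/modules.py`; the function name `latent_shuffle` and the list-of-channels layout are hypothetical stand-ins for a framework tensor of shape (C, H, W).

```python
import random


def latent_shuffle(features, rng=None):
    """Hypothetical sketch of a spatial latent shuffle.

    features: list of C channels, each a flat list of H*W values
              (a stand-in for a (C, H, W) feature map).
    Every channel is permuted with the SAME random permutation of
    spatial positions, so each position keeps its full feature vector
    but moves to a random location.
    """
    rng = rng if rng is not None else random.Random()
    num_positions = len(features[0])
    if any(len(ch) != num_positions for ch in features):
        raise ValueError("all channels must have the same number of positions")
    perm = list(range(num_positions))
    rng.shuffle(perm)
    # New position i takes the feature vector from old position perm[i].
    return [[ch[j] for j in perm] for ch in features]


# Example: 2 channels over a 2x2 grid (4 spatial positions).
feats = [[1, 2, 3, 4], [10, 20, 30, 40]]
shuffled = latent_shuffle(feats, random.Random(0))
```

Using one shared permutation across channels is the key design choice: shuffling each channel independently would scramble the per-position feature vectors themselves rather than only their locations.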

Before training the colorization model, you need to prepare a pre-trained CNN to serve as the reference encoder. For the best colorization performance, we suggest a CNN pre-trained on both ImageNet and Danbooru2020. Note that the CLIP image encoder was found to be ineffective as the reference encoder in our paper; according to our latest experiments, however, this deterioration can be resolved by combining our models with latent diffusion.

Use the following command for the first training stage:

```bash
python train.py --name [project_name] -d [dataset_path] -pre [pretrained_CNN_path] -bs [batch_size] -nt [threads used to read input] -at [add,fc(default)]
```

and the following command for the second training stage:

```bash
python train.py --name [project_name] -d [dataset_path] -pre [pretrained_CNN_path] -pg [pretrained_first_stage_model] -bs [batch_size] -nt [threads used to read input] -m mapping
```
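The two training stages can be chained from a small script. This is a minimal sketch under stated assumptions: it uses only the flags shown in the two commands above, the helper names `stage_one_cmd`, `stage_two_cmd` and `run` are hypothetical, and the first-stage model path passed to `-pg` depends on where your first run saved its checkpoint, which is not specified here.

```python
import subprocess
import sys


def stage_one_cmd(name, dataroot, pretrained_cnn, batch_size, threads, attention="fc"):
    """Build the argument list for the first training stage (flags as in the README)."""
    return [
        sys.executable, "train.py",
        "--name", name,
        "-d", dataroot,
        "-pre", pretrained_cnn,
        "-bs", str(batch_size),
        "-nt", str(threads),
        "-at", attention,  # "add" or "fc" (default)
    ]


def stage_two_cmd(name, dataroot, pretrained_cnn, first_stage_model, batch_size, threads):
    """Build the argument list for the second training stage (mapping model)."""
    return [
        sys.executable, "train.py",
        "--name", name,
        "-d", dataroot,
        "-pre", pretrained_cnn,
        "-pg", first_stage_model,
        "-bs", str(batch_size),
        "-nt", str(threads),
        "-m", "mapping",
    ]


def run(cmd):
    # check=True raises CalledProcessError if a stage exits with an error,
    # so the second stage never starts after a failed first stage.
    subprocess.run(cmd, check=True)
```

For example, `run(stage_one_cmd("colorize", "./dataroot", "cnn.pth", 16, 8))` followed by `run(stage_two_cmd("colorize", "./dataroot", "cnn.pth", "<first-stage model path>", 16, 8))`; all argument values here are placeholders.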

More information on the training and testing options can be found in `options/options.py` or by running with `--help`.

To use our pre-trained models for sketch colorization, download the pre-trained networks from Releases and run the following commands.

For the colorization model:

```bash
python test.py --name [project_name] -d [dataset_path] -pre [pretrained_CNN_path]
```

For the controllable model:

```bash
python test.py --name [project_name] -d [dataset_path] -pre [pretrained_CNN_path] -m mapping
```

We did not implement a user interface for changing the tag values, so you need to change them manually in the `modify_tags` function in `mapping.py`. The corresponding tag IDs can be found in `materials`. You can also activate `--resize` to set the output image size to `load_size`. All generated images are saved in `checkpoints/[model_name]/test/fake`. Details of the training/testing options can be found in `options/options.py`.
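As an illustration only, adjusting tags can be thought of as overwriting selected entries of a tag-value vector by tag ID; the actual `modify_tags` in `mapping.py` may be structured differently. The helper `modify_tag_values` below is hypothetical, and the vector length and the example values are made up.

```python
def modify_tag_values(tag_values, edits):
    """Hypothetical stand-in for editing tag values by ID.

    tag_values: list of floats, one entry per tag ID (index = tag ID).
    edits: dict mapping tag ID -> new value.
    Returns a new list; the input list is left unchanged.
    """
    updated = list(tag_values)
    for tag_id, value in edits.items():
        if not 0 <= tag_id < len(updated):
            raise IndexError(f"unknown tag id {tag_id}")
        updated[tag_id] = float(value)
    return updated


# Example with a made-up 5-tag vector: strengthen tag 1, suppress tag 3.
values = [0.2, 0.5, 0.0, 0.9, 0.1]
print(modify_tag_values(values, {1: 1.0, 3: 0.0}))  # [0.2, 1.0, 0.0, 0.0, 0.1]
```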

We provide evaluation with the Fréchet Inception Distance (FID). Use the following command, and activate `--resize` if you want to change the evaluation image size.

```bash
python evaluate.py --name [project_name] --dataroot [dataset_path]
```
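For reference, FID fits a Gaussian to deep features (conventionally Inception-v3 activations) of real and generated images and measures the distance between the two fits; lower is better. With feature means $\mu_r, \mu_g$ and covariances $\Sigma_r, \Sigma_g$ for the real and generated sets, the standard definition is shown below. We have not verified which feature extractor or implementation `evaluate.py` uses, so treat this as the general definition rather than a description of the script.

$$
\mathrm{FID} = \lVert \mu_r - \mu_g \rVert_2^2 + \operatorname{Tr}\left(\Sigma_r + \Sigma_g - 2\left(\Sigma_r \Sigma_g\right)^{1/2}\right)
$$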