Texformer: 3D Human Texture Estimation from a Single Image with Transformers

This is the official implementation of "3D Human Texture Estimation from a Single Image with Transformers", ICCV 2021 (Oral).

Featured as the Cover Article of the ICCV DAILY Magazine.

Highlights

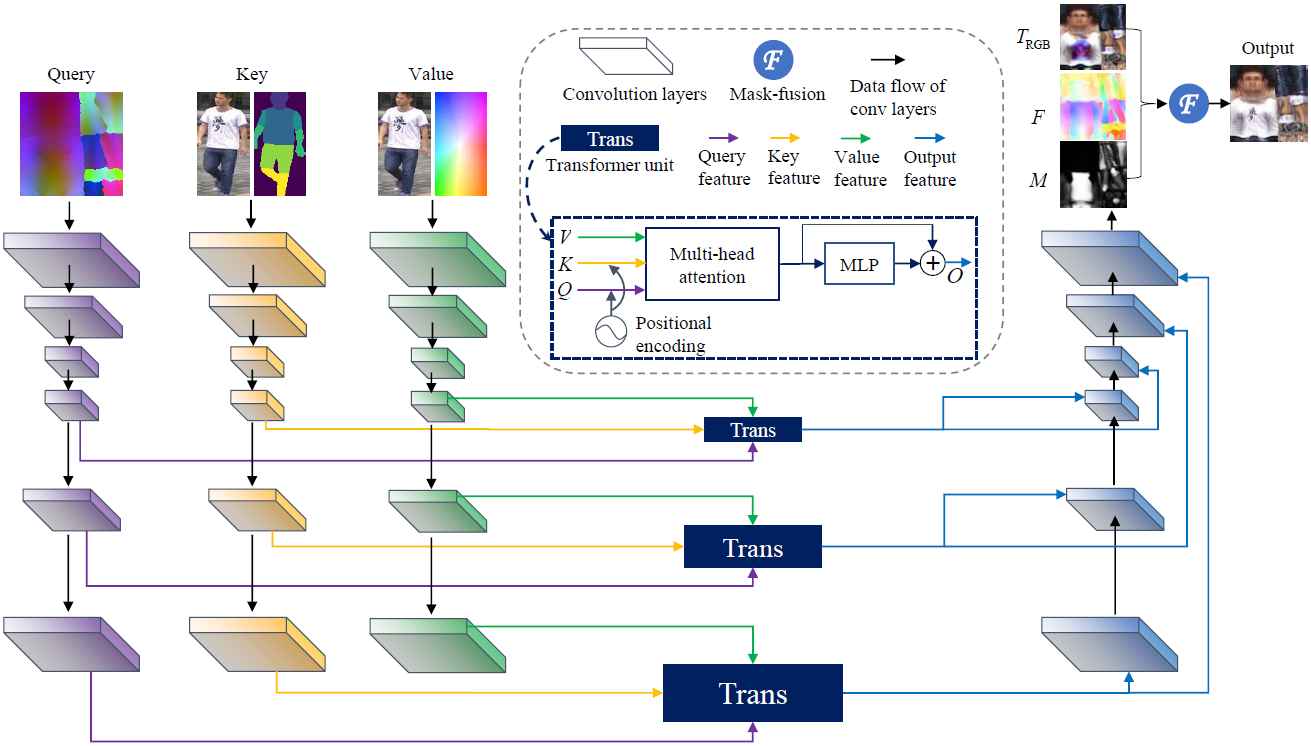

- Texformer: a novel structure combining Transformer and CNN

- Low-Rank Attention layer (LoRA) with linear complexity

- Combination of RGB UV map and texture flow

- Part-style loss

- Face-structure loss

BibTeX

@inproceedings{xu2021texformer,
  title={{3D} Human Texture Estimation from a Single Image with Transformers},
  author={Xu, Xiangyu and Loy, Chen Change},
  booktitle={Proceedings of the IEEE International Conference on Computer Vision},
  year={2021}
}

Abstract

We propose a Transformer-based framework for 3D human texture estimation from a single image. The proposed Transformer effectively exploits the global information of the input image, overcoming the limitations of existing methods that are based solely on convolutional neural networks. In addition, we propose a mask-fusion strategy to combine the advantages of the RGB-based and texture-flow-based models. We further introduce a part-style loss to help reconstruct high-fidelity colors without introducing unpleasant artifacts. Extensive experiments demonstrate the effectiveness of the proposed method against state-of-the-art 3D human texture estimation approaches, both quantitatively and qualitatively.

Overview

The Query is a pre-computed color encoding of the UV space, obtained by mapping the 3D coordinates of a standard human body mesh to the UV space. The Key is a concatenation of the input image and the 2D part-segmentation map. The Value is a concatenation of the input image and its 2D coordinates. We first feed the Query, Key, and Value into three CNNs to transform them into feature space. The resulting multi-scale features are then sent to the Transformer units to generate the Output features. The multi-scale Output features are processed and fused in another CNN, which produces the RGB UV map T, the texture flow F, and the fusion mask M. The final UV map is generated by combining T with the textures sampled using F, weighted by the fusion mask M. Note that the CNNs have skip connections between same-resolution layers, similar to [1]; these are omitted from the figure for brevity.
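The fusion step described above can be illustrated with a minimal sketch. This is not the repository's code: it uses NumPy instead of PyTorch to stay lightweight, and the function names `bilinear_sample` and `fuse_uv_map` are hypothetical. It also assumes that the texture flow F stores (x, y) sampling coordinates normalized to [-1, 1], and that M weights the flow-sampled texture while (1 - M) weights the RGB UV map T; the actual convention may be the other way around.

```python
import numpy as np


def bilinear_sample(image, flow):
    """Sample `image` (H, W, C) at `flow` (Hu, Wu, 2), with (x, y) in [-1, 1]."""
    h, w = image.shape[:2]
    # Map normalized coordinates to pixel positions (corner-aligned; an assumption).
    x = np.clip((flow[..., 0] + 1.0) * 0.5 * (w - 1), 0, w - 1)
    y = np.clip((flow[..., 1] + 1.0) * 0.5 * (h - 1), 0, h - 1)
    x0 = np.floor(x).astype(int)
    y0 = np.floor(y).astype(int)
    x1 = np.minimum(x0 + 1, w - 1)
    y1 = np.minimum(y0 + 1, h - 1)
    # Interpolation weights, with a trailing axis to broadcast over channels.
    wx = (x - x0)[..., None]
    wy = (y - y0)[..., None]
    top = image[y0, x0] * (1 - wx) + image[y0, x1] * wx
    bottom = image[y1, x0] * (1 - wx) + image[y1, x1] * wx
    return top * (1 - wy) + bottom * wy


def fuse_uv_map(rgb_uv, flow, mask, image):
    """Blend the RGB UV map T with flow-sampled texture using the mask M.

    Assumed convention: final = M * sampled + (1 - M) * T.
    """
    sampled = bilinear_sample(image, flow)
    return mask * sampled + (1.0 - mask) * rgb_uv


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    image = rng.random((128, 64, 3))         # input image
    rgb_uv = rng.random((32, 32, 3))         # T: RGB UV map
    flow = rng.uniform(-1, 1, (32, 32, 2))   # F: texture flow
    mask = rng.random((32, 32, 1))           # M: fusion mask in [0, 1]
    print(fuse_uv_map(rgb_uv, flow, mask, image).shape)
```

In PyTorch, `torch.nn.functional.grid_sample` plays a role similar to `bilinear_sample` here. Intuitively, a mask lets the model copy sharp detail from the input image where the flow is reliable and fall back to directly predicted colors elsewhere.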

Visual Results

For each example, the image on the left is the input, and the image on the right is the rendered 3D human, where the human texture is predicted by the proposed Texformer, and the geometry is predicted by RSC-Net.

Install

- Manage the environment with Anaconda

conda create -n texformer anaconda

conda activate texformer

- PyTorch 1.4, CUDA 9.2

conda install pytorch==1.4.0 torchvision==0.5.0 cudatoolkit=9.2 -c pytorch

- Install Pytorch-neural-renderer following the installation instructions in its repository
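After installation, a quick sanity check can confirm that the pinned versions are the ones actually importable. The sketch below is not part of the repository; the helper names `version_matches` and `check_environment` are hypothetical, and it only compares each module's `__version__` string against the versions pinned in the conda command above.

```python
import importlib

# Versions pinned in the conda install command above.
EXPECTED = {"torch": "1.4.0", "torchvision": "0.5.0"}


def version_matches(installed, expected):
    """Compare versions, ignoring a local build suffix such as '+cu92'."""
    return installed.split("+")[0] == expected


def check_environment(expected=EXPECTED):
    """Return a {module_name: status} report for the expected packages."""
    report = {}
    for name, want in expected.items():
        try:
            module = importlib.import_module(name)
        except ImportError:
            report[name] = "missing"
            continue
        have = getattr(module, "__version__", "unknown")
        if version_matches(have, want):
            report[name] = "ok"
        else:
            report[name] = f"found {have}, expected {want}"
    return report


if __name__ == "__main__":
    for name, status in check_environment().items():
        print(f"{name}: {status}")
```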

Download

- Download the meta data and put it in "./meta/".

- Download the pretrained model and put it in "./pretrained".

- We propose an enhanced Market-1501 dataset, termed SMPLMarket, which equips the original Market-1501 data with SMPL estimates from RSC-Net and body part segmentation estimated by EANet. Please download the SMPLMarket dataset and put it in "./datasets/".

- Other datasets: PRW, SURREAL, CUHK-SYSU. Please put these datasets in "./datasets/".

- All the paths are set in "config.py".
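Before running the demo or training, it can help to verify that the directories named above exist. The following is a small sketch, not part of the repository: the helper `missing_dirs` is hypothetical and checks only the top-level folders mentioned in this section, not the files inside them or the values in "config.py".

```python
from pathlib import Path

# Directory names taken from the download instructions above.
EXPECTED_DIRS = ("meta", "pretrained", "datasets")


def missing_dirs(root=".", expected=EXPECTED_DIRS):
    """Return the expected directories that do not exist under `root`."""
    root = Path(root)
    return [name for name in expected if not (root / name).is_dir()]


if __name__ == "__main__":
    missing = missing_dirs()
    if missing:
        print("Missing directories:", ", ".join(missing))
    else:
        print("All expected directories are present.")
```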

Demo

Run the Texformer with human part segmentation from an off-the-shelf model:

python demo.py --img_path demo_imgs/img.png --seg_path demo_imgs/seg.png

If you don't want to run an external model for human part segmentation, you can use the human part segmentation of RSC-Net instead (note that this may degrade the results, because the RSC-Net segmentation is not very accurate due to the limitations of SMPL):

python demo.py --img_path demo_imgs/img.png
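To run the demo over several images, the two commands above can be wrapped in a short script. This is a sketch under stated assumptions rather than a repository feature: the helpers `build_demo_command` and `run_demo_on_folder` are hypothetical, and the layout in which each segmentation map sits in a separate folder under the same file name as its image is assumed for illustration. Only the `--img_path` and `--seg_path` flags shown above are used.

```python
import subprocess
import sys
from pathlib import Path


def build_demo_command(img_path, seg_path=None):
    """Build the argument list for one demo.py call."""
    cmd = [sys.executable, "demo.py", "--img_path", str(img_path)]
    if seg_path is not None:
        cmd += ["--seg_path", str(seg_path)]
    return cmd


def run_demo_on_folder(img_dir, seg_dir=None, dry_run=True):
    """Build (and optionally run) one demo command per PNG image in `img_dir`."""
    commands = []
    for img in sorted(Path(img_dir).glob("*.png")):
        seg = Path(seg_dir) / img.name if seg_dir is not None else None
        if seg is not None and not seg.is_file():
            seg = None  # no matching map: omit --seg_path for this image
        cmd = build_demo_command(img, seg)
        commands.append(cmd)
        if not dry_run:
            subprocess.run(cmd, check=True)
    return commands


if __name__ == "__main__":
    # Dry run: print the commands without executing them.
    for cmd in run_demo_on_folder("demo_imgs"):
        print(" ".join(cmd))
```

With `dry_run=True` (the default) nothing is executed, which makes it easy to inspect the commands before launching them.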

Train

Run the training code with default settings:

python trainer.py --exp_name texformer

Evaluation

Run the evaluation on the SMPLMarket dataset:

python eval.py --checkpoint_path ./pretrained/texformer_ep500.pt

References

[3] "SMPL: A Skinned Multi-Person Linear Model", SIGGRAPH Asia, 2015

[5] "Learning Factorized Weight Matrix for Joint Filtering", ICML, 2020