If you find this work useful for your research, please cite our paper: ULME-GAN: a generative adversarial network for micro-expression sequence generation.

For details, please refer to the paper above. Our work is briefly summarized below.

- 1. Re-encoding of the AU matrix
- 2. Good generation quality
- 3. Transfer learning
- 4. Linear fitting of the image sequence
- 5. The target expression can be given either as an AU matrix or as a face image in any expression state

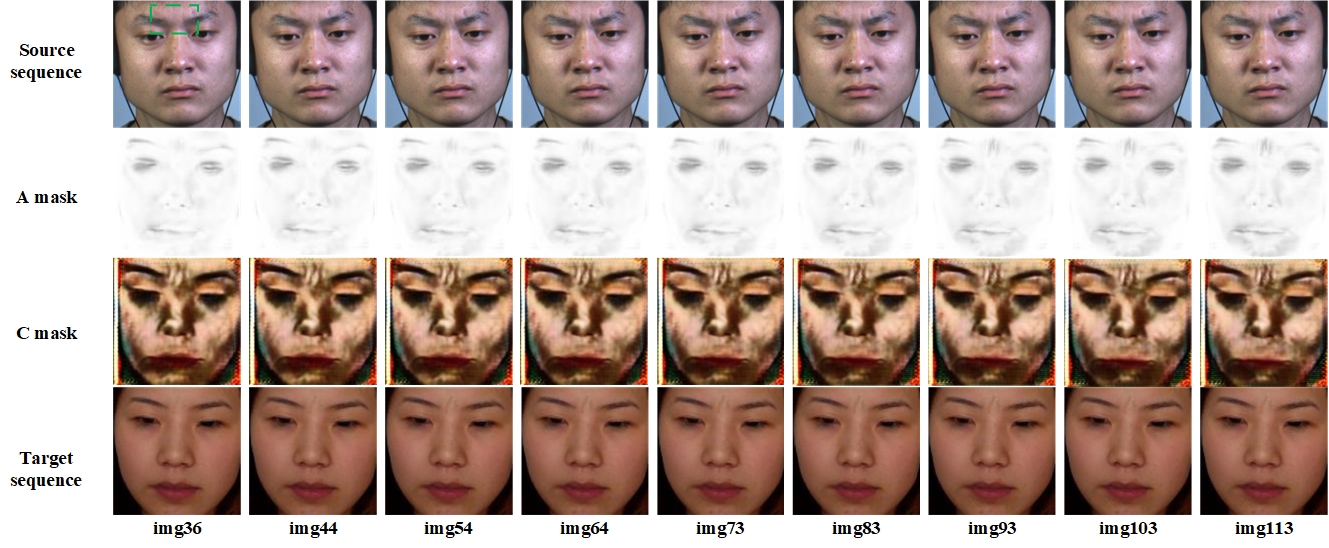

Our generator consists of two parts: an attention mask generator (A) and a color intensity extractor (C). Both A and C are shown in the figure below.

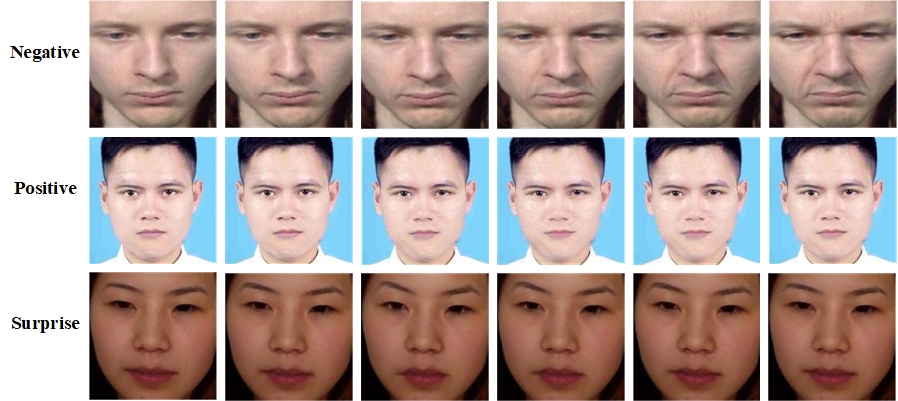
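The README does not give the formula that combines A and C into a frame. Assuming ULME-GAN blends them the way GANimation does (the attention mask decides, per pixel, how much of the input face to keep, and the color mask fills in the rest), a minimal sketch looks like this. The function name and array shapes are illustrative assumptions, not the repository's code:

```python
import numpy as np


def compose_frame(source: np.ndarray, color: np.ndarray, attention: np.ndarray) -> np.ndarray:
    """Blend a source face with a generated color mask.

    Assumed GANimation-style rule: out = A * source + (1 - A) * C,
    where A = 1 means "keep the source pixel unchanged".

    source, color: float arrays of shape (H, W, 3)
    attention:     float array of shape (H, W, 1) with values in [0, 1]
    """
    attention = np.clip(attention, 0.0, 1.0)
    # The single-channel mask broadcasts over the three color channels.
    return attention * source + (1.0 - attention) * color


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    src = rng.random((4, 4, 3))
    col = rng.random((4, 4, 3))
    # With A = 1 everywhere the output is just the source face.
    print(np.allclose(compose_frame(src, col, np.ones((4, 4, 1))), src))
```

One consequence of this design is that regions the attention mask leaves at 1 (background, hair) pass through untouched, so the generator only has to synthesize the facial areas that actually change.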

We can also generate consistent images on a template face from an input AU matrix or from a face image in any expression state.


Our method achieves good generation results. The MEGC2021 results are in ../MEGC2021_results, and more results (no_recode, just_AU_recode, recode_apex_frame and expert_opinion) are available on Google Drive or BaiduNetdisk (code: 72xv). The following GIFs show results from the paper: a.gif is the result without AU matrix re-encoding, b.gif is the result with AU matrix re-encoding only, and c.gif is the result with AU matrix re-encoding and linear fitting.

a.gif b.gif c.gif
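The README does not describe what the linear-fitting step (`--linear_fitting`) does internally. Purely as an illustration, and assuming it means smoothing each AU's intensity over the frame sequence with a least-squares straight line, the idea can be sketched as follows. `fit_au_sequence` is a hypothetical helper, not part of the repository, and the paper's actual procedure may differ:

```python
import numpy as np


def fit_au_sequence(au_seq: np.ndarray) -> np.ndarray:
    """Replace each AU trajectory with its least-squares straight line.

    au_seq: array of shape (n_frames, n_aus), one row of AU intensities per frame.
    Returns an array of the same shape whose columns are linear in the frame index,
    clipped to OpenFace's 0-5 intensity scale.
    """
    n_frames = au_seq.shape[0]
    t = np.arange(n_frames, dtype=np.float64)
    # With a 2-D y, np.polyfit fits every column at once; row 0 holds the
    # slopes and row 1 the intercepts (highest degree first).
    slope, intercept = np.polyfit(t, au_seq, deg=1)
    fitted = np.outer(t, slope) + intercept
    return np.clip(fitted, 0.0, 5.0)


if __name__ == "__main__":
    jittery = np.array([[0.0, 2.0], [1.2, 2.1], [1.8, 1.9], [3.1, 2.0]])
    print(fit_au_sequence(jittery).round(3))
```

A linear fit removes frame-to-frame jitter in the AU targets, which is one plausible reason the linearly fitted result looks smoother than the re-encoded-only one.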

Note: only Windows is supported at the moment!

- python 3.7.6
- torch 1.0.0
- torchvision 0.2.0
- numpy 1.18.1
- pillow 7.1.2
- opencv 4.2.0
- visdom 0.1.8.9 (optional, training only)
- pandas 1.0.4
- tqdm 4.42.1

(If you want to set it up in a new environment, follow init_envirenment.txt.)

You can install the above packages with pip install -r requirements.txt.
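After installing, a small script can show which of the listed packages are importable and at which version. This is a sketch, not a tool shipped with the repository: it only reads each module's `__version__` attribute and compares strings exactly, so a newer patch release is reported as "differs" rather than as an error. Note that pillow is imported as `PIL` and opencv as `cv2`:

```python
import importlib
from typing import Dict, Optional

# Versions listed in this README, keyed by import name.
REQUIRED = {
    "torch": "1.0.0",
    "torchvision": "0.2.0",
    "numpy": "1.18.1",
    "PIL": "7.1.2",
    "cv2": "4.2.0",
    "visdom": "0.1.8.9",  # optional, only used for training visualization
    "pandas": "1.0.4",
    "tqdm": "4.42.1",
}


def check_versions(required: Dict[str, str]) -> Dict[str, Optional[str]]:
    """Return {import_name: installed version, "unknown", or None if not importable}."""
    found: Dict[str, Optional[str]] = {}
    for name in required:
        try:
            module = importlib.import_module(name)
        except (ImportError, OSError):  # OSError: e.g. a missing native library
            found[name] = None
            continue
        found[name] = getattr(module, "__version__", "unknown")
    return found


if __name__ == "__main__":
    installed = check_versions(REQUIRED)
    for name, wanted in REQUIRED.items():
        have = installed[name]
        if have is None:
            status = "missing"
        elif have == wanted:
            status = "ok"
        else:
            status = "differs"
        print(f"{name:12} wanted {wanted:10} installed {str(have):10} {status}")
```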

The code requires a directory containing the following files:

To train (all images must be the same size):

imgs/: folder containing all training images
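Because training requires every image to have the same size, it can help to check imgs/ before starting. A minimal sketch using Pillow; the helper names and the set of image extensions it looks at are assumptions:

```python
from collections import Counter
from pathlib import Path
from typing import Dict, List, Tuple

Size = Tuple[int, int]  # (width, height), as reported by Pillow


def collect_sizes(folder: str) -> Dict[str, Size]:
    """Read the size of every .jpg/.jpeg/.png/.bmp image in `folder`."""
    from PIL import Image  # imported here so find_size_mismatches needs only the stdlib

    sizes: Dict[str, Size] = {}
    for path in sorted(Path(folder).iterdir()):
        if path.suffix.lower() in {".jpg", ".jpeg", ".png", ".bmp"}:
            with Image.open(path) as img:
                sizes[path.name] = img.size
    return sizes


def find_size_mismatches(sizes: Dict[str, Size]) -> List[str]:
    """Return the names of images whose size differs from the most common size."""
    if not sizes:
        return []
    reference, _ = Counter(sizes.values()).most_common(1)[0]
    return sorted(name for name, size in sizes.items() if size != reference)
```

For the training example's data root, `find_size_mismatches(collect_sizes("datasets/train_data/imgs"))` would return the offending file names; an empty list means the folder is consistent.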

To test:

imgs/: folder with the source expression image

imgs2/: folder with the target image

If you have an AU intensity table, the following files are needed (for the format, refer to ../test/casme2_Negative_asianFemale):

aus_openface.pkl: each line contains a file name followed by the intensities of AU1, AU2, AU4, AU5, AU6, AU7, AU9, AU10, AU12, AU14, AU15, AU17, AU20, AU23, AU25, AU26 and AU45

test_ids.csv: each line is an image file name, covering both the expression images and the template face
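The exact structure of aus_openface.pkl is not documented here. The sketch below assumes a pickled dict that maps each file name to a 17-element intensity vector in the AU order listed above (the convention used by GANimation-style loaders), so compare its output with the sample files in ../test/casme2_Negative_asianFemale before relying on it. `build_au_table` and `write_inputs` are hypothetical helpers:

```python
import csv
import pickle
from pathlib import Path
from typing import Dict, Iterable, Sequence

import numpy as np

# The 17 AUs listed above, in the listed order.
AU_ORDER = ["AU1", "AU2", "AU4", "AU5", "AU6", "AU7", "AU9", "AU10", "AU12",
            "AU14", "AU15", "AU17", "AU20", "AU23", "AU25", "AU26", "AU45"]


def build_au_table(rows: Iterable[Sequence]) -> Dict[str, np.ndarray]:
    """Map each file name to a float32 vector of its AU intensities.

    Each row is (filename, AU1, AU2, ..., AU45) following AU_ORDER.
    """
    table: Dict[str, np.ndarray] = {}
    for row in rows:
        name, values = row[0], row[1:]
        if len(values) != len(AU_ORDER):
            raise ValueError(f"{name}: expected {len(AU_ORDER)} AU values, got {len(values)}")
        table[name] = np.asarray(values, dtype=np.float32)
    return table


def write_inputs(out_dir: str, table: Dict[str, np.ndarray], test_ids: Iterable[str]) -> None:
    """Write aus_openface.pkl and test_ids.csv (one file name per line) into out_dir."""
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    with open(out / "aus_openface.pkl", "wb") as f:
        pickle.dump(table, f)
    with open(out / "test_ids.csv", "w", newline="") as f:
        writer = csv.writer(f)
        for name in test_ids:
            writer.writerow([name])
```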

To train

python main.py --data_root [data_path] --gpu_ids [-1,0,1,2...] --visdom_display_id [0,1]

# e.g. python main.py --data_root datasets\train_data --gpu_ids 0 --sample_img_freq 500 --pre_treatment

To test

Our model, coding tools and trained model are all available on Google Drive or BaiduNetdisk (code: vtw2). After decompressing, put ckpts and openface directly into the ULME-GAN directory.

python main.py --mode test --data_root [data_path] --batch_size [num] --max_dataset_size [max_num] --gpu_ids [-1,0,1,2...] --ckpt_dir [ckpt_dir] --load_epoch [num] [--serial_batches] --n_threads [num] [--linear_fitting|--apex_frame] [--pre_treatment] [--re_code] [--save_video]

# To get a MEGC2021 micro-expression generation result, run the following command (you can delete all *.csv and *.pkl files beforehand; they will be regenerated when the program runs)

# e.g. python main.py --mode test --data_root test/casme2_Negative_asianFemale --batch_size 128 --max_dataset_size 9999 --gpu_ids -1 --ckpt_dir ckpts\ULMEGAN\210619_212934/ --load_epoch 40 --serial_batches --n_threads 0 --linear_fitting --pre_treatment --re_code --save_video

# To get all MEGC2021 micro-expression generation results, run the following command

# e.g. python for_megc2021.py
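for_megc2021.py is the supported way to produce all results, and its contents are not described here. If you instead want to loop the single-folder test command over your own set of data folders, a hypothetical sketch could build one command per sub-folder using the flags from the single-folder MEGC2021 example. It prints the commands by default (dry run); adjust the folder filter, since not every folder under test/ is meant for main.py:

```python
import subprocess
import sys
from os.path import join
from pathlib import Path
from typing import List

# Checkpoint and flags copied from the single-folder MEGC2021 example command.
CKPT_DIR = join("ckpts", "ULMEGAN", "210619_212934")


def build_test_command(data_root: str) -> List[str]:
    """Return the argv list for one MEGC2021-style test run on `data_root`."""
    return [
        sys.executable, "main.py", "--mode", "test",
        "--data_root", data_root,
        "--batch_size", "128", "--max_dataset_size", "9999",
        "--gpu_ids", "-1",
        "--ckpt_dir", CKPT_DIR, "--load_epoch", "40",
        "--serial_batches", "--n_threads", "0",
        "--linear_fitting", "--pre_treatment", "--re_code", "--save_video",
    ]


def run_all(test_root: str = "test", dry_run: bool = True) -> List[List[str]]:
    """Build one command per sub-folder of `test_root`; run them unless dry_run."""
    commands = [
        build_test_command(str(folder))
        for folder in sorted(Path(test_root).iterdir())
        if folder.is_dir()
    ]
    for cmd in commands:
        if dry_run:
            print(" ".join(cmd))
        else:
            # check=True stops at the first folder whose run fails.
            subprocess.run(cmd, check=True)
    return commands
```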

The output files are written to the results folder.

Specified micro-expression generation

# If you have an AU intensity table, run the following command

# e.g. python main.py --mode test --data_root test/casme2_Negative_asianFemale --batch_size 128 --max_dataset_size 9999 --gpu_ids -1 --ckpt_dir ckpts\ULMEGAN\210619_212934/ --load_epoch 40 --n_threads 0 --save_video

# In addition, you can edit demo_for_Au_Table_generate.py to generate the images or videos you want (it already includes a 1.2 times Positive setting).

python demo_for_Au_Table_generate.py --mode test --data_root test/Au_Table_Positive_asianFemale --batch_size 128 --max_dataset_size 9999 --gpu_ids -1 --ckpt_dir ckpts\210619_212934/ --load_epoch 40 --n_threads 0 --save_video
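Reading "1.2 times Positive" as the Positive AU intensities multiplied by 1.2 (an assumption; check demo_for_Au_Table_generate.py for what it actually does), a stronger or weaker target can be produced by scaling an AU vector and clipping it to OpenFace's 0 to 5 intensity range. `scale_aus` is a hypothetical helper:

```python
import numpy as np


def scale_aus(aus, factor: float, max_intensity: float = 5.0) -> np.ndarray:
    """Scale AU intensities by `factor` and clip them to [0, max_intensity].

    OpenFace reports AU intensities on a 0-5 scale, so values pushed above
    5 by a large factor are capped instead of leaving the valid range.
    """
    if factor < 0:
        raise ValueError("factor must be non-negative")
    return np.clip(np.asarray(aus, dtype=np.float32) * factor, 0.0, max_intensity)


if __name__ == "__main__":
    positive = np.array([0.0, 1.5, 2.5, 4.5])  # illustrative values, not real data
    print(scale_aus(positive, 1.2))
```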

To finetune

python main.py --data_root [data_path] --gpu_ids [-1,0,1,2...] --ckpt_dir [ckpt_dir] --load_epoch [num] --epoch_count [num] --niter [num] --niter_decay [num]

# e.g. python main.py --data_root weitiao --gpu_ids 0 --sample_img_freq 300 --n_threads 0 --ckpt_dir ckpts\ULMEGAN\210619_212934 --load_epoch 40 --epoch_count 41 --niter 40 --niter_decay 10 --pre_treatment
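The meaning of `--epoch_count`, `--niter` and `--niter_decay` is not documented here. Assuming they follow the common pix2pix/CycleGAN-style convention (train `niter` epochs at the initial learning rate, then decay it linearly over `niter_decay` epochs, starting from epoch `epoch_count`), the fine-tuning example above resumes at epoch 41 and runs through epoch 50, with the decay starting immediately because 41 > 40. The repository's scheduler may differ by one epoch in where the decay begins; this sketch only illustrates the shape of the schedule:

```python
def lr_multiplier(epoch: int, niter: int, niter_decay: int) -> float:
    """Learning-rate factor for a 1-based absolute epoch number.

    Assumed schedule: constant (1.0) for the first `niter` epochs, then a
    linear decay over the following `niter_decay` epochs.
    """
    return 1.0 - max(0, epoch - niter) / float(niter_decay + 1)


if __name__ == "__main__":
    # Values from the fine-tuning example: --epoch_count 41 --niter 40 --niter_decay 10
    epoch_count, niter, niter_decay = 41, 40, 10
    for epoch in range(epoch_count, niter + niter_decay + 1):
        print(epoch, round(lr_multiplier(epoch, niter, niter_decay), 3))
```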