CVPR 2023 (highlight paper)

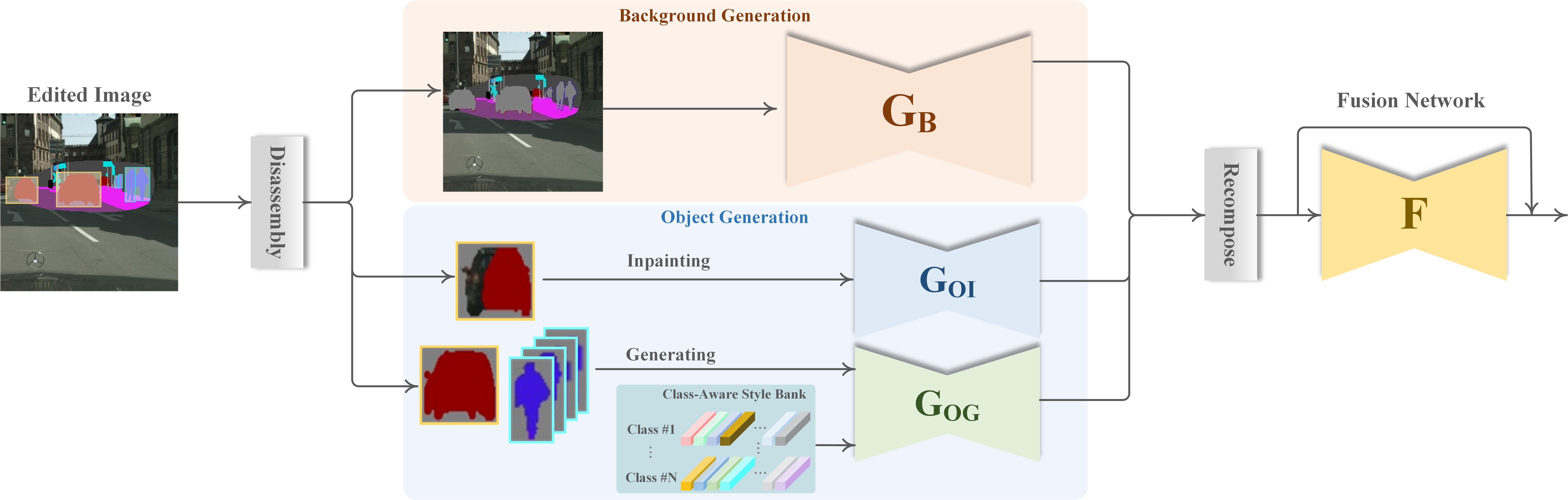

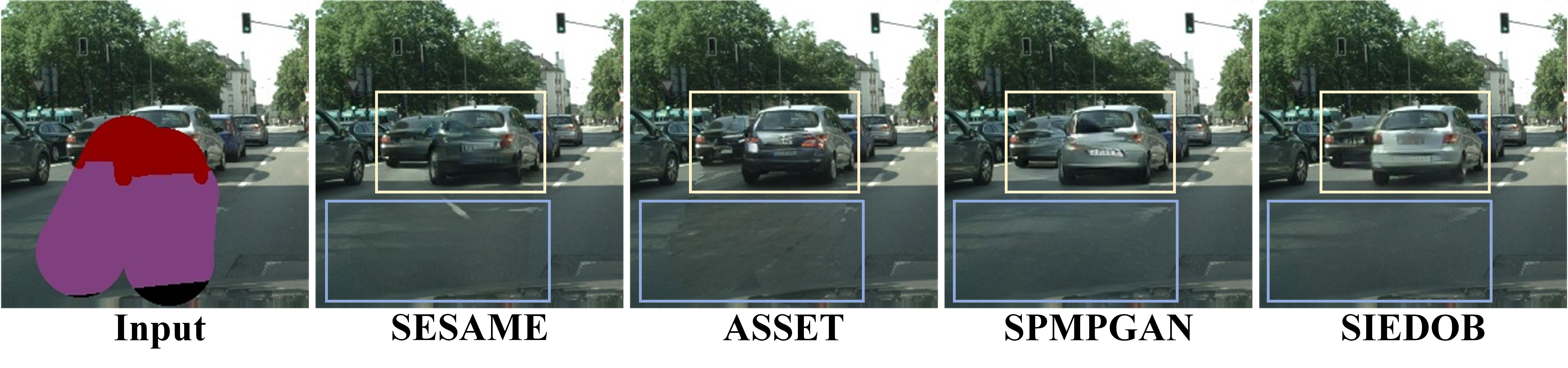

Semantic image editing gives users a flexible tool for modifying a given image under the guidance of a corresponding segmentation map. In this task, foreground objects and backgrounds have quite different characteristics. However, all previous methods handle backgrounds and objects as a whole with a single monolithic model. As a result, they struggle with content-rich images and tend to generate unrealistic objects and texture-inconsistent backgrounds. To address this issue, we propose a novel paradigm, Semantic Image Editing by Disentangling Object and Background (SIEDOB), whose core idea is to explicitly leverage several heterogeneous subnetworks for objects and backgrounds.

Our method handles dense, mutually overlapping objects well and generates texture-consistent backgrounds.
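To make the disentangling idea more concrete, the sketch below shows one way a scene could be split into the region a background generator would handle and the per-instance regions object subnetworks would handle. `split_objects_and_background` is a hypothetical helper, not part of the SIEDOB code; it assumes Cityscapes-style label and instance maps given as 2D lists, and it stops at the split (SIEDOB's actual subnetworks and learned fusion are not shown).

```python
from typing import Dict, List, Set, Tuple

Grid = List[List[int]]


def split_objects_and_background(
    label_map: Grid, inst_map: Grid, thing_ids: Set[int]
) -> Tuple[Grid, Dict[int, Grid]]:
    """Split a scene into one background mask and one binary mask per object.

    Hypothetical helper for illustration: a pixel is foreground if its
    semantic class is in `thing_ids`, and its value in `inst_map` decides
    which object mask it belongs to. Everything else is background.
    """
    h, w = len(label_map), len(label_map[0])
    background = [[0] * w for _ in range(h)]
    objects: Dict[int, Grid] = {}
    for y in range(h):
        for x in range(w):
            if label_map[y][x] in thing_ids:
                inst_id = inst_map[y][x]
                if inst_id not in objects:
                    objects[inst_id] = [[0] * w for _ in range(h)]
                objects[inst_id][y][x] = 1
            else:
                background[y][x] = 1
    return background, objects
```

With Cityscapes label IDs (7 = road, 26 = car) and instance IDs of the form `label_id * 1000 + k`, calling `split_objects_and_background(labels, insts, {26})` on a small road scene yields one background mask plus one mask per car.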

- The code has been tested with PyTorch 1.10.1 and Python 3.7.11. We train our model on an NVIDIA RTX 3090 GPU.

Here we take the Cityscapes dataset as an example. Download the original Cityscapes dataset, then create the folder data/cityscapes512x256/ with the subfolders train/, test/, and object_datasets/.

train/ contains the subfolders images/, labels/, inst_map/, and images2048/. We resize all training images to 512x256 resolution.

- images/: Original images.
- labels/: Segmentation maps.
- inst_map/: Instance maps.
- images2048/: Original 2048x1024-resolution images, used to crop high-quality object images for training.
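Because images2048/ holds the same scenes at 4 times the 512x256 training resolution (2048 / 512 = 1024 / 256 = 4), a box found on the low-resolution instance map can be scaled by 4 and used to crop the high-resolution image. The sketch below assumes that workflow; `instance_bbox` and `scale_box` are hypothetical names, and the README does not say how the authors pad or resize their object crops.

```python
from typing import List, Optional, Tuple

# (left, top, right, bottom); right and bottom are exclusive, as in Pillow's Image.crop
Box = Tuple[int, int, int, int]


def instance_bbox(inst_map: List[List[int]], inst_id: int) -> Optional[Box]:
    """Tight bounding box of one instance in a 512x256 instance map (None if absent)."""
    coords = [(x, y) for y, row in enumerate(inst_map) for x, v in enumerate(row) if v == inst_id]
    if not coords:
        return None
    xs = [x for x, _ in coords]
    ys = [y for _, y in coords]
    return (min(xs), min(ys), max(xs) + 1, max(ys) + 1)


def scale_box(box: Box, scale: int = 4) -> Box:
    """Map a box from the 512x256 grid onto the 2048x1024 image (2048 / 512 = 4)."""
    left, top, right, bottom = box
    return (left * scale, top * scale, right * scale, bottom * scale)
```

The returned tuple follows the (left, upper, right, lower) convention of Pillow's `Image.crop`, so cropping the matching images2048/ file with `scale_box(box)` would cut out the object at full resolution.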

test/ contains only images/, labels/, and inst_map/. We resize all test images to 512x256 resolution and randomly crop them into 256x256 patches.
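A minimal sketch of that random crop, assuming 512x256 inputs: since the image is already 256 pixels tall, only the horizontal offset varies (from 0 to 256). `random_crop_box` is a hypothetical helper, and the README does not say whether the test crops are seeded; passing a seeded `random.Random` makes the patches reproducible.

```python
import random
from typing import Optional, Tuple


def random_crop_box(
    width: int = 512,
    height: int = 256,
    size: int = 256,
    rng: Optional[random.Random] = None,
) -> Tuple[int, int, int, int]:
    """Pick a random size x size window (left, top, right, bottom) inside a width x height image."""
    if rng is None:
        rng = random.Random()
    # randint is inclusive on both ends, so the window can touch either border.
    left = rng.randint(0, width - size)
    top = rng.randint(0, height - size)  # always 0 for a 512x256 image and size 256
    return (left, top, left + size, top + size)
```

The same box should be applied to the matching files in images/, labels/, and inst_map/ so that the three stay pixel-aligned.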

We crop object images of each foreground category from the original-resolution images to build object_datasets/, which has the subfolders train/ and test/, each with its own images/ and inst_map/ subfolders.
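The empty folder layout described in this section can be created in one go with `pathlib`. This is a sketch that only mirrors the folder names given in this README; the dataset files themselves still have to be generated, and `make_skeleton` is a hypothetical helper.

```python
from pathlib import Path
from typing import Dict, List

# Folder layout described in this README (split -> its subfolders).
LAYOUT: Dict[str, List[str]] = {
    "train": ["images", "labels", "inst_map", "images2048"],
    "test": ["images", "labels", "inst_map"],
    "object_datasets/train": ["images", "inst_map"],
    "object_datasets/test": ["images", "inst_map"],
}


def make_skeleton(root: Path = Path("data/cityscapes512x256")) -> List[Path]:
    """Create (if needed) every folder in LAYOUT under `root` and return their paths."""
    paths = []
    for split, subdirs in LAYOUT.items():
        for sub in subdirs:
            path = root / split / sub
            # exist_ok=True leaves folders that already exist untouched
            path.mkdir(parents=True, exist_ok=True)
            paths.append(path)
    return paths
```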

We include some examples in data/.

data/predefined_mask/ and data/cityscapes512x256/object_datasets/object_mask/ contain pre-generated masks for evaluation.

Step1: Train an object inpainting sub-network.

Go into instance_inpainting/ and run:

python train.py

Step2: Train a Style-Diversity Object Generator.

Go into instance_style/ and run:

python train.py

Step3: Train a background generator.

Go into background/ and run:

python train.py

Step4: Train a final fusion network.

Go into fusion/ and run:

python train.py
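The four training steps can be chained with a small driver script like the one below. It is a sketch, not part of the repository: it assumes it is run from the repository root, that each `train.py` works with its default arguments (as the commands above suggest), and that running the stages in the listed order is safe, since the README does not say whether Steps 1 to 3 depend on each other. With `dry_run=True` it only returns the planned commands.

```python
import subprocess
import sys
from pathlib import Path
from typing import List, Tuple

# Training order from Step1 to Step4; fusion/ comes last.
STAGES = ["instance_inpainting", "instance_style", "background", "fusion"]


def train_all(repo_root: Path = Path("."), dry_run: bool = False) -> List[Tuple[Path, List[str]]]:
    """Run `python train.py` inside each stage folder, stopping at the first failure."""
    plan = []
    for stage in STAGES:
        workdir = repo_root / stage
        cmd = [sys.executable, "train.py"]  # same interpreter as this script
        plan.append((workdir, cmd))
        if not dry_run:
            # check=True raises CalledProcessError if a stage exits non-zero
            subprocess.run(cmd, cwd=workdir, check=True)
    return plan
```

Stopping at the first failing stage avoids training the fusion network on top of a sub-network that never finished.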

Download the pretrained sub-networks from BaiDuYun (password: rlg6) or Google Drive, and set the relevant paths.

Go into fusion/ and run:

python test_one_image.py
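Before running `test_one_image.py`, a quick check that every downloaded checkpoint sits where you pointed the code can save a failed run. The dictionary keys and file names below are placeholders, not the actual names used in the release or read by `test_one_image.py`; adjust them to match the paths you set.

```python
from pathlib import Path
from typing import Dict, List

# Placeholder paths: replace with where you unpacked the downloaded
# sub-networks and the paths test_one_image.py actually reads.
CHECKPOINTS: Dict[str, Path] = {
    "instance_inpainting": Path("checkpoints/instance_inpainting.pth"),
    "instance_style": Path("checkpoints/instance_style.pth"),
    "background": Path("checkpoints/background.pth"),
    "fusion": Path("checkpoints/fusion.pth"),
}


def missing_checkpoints(paths: Dict[str, Path]) -> List[str]:
    """Names of the sub-networks whose checkpoint file is not on disk."""
    return [name for name, path in paths.items() if not path.is_file()]
```

`missing_checkpoints(CHECKPOINTS)` returns an empty list once every listed file is in place.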

If you use this code for your research, please cite our paper.

@InProceedings{Luo_2023_CVPR,
  author    = {Luo, Wuyang and Yang, Su and Zhang, Xinjian and Zhang, Weishan},
  title     = {SIEDOB: Semantic Image Editing by Disentangling Object and Background},
  booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},
  month     = {June},
  year      = {2023},
  pages     = {1868-1878}
}