Learning to Cluster under Domain Shift

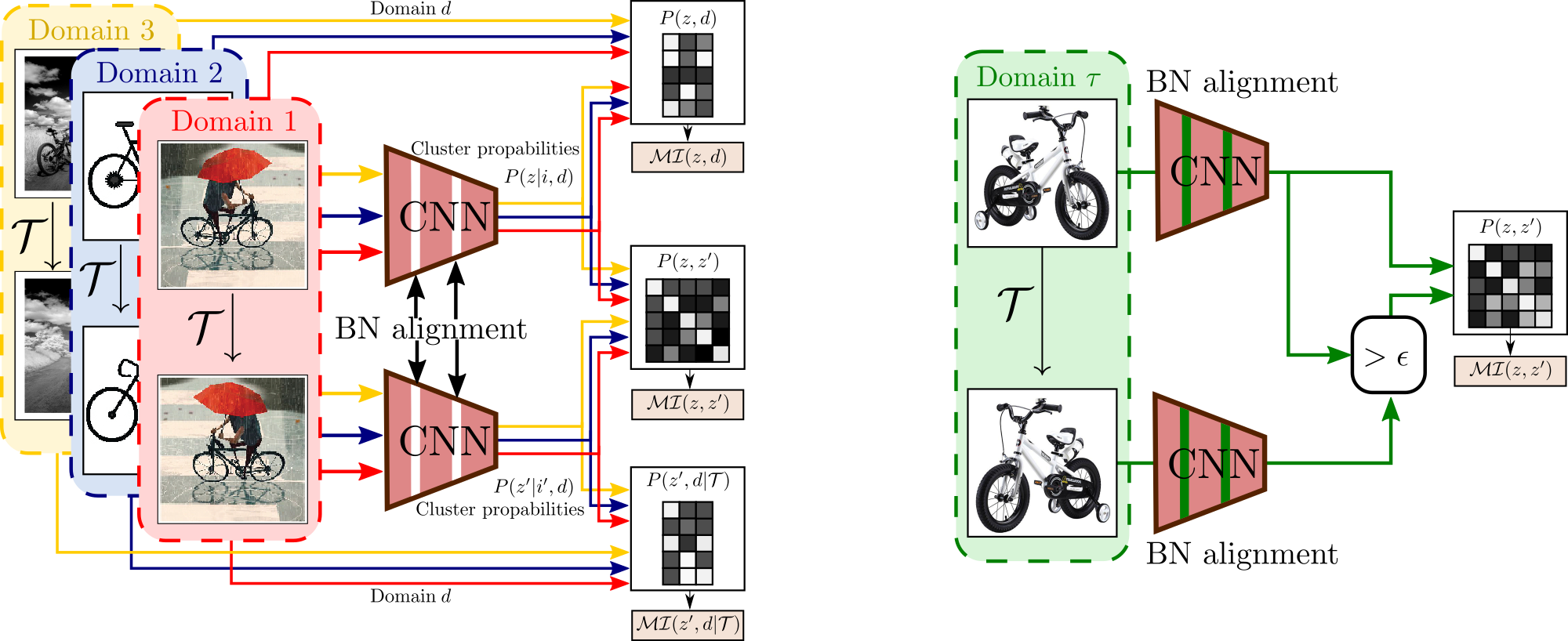

Figure 1. Illustration of the proposed Unsupervised Clustering under Domain Shift setting.


Willi Menapace, Stéphane Lathuilière, Elisa Ricci

ECCV 2020

Paper: arXiv

Abstract: While unsupervised domain adaptation methods based on deep architectures have achieved remarkable success in many computer vision tasks, they rely on a strong assumption, i.e. labeled source data must be available. In this work we overcome this assumption and we address the problem of transferring knowledge from a source to a target domain when both source and target data have no annotations. Inspired by recent works on deep clustering, our approach leverages information from data gathered from multiple source domains to build a domain-agnostic clustering model which is then refined at inference time when target data become available. Specifically, at training time we propose to optimize a novel information-theoretic loss which, coupled with domain-alignment layers, ensures that our model learns to correctly discover semantic labels while discarding domain-specific features. Importantly, our architecture design ensures that at inference time the resulting source model can be effectively adapted to the target domain without having access to source data, thanks to feature alignment and self-supervision. We evaluate the proposed approach in a variety of settings, considering several domain adaptation benchmarks, and we show that our method is able to automatically discover relevant semantic information even in the presence of few target samples and yields state-of-the-art results on multiple domain adaptation benchmarks. We make our source code public.

2. Proposed Method

Our ACIDS framework operates in two phases: training on the source domains and adaptation to the target domain.

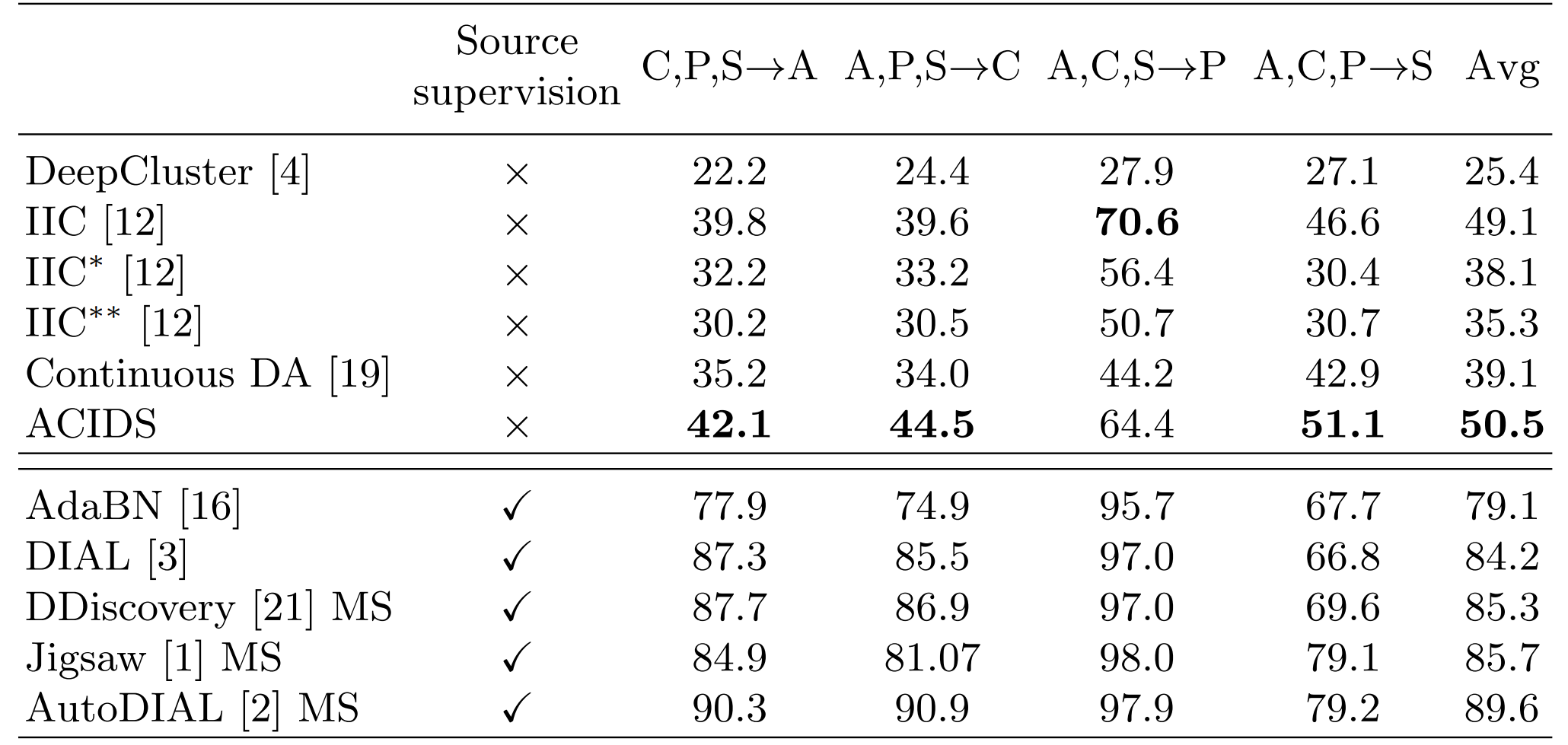

In the first phase, we employ an information-theoretic loss based on mutual information maximization to discover clusters in the source domains. To favor the emergence of clusters based on semantic information rather than on differences in domain style, we propose a novel mutual information loss for domain alignment, paired with feature alignment based on batch normalization (BN).
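The mutual information objective can be illustrated with the IIC (Invariant Information Clustering) loss of Ji et al. (2019), a well-known deep clustering objective that maximizes the mutual information between the cluster assignments of an image and of a transformed copy of it. This is a swapped-in reference formulation, not the exact ACIDS loss, whose domain-alignment term is not detailed here. Likewise, `domain_specific_batch_norm` is a hypothetical minimal sketch that assumes BN-based alignment means normalizing the features of each domain with that domain's own batch statistics. NumPy is used only to keep the example self-contained; a training implementation would use an autodiff framework and minimize the negative mutual information.

```python
import numpy as np


def iic_mutual_information(p1: np.ndarray, p2: np.ndarray, eps: float = 1e-12) -> float:
    """IIC-style mutual information between the cluster assignments of two views.

    p1, p2: arrays of shape (N, K) holding softmax cluster probabilities for
    N samples and K clusters; row i of p2 comes from a transformed view of the
    same image as row i of p1. The clustering loss would be -I.
    """
    # Joint distribution over cluster pairs, averaged over the batch.
    joint = p1.T @ p2 / p1.shape[0]          # shape (K, K)
    joint = (joint + joint.T) / 2.0          # symmetrize the joint
    joint = np.clip(joint, eps, None)        # avoid log(0)
    # Marginal distributions of each view.
    pi = joint.sum(axis=1, keepdims=True)    # shape (K, 1)
    pj = joint.sum(axis=0, keepdims=True)    # shape (1, K)
    return float((joint * (np.log(joint) - np.log(pi) - np.log(pj))).sum())


def domain_specific_batch_norm(features: np.ndarray, domains: np.ndarray,
                               eps: float = 1e-5) -> np.ndarray:
    """Hypothetical helper: standardize features separately for each domain,
    so that every domain is mapped to zero mean and unit variance."""
    out = np.empty_like(features, dtype=float)
    for d in np.unique(domains):
        mask = domains == d
        x = features[mask]
        out[mask] = (x - x.mean(axis=0)) / np.sqrt(x.var(axis=0) + eps)
    return out
```

Confident, balanced assignments that agree across views give a mutual information close to log K, while uniform predictions give zero. The degenerate solutions a clustering model could fall into (e.g. assigning every sample to one cluster) score poorly under this objective.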

In the second phase, we adapt the model to the target domain using a variant of the mutual information loss from the first phase that uses high-confidence points as pivots to stabilize the adaptation process.
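The following is a hypothetical illustration of pivot selection, not the ACIDS implementation. It assumes that "high-confidence points" are target samples whose most likely cluster has probability above a threshold, and that these pivots stabilize adaptation through a cross-entropy term against their own predicted cluster. The threshold value and both function names are assumptions.

```python
import numpy as np


def select_confident_pivots(probs: np.ndarray, threshold: float = 0.9):
    """Return indices and cluster labels of the samples whose top cluster
    probability is at least `threshold` (hypothetical helper)."""
    confidence = probs.max(axis=1)
    labels = probs.argmax(axis=1)
    idx = np.flatnonzero(confidence >= threshold)
    return idx, labels[idx]


def pivot_cross_entropy(probs: np.ndarray, idx: np.ndarray, labels: np.ndarray,
                        eps: float = 1e-12) -> float:
    """Mean cross-entropy of the pivots against their own pseudo-labels;
    returns 0.0 when no sample passes the confidence threshold."""
    if idx.size == 0:
        return 0.0
    return float(-np.log(probs[idx, labels] + eps).mean())
```

For example, with target predictions `[[0.95, 0.05], [0.6, 0.4], [0.02, 0.98]]` and a threshold of 0.9, only the first and third samples become pivots, with clusters 0 and 1 respectively. The ambiguous second sample does not contribute to the pivot term.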

Figure 2. Illustration of the proposed ACIDS method for the Unsupervised Clustering under Domain Shift setting. (Left) Training on the source domains; (Right) adaptation to the target domain.

3. Results

3.1 Numerical Evaluation

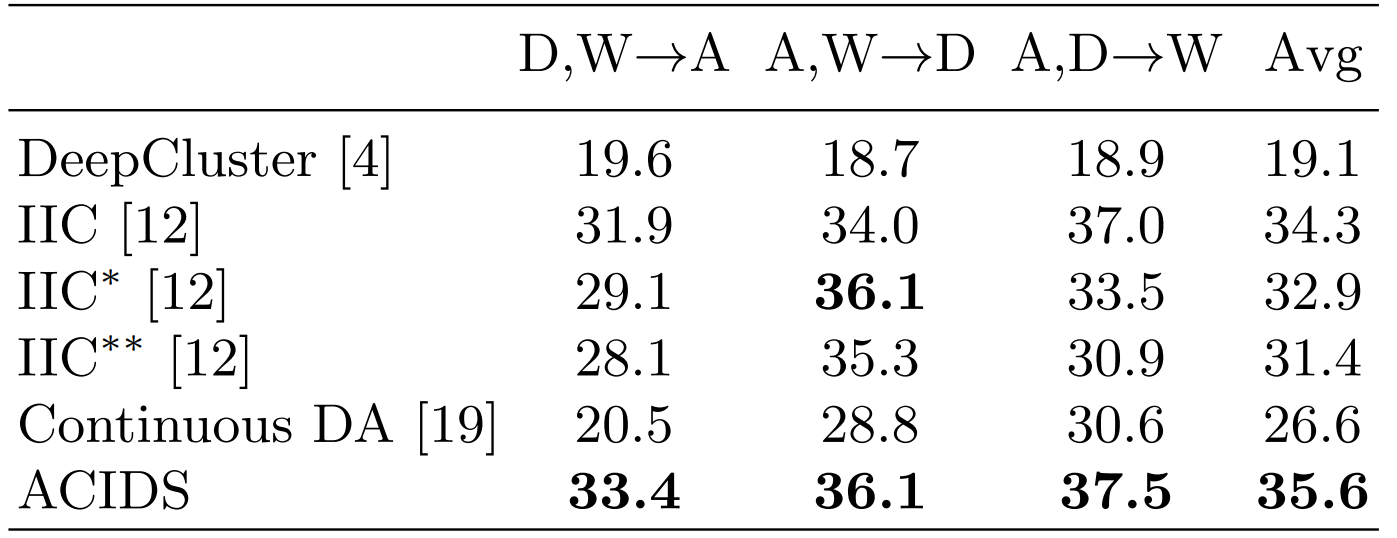

Table 1. Comparison of the proposed approach with SOTA on the PACS dataset. Accuracy (%) on the target domain. Methods with source supervision are provided as upper bounds. MS denotes multi-source DA methods.

Table 2. Comparison of the proposed approach with SOTA on the Office31 dataset. Accuracy (%) on the target domain.

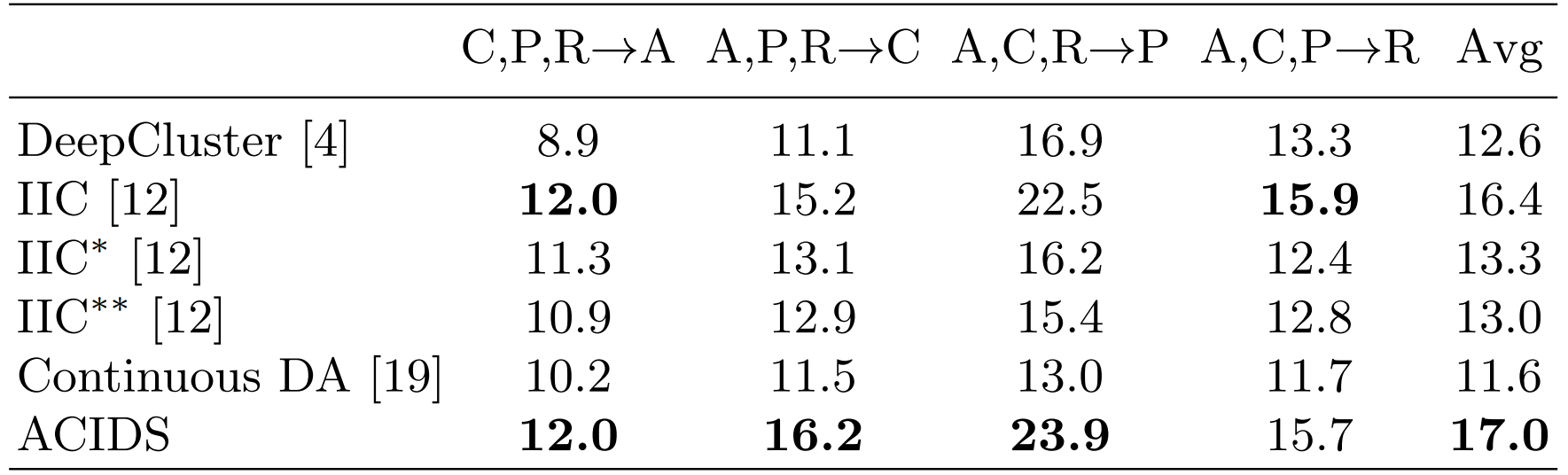

Table 3. Comparison of the proposed approach with SOTA on the Office-Home dataset. Accuracy (%) on the target domain.

3.2 Qualitative Evaluation

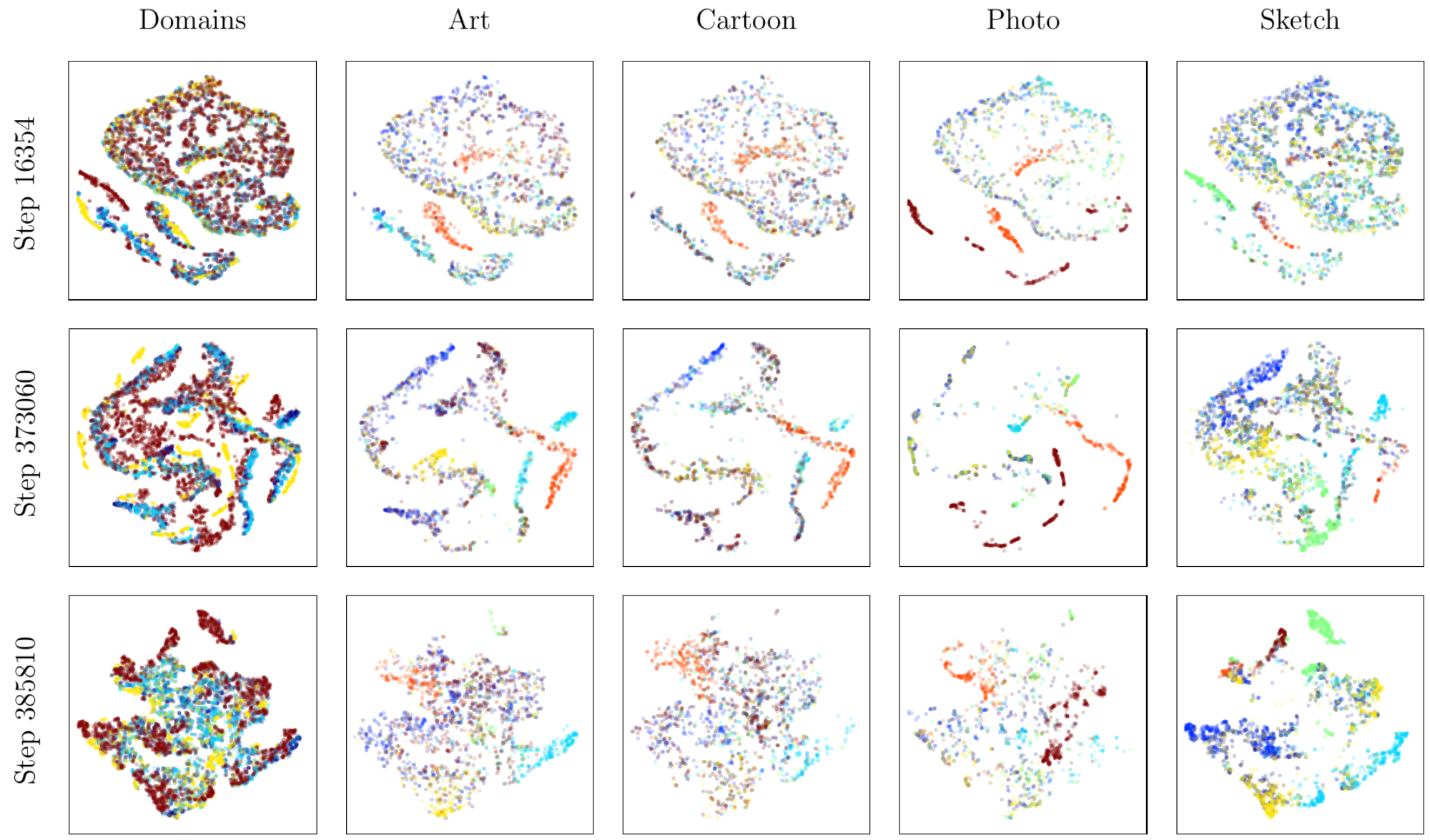

Figure 3. Evolution of the feature space during training on PACS with Art, Cartoon and Photo as the source domains and Sketch as the target domain. The first two rows depict feature evolution during training on the source domains, the last row depicts the feature space after adaptation to the target domain. In the first column, color represents the domain, while in the others, color represents the ground truth class of each point.

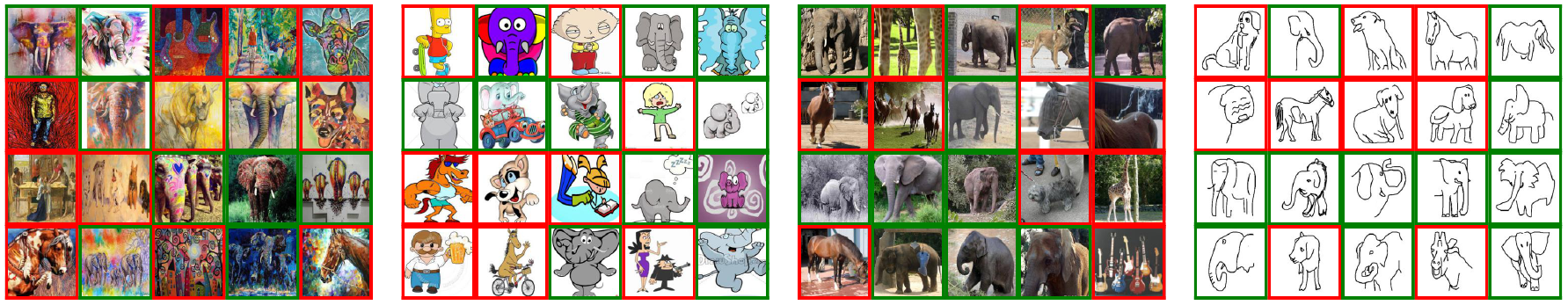

Figure 4. Clusters corresponding to the PACS "Elephant" class from Art, Cartoon, Photo and Sketch domains.

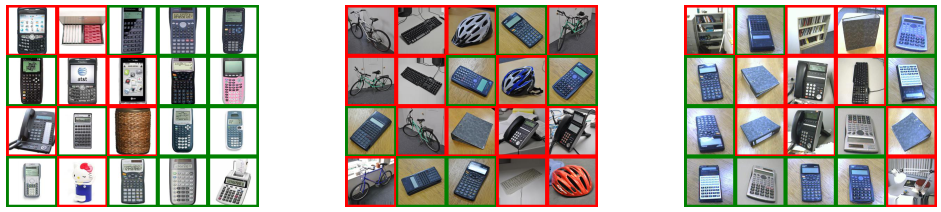

Figure 5. Clusters corresponding to the Office31 "Calculator" class from the Amazon, DSLR and Webcam domains.

4. Configuration and Execution

We provide a Conda environment, which we recommend for running the code. The environment can be recreated by executing:

conda env create -f environment.yml

Activate the environment using

conda activate acids

Running the model consists of two phases. The first, training on the source domains, is performed with the following command:

python -m codebase.scripts.cluster.source_training --config config/<source_training_config_file>

Once training on the source domains is completed, adaptation to the target domain is performed with the following command. Note that the first execution only creates the results directory and then returns an error. A valid checkpoint named start.pth.tar, produced by the source domain training phase, must be placed in the newly created directory; it will be used as the starting point for adaptation to the target domain.
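Copying the checkpoint between the two phases can be scripted. The following is a hypothetical helper: the function name and both paths are placeholders, because the filename of the source training checkpoint and the name of the results directory depend on your configuration files.

```python
import shutil
from pathlib import Path


def install_start_checkpoint(source_ckpt, target_results_dir) -> Path:
    """Copy a source-training checkpoint into the target adaptation results
    directory under the name start.pth.tar (hypothetical helper)."""
    target_dir = Path(target_results_dir)
    if not target_dir.is_dir():
        # The directory is created by the first (failing) target_adaptation run.
        raise FileNotFoundError(
            f"{target_dir} does not exist; run target_adaptation once to create it"
        )
    dest = target_dir / "start.pth.tar"
    shutil.copyfile(source_ckpt, dest)
    return dest
```

A typical sequence would be: run target adaptation once, call the helper with the placeholder paths replaced by your actual checkpoint and results directory, then rerun target adaptation.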

python -m codebase.scripts.cluster.target_adaptation --config config/<target_adaptation_config_file>

The state of each training process can be monitored with

tensorboard --logdir results/<results_dir>/runs

5. Data

The PACS, Office31 and Office-Home datasets must be placed under the data directory, and each image must be resized to a resolution of 150x150 pixels. For convenience, we provide an already configured version of each dataset.
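If you prefer to prepare the datasets yourself, a minimal resizing sketch using Pillow follows. It assumes that the original directory tree should be mirrored unchanged and that images are resized directly to 150x150 without preserving aspect ratio. The authors' preprocessing, including the interpolation method and any cropping, may differ, and the function name is hypothetical.

```python
from pathlib import Path

from PIL import Image

IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png", ".bmp"}


def resize_dataset(src_root, dst_root, size=(150, 150)) -> int:
    """Resize every image under src_root to `size` and write it to the same
    relative path under dst_root; returns the number of images written."""
    src_root, dst_root = Path(src_root), Path(dst_root)
    count = 0
    for path in sorted(src_root.rglob("*")):
        # Skip directories and non-image files.
        if not path.is_file() or path.suffix.lower() not in IMAGE_SUFFIXES:
            continue
        out = dst_root / path.relative_to(src_root)
        out.parent.mkdir(parents=True, exist_ok=True)
        with Image.open(path) as img:
            img.convert("RGB").resize(size, Image.BILINEAR).save(out)
        count += 1
    return count
```

Writing to a separate output root keeps the original images intact, so you can rerun the script with a different interpolation method if needed.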

Google Drive: link.

6. Citation

@inproceedings{menapace2020learning,
  author    = {Willi Menapace and
               St{\'{e}}phane Lathuili{\`{e}}re and
               Elisa Ricci},
  title     = {Learning to Cluster Under Domain Shift},
  booktitle = {Computer Vision - {ECCV} 2020 - 16th European Conference, Glasgow,
               UK, August 23-28, 2020, Proceedings, Part {XXVIII}},
  series    = {Lecture Notes in Computer Science},
  volume    = {12373},
  pages     = {736--752},
  publisher = {Springer},
  year      = {2020}
}