This repository provides the implementation of the paper:

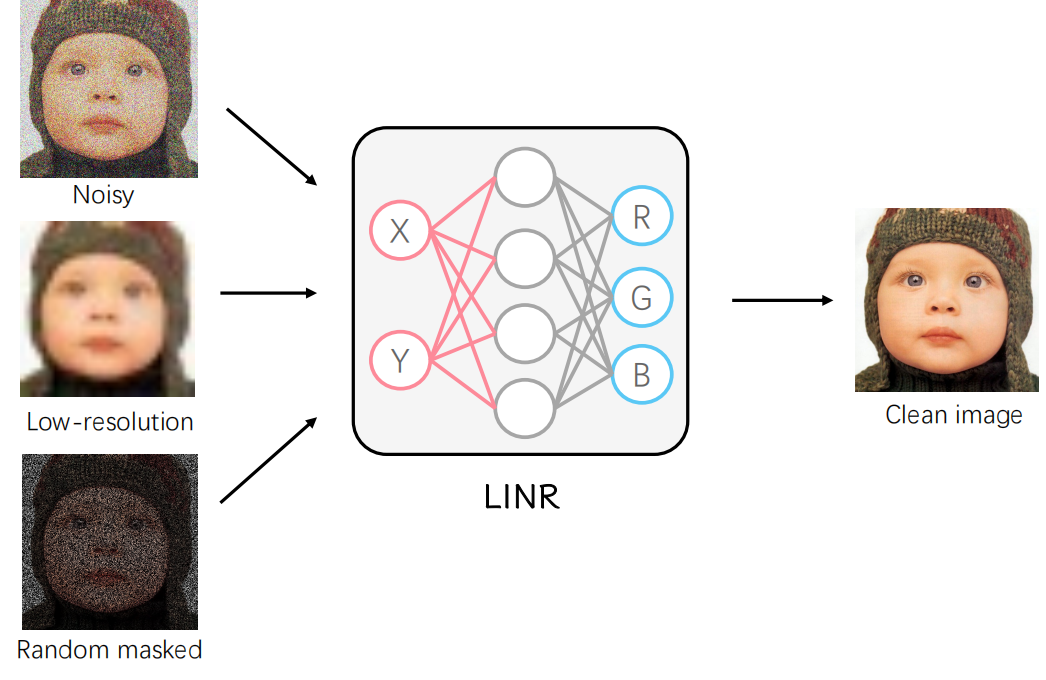

Wentian Xu and Jianbo Jiao. Revisiting Implicit Neural Representations in Low-Level Vision. In ICLR-NF (2023).

We provide Jupyter Notebooks for each task in the paper, as well as a denoising example as a standalone .py script.

To install the dependencies into a new conda environment, simply run:

conda env create -f environment.yml

source activate linr

Alternatively, you may install them manually:

conda create --name linr

source activate linr

conda install python=3.9

conda install pytorch=1.12 torchvision cudatoolkit=11.6 -c pytorch

conda install numpy

conda install matplotlib

conda install scikit-image

conda install jupyter

- Change the file path in the Jupyter Notebooks or in `train_denoising.py` to the image you want.
- Run each cell in the Jupyter Notebooks, or run the code in `train_denoising.py`.
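Before running the notebooks or the script, it can help to confirm that the packages installed above are visible from the active environment. The following is a minimal sketch that uses only the Python standard library; the `REQUIRED` mapping and the `check_environment` helper are illustrative names and are not part of this repository.

```python
from importlib.metadata import PackageNotFoundError, version
from importlib.util import find_spec

# Import name -> distribution name, taken from the install commands above.
# This mapping is an assumption for illustration, not a file in the repository.
REQUIRED = {
    "torch": "torch",
    "torchvision": "torchvision",
    "numpy": "numpy",
    "matplotlib": "matplotlib",
    "skimage": "scikit-image",
}


def check_environment(required=REQUIRED):
    """Return {import_name: version string, "unknown", or None if missing}."""
    report = {}
    for module_name, dist_name in required.items():
        # find_spec locates a top-level module without importing it.
        if find_spec(module_name) is None:
            report[module_name] = None
            continue
        try:
            report[module_name] = version(dist_name)
        except PackageNotFoundError:
            # The module is importable but exposes no distribution metadata.
            report[module_name] = "unknown"
    return report


if __name__ == "__main__":
    for name, ver in check_environment().items():
        print(f"{name:12s} {'MISSING' if ver is None else ver}")
```

Any package reported as `MISSING` can be installed with the corresponding `conda install` command listed above.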

The data used in the paper is stored in the Data folder. You can also use your own data by changing the file path.
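As a rough illustration of pointing the code at your own image, the sketch below resolves a file name either directly or relative to the Data folder and loads it as a floating-point array. The helper names `resolve_image_path` and `load_image`, and the list of accepted suffixes, are assumptions made for this example; the notebooks and `train_denoising.py` may read images differently.

```python
from pathlib import Path

# Suffixes accepted by this sketch (an assumption, not a repository constraint).
SUPPORTED_SUFFIXES = {".png", ".jpg", ".jpeg", ".bmp", ".tif", ".tiff"}


def resolve_image_path(name, data_dir="Data"):
    """Return the path to an image, looking in data_dir if name is not a file."""
    path = Path(name)
    if not path.is_file():
        path = Path(data_dir) / name
    if not path.is_file():
        raise FileNotFoundError(f"No image named {name!r} here or in {data_dir!r}")
    if path.suffix.lower() not in SUPPORTED_SUFFIXES:
        raise ValueError(f"Unsupported image type: {path.suffix!r}")
    return path


def load_image(name, data_dir="Data"):
    """Load an image as a float array using scikit-image."""
    # Imported lazily so that path resolution works without scikit-image.
    from skimage import img_as_float, io

    return img_as_float(io.imread(str(resolve_image_path(name, data_dir))))
```

The returned path (or array) can then replace the hard-coded file path in a notebook cell or in the script.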

If you find LINR useful, please cite the following BibTeX entry:

@inproceedings{linr,
  title={Revisiting Implicit Neural Representations in Low-Level Vision},
  author={Wentian Xu and Jianbo Jiao},
  booktitle={International Conference on Learning Representations Workshop},
  year={2023},
}