MIGC: Multi-Instance Generation Controller for Text-to-Image Synthesis

Dewei Zhou, You Li, Fan Ma, Xiaoting Zhang, Yi Yang

conda create -n MIGC_diffusers python=3.9 -y

conda activate MIGC_diffusers

pip install -r requirement.txt

pip install -e .

Download MIGC_SD14.ckpt (219M) and place it in the 'pretrained_weights' folder, so that the repository is laid out as follows:

├── pretrained_weights
│ ├── MIGC_SD14.ckpt
├── migc
│ ├── ...
├── bench_file
│ ├── ...
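Before running inference, it can help to confirm that the checkpoint sits where the layout above expects it. The following is a minimal sketch and not part of the MIGC codebase: the helper name `find_missing_files` is hypothetical, and the expected path is simply copied from the tree above. It assumes it is run from the repository root.

```python
from pathlib import Path

# Path copied from the directory layout above, relative to the repository root.
EXPECTED_FILES = ("pretrained_weights/MIGC_SD14.ckpt",)


def find_missing_files(repo_root, expected=EXPECTED_FILES):
    """Return the expected files that are absent under repo_root (hypothetical helper)."""
    root = Path(repo_root)
    return [rel for rel in expected if not (root / rel).is_file()]


if __name__ == "__main__":
    missing = find_missing_files(".")
    if missing:
        print("Missing files:", ", ".join(missing))
    else:
        print("All expected files are in place.")
```

If the check reports a missing file, download the checkpoint again or move it into 'pretrained_weights' before running the inference scripts.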

By using the following command, you can quickly generate an image with MIGC.

CUDA_VISIBLE_DEVICES=0 python inference_single_image.py

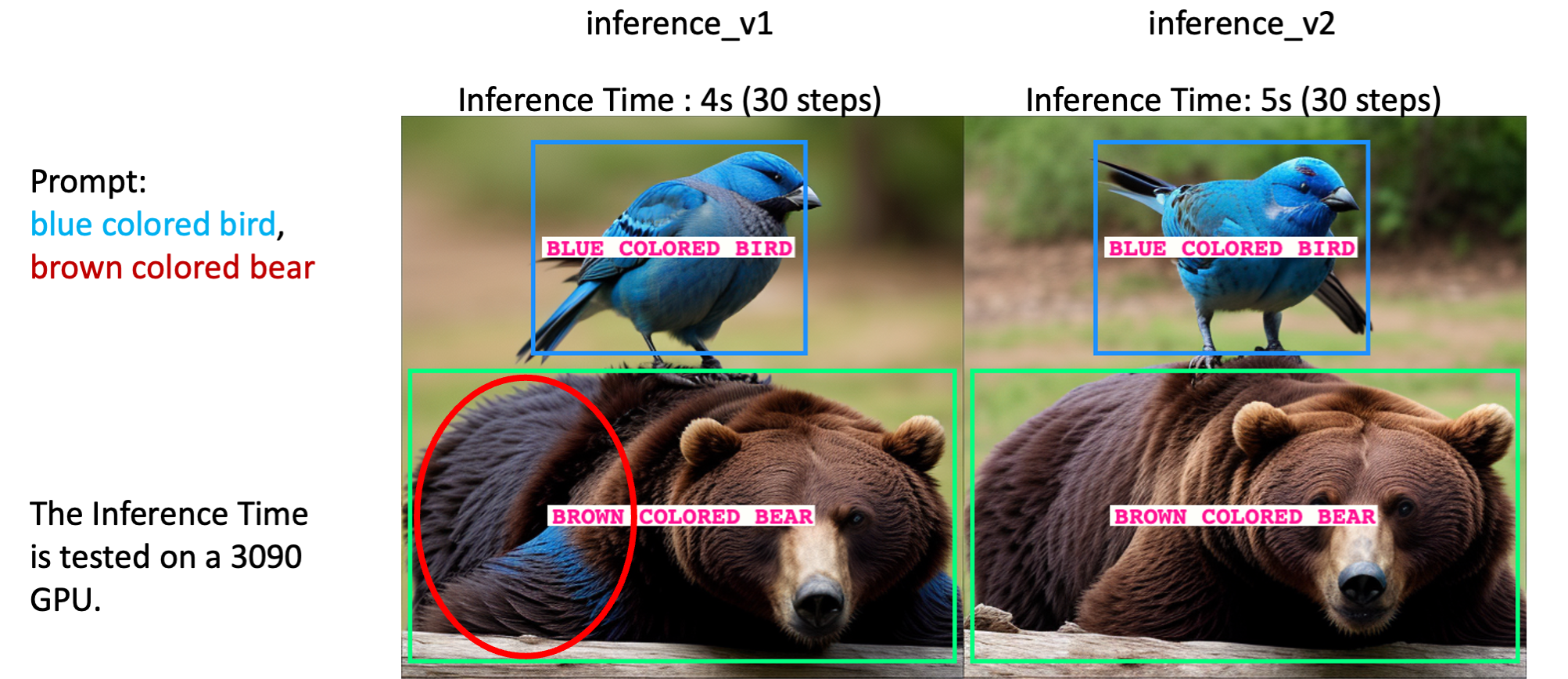

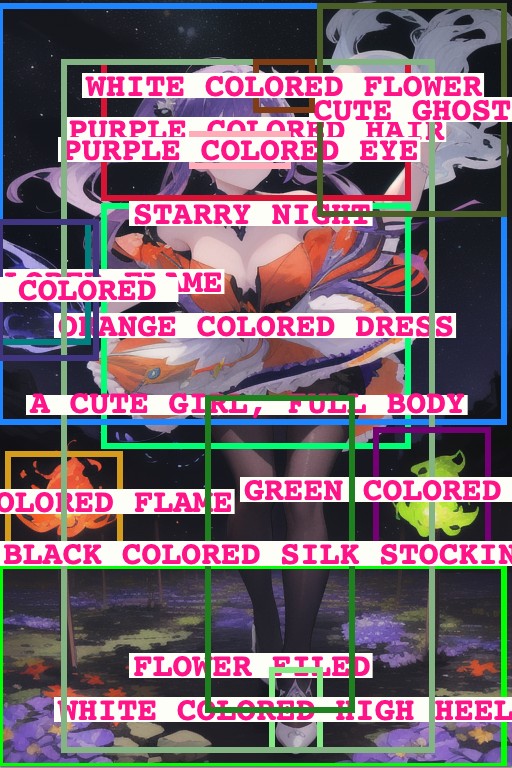

The following is an example image generated with Stable Diffusion v1.4 as the base model.

🚀 Enhanced Attribute Control: For finer control over attributes, try running python inferencev2_single_image.py. This version, InferenceV2, reduces attribute leakage at the cost of a slight increase in inference time, and raises the Instance Success Rate on the COCO-MIG benchmark from 66% to 68%. Increasing NaiveFuserSteps in inferencev2_single_image.py can further strengthen attribute control.

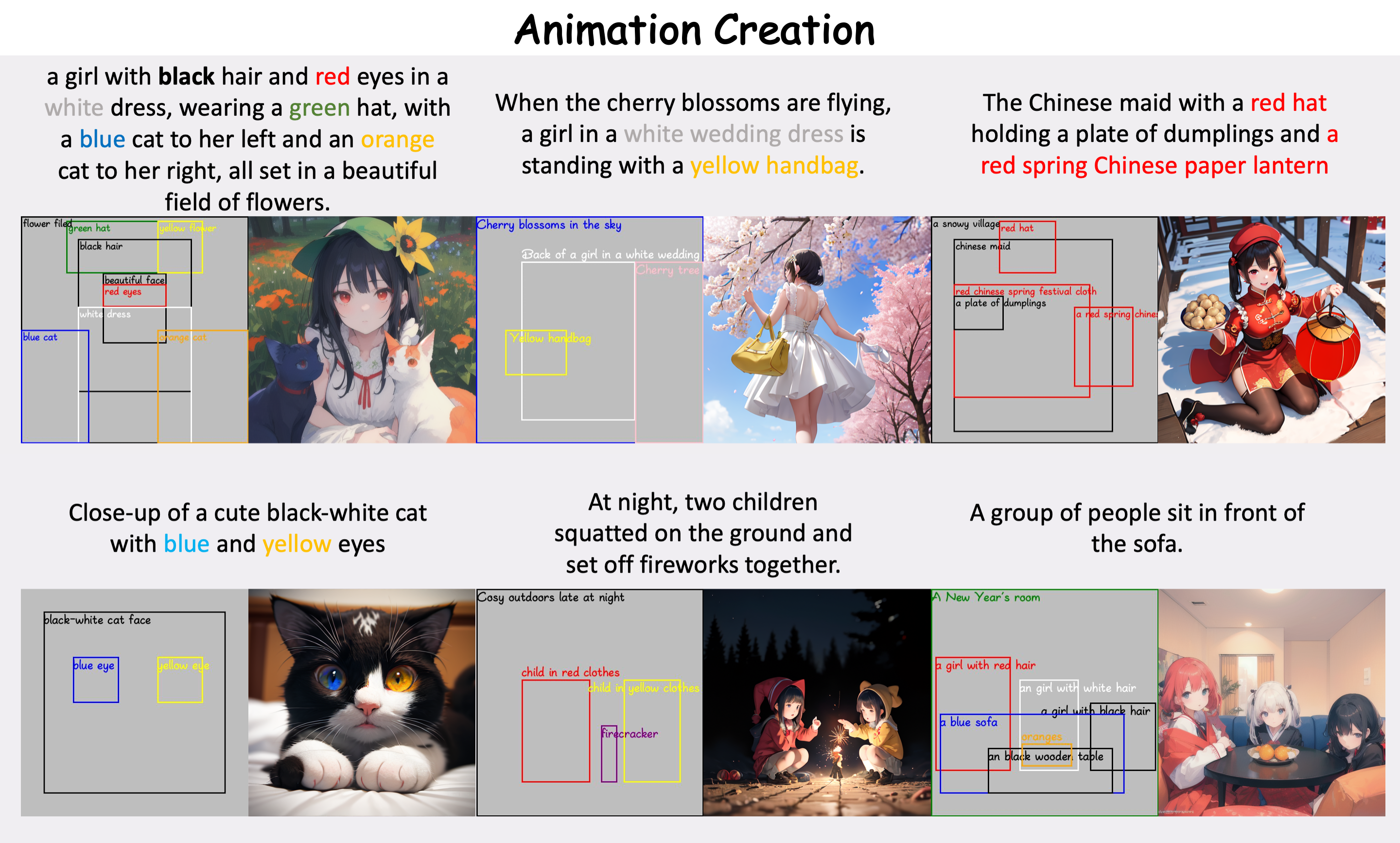

💡 Versatile Image Generation: MIGC is a plug-and-play controller. By swapping in different base generator weights, you can obtain results like those shown in our Gallery. For instance:

- 🎨 Cetus-Mix: A base model well suited to animated content.

- 🌆 RV60B1: A base model that specializes in realistic, lifelike images.

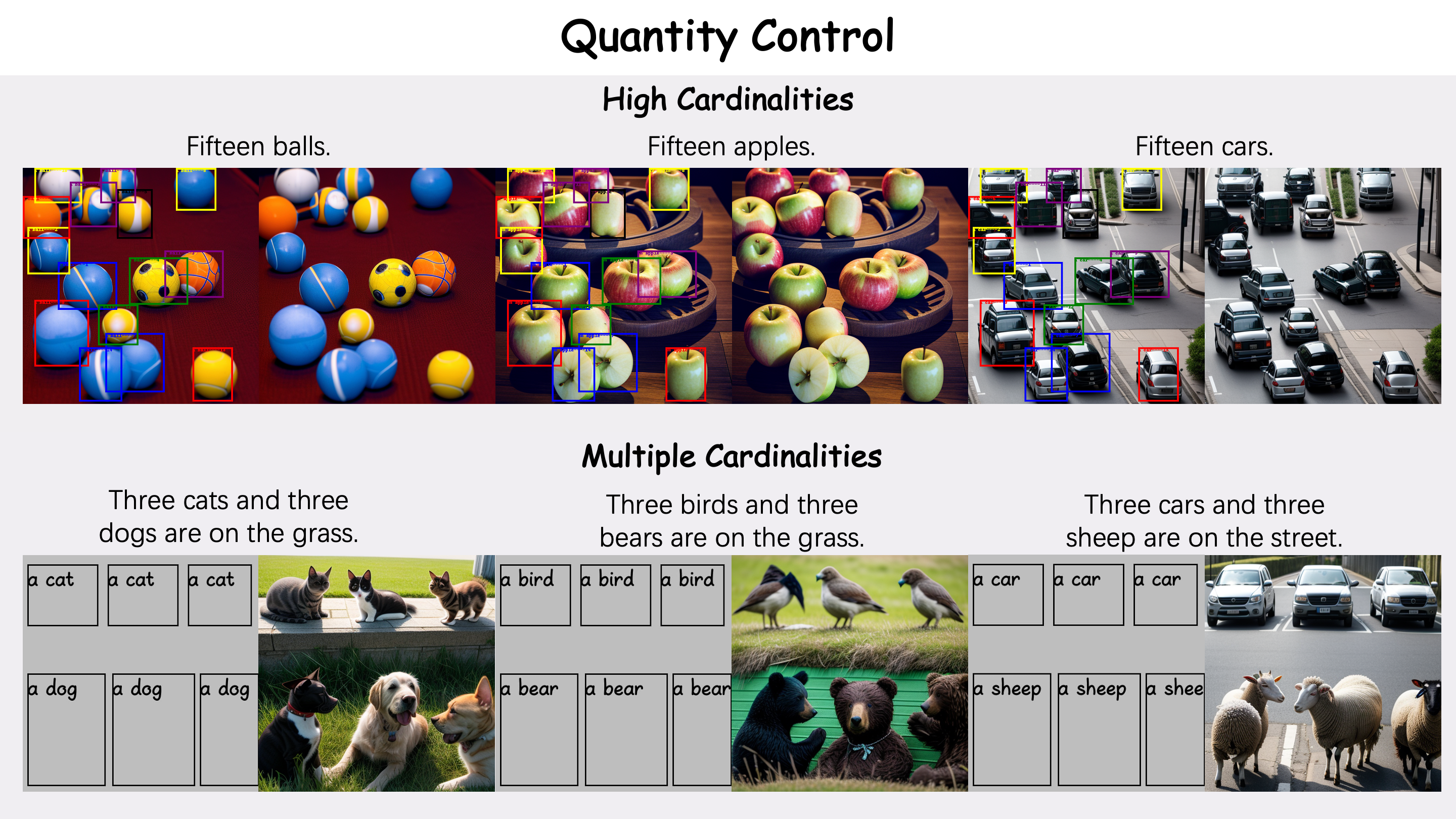

To evaluate the model's position and attribute control, we designed the COCO-MIG benchmark.

The following command runs inference with our method on the COCO-MIG benchmark:

CUDA_VISIBLE_DEVICES=0 python inference_mig_benchmark.py
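The `CUDA_VISIBLE_DEVICES=0` prefix in the command above restricts the script to a single GPU. If you would rather launch the scripts from Python, for example to run several of them on different GPUs, the same effect can be sketched with the standard `subprocess` module. The helper names `build_env` and `run_script` are hypothetical and not part of this repository.

```python
import os
import subprocess
import sys


def build_env(gpu_id, base_env=None):
    """Copy the environment and expose only the given GPU to the child process."""
    env = dict(os.environ if base_env is None else base_env)
    env["CUDA_VISIBLE_DEVICES"] = str(gpu_id)
    return env


def run_script(script, gpu_id=0):
    """Run a script with the current interpreter; raises if it exits non-zero."""
    subprocess.run([sys.executable, script], env=build_env(gpu_id), check=True)


# Example, assuming it is called from the repository root:
# run_script("inference_mig_benchmark.py", gpu_id=0)
```

Copying the environment rather than modifying `os.environ` keeps the GPU restriction local to the child process.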

We sampled 800 images and compared MIGC with InstanceDiffusion, GLIGEN, and other methods on the COCO-MIG benchmark. The results are shown below.

In the table, ISR abbreviates Instance Success Rate.

| Method | MIOU↑ L2 | MIOU↑ L3 | MIOU↑ L4 | MIOU↑ L5 | MIOU↑ L6 | MIOU↑ Avg | ISR↑ L2 | ISR↑ L3 | ISR↑ L4 | ISR↑ L5 | ISR↑ L6 | ISR↑ Avg | Model Type | Publication |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Box-Diffusion | 0.37 | 0.33 | 0.25 | 0.23 | 0.23 | 0.26 | 0.28 | 0.24 | 0.14 | 0.12 | 0.13 | 0.16 | Training-free | ICCV2023 |
| GLIGEN | 0.37 | 0.29 | 0.253 | 0.26 | 0.26 | 0.27 | 0.42 | 0.32 | 0.27 | 0.27 | 0.28 | 0.30 | Adapter | CVPR2023 |
| ReCo | 0.55 | 0.48 | 0.49 | 0.47 | 0.49 | 0.49 | 0.63 | 0.53 | 0.55 | 0.52 | 0.55 | 0.55 | Full model tuning | CVPR2023 |
| InstanceDiffusion | 0.52 | 0.48 | 0.50 | 0.42 | 0.42 | 0.46 | 0.58 | 0.52 | 0.55 | 0.47 | 0.47 | 0.51 | Adapter | CVPR2024 |
| Ours | 0.64 | 0.58 | 0.57 | 0.54 | 0.57 | 0.56 | 0.74 | 0.67 | 0.67 | 0.63 | 0.66 | 0.66 | Adapter | CVPR2024 |
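To make the comparison easier to read, the short sketch below computes the margin between MIGC and the strongest baseline on each averaged metric. The numbers are copied from the Avg columns of the table above (the "Ours" row is labelled MIGC here); the function name `margin_over_best_baseline` is hypothetical and only for illustration.

```python
# Avg columns copied from the COCO-MIG table above: (MIOU, Instance Success Rate).
# The "Ours" row of the table is labelled "MIGC" here.
AVG_SCORES = {
    "Box-Diffusion": (0.26, 0.16),
    "GLIGEN": (0.27, 0.30),
    "ReCo": (0.49, 0.55),
    "InstanceDiffusion": (0.46, 0.51),
    "MIGC": (0.56, 0.66),
}


def margin_over_best_baseline(scores, ours="MIGC", metric=0):
    """Return (best baseline name, ours minus best baseline) for one metric index."""
    baselines = {name: vals[metric] for name, vals in scores.items() if name != ours}
    best = max(baselines, key=baselines.get)
    return best, round(scores[ours][metric] - baselines[best], 2)


if __name__ == "__main__":
    for metric, label in enumerate(["MIOU", "Instance Success Rate"]):
        best, gain = margin_over_best_baseline(AVG_SCORES, metric=metric)
        print(f"{label}: +{gain:.2f} over {best}")
```

On these averages, ReCo is the strongest baseline for both metrics, and MIGC leads it by 0.07 in MIOU and 0.11 in Instance Success Rate.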

We have combined MIGC and GLIGEN-GUI to make art creation more convenient for users, and we will release our code in the next few days.

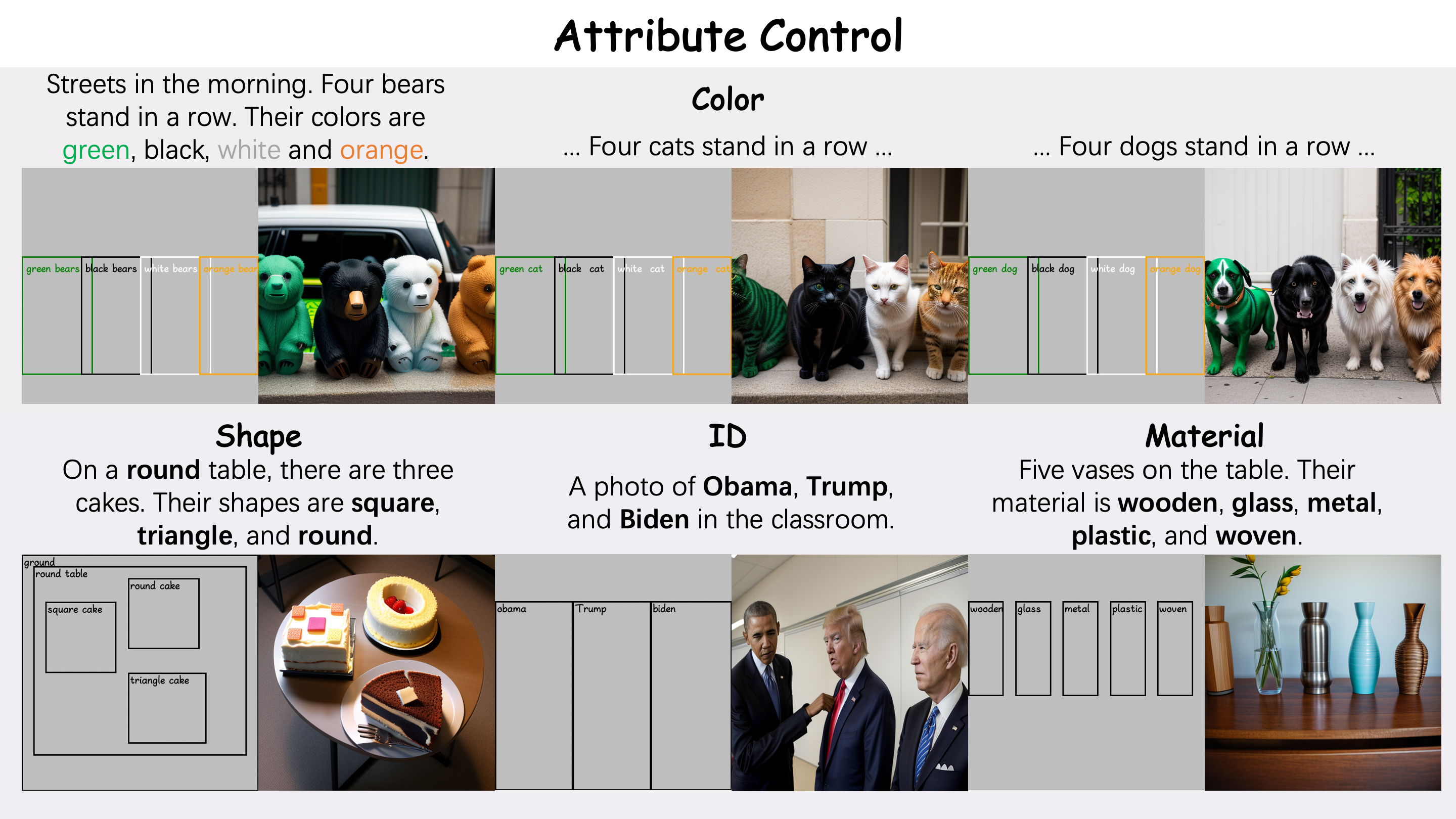

MIGC retains strong attribute and position control when combined with LoRA. We will make this feature available when we release the MIGC-GUI code.

If you have any questions, feel free to contact me by email: zdw1999@zju.edu.cn

Our work is based on Stable Diffusion, diffusers, CLIP, and GLIGEN-GUI. We appreciate their outstanding contributions.

If you find this repository useful, please use the following BibTeX entry for citation.

@misc{zhou2024migc,
  title={MIGC: Multi-Instance Generation Controller for Text-to-Image Synthesis},
  author={Dewei Zhou and You Li and Fan Ma and Xiaoting Zhang and Yi Yang},
  year={2024},
  eprint={2402.05408},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}