People-Scene Interaction (PSI)

This is the code repository for people-scene interaction modeling and for generating 3D people in scenes without people.

License & Citation

Software Copyright License for non-commercial scientific research purposes. Please read carefully the terms and conditions and any accompanying documentation before you download and/or use the model, data and software (the "Model & Software"), including 3D meshes, blend weights, blend shapes, textures, software, scripts, and animations. By downloading and/or using the Model & Software (including downloading, cloning, installing, and any other use of this GitHub repository), you acknowledge that you have read these terms and conditions, understand them, and agree to be bound by them. If you do not agree with these terms and conditions, you must not download and/or use the Model & Software. Any infringement of the terms of this agreement will automatically terminate your rights under this License. Note that this license applies to our PSI work. For the use of SMPL-X, PROX, Matterport3D and other dependencies, you need to agree to their licenses.

When using the code/figures/data/video/etc., please cite our work:

@inproceedings{PSI:2019,
  title = {Generating 3D People in Scenes without People},
  author = {Zhang, Yan and Hassan, Mohamed and Neumann, Heiko and Black, Michael J. and Tang, Siyu},
  booktitle = {Computer Vision and Pattern Recognition (CVPR)},
  month = jun,
  year = {2020},
  url = {https://arxiv.org/abs/1912.02923},
  month_numeric = {6}
}

Code Description

Please check the paths in the scripts before running the code; otherwise, errors will occur.

Content

| Path | Description |
| --- | --- |
| chamfer_pytorch | The third-party dependency for computing the Chamfer loss; see below. |
| human_body_prior | The third-party dependency for VPoser; see below. |
| data/ade20_to_mp3dlabel.npy | The dictionary mapping annotations from ADE20K to Matterport3D. |
| data/resnet18.pth | The pre-trained ResNet used for scene encoding in our work. |
| frontend_sh_scripts | The bash scripts that call the Python scripts. |
| source | The Python code for the model, data loading, training, testing, and fitting. |
| utils | The Python code for evaluation, visualization, and other utilities. |
| demo.ipynb | The interactive demo. See images/PSI_demo_use.mp4 for how to use it. |
| checkpoints | Trained models are placed here. |
| body_models | The SMPL-X body mesh model and VPoser; see below. |
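The label map in data/ade20_to_mp3dlabel.npy is described only as a dictionary. The following is a minimal sketch of applying it to an ADE20K segmentation map, assuming the file stores a pickled Python dict from ADE20K class IDs to Matterport3D class IDs (loaded with np.load(..., allow_pickle=True).item()) and that labels are non-negative integers. load_label_map and remap_labels are hypothetical helpers, not functions from this repository; check the loading code in source for how the map is actually consumed.

```python
import numpy as np


def load_label_map(path):
    """Load the ADE20K -> Matterport3D mapping.

    Assumption: the .npy file holds a pickled dict {ade20k_id: mp3d_id}.
    """
    return np.load(path, allow_pickle=True).item()


def remap_labels(seg, mapping, default=0):
    """Translate every label in an integer segmentation map via `mapping`.

    Labels missing from `mapping` are set to `default` (a hypothetical choice).
    """
    seg = np.asarray(seg, dtype=np.int64)
    # Build a lookup table large enough for both the image labels and the keys.
    size = max(int(seg.max()), int(max(mapping, default=0))) + 1
    lut = np.full(size, default, dtype=np.int64)
    for src, dst in mapping.items():
        lut[int(src)] = int(dst)
    # Fancy indexing applies the table to every pixel at once.
    return lut[seg]
```

A lookup table keeps the remapping vectorized, which matters for full-resolution segmentation maps compared with a per-pixel dictionary lookup.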

Dependencies

Note that different dependency versions may lead to different results from ours.

Install requirements via:

pip install -r requirements.txt

Install the following packages according to their individual instructions:

- Perceiving Systems Mesh Package

- Chamfer Pytorch, which is also included in our repo.

- Human Body Prior, which is also included in our repo.

- SMPL-X

Examples

We provide demo.ipynb to summarize our method. For systematic experiments, we recommend using the training, fitting, and evaluation scripts in frontend_sh_scripts. To visualize the test results, see utils_show_test_results.py, utils_show_test_results_habitat.py, and run_render.sh for details.

Datasets

PROX-E

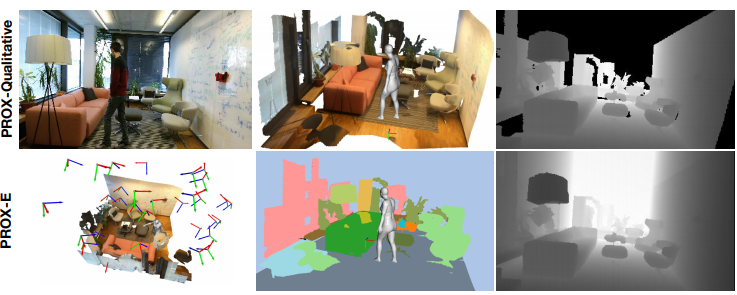

The PROX-E dataset is built on top of PROX; see our manuscript for a comparison between PROX and PROX-E. When using PROX-E, please first download the PROX dataset, and also cite PROX. As an expansion pack for PROX, PROX-E additionally provides scene meshes with semantic annotations, scene/human body data captured from virtual and real cameras, and other auxiliary files.

PROX-E can be downloaded here. After downloading PROX and the PROXE expansion pack, there will be:

| File/folder | Description |
| --- | --- |
| body_segments | The body segments from PROX. |
| PROXE_box_verts.json | The vertices of the virtual walls and the fitted ceilings and floors. |
| scenes | The scene meshes from PROX. |
| scenes_downsampled | The downsampled scene meshes. |
| scenes_sdf | The signed distance functions (SDFs) of the scenes. The SDFs can be downloaded here. |
| scenes_semantics | The scene meshes with vertex colors encoding object IDs. Note: verts_id = np.asarray(mesh.vertex_colors)*255/5.0. |
| snapshot_for_testing | Snapshots from the real camera in the PROX test scenes. Each .mat file contains the body configurations, scene depth/semantics, and camera pose of each frame. These files make it easier to load data during the testing phase. |
| realcams.hdf5 | A compact file of real-camera snapshots, with a downsampled frame rate. |
| virtualcams.hdf5 | A compact file of virtual-camera snapshots. See batch_gen_hdf5.py for how to use these h5 files. |
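The scenes_semantics note above gives the decoding formula for object IDs. A minimal sketch that wraps it, assuming the colors come as an (N, 3) float array in [0, 1], which is what np.asarray(mesh.vertex_colors) returns for a mesh loaded with Open3D's o3d.io.read_triangle_mesh (Open3D is an assumption here; any loader giving normalized colors works). decode_vertex_object_ids is a hypothetical name, and rounding is our addition to guard against floating-point error; it is not stated in the original note.

```python
import numpy as np


def decode_vertex_object_ids(vertex_colors):
    """Decode per-vertex object IDs from a scenes_semantics mesh.

    vertex_colors: (N, 3) floats in [0, 1], e.g. np.asarray(mesh.vertex_colors).
    Returns an (N, 3) int array; the formula is applied to every channel,
    exactly as in the note.
    """
    colors = np.asarray(vertex_colors, dtype=np.float64)
    ids = colors * 255 / 5.0  # formula from the scenes_semantics note
    # Colors are stored as 8-bit values, so round instead of truncating:
    # a value like 0.9999999 must become 1, not 0.
    return np.rint(ids).astype(np.int64)
```

If all three channels encode the same ID (an assumption worth checking on the actual files), taking column 0 of the result gives one ID per vertex.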

Note that the PROXE expansion pack may update as PROX updates.
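The scenes_sdf files store a signed distance function per scene, which is what makes body-scene collisions measurable. A minimal sketch of trilinearly interpolating a dense SDF grid at body vertices, plus a simple penetration penalty that sums the magnitude of negative SDF values. Assumptions, all to be checked against the loading code in source: the grid is a NumPy array of shape (Dx, Dy, Dz) indexed in x, y, z order with at least two samples per axis, it spans the axis-aligned box [grid_min, grid_max], and negative values lie inside scene geometry. query_sdf and penetration_penalty are hypothetical names, and the penalty is an illustration, not necessarily the collision loss used for training.

```python
import numpy as np


def query_sdf(points, sdf_grid, grid_min, grid_max):
    """Trilinearly interpolate a dense SDF grid at (N, 3) points.

    Assumes sdf_grid[i, j, k] is the SDF at grid_min + (i, j, k) * voxel_size.
    Points outside the box are clamped to its boundary.
    """
    pts = np.asarray(points, dtype=np.float64)
    grid = np.asarray(sdf_grid, dtype=np.float64)
    dims = np.array(grid.shape)
    gmin = np.asarray(grid_min, dtype=np.float64)
    gmax = np.asarray(grid_max, dtype=np.float64)

    # Continuous voxel coordinates in [0, dim - 1] along each axis.
    coords = np.clip((pts - gmin) / (gmax - gmin) * (dims - 1), 0, dims - 1)
    # Lower corner index, capped at dim - 2 so the upper corner exists.
    lo = np.minimum(np.floor(coords).astype(np.int64), dims - 2)
    frac = coords - lo  # position inside the cell, in [0, 1]

    values = np.zeros(len(pts))
    for corner in np.ndindex(2, 2, 2):
        offset = np.array(corner)
        idx = lo + offset
        # Corner weight: product over axes of frac (upper) or 1 - frac (lower).
        w = np.prod(np.where(offset == 1, frac, 1.0 - frac), axis=1)
        values += w * grid[idx[:, 0], idx[:, 1], idx[:, 2]]
    return values


def penetration_penalty(sdf_values):
    """Sum of |SDF| over points with negative SDF (inside scene geometry)."""
    return float(np.sum(np.abs(np.minimum(sdf_values, 0.0))))
```

Trilinear interpolation is exact for functions that are linear in each coordinate, which makes it easy to sanity-check on a synthetic grid before using real scene data.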

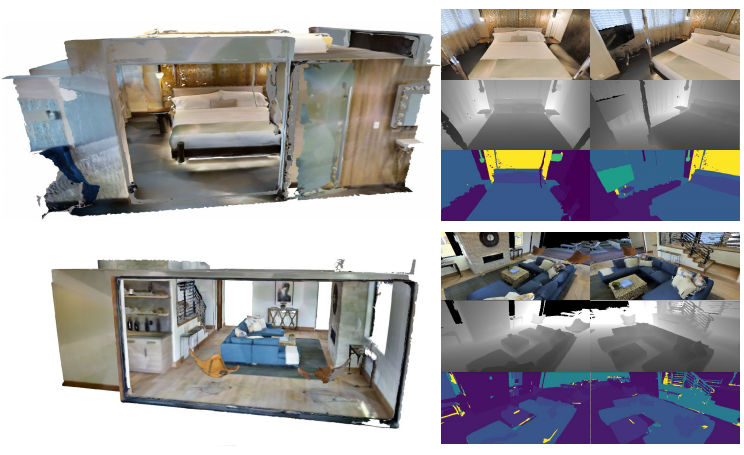

MP3D-R

We trim the room meshes from Matterport3D according to the room bounding-box annotations, and capture scene depth/semantics using the Habitat simulator. See our manuscript for details. Here we provide the scene names/IDs, camera poses, captured depth/segmentation, and SDFs of the scene meshes we used. Due to the data use agreement, we encourage users to download Matterport3D, install the Habitat simulator, and then extract the corresponding scene meshes used in our work.

MP3D-R can be downloaded here. The package has:

| File/folder | Description |
| --- | --- |
| {room_name}-sensor | The captured RGB/depth/semantics, as well as the camera poses. NOTE: the camera pose is relative to the world coordinate system of the original Matterport3D building mesh, while the generated body meshes are relative to the camera coordinate system, so an additional transformation is necessary. See utils_show_test_results_habitat.py for our implementation. |
| sdf | The computed signed distance functions of the room meshes. |
| *.png | Rendered images of our extracted scene meshes. |
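The NOTE above says that generated bodies are in camera coordinates while the MP3D-R camera poses are in the Matterport3D world frame. A minimal sketch of the rigid transformation between the two, assuming the pose is available as a 4x4 camera-to-world matrix. This is not the repository's implementation, which is in utils_show_test_results_habitat.py; in particular, any axis-convention difference between Habitat's camera frame and the frame of the generated bodies (which we cannot confirm here) would require an extra fixed rotation before applying the pose. camera_to_world is a hypothetical name.

```python
import numpy as np


def camera_to_world(verts_cam, cam_to_world):
    """Map (N, 3) vertices from camera coordinates to world coordinates.

    cam_to_world: 4x4 homogeneous matrix [[R, t], [0, 0, 0, 1]] (assumed layout).
    If the stored pose is world-to-camera instead, pass np.linalg.inv(pose).
    """
    verts = np.asarray(verts_cam, dtype=np.float64)
    pose = np.asarray(cam_to_world, dtype=np.float64)
    rot, trans = pose[:3, :3], pose[:3, 3]
    # Row-vector form of x_world = R @ x_cam + t, applied to every vertex.
    return verts @ rot.T + trans
```

Applying the same function with np.linalg.inv(pose) undoes the mapping, which is a quick way to check whether a stored pose is camera-to-world or world-to-camera.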

Models

Body Mesh Models

We use SMPL-X to represent human bodies in our work. Besides installing the smplx module, users also need to download the SMPL-X model and the VPoser model, as described in SMPL-X. Note that different versions of smplx and VPoser will influence our generation results.

Our Trained Checkpoints

One can download our trained checkpoints here. The hyper-parameters used are encoded in the filename. For example,

checkpoints_proxtrain_modelS1_batch32_epoch30_LR0.0003_LossVposer0.001_LossKL0.1_LossContact0.001_LossCollision0.01

means the model was trained on data from the PROXE training scenes, using the stage-one model, batch size 32, 30 epochs, learning rate 0.0003, and the specified loss weights.
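Because the hyper-parameters live in the checkpoint name, they can be recovered programmatically, e.g. to label results. A minimal sketch, assuming every checkpoint name follows the pattern of the single example above (checkpoints_, then the dataset, then model/batch/epoch/LR fields and Loss<Name><weight> fields, separated by underscores). parse_checkpoint_name is a hypothetical helper, not part of this repository; unrecognized fields are kept rather than rejected.

```python
import re


def parse_checkpoint_name(name):
    """Split a checkpoint folder name into its hyper-parameters.

    Assumed pattern: checkpoints_<dataset>_model<stage>_batch<int>_epoch<int>
    _LR<float>_Loss<Name><float>_...
    """
    parts = name.split("_")
    if len(parts) < 2 or parts[0] != "checkpoints":
        raise ValueError(f"unexpected checkpoint name: {name!r}")
    info = {"dataset": parts[1], "loss_weights": {}, "unparsed": []}
    for token in parts[2:]:
        # Loss weights: a capitalized loss name followed by a number.
        loss = re.fullmatch(r"Loss([A-Za-z]+)(\d[\d.eE+-]*)", token)
        if loss:
            info["loss_weights"][loss.group(1)] = float(loss.group(2))
        elif token.startswith("model"):
            info["model"] = token[len("model"):]  # e.g. "S1" for stage one
        elif token.startswith("batch"):
            info["batch_size"] = int(token[len("batch"):])
        elif token.startswith("epoch"):
            info["epochs"] = int(token[len("epoch"):])
        elif token.startswith("LR"):
            info["lr"] = float(token[len("LR"):])
        else:
            info["unparsed"].append(token)  # keep unknown fields instead of failing
    return info
```

Applied to the example name above, this yields the dataset proxtrain, model S1, batch size 32, 30 epochs, learning rate 0.0003, and the four loss weights.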

Acknowledgments & Disclosure

Acknowledgments. We sincerely acknowledge: Joachim Tesch for all his work on rendering images and other graphics support. Xueting Li for implementation advice about the baseline. David Hoffmann, Jinlong Yang, Vasileios Choutas, Ahmed Osman, Nima Ghorbani and Dimitrios Tzionas for insightful discussions on SMPL-X, VPoser and others. Daniel Scharstein and Cornelia Köhler for proofreading. Benjamin Pellkofer and Mason Landry for IT/hardware support. Y. Z. and S. T. acknowledge funding by Deutsche Forschungsgemeinschaft (DFG, German Research Foundation) Projektnummer 276693517 SFB 1233.

Disclosure. MJB has received research gift funds from Intel, Nvidia, Adobe, Facebook, and Amazon. While MJB is a part-time employee of Amazon, his research was performed solely at MPI. He is also an investor in Meshcapade GmbH.