Greedy InfoMax

We're going Dutch and exploring greedy self-supervised training using the InfoMax principle. Surprisingly, it's competitive with end-to-end supervised training on certain perceptual tasks!

This repo provides the code for the audio experiments in our paper:

Sindy Löwe*, Peter O'Connor, Bastiaan S. Veeling* - Putting An End to End-to-End: Gradient-Isolated Learning of Representations

*equal contribution

What is Greedy InfoMax?

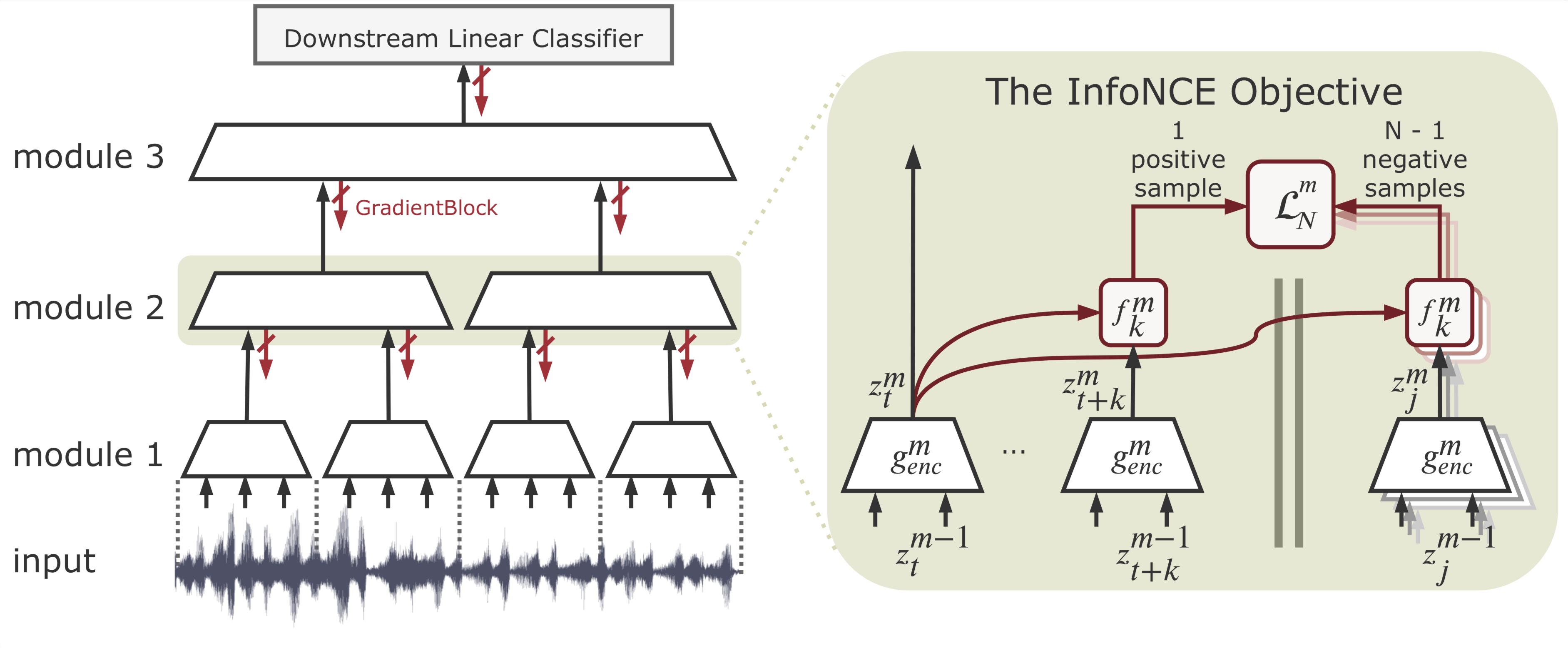

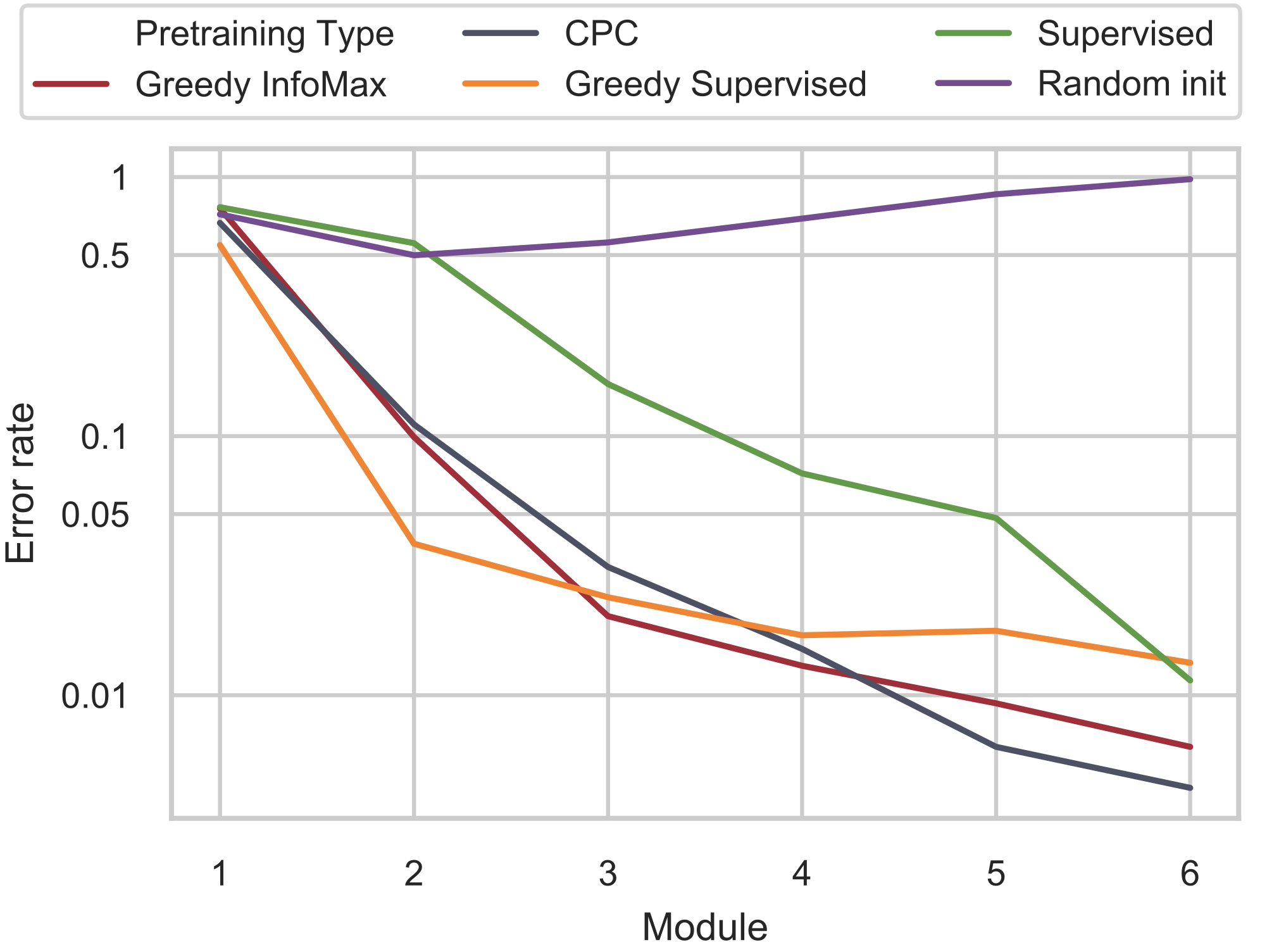

We simply divide existing architectures into gradient-isolated modules and optimize the mutual information between cross-patch intermediate representations.
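The mutual-information objective is easiest to see in code. The paper builds on Contrastive Predictive Coding (CPC) and its InfoNCE loss. A module's representation of one patch (the context) has to pick out the representation of a related patch (the positive) from a set of unrelated ones (the negatives).

The stdlib-only sketch below computes that loss for a single context. The dot-product score and the function names are assumptions made for illustration, not the repo's implementation; CPC itself uses a learned bilinear score. Minimizing the loss maximizes a lower bound on mutual information, I(context; positive) ≥ log N − loss, where N is the number of candidates (one positive plus the negatives).

```python
import math
from typing import List, Sequence


def score(context: Sequence[float], candidate: Sequence[float]) -> float:
    """Similarity score; a plain dot product here (assumption: CPC uses a learned bilinear map)."""
    return sum(c * x for c, x in zip(context, candidate))


def info_nce(context: Sequence[float],
             positive: Sequence[float],
             negatives: List[Sequence[float]]) -> float:
    """InfoNCE loss: cross-entropy of identifying the positive among all candidates."""
    scores = [score(context, positive)] + [score(context, n) for n in negatives]
    # Numerically stable log-sum-exp over all candidate scores.
    m = max(scores)
    log_sum_exp = m + math.log(sum(math.exp(s - m) for s in scores))
    # The positive sits at index 0, so the loss is -log softmax(scores)[0].
    return log_sum_exp - scores[0]


def mi_lower_bound(loss: float, num_candidates: int) -> float:
    """InfoNCE bound on mutual information: I >= log N - loss."""
    return math.log(num_candidates) - loss


# A context that clearly prefers its positive gets a near-zero loss.
good = info_nce([5.0, 0.0], [1.0, 0.0], [[-1.0, 0.0], [0.0, 1.0]])
# An uninformative context scores every candidate equally, so the loss is log N.
chance = info_nce([0.0, 0.0], [1.0, 0.0], [[0.0, 1.0], [1.0, 1.0], [2.0, 0.0]])
print(f"good={good:.4f}, chance={chance:.4f}, log 4={math.log(4):.4f}")
```

In Greedy InfoMax, every gradient-isolated module gets its own copy of this loss, computed on its own outputs.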

What we found exciting is that despite each module being trained greedily, it improves upon the representation of the previous module. This enables you to keep stacking modules until downstream performance saturates.
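Gradient isolation means a module's parameters are updated only from its own local loss, while the module's input is treated as a constant. In PyTorch this is typically done by calling `.detach()` on the output handed to the next module.

The toy below makes this explicit with scalar "modules" h_k = w_k · h_{k−1} and hand-written gradients, and contrasts it with end-to-end backpropagation of a single loss on the last module. The squared-error local loss against fixed targets is a stand-in chosen to keep the arithmetic readable. Greedy InfoMax uses a self-supervised InfoNCE loss per module instead.

```python
from typing import List


def forward(weights: List[float], x: float) -> List[float]:
    """Chain of scalar modules h_k = w_k * h_{k-1}; returns every module's output."""
    outputs, h = [], x
    for w in weights:
        h = w * h
        outputs.append(h)
    return outputs


def greedy_grads(weights: List[float], x: float, targets: List[float]) -> List[float]:
    """Gradient-isolated: dL_k/dw_k from module k's own stand-in loss L_k = (h_k - t_k)^2.

    The input h_{k-1} is a constant here (the job .detach() does in PyTorch),
    so no gradient from module k reaches the modules below it.
    """
    outs = forward(weights, x)
    inputs = [x] + outs[:-1]
    return [2 * (h - t) * h_in for h, t, h_in in zip(outs, targets, inputs)]


def end_to_end_grads(weights: List[float], x: float, targets: List[float]) -> List[float]:
    """End-to-end: one loss (h_K - t_K)^2 on the last output, backpropagated through all modules."""
    outs = forward(weights, x)
    inputs = [x] + outs[:-1]
    upstream = 2 * (outs[-1] - targets[-1])  # dL/dh_K
    grads = [0.0] * len(weights)
    for k in reversed(range(len(weights))):
        grads[k] = upstream * inputs[k]  # dL/dw_k = dL/dh_k * h_{k-1}
        upstream *= weights[k]           # dL/dh_{k-1} = dL/dh_k * w_k
    return grads


w, x = [1.0, 2.0], 1.0
for t_last in (3.0, 10.0):
    targets = [0.5, t_last]
    print(t_last, greedy_grads(w, x, targets), end_to_end_grads(w, x, targets))
```

When the last target changes from 3.0 to 10.0, module 1's greedy gradient stays at 1.0, whereas its end-to-end gradient moves from -4.0 to -32.0. Because a module's greedy update never depends on the modules above it, the modules can be trained one at a time.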

How to run the code

Dependencies

- Set up the conda environment infomax by running:

bash setup_dependencies.sh

- Install torchaudio in the infomax environment.

- Download the datasets:

bash download_data.sh

Usage

- To replicate the audio results from our paper, run:

source activate infomax
bash audio_traineval.sh

This trains the Greedy InfoMax model and then evaluates it by training two linear classifiers on top of its representations: one for speaker classification and one for phone classification.

- View all possible command-line options by running:

python -m GreedyInfoMax.audio.main_audio --help

Some of the more important options are:

- To train the baseline CPC model with end-to-end backpropagation instead of the Greedy InfoMax model, set:

--model_splits 1

- To save GPU memory, you can train the layers sequentially, one at a time, by setting the index of the layer to be trained (0-5), e.g.:

--train_layer 0
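The two options above can be pictured with a pair of hypothetical helpers.

- `split_into_modules` shows one way layer indices could be grouped into gradient-isolated modules. With --model_splits 1 it yields a single module trained end-to-end, and splitting six layers six ways gives every layer its own module. The even, contiguous split is an assumption, not necessarily how the repo assigns layers.
- `sequential_schedule` mirrors repeated runs with --train_layer 0, then 1, and so on up to 5. It assumes that in each stage the earlier layers stay frozen and only the selected layer is updated.

```python
from typing import List, Tuple

NUM_LAYERS = 6  # assumption: layer indices 0-5, matching the --train_layer range


def split_into_modules(num_layers: int, model_splits: int) -> List[List[int]]:
    """Hypothetical: group layer indices into `model_splits` contiguous modules of near-equal size."""
    if not 1 <= model_splits <= num_layers:
        raise ValueError("model_splits must be between 1 and num_layers")
    base, extra = divmod(num_layers, model_splits)
    modules, start = [], 0
    for i in range(model_splits):
        size = base + (1 if i < extra else 0)  # the first `extra` modules take one more layer
        modules.append(list(range(start, start + size)))
        start += size
    return modules


def sequential_schedule(num_layers: int) -> List[Tuple[List[int], int]]:
    """Hypothetical: stage k freezes layers 0..k-1 and updates only layer k."""
    return [(list(range(k)), k) for k in range(num_layers)]


print(split_into_modules(NUM_LAYERS, 1))  # one module: end-to-end training
print(split_into_modules(NUM_LAYERS, 6))  # one layer per module
for frozen, trained in sequential_schedule(NUM_LAYERS):
    print(f"--train_layer {trained}: frozen={frozen}")
```

Since only one layer receives gradients at a time in the sequential setting, the activations and optimizer state for the other layers' training are not needed at once, which is where the GPU memory saving comes from.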

Cite

Please cite our paper if you use this code in your own work:

@article{lowe2019putting,
  title={Putting An End to End-to-End: Gradient-Isolated Learning of Representations},
  author={L{\"o}we, Sindy and O'Connor, Peter and Veeling, Bastiaan S},
  journal={arXiv preprint arXiv:1905.11786},
  year={2019}
}