A performance analysis of deep learning inference across the PyTorch/TensorFlow frameworks and the TensorRT/XLA/TVM optimizers.
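Latency numbers in a comparison like this depend heavily on how they are measured. The following is a minimal, framework-agnostic timing loop, not necessarily the one the `infer_perf` scripts use. It assumes the model call is wrapped in a zero-argument callable, discards warm-up iterations (which absorb one-off costs such as graph compilation or autotuning), and reports summary statistics in milliseconds. `measure_latency` and its parameters are hypothetical names. For GPU back ends the callable must block until the result is ready (for example by calling `torch.cuda.synchronize()` in PyTorch); otherwise only the asynchronous launch is timed.

```python
import math
import statistics
import time
from typing import Callable, Dict


def measure_latency(run_once: Callable[[], object],
                    warmup: int = 10,
                    iters: int = 50) -> Dict[str, float]:
    """Time a zero-argument inference callable; return latency stats in ms.

    Warm-up calls are executed but not timed, so one-off costs such as
    graph compilation, autotuning or memory allocation are excluded.
    """
    if iters < 1:
        raise ValueError("iters must be >= 1")
    for _ in range(warmup):
        run_once()
    samples = []
    for _ in range(iters):
        start = time.perf_counter()
        run_once()  # must block until the result is ready (sync on GPU)
        samples.append((time.perf_counter() - start) * 1000.0)
    samples.sort()
    # Nearest-rank 90th percentile over the sorted samples.
    p90_index = max(0, math.ceil(0.9 * len(samples)) - 1)
    return {
        "mean_ms": statistics.mean(samples),
        "median_ms": statistics.median(samples),
        "p90_ms": samples[p90_index],
        "min_ms": samples[0],
    }


if __name__ == "__main__":
    # CPU-only stand-in workload; replace with a real model's forward call.
    stats = measure_latency(lambda: sum(i * i for i in range(10_000)))
    print({name: round(value, 3) for name, value in stats.items()})
```

Reporting the median and a tail percentile alongside the mean makes the comparison less sensitive to occasional outliers such as a garbage-collection pause.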

environment

- docker: nvidia/cuda:11.1.1-devel-ubuntu18.0

- TVM compiled with LLVM (clang+llvm-11.0.1-x86_64-linux-gnu-ubuntu-16.04)

- docker: nvcr.io/nvidia/tensorflow:20.07-tf2-py3

- docker: nvcr.io/nvidia/tensorrt:19.09-py3

model

- vgg16

- mobilenet

- resnet50

- inception

optimizer

- TVM

- XLA

- TensorRT

front-end (DL framework)

- ONNX

- PyTorch

- TensorFlow

|  | ONNX | PyTorch | TensorFlow |
|---|---|---|---|
| baseline | ✅ | ✅ | ✅ |
| TVM | #4 | ✅ 1.4 | ✅ 1.12 |
| XLA | - | - | ✅ |
| TensorRT | #5 | - | ✅ |

Usage: `python3 infer_perf/<script.py> <model_name> <options>`
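The per-script interface above takes a positional model name followed by options. As a hedged sketch of how such a script could parse its arguments with the standard `argparse` module: the model choices come from the README's model list and `--xla` from the XLA example, while `parse_args` and `--batch-size` are hypothetical and may not exist in the actual scripts.

```python
import argparse
from typing import List, Optional

# Model names from the README's model list; the real scripts may differ.
MODELS = ("vgg16", "mobilenet", "resnet50", "inception")


def parse_args(argv: Optional[List[str]] = None) -> argparse.Namespace:
    """Parse `<model_name> <options>` the way a benchmark script might."""
    parser = argparse.ArgumentParser(
        description="Run one inference benchmark (hypothetical sketch).")
    parser.add_argument("model_name", choices=MODELS,
                        help="model to benchmark")
    # --xla appears in the README's example command.
    parser.add_argument("--xla", action="store_true",
                        help="enable XLA compilation (TensorFlow only)")
    # Hypothetical option, included only to show a valued flag.
    parser.add_argument("--batch-size", type=int, default=1,
                        help="input batch size (hypothetical)")
    return parser.parse_args(argv)


if __name__ == "__main__":
    # Parse a fixed example instead of sys.argv so the demo always runs.
    print(parse_args(["resnet50", "--xla"]))
```

Restricting `model_name` with `choices` makes a typo in the model name fail immediately with a usage message instead of partway through a benchmark run.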

For example, to run the TensorFlow resnet50 benchmark with XLA enabled:

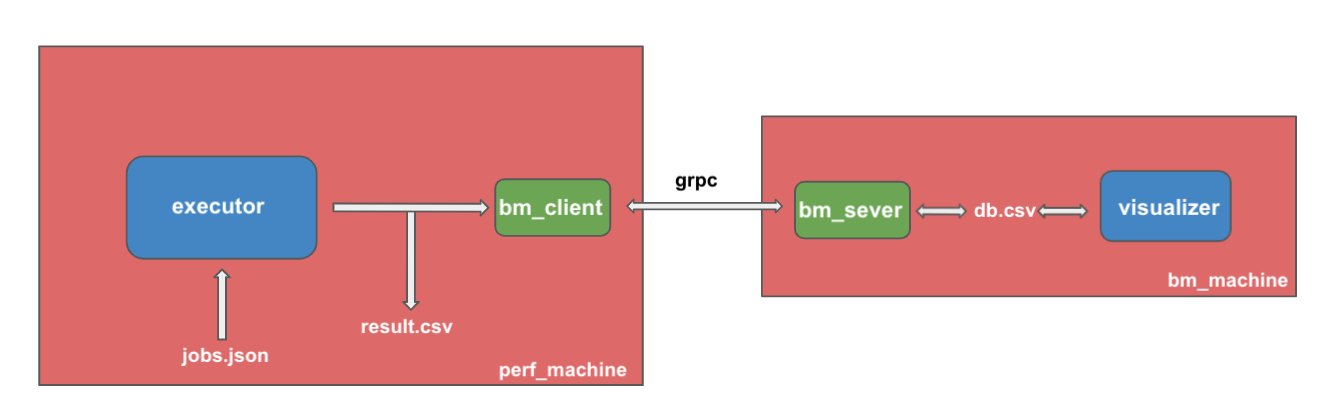

`python3 infer_perf/to_xla resnet50 --xla`

Usage: `python3 executor <job.json> --report <output.csv>`

For example:

`python3 executor torch2tvm.json --report result.csv`

Usage: `python3 executor <job.json> --server <bm_server>`
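The format of the executor's job file is not documented here. Purely as a sketch, assuming a hypothetical schema in which `job.json` lists runs as `{"script": ..., "model": ..., "options": [...]}`, an executor could expand each run into a command line, invoke it with `subprocess.run`, and write one CSV row per run. `build_commands`, `run_job`, and the report columns are illustrative names. The wall-clock time recorded here includes process start-up, so a real report would more likely parse the latency printed by each benchmark script.

```python
import csv
import json
import subprocess
import sys
import time
from typing import Dict, List


def build_commands(job: Dict) -> List[List[str]]:
    """Expand a job description into one command line per run.

    Assumed (hypothetical) schema:
        {"runs": [{"script": "infer_perf/<script.py>",
                   "model": "resnet50",
                   "options": ["--xla"]}]}
    """
    return [[sys.executable, run["script"], run["model"], *run.get("options", [])]
            for run in job["runs"]]


def run_job(job_path: str, report_path: str) -> List[Dict[str, object]]:
    """Run every command from the job file and write a CSV report."""
    with open(job_path) as f:
        job = json.load(f)
    rows = []
    for cmd in build_commands(job):
        start = time.perf_counter()
        # Output is captured but not parsed in this sketch.
        proc = subprocess.run(cmd, capture_output=True, text=True)
        rows.append({
            "command": " ".join(cmd[1:]),  # omit the interpreter path
            "returncode": proc.returncode,
            "wall_s": round(time.perf_counter() - start, 3),
        })
    with open(report_path, "w", newline="") as f:
        writer = csv.DictWriter(f, fieldnames=["command", "returncode", "wall_s"])
        writer.writeheader()
        writer.writerows(rows)
    return rows
```

With a job file in the assumed schema, `run_job("torch2tvm.json", "result.csv")` would mirror the `--report` example; recording the return code lets a failed run show up in the report instead of silently disappearing.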

Format the sources in place with yapf, using the `yapf.style` config:

`yapf infer_perf/*.py -i --style yapf.style`