Load YOLOv5 from PyTorch Hub ⭐

glenn-jocher opened this issue

📚 This guide explains how to load YOLOv5 🚀 from PyTorch Hub https://pytorch.org/hub/ultralytics_yolov5. See YOLOv5 Docs for additional details. UPDATED 26 March 2023.

Before You Start

Install requirements.txt in a Python>=3.7.0 environment, including PyTorch>=1.7. Models and datasets download automatically from the latest YOLOv5 release.

pip install -r https://raw.githubusercontent.com/ultralytics/yolov5/master/requirements.txt

💡 ProTip: Cloning https://github.com/ultralytics/yolov5 is not required 😃

Load YOLOv5 with PyTorch Hub

Simple Example

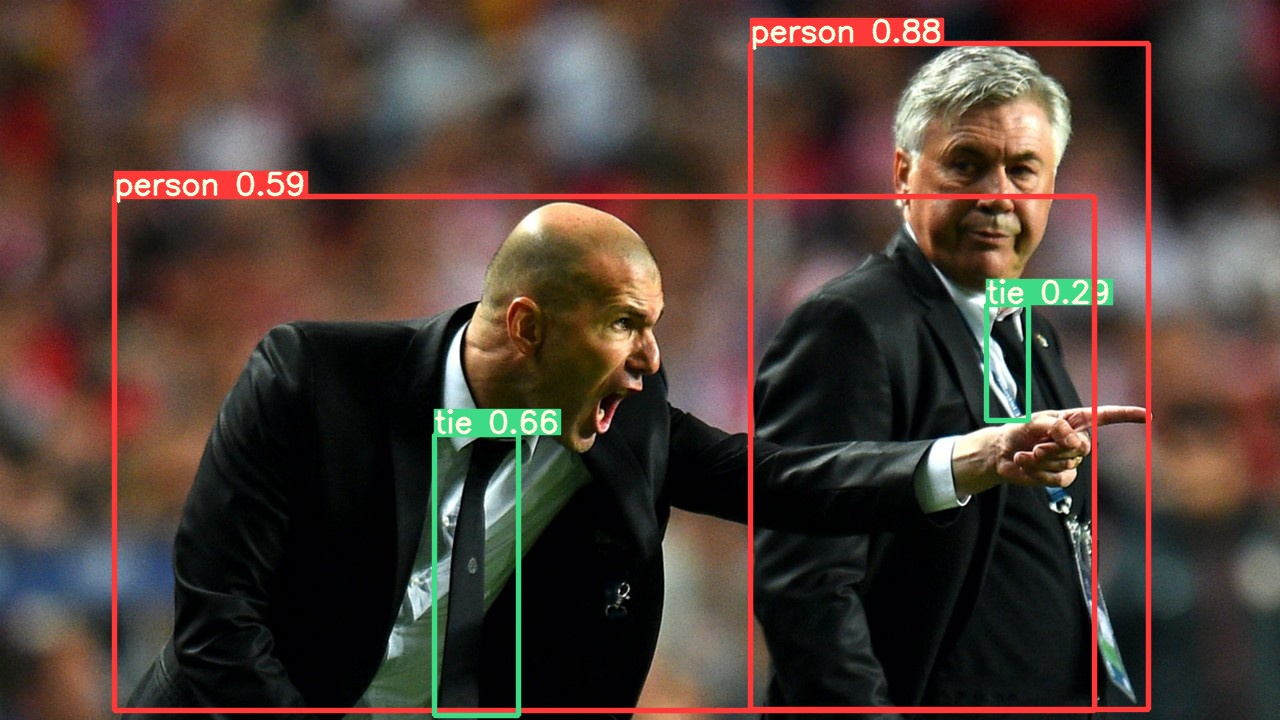

This example loads a pretrained YOLOv5s model from PyTorch Hub as model and passes an image for inference. 'yolov5s' is the lightest and fastest YOLOv5 model. For details on all available models please see the README.

import torch

# Model
model = torch.hub.load('ultralytics/yolov5', 'yolov5s')

# Image
im = 'https://ultralytics.com/images/zidane.jpg'

# Inference
results = model(im)

results.pandas().xyxy[0]
#      xmin    ymin    xmax   ymax  confidence  class    name
# 0  749.50   43.50  1148.0  704.5    0.874023      0  person
# 1  433.50  433.50   517.5  714.5    0.687988     27     tie
# 2  114.75  195.75  1095.0  708.0    0.624512      0  person
# 3  986.00  304.00  1028.0  420.0    0.286865     27     tie

Detailed Example

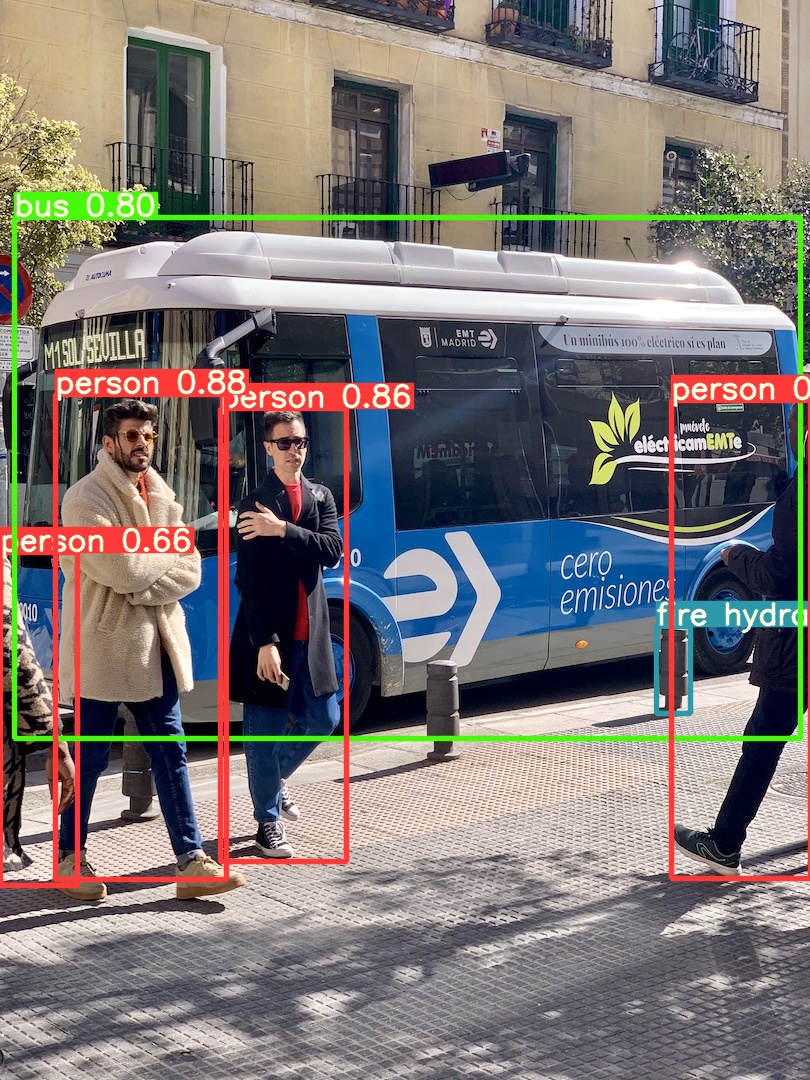

This example shows batched inference with PIL and OpenCV image sources. Results can be printed to the console, saved to runs/hub, shown on screen in supported environments, and returned as tensors or pandas DataFrames.

import cv2
import torch
from PIL import Image

# Model
model = torch.hub.load('ultralytics/yolov5', 'yolov5s')

# Images
for f in 'zidane.jpg', 'bus.jpg':
    torch.hub.download_url_to_file('https://ultralytics.com/images/' + f, f)  # download 2 images
im1 = Image.open('zidane.jpg')  # PIL image
im2 = cv2.imread('bus.jpg')[..., ::-1]  # OpenCV image (BGR to RGB)

# Inference
results = model([im1, im2], size=640)  # batch of images

# Results
results.print()
results.save()  # or .show()

results.xyxy[0]  # im1 predictions (tensor)
results.pandas().xyxy[0]  # im1 predictions (pandas)
#      xmin    ymin    xmax   ymax  confidence  class    name
# 0  749.50   43.50  1148.0  704.5    0.874023      0  person
# 1  433.50  433.50   517.5  714.5    0.687988     27     tie
# 2  114.75  195.75  1095.0  708.0    0.624512      0  person
# 3  986.00  304.00  1028.0  420.0    0.286865     27     tie

For all inference options see the YOLOv5 AutoShape() forward method (lines 243 to 252 in commit 30e4c4f).

Inference Settings

YOLOv5 models contain various inference attributes such as confidence threshold, IoU threshold, etc., which can be set by:

model.conf = 0.25  # NMS confidence threshold
model.iou = 0.45  # NMS IoU threshold
model.agnostic = False  # NMS class-agnostic
model.multi_label = False  # NMS multiple labels per box
model.classes = None  # (optional list) filter by class, e.g. = [0, 15, 16] for COCO persons, cats and dogs
model.max_det = 1000  # maximum number of detections per image
model.amp = False  # Automatic Mixed Precision (AMP) inference

results = model(im, size=320)  # custom inference size

Device

Models can be transferred to any device after creation:

model.cpu()  # CPU
model.cuda()  # GPU
model.to(device)  # e.g. device=torch.device(0)

Models can also be created directly on any device:

model = torch.hub.load('ultralytics/yolov5', 'yolov5s', device='cpu')  # load on CPU

💡 ProTip: Input images are automatically transferred to the correct model device before inference.

Silence Outputs

Models can be loaded silently with _verbose=False:

model = torch.hub.load('ultralytics/yolov5', 'yolov5s', _verbose=False)  # load silently

Input Channels

To load a pretrained YOLOv5s model with 4 input channels rather than the default 3:

model = torch.hub.load('ultralytics/yolov5', 'yolov5s', channels=4)

In this case the model will be composed of pretrained weights except for the very first input layer, which is no longer the same shape as the pretrained input layer. The input layer will remain initialized by random weights.

Number of Classes

To load a pretrained YOLOv5s model with 10 output classes rather than the default 80:

model = torch.hub.load('ultralytics/yolov5', 'yolov5s', classes=10)

In this case the model will be composed of pretrained weights except for the output layers, which are no longer the same shape as the pretrained output layers. The output layers will remain initialized by random weights.

Force Reload

If you run into problems with the above steps, setting force_reload=True may help by discarding the existing cache and forcing a fresh download of the latest YOLOv5 version from PyTorch Hub.

model = torch.hub.load('ultralytics/yolov5', 'yolov5s', force_reload=True)  # force reload

Screenshot Inference

To run inference on your desktop screen:

import torch
from PIL import ImageGrab

# Model
model = torch.hub.load('ultralytics/yolov5', 'yolov5s')

# Image
im = ImageGrab.grab()  # take a screenshot

# Inference
results = model(im)

Multi-GPU Inference

YOLOv5 models can be loaded to multiple GPUs in parallel with threaded inference:

import threading

import torch


def run(model, im):
    # run inference on one image and save the annotated result
    results = model(im)
    results.save()


# Models
model0 = torch.hub.load('ultralytics/yolov5', 'yolov5s', device=0)
model1 = torch.hub.load('ultralytics/yolov5', 'yolov5s', device=1)

# Inference
threads = [
    threading.Thread(target=run, args=[model0, 'https://ultralytics.com/images/zidane.jpg'], daemon=True),
    threading.Thread(target=run, args=[model1, 'https://ultralytics.com/images/bus.jpg'], daemon=True),
]
for t in threads:
    t.start()
for t in threads:
    t.join()  # wait for both threads; daemon threads are killed if the main script exits first

Training

To load a YOLOv5 model for training rather than inference, set autoshape=False. To load a model with randomly initialized weights (to train from scratch) use pretrained=False. You must provide your own training script in this case. Alternatively see our YOLOv5 Train Custom Data Tutorial for model training.

model = torch.hub.load('ultralytics/yolov5', 'yolov5s', autoshape=False)  # load pretrained
model = torch.hub.load('ultralytics/yolov5', 'yolov5s', autoshape=False, pretrained=False)  # load scratch

Base64 Results

For use with API services. See #2291 and Flask REST API example for details.

import base64
from io import BytesIO

from PIL import Image

results = model(im)  # inference

results.ims  # array of original images (as np array) passed to model for inference
results.render()  # updates results.ims with boxes and labels
for im in results.ims:
    buffered = BytesIO()
    im_base64 = Image.fromarray(im)
    im_base64.save(buffered, format="JPEG")
    print(base64.b64encode(buffered.getvalue()).decode('utf-8'))  # base64 encoded image with results

Cropped Results

Results can be returned and saved as detection crops:

results = model(im)  # inference
crops = results.crop(save=True)  # cropped detections dictionary

Pandas Results

Results can be returned as Pandas DataFrames:

results = model(im)  # inference
results.pandas().xyxy[0]  # Pandas DataFrame

Pandas Output:

print(results.pandas().xyxy[0])
#      xmin    ymin    xmax   ymax  confidence  class    name
# 0  749.50   43.50  1148.0  704.5    0.874023      0  person
# 1  433.50  433.50   517.5  714.5    0.687988     27     tie
# 2  114.75  195.75  1095.0  708.0    0.624512      0  person
# 3  986.00  304.00  1028.0  420.0    0.286865     27     tie

Sorted Results

Results can be sorted by column, e.g. to sort license plate digit detections left-to-right (x-axis):

results = model(im) # inference

results.pandas().xyxy[0].sort_values('xmin')  # sorted left-right

JSON Results

Results can be returned in JSON format once converted to .pandas() dataframes using the .to_json() method. The JSON format can be modified using the orient argument. See pandas .to_json() documentation for details.

results = model(ims)  # inference
results.pandas().xyxy[0].to_json(orient="records")  # JSON img1 predictions

JSON Output:

[

{"xmin":749.5,"ymin":43.5,"xmax":1148.0,"ymax":704.5,"confidence":0.8740234375,"class":0,"name":"person"},

{"xmin":433.5,"ymin":433.5,"xmax":517.5,"ymax":714.5,"confidence":0.6879882812,"class":27,"name":"tie"},

{"xmin":115.25,"ymin":195.75,"xmax":1096.0,"ymax":708.0,"confidence":0.6254882812,"class":0,"name":"person"},

{"xmin":986.0,"ymin":304.0,"xmax":1028.0,"ymax":420.0,"confidence":0.2873535156,"class":27,"name":"tie"}

]

Custom Models

This example loads a custom 20-class VOC-trained YOLOv5s model 'best.pt' with PyTorch Hub.

model = torch.hub.load('ultralytics/yolov5', 'custom', path='path/to/best.pt')  # local model
model = torch.hub.load('path/to/yolov5', 'custom', path='path/to/best.pt', source='local')  # local repo

TensorRT, ONNX and OpenVINO Models

PyTorch Hub supports inference on most YOLOv5 export formats, including custom trained models. See TFLite, ONNX, CoreML, TensorRT Export tutorial for details on exporting models.

💡 ProTip: TensorRT may be up to 2-5X faster than PyTorch on GPU benchmarks

💡 ProTip: ONNX and OpenVINO may be up to 2-3X faster than PyTorch on CPU benchmarks

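model = torch.hub.load('ultralytics/yolov5', 'custom', path='yolov5s.pt')  # PyTorch
model = torch.hub.load('ultralytics/yolov5', 'custom', path='yolov5s.torchscript')  # TorchScript
model = torch.hub.load('ultralytics/yolov5', 'custom', path='yolov5s.onnx')  # ONNX
model = torch.hub.load('ultralytics/yolov5', 'custom', path='yolov5s_openvino_model/')  # OpenVINO
model = torch.hub.load('ultralytics/yolov5', 'custom', path='yolov5s.engine')  # TensorRT
model = torch.hub.load('ultralytics/yolov5', 'custom', path='yolov5s.mlmodel')  # CoreML (macOS-only)
model = torch.hub.load('ultralytics/yolov5', 'custom', path='yolov5s.tflite')  # TFLite
model = torch.hub.load('ultralytics/yolov5', 'custom', path='yolov5s_paddle_model/')  # PaddlePaddle

Environments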

YOLOv5 may be run in any of the following up-to-date verified environments (with all dependencies including CUDA/CUDNN, Python and PyTorch preinstalled):

- Notebooks with free GPU:

- Google Cloud Deep Learning VM. See GCP Quickstart Guide

- Amazon Deep Learning AMI. See AWS Quickstart Guide

- Docker Image. See Docker Quickstart Guide

Status

If this badge is green, all YOLOv5 GitHub Actions Continuous Integration (CI) tests are currently passing. CI tests verify correct operation of YOLOv5 training, validation, inference, export and benchmarks on macOS, Windows, and Ubuntu every 24 hours and on every commit.

@glenn-jocher

So can I fit a model with it?

Can someone use the training script with this configuration ?

Can I ask about the meaning of the output?

How can I reconstruct box predictions from the output?

Thanks

@rlalpha if you want to run inference, put the model in .eval() mode and select the first output. These are the predictions, which may then be filtered via NMS:
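A minimal sketch of that filtering step, assuming each raw output row is laid out as [cx, cy, w, h, objectness, class scores...] (the usual YOLOv5 Detect head layout). simple_nms and its helpers are hypothetical names for illustration; this is a plain class-aware greedy NMS in NumPy, not YOLOv5's own non_max_suppression().

```python
import numpy as np


def xywh2xyxy(x):
    """Convert (cx, cy, w, h) boxes to (x1, y1, x2, y2) corners."""
    y = np.empty_like(x)
    y[:, 0] = x[:, 0] - x[:, 2] / 2  # left
    y[:, 1] = x[:, 1] - x[:, 3] / 2  # top
    y[:, 2] = x[:, 0] + x[:, 2] / 2  # right
    y[:, 3] = x[:, 1] + x[:, 3] / 2  # bottom
    return y


def iou_one_to_many(box, boxes):
    """IoU between one xyxy box and an (M, 4) array of xyxy boxes."""
    x1 = np.maximum(box[0], boxes[:, 0])
    y1 = np.maximum(box[1], boxes[:, 1])
    x2 = np.minimum(box[2], boxes[:, 2])
    y2 = np.minimum(box[3], boxes[:, 3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    area = (box[2] - box[0]) * (box[3] - box[1])
    areas = (boxes[:, 2] - boxes[:, 0]) * (boxes[:, 3] - boxes[:, 1])
    return inter / (area + areas - inter + 1e-9)


def simple_nms(pred, conf_thres=0.25, iou_thres=0.45):
    """Filter one image's raw predictions, an (N, 5 + nc) array.

    Returns (boxes_xyxy, scores, class_ids) for the surviving detections.
    """
    cls_conf = pred[:, 5:] * pred[:, 4:5]  # confidence = objectness * class score
    cls_ids = cls_conf.argmax(axis=1)
    scores = cls_conf.max(axis=1)
    keep = scores > conf_thres  # confidence threshold
    boxes = xywh2xyxy(pred[keep, :4])
    scores, cls_ids = scores[keep], cls_ids[keep]

    order = scores.argsort()[::-1]  # highest score first
    kept = []
    while order.size > 0:
        i = order[0]
        kept.append(i)
        rest = order[1:]
        ious = iou_one_to_many(boxes[i], boxes[rest])
        # suppress lower-scoring boxes of the same class that overlap too much
        suppress = (ious > iou_thres) & (cls_ids[rest] == cls_ids[i])
        order = rest[~suppress]
    kept = np.array(kept, dtype=int)
    return boxes[kept], scores[kept], cls_ids[kept]
```

With an autoshape=False model in eval mode, something like pred = model(img)[0] should give a (batch, N, 5 + nc) tensor, and each pred[i].cpu().numpy() could be passed to simple_nms; the boxes are in the coordinates of the (padded) network input. torchvision.ops.nms(boxes, scores, iou_threshold) is a ready-made alternative for the suppression loop.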

@rlalpha I've updated pytorch hub functionality now in c4cb785 to automatically append an NMS module to the model when pretrained=True is requested. Anyone using YOLOv5 pretrained pytorch hub models must remove this last layer prior to training now:

model.model = model.model[:-1]

Anyone using YOLOv5 pretrained pytorch hub models directly for inference can now replicate the following code to use YOLOv5 without cloning the ultralytics/yolov5 repository. In this example you see the pytorch hub model detect 2 people (class 0) and 1 tie (class 27) in zidane.jpg. Note there is no repo cloned in the workspace. Also note that ideally all inputs to the model should be letterboxed to the nearest 32 multiple. The second best option is to stretch the image up to the next largest 32-multiple as I've done here with PIL resize.
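A minimal sketch of the padding arithmetic behind that advice, assuming you pad (rather than stretch) each side up to the next multiple of 32 and split the padding evenly between the two edges. next_multiple and letterbox_padding are hypothetical helpers, not functions from the YOLOv5 repo.

```python
def next_multiple(x, stride=32):
    """Smallest multiple of `stride` that is >= x (integer ceiling)."""
    return -(-x // stride) * stride


def letterbox_padding(width, height, stride=32):
    """Canvas size and (left, top, right, bottom) padding that centers a
    width x height image on a canvas whose sides are multiples of `stride`."""
    new_w, new_h = next_multiple(width, stride), next_multiple(height, stride)
    dw, dh = new_w - width, new_h - height
    left, top = dw // 2, dh // 2
    return new_w, new_h, (left, top, dw - left, dh - top)


# A 1280x720 image: the width is already a multiple of 32, the height needs 16 px
print(letterbox_padding(1280, 720))  # (1280, 736, (0, 8, 0, 8))
```

The padding tuple could then be applied with whatever image library you use. Note that YOLOv5's own letterbox() also rescales the image toward a target size before padding, which this sketch does not.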


I got how to do it now. Thank you for rapid reply.

@rlalpha @justAyaan @MohamedAliRashad this PyTorch Hub tutorial is now updated to reflect the simplified inference improvements in PR #1153. It's now very simple to load any YOLOv5 model from PyTorch Hub and use it directly for inference on PIL, OpenCV, NumPy or PyTorch inputs, including for batched inference. Reshaping and NMS are handled automatically. An example script is shown in the tutorial above.

@glenn-jocher calling model = torch.hub.load('ultralytics/yolov5', 'yolov5l', pretrained=True) throws an error:

Using cache found in /home/pf/.cache/torch/hub/ultralytics_yolov5_master

Traceback (most recent call last):

File "<frozen importlib._bootstrap>", line 971, in _find_and_load

File "<frozen importlib._bootstrap>", line 955, in _find_and_load_unlocked

File "<frozen importlib._bootstrap>", line 665, in _load_unlocked

File "<frozen importlib._bootstrap_external>", line 678, in exec_module

File "<frozen importlib._bootstrap>", line 219, in _call_with_frames_removed

File "/home/pf/.cache/torch/hub/ultralytics_yolov5_master/models/yolo.py", line 15, in <module>

from models.common import Conv, Bottleneck, SPP, DWConv, Focus, BottleneckCSP, Concat, NMS, autoShape

File "/home/pf/.cache/torch/hub/ultralytics_yolov5_master/models/common.py", line 8, in <module>

from utils.datasets import letterbox

ModuleNotFoundError: No module named 'utils.datasets'; 'utils' is not a package

Process finished with exit code 1

@pfeatherstone thanks for the feedback! Can you try with force_reload=True? Without it the cached repo is used, which may be out of date.

import torch

model = torch.hub.load('ultralytics/yolov5', 'yolov5s', pretrained=True, force_reload=True)

Still doesn't work. I get the following errors:

Downloading: "https://github.com/ultralytics/yolov5/archive/master.zip" to /home/pf/.cache/torch/hub/master.zip

Traceback (most recent call last):

File "<frozen importlib._bootstrap>", line 971, in _find_and_load

File "<frozen importlib._bootstrap>", line 955, in _find_and_load_unlocked

File "<frozen importlib._bootstrap>", line 665, in _load_unlocked

File "<frozen importlib._bootstrap_external>", line 678, in exec_module

File "<frozen importlib._bootstrap>", line 219, in _call_with_frames_removed

File "/home/pf/.cache/torch/hub/ultralytics_yolov5_master/models/yolo.py", line 15, in <module>

from models.common import Conv, Bottleneck, SPP, DWConv, Focus, BottleneckCSP, Concat, NMS, autoShape

File "/home/pf/.cache/torch/hub/ultralytics_yolov5_master/models/common.py", line 8, in <module>

from utils.datasets import letterbox

ModuleNotFoundError: No module named 'utils.datasets'; 'utils' is not a package

Error in atexit._run_exitfuncs:

Traceback (most recent call last):

File "/usr/local/pycharm-2020.2/plugins/python/helpers/pydev/pydevd.py", line 1785, in stoptrace

debugger.exiting()

File "/usr/local/pycharm-2020.2/plugins/python/helpers/pydev/pydevd.py", line 1471, in exiting

sys.stdout.flush()

ValueError: I/O operation on closed file.

Process finished with exit code 1

@pfeatherstone I've raised a new bug report in #1181 for your observation. This typically indicates that a pip package called utils is installed in your environment; you should pip uninstall utils.
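One way to check that diagnosis is to ask Python where it would import utils from. A minimal sketch using the standard-library importlib.util.find_spec; locate_module is a hypothetical helper name.

```python
import importlib.util


def locate_module(name):
    """Return the file Python would load for `name`, or None if it is not importable."""
    spec = importlib.util.find_spec(name)
    if spec is None:
        return None
    return spec.origin  # e.g. .../site-packages/utils/__init__.py or .../utils.py


# If this points into site-packages rather than the YOLOv5 hub cache,
# a separately installed `utils` is shadowing the repo's utils/ folder.
print(locate_module('utils'))
```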

Hi!

I tried to load the model and apply .to(device), but I receive this exception: RuntimeError: Expected all tensors to be on the same device, but found at least two devices, cuda:0 and cpu!

@Semihal please raise a bug report with reproducible example code. Thank you.

Is there a way to specify the NMS parameters on the pytorch hub model?

NMS parameters are model.autoshape() attributes, and you can modify them to whatever you want, e.g. set model.conf = 0.5 before running inference (see lines 121 to 127 in 784feae).
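Since model.classes takes integer class indices, a small helper can translate class names such as "person" or "cat" first. A sketch, assuming model.names holds the class names either as a dict {index: name} or as a list, depending on the YOLOv5 version; class_ids is a hypothetical helper.

```python
def class_ids(names, wanted):
    """Map class names to the integer ids expected by model.classes.

    `names` may be a dict {id: name} or a list/tuple indexed by id.
    """
    items = names.items() if isinstance(names, dict) else enumerate(names)
    lookup = {name: idx for idx, name in items}
    missing = [w for w in wanted if w not in lookup]
    if missing:
        raise KeyError(f'unknown class names: {missing}')
    return [lookup[w] for w in wanted]


coco_subset = {0: 'person', 15: 'cat', 16: 'dog'}  # illustrative subset of COCO names
print(class_ids(coco_subset, ['person', 'cat']))  # [0, 15]
```

With a loaded hub model this would be used as model.classes = class_ids(model.names, ['person', 'cat']), alongside thresholds such as model.conf = 0.5.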

Can we pass the augment argument at inference time?

Custom model loading has been simplified now with PyTorch Hub in PR #1677 🚀

Custom Models

This example loads a custom 20-class VOC-trained YOLOv5s model 'yolov5s_voc_best.pt' with PyTorch Hub.

model = torch.hub.load('ultralytics/yolov5', 'custom', path_or_model='yolov5s_voc_best.pt')

model = model.autoshape()  # for PIL/cv2/np inputs and NMS

Where can I see the code for the results methods offered through PyTorch Hub? e.g. results.print(), results.save(), etc.

@glenn-jocher Thank you for your prompt reply, and your tireless efforts!

I want to know how to show the results with OpenCV's cv2.imshow.

Now these results work well, as follows

results.print() # print results to screen

results.show() # display results

results.save() # save as results1.jpg, results2.jpg... etc.

But I want to know how to

cv2.imshow("Results", ????)

@Lifeng1129 I've heard this request before, so I've created and merged a new PR #1897 to add this capability. To receive this update you'll need to force_reload your pytorch hub cache:

model = torch.hub.load('ultralytics/yolov5', 'yolov5s', pretrained=True, force_reload=True)

Then you can use the new results.render() method, which returns a list of np arrays representing the original images annotated with the predicted bounding boxes. Note that using these images with cv2 requires an RGB-to-BGR conversion, e.g.:

results = model(imgs)
im_list = results.render()
cv2.imshow('Results', im_list[0][..., ::-1])  # show image 0 with RGB to BGR conversion
cv2.waitKey(0)  # keep the window open until a key is pressed

@glenn-jocher, loading the model using torch.hub is a great functionality. Nevertheless, I am trying to deploy the custom trained model in an isolated environment and it's being weird...

I successfully generate the model using torch.hub, save it and load it again in the same py script (test.py):

However, when I try to load the model from the previously saved file in a second py script (test2.py), it fails:

Both scripts are in the same location:

What's the best way to do this?

Thanks in advance

@jmanuelnavarro hub does not need network connectivity. Load the model with your first method as that's working.

Hello! How can I save results to a folder?

@dan0nchik that's a good question. There's no capability for this currently. It would be nice to have something like results.save('path/to/dir') right?

@dan0nchik I've implemented your feature idea in PR #2179. You can now pass a directory to save results to:

results.save() # save to current directory

results.save('path/to/dir')  # save to specific directory

Great! Thank you very much!

Hello again

Can you please add a flag or something to the display function so that the original picture names are kept when saving?

For example:

results.save(save_orig_names=True)  # save as results_zidane.jpg, results_bus.jpg... etc.

I've tried to implement that, but I couldn't test it :(

@dan0nchik yes that's a good point. I've thought of implementing this by default for use cases that allow it, i.e. when a file, URL, or PIL object is passed directly to the model. For other cases, such as when cv2 or torch images are passed in, this is not possible.

If you have some work started down this path perhaps you could submit a PR and I could review there?

@dan0nchik BTW, the main PyTorch Hub functionality is done with the autoShape() module in common.py (line 168 in 404749a), which generates Detections() class results, also in common.py.

You would want to modify this line in particular (line 263 in 404749a), i.e. fname = .../self.fnames[i] if self.fnames else .../results{i}.jpg
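The naming rule suggested there can be sketched as a standalone function, assuming fnames is a list of original file names or URLs (empty or missing when the inputs were cv2 arrays or tensors). result_path is a hypothetical helper, not the actual code in common.py.

```python
from pathlib import Path


def result_path(save_dir, i, fnames=None):
    """Output path for image i: keep the original file name when one is known,
    otherwise fall back to results{i}.jpg."""
    if fnames and i < len(fnames) and fnames[i]:
        return Path(save_dir) / Path(fnames[i]).name  # also strips URL prefixes
    return Path(save_dir) / f'results{i}.jpg'


print(result_path('runs/hub', 0, ['zidane.jpg', 'bus.jpg']))  # keeps the original name
print(result_path('runs/hub', 1))  # no names known, falls back to results1.jpg
```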


@glenn-jocher Yes, I've created PR #2194 and implemented that, but haven't tested it.

Should the display/save/show functions also display the class names? Right now they only display the bounding boxes. I can see there was something for that which was coded or started here: lines 214 to 217 in c0ffcdf.

@kinoute yes it should, this is on a long TODO list of ours. If you'd like to contribute this feature feel free to submit a PR! A good example starting point is here:

https://github.com/WelkinU/yolov5-fastapi-demo/blob/270a9f6114ce1e54bd047221544178a419eef365/server.py#L71-L89

Hey @glenn-jocher Thanks for putting this awesome work together.

How to do this:

- I want to detect just one particular class, for example 0 (human beings). Is there some interface provided for this? I see that the autoShape class sets classes = None.

class autoShape(nn.Module):
    classes = None  # (optional list) filter by class

    # Then somewhere in NMS we use
    nms(self.classes)

Thanks

@jalotra filter inference by class using the classes attribute. To detect only class 0, persons:

model = torch.hub.load('ultralytics/yolov5', 'yolov5s', pretrained=True)

model.classes = [0]  # list of classes to detect

Can you give a demo that uses torch hub to load a model and run inference on an RTSP video?

I use the LoadStreams class to load an RTSP video:

model = torch.hub.load('ultralytics/yolov5', 'yolov5s', pretrained=True)
dataset = LoadStreams(self.source)
for path, img, im0s, vid_cap in dataset:
    results = model(img, size=640)
    results.show()

But I get this error:

ValueError: axes don't match array

I think the error is due to what LoadStreams returns: the first dimension is the batch, while the hub source code expects three-channel images.

@morestart for a fully managed rtsp streaming solution I would use python detect.py --source rtsp://yourstreamhere.

The PyTorch Hub model is a single-batch solution, so you'd have to pair it with a custom streamloader as in your example, except that LoadStreams() builds padded pytorch tensor batches rather than the original image inputs that the hub autoshape models typically handle. The hub model can run inference on torch tensors, however these are assumed to have unknown padding and thus pass through a different inference channel here that skips all postprocessing (i.e. does not return a results object)

(See lines 194 to 196 in 95aefea.)
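If you do keep a custom stream loader, one option consistent with this explanation is to hand the hub model the original frames instead of the padded batch tensor. A sketch, assuming im0s is a list of original OpenCV frames (BGR, height x width x channels) as the variable naming in the question suggests; to_hub_inputs is a hypothetical helper.

```python
import numpy as np


def to_hub_inputs(im0s):
    """Convert original BGR HWC frames to contiguous RGB HWC arrays,
    the per-image input format the hub model's AutoShape wrapper accepts."""
    return [np.ascontiguousarray(im[..., ::-1]) for im in im0s]


# Hypothetical loop, replacing model(img) from the question:
# for path, img, im0s, vid_cap in dataset:
#     results = model(to_hub_inputs(im0s), size=640)
```

Passing the originals also means the returned boxes are in original frame coordinates rather than padded-tensor coordinates.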

Thanks for your reply! So I need to change the LoadStreams channels to 3?

@morestart as I said for a fully managed solution simply use detect.py.

Torch Hub models are intended for integration into your own python projects, they are not intended for use with the detect.py dataloaders.

@glenn-jocher My project uses multiple videos to detect objects. detect.py is easy to use, but it is too dependent on local files to load the model and it's hard to scale. I think using hub to load the model is a simple plan, and it makes my project look better. I will try changing the LoadStreams class so the Hub model can be used for inference.

@glenn-jocher How can I pass a confidence threshold when I'm loading the model from PyTorch Hub?

@debparth see PyTorch Hub Tutorial, it's explained there.


Have you changed the LoadStreams class?

@valerietram88 you can use detect.py, but change the load model part to torch hub

@glenn-jocher How do I know whether inference with the torch hub model is happening on the GPU?

We are sending a list of np arrays, so how do we use the GPU for inference with PyTorch Hub?

@bipinkc19 you can send the model to a cuda device using normal pytorch methods: model.to(device).

YOLOv5 PyTorch Hub models automatically move images to the correct device if needed before inference.

Dear @glenn-jocher ,

How can I use yolov3-tiny weights with hub?

I'm working on a Raspberry Pi and want good prediction speed, and I think using the tiny version I can achieve the optimal FPS.

Thank you.

Regards,

Asim

@asim266 for YOLOv3 models you can use the ultralytics/yolov3 repo. See

https://github.com/ultralytics/yolov3#pytorch-hub

@glenn-jocher when I use the following line of code for yolov3-tiny

model = torch.hub.load('ultralytics/yolov3', 'yolov3-tiny', force_reload=True).autoshape()

it gives me the following error..

Downloading: "https://github.com/ultralytics/yolov3/archive/master.zip" to C:\Users\Asim/.cache\torch\hub\master.zip

Traceback (most recent call last):

File "E:/Face Mask 3Class Yolov5/new_hub.py", line 7, in

model = torch.hub.load('ultralytics/yolov3', 'yolov3-tiny', force_reload=True).autoshape()

File "C:\Users\Asim\anaconda3\lib\site-packages\torch\hub.py", line 339, in load

model = _load_local(repo_or_dir, model, *args, **kwargs)

File "C:\Users\Asim\anaconda3\lib\site-packages\torch\hub.py", line 367, in _load_local

entry = _load_entry_from_hubconf(hub_module, model)

File "C:\Users\Asim\anaconda3\lib\site-packages\torch\hub.py", line 187, in _load_entry_from_hubconf

raise RuntimeError('Cannot find callable {} in hubconf'.format(model))

RuntimeError: Cannot find callable yolov3-tiny in hubconf

@glenn-jocher when I use the following line of code :

model = torch.hub.load('ultralytics/yolov3', 'yolov3_tiny', pretrained=True, force_reload=True).autoshape()

It gives me error :

Traceback (most recent call last):

File "C:\Users\Asim/.cache\torch\hub\ultralytics_yolov3_master\hubconf.py", line 37, in create

attempt_download(fname) # download if not found locally

File "C:\Users\Asim/.cache\torch\hub\ultralytics_yolov3_master\utils\google_utils.py", line 30, in attempt_download

tag = subprocess.check_output('git tag', shell=True).decode().split()[-1]

IndexError: list index out of range

The above exception was the direct cause of the following exception:

Traceback (most recent call last):

File "C:/Users/Asim/Desktop/Free Lance/New folder/camera-live-streaming/app.py", line 9, in

model = torch.hub.load('ultralytics/yolov3', 'yolov3_tiny', pretrained=True, force_reload=True).autoshape()

File "C:\Users\Asim\anaconda3\lib\site-packages\torch\hub.py", line 339, in load

model = _load_local(repo_or_dir, model, *args, **kwargs)

File "C:\Users\Asim\anaconda3\lib\site-packages\torch\hub.py", line 368, in _load_local

model = entry(*args, **kwargs)

File "C:\Users\Asim/.cache\torch\hub\ultralytics_yolov3_master\hubconf.py", line 93, in yolov3_tiny

return create('yolov3-tiny', pretrained, channels, classes, autoshape)

File "C:\Users\Asim/.cache\torch\hub\ultralytics_yolov3_master\hubconf.py", line 51, in create

raise Exception(s) from e

Exception: Cache maybe be out of date, try force_reload=True. See https://docs.ultralytics.com/yolov5/tutorials/pytorch_hub_model_loading for help.

But if I use this line of code:

model = torch.hub.load('ultralytics/yolov3', 'yolov3_tiny', force_reload=True).autoshape()

It doesn't give any error but also does not detect anything.

@asim266 this is the YOLOv5 PyTorch Hub tutorial. For questions about other repositories I would recommend you raise an issue there.

@glenn-jocher

First of all, thank you for the fantastic community page and help from you guys.

One question:

How do I save the model locally and load it from a local file with torch hub for YOLOv5? This is for cases where there is no internet access.

@bipinkc19 PyTorch Hub commands only need internet access the first time they are run, to download a cached copy of this repo. After this first time the cache is saved to disk and located for use in subsequent calls.

For anyone struggling with the NMS error on the CUDA backend:

RuntimeError: Could not run 'torchvision::nms' with arguments from the 'CUDA' backend.

Update your torchvision to 0.8.1, that should resolve it. 🤟🏼

Is there a way to specify a specific class like ["person", "cat"] to only identify person and cat?

@xcalizorz hi good question! I've updated the tutorial above with details on how to filter inference results by class:

Inference Settings

Inference settings such as confidence threshold, NMS IoU threshold, and classes filter are model attributes, and can be modified by:

model.conf = 0.25 # confidence threshold (0-1)

model.iou = 0.45 # NMS IoU threshold (0-1)

model.classes = None  # (optional list) filter by class, e.g. = [0, 15, 16] for persons, cats and dogs

results = model(imgs, size=320)  # custom inference size

Could you please provide some more details on the Training section?

How does one properly pass the bounding box data and labels here when using a dataloader? Would very much appreciate an example with some skeleton code.

@Lauler see Train Custom Data tutorial to get started with training:

YOLOv5 Tutorials

- Train Custom Data 🚀 RECOMMENDED

- Tips for Best Training Results ☘️ RECOMMENDED

- Weights & Biases Logging 🌟 NEW

- Supervisely Ecosystem 🌟 NEW

- Multi-GPU Training

- PyTorch Hub ⭐ NEW

- ONNX and TorchScript Export

- Test-Time Augmentation (TTA)

- Model Ensembling

- Model Pruning/Sparsity

- Hyperparameter Evolution

- Transfer Learning with Frozen Layers ⭐ NEW

- TensorRT Deployment

@glenn-jocher Thanks. I had already read Train Custom Data. I was under the impression that loading model from torch.hub may have allowed more flexibility in allowing the user to specify their own Dataset similar to the PennFudanDataset in this tutorial: https://pytorch.org/tutorials/intermediate/torchvision_tutorial.html , since you don't expect users to clone the yolov5 repo. But I should still organize the data according to the Train Custom Data-guide?

I think ultimately at some point in the future it is easier if users of object detection libraries can organize their data however they want and create their own train/validation dataloaders (similar to image classification tasks) as opposed to being forced to shuffle image files in folders with specific format requirements.

This is just a general remark (don't take it as negative criticism) about the design API of object detection libraries versus what has become the standard in image classification. Object detection libraries are not very flexible in comparison, and hard to adapt to your own needs or your own validation schemes (cross validation).

I will use the official way as described!

@Lauler you can use Hub models for any purpose including training. Hub models provide nothing else except a model, you must build your own training/inference infrastructure for whatever custom purposes you have.

Fully managed solutions for training, testing, and inference are also available in train.py, test.py, detect.py.
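If your annotations live in your own format, converting them to the layout train.py expects is usually a small step. A minimal sketch, assuming pixel-space (x1, y1, x2, y2) boxes and the one-line-per-object "class x_center y_center width height" label format (normalized to 0-1) described in the Train Custom Data tutorial; to_yolo_line is a hypothetical helper.

```python
def to_yolo_line(class_id, box_xyxy, img_w, img_h):
    """Format one pixel-space (x1, y1, x2, y2) box as a normalized YOLO label line."""
    x1, y1, x2, y2 = box_xyxy
    xc = (x1 + x2) / 2 / img_w  # box center x, normalized by image width
    yc = (y1 + y2) / 2 / img_h  # box center y, normalized by image height
    w = (x2 - x1) / img_w
    h = (y2 - y1) / img_h
    return f'{class_id} {xc:.6f} {yc:.6f} {w:.6f} {h:.6f}'


# One line per object, written to a .txt file with the same stem as the image
print(to_yolo_line(0, (0, 0, 640, 360), 1280, 720))  # 0 0.250000 0.250000 0.500000 0.500000
```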

@rerester hi sorry to see you are having problems. It's hard to determine what your issue may be from the small screenshot you have pasted. If you believe you have a reproducible bug, raise a new issue using the 🐛 Bug Report template, providing screenshots and a minimum reproducible example to help us better understand and diagnose your problem. Thank you!

@glenn-jocher

I get an error "RuntimeError: Attempting to deserialize object on a CUDA device but torch.cuda.is_available() is False. If you are running on a CPU-only machine, please use torch.load with map_location=torch.device('cpu') to map your storages to the CPU." at the 'model = torch.hub.load()' line when I run my custom model (which was trained in a GPU environment) in a CPU environment.

How can I use a GPU-trained custom model (trained with the YOLOv5 git version, not PyTorch Hub) in a CPU environment with PyTorch Hub? (Of course, the custom model works well in a GPU environment with PyTorch Hub.)

@suyong2 backend assignment is handled automatically in YOLOv5 PyTorch Hub models, so if you have a GPU your model will load there, if not it will load on CPU.

If this does not answer your question and you believe you have a reproducible issue, we suggest you raise a new issue using the 🐛 Bug Report template, providing screenshots and a minimum reproducible example to help us better understand and diagnose your problem. Thank you!

@glenn-jocher, does PyTorch Hub support video inference?

@pravastacaraka PyTorch Hub can support any inference as long as you build a dataloader for it.

For a fully managed inference solution see detect.py.
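A minimal sketch of such a dataloader, assuming OpenCV is used to read frames: it reads a video file or stream, converts frames to RGB, and groups them into fixed-size batches that can be passed to the hub model as a list. video_frames and batched are hypothetical helpers.

```python
from itertools import islice


def batched(iterable, n):
    """Yield lists of up to n consecutive items from iterable."""
    it = iter(iterable)
    while True:
        batch = list(islice(it, n))
        if not batch:
            return
        yield batch


def video_frames(path):
    """Yield RGB frames from a video file or stream URL using OpenCV."""
    import cv2  # imported here so batched() works without OpenCV installed

    cap = cv2.VideoCapture(path)
    try:
        while True:
            ok, frame = cap.read()
            if not ok:
                break
            yield frame[..., ::-1]  # BGR to RGB
    finally:
        cap.release()


# Hypothetical usage with a hub model:
# for frames in batched(video_frames('video.mp4'), 8):
#     results = model(frames)
#     results.print()
```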

@glenn-jocher Thanks for the tutorial.

I don't know why, but the last 3 lines of code don't work.

@xinxin342 the last 3 lines work correctly.

In a Python script, outputs are not displayed automatically. If you want to see outputs, use the print() function.

@glenn-jocher

Thank you for solving my question so quickly.

Hello @glenn-jocher, can I load YOLOv5 from my local directory with torch.hub.load("mydir/yolov5/", "yolov5s")? I tried it but got the error "too many values to unpack (expected 2)".

@rullisubekti your code demonstrates incorrect usage. For correct usage read the tutorial above.
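The "too many values to unpack (expected 2)" error is consistent with torch.hub.load treating its first argument as a GitHub "owner/repo[:ref]" string by default. The sketch below is a simplified, hypothetical re-creation of that parsing step (split_github_repo is an illustrative name, not a PyTorch function), showing why a local path such as "mydir/yolov5/" cannot be split into exactly an owner and a repo. Recent PyTorch versions accept source='local' for loading from a local clone, e.g. torch.hub.load('mydir/yolov5', 'yolov5s', source='local'); check the torch.hub documentation for your installed version.

```python
def split_github_repo(repo_info):
    """Simplified illustration of splitting a GitHub-style 'owner/repo[:ref]' string.

    Hypothetical helper for illustration only; it is not PyTorch's implementation.
    """
    if ":" in repo_info:
        repo_info, ref = repo_info.split(":", 1)  # optional branch or tag after ':'
    else:
        ref = None  # no ref given; the real hub falls back to a default branch
    # Exactly one '/' is expected; 'mydir/yolov5/' splits into 3 parts and raises ValueError
    owner, name = repo_info.split("/")
    return owner, name, ref
```

For example, split_github_repo("ultralytics/yolov5") returns ("ultralytics", "yolov5", None), while split_github_repo("mydir/yolov5/") raises a ValueError with the same "too many values to unpack" message reported above.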

Is there an easy way to run inference with my own model? When I try to follow the same steps, I run into all sorts of problems. Using a custom .pt file doesn't work out of the box. I have been trying to use the autoshape wrapper provided in common.py, but I get the following error (by the way, there is a bug in the autoshape class: self.stride is not defined):

RuntimeError: Sizes of tensors must match except in dimension 1. Got 18 and 17 in dimension 2 (The offending index is 1)

@PascalHbr loading custom YOLOv5 models in PyTorch Hub is very easy, see the 'Custom Models' section in the above tutorial.

@glenn-jocher how can I run training with PyTorch Hub? From your instructions:

Training

To load a YOLOv5 model for training rather than inference, set autoshape=False. To load a model with randomly initialized weights (to train from scratch), use pretrained=False.

model = torch.hub.load('ultralytics/yolov5', 'yolov5s', autoshape=False)  # load pretrained
model = torch.hub.load('ultralytics/yolov5', 'yolov5s', autoshape=False, pretrained=False)  # load scratch

Then what should I do?

@pravastacaraka to train YOLOv5 models see Train Custom Data tutorial:

YOLOv5 Tutorials

- Train Custom Data 🚀 RECOMMENDED

- Tips for Best Training Results ☘️ RECOMMENDED

- Weights & Biases Logging 🌟 NEW

- Supervisely Ecosystem 🌟 NEW

- Multi-GPU Training

- PyTorch Hub ⭐ NEW

- ONNX and TorchScript Export

- Test-Time Augmentation (TTA)

- Model Ensembling

- Model Pruning/Sparsity

- Hyperparameter Evolution

- Transfer Learning with Frozen Layers ⭐ NEW

- TensorRT Deployment

@glenn-jocher That answer didn't help me. I will clarify my question.

Can I train on my dataset using PyTorch Hub instead of train.py? I ask because the information you provided above includes a Training section:

Training

To load a YOLOv5 model for training rather than inference, set autoshape=False. To load a model with randomly initialized weights (to train from scratch), use pretrained=False.

model = torch.hub.load('ultralytics/yolov5', 'yolov5s', autoshape=False)  # load pretrained
model = torch.hub.load('ultralytics/yolov5', 'yolov5s', autoshape=False, pretrained=False)  # load scratch

I thought I could use PyTorch Hub to train on my dataset. If so, how do I use this model with my dataset? Is it like this?

results = model('path/to/my-dataset')

I think one has to implement one's own trainer(); correct me if I'm wrong, @glenn-jocher.

So the conclusion is that we can't do training using the PyTorch hub, right?

Training

To load a YOLOv5 model for training rather than inference, set autoshape=False. To load a model with randomly initialized weights (to train from scratch), use pretrained=False.

model = torch.hub.load('ultralytics/yolov5', 'yolov5s', autoshape=False)  # load pretrained
model = torch.hub.load('ultralytics/yolov5', 'yolov5s', autoshape=False, pretrained=False)  # load scratch

Then what about the information provided above? Is it meaningless? @dimzog @glenn-jocher

@dimzog @pravastacaraka PyTorch Hub provides a pathway for defining models, nothing more. What you do with that model is up to you, though you are required to create the functionality you want.

As I said, for a fully managed training solution I would recommend train.py.

When using the model loaded by torch.hub, it seems that convenience functions such as print() and pandas() only work when the inputs are not tensors. If the input is a tensor, the output is a list. My questions are:

- What does this list mean, and how can I use it?

- Is there any way to use pandas() if the input data are tensors?

Thank you!

@Dylan-H-Wang yes, the current intended behavior for torch inputs is simply for the AutoShape() wrapper to act as a pass-through. No preprocessing, postprocessing, or NMS is performed, and no results object is generated. This is the default use case in train.py, test.py, detect.py, and yolo.py.
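Because tensor inputs bypass AutoShape's postprocessing, the returned list holds raw predictions that still need confidence filtering and NMS. The sketch below is a simplified, pure-Python stand-in for that step, not YOLOv5's own code (YOLOv5 ships its own non_max_suppression in utils/general.py). It assumes each raw row is laid out as [x_center, y_center, width, height, objectness, class scores...]; verify that layout against your YOLOv5 version. Boxes are converted to corner format, each box is scored as objectness times its best class score, rows below conf_thres are dropped, and greedy per-class NMS removes overlapping duplicates. All function names are hypothetical.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]


def xywh_to_xyxy(x: float, y: float, w: float, h: float) -> Box:
    """Convert a center-format box to corner format (xmin, ymin, xmax, ymax)."""
    return (x - w / 2, y - h / 2, x + w / 2, y + h / 2)


def box_iou(a: Box, b: Box) -> float:
    """Intersection over union of two corner-format boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def postprocess(rows: Sequence[Sequence[float]], conf_thres: float = 0.25,
                iou_thres: float = 0.45) -> List[tuple]:
    """Turn assumed raw rows [x, y, w, h, obj, cls_0, ..., cls_n] into
    (xmin, ymin, xmax, ymax, confidence, class) detections."""
    candidates = []
    for row in rows:
        x, y, w, h, obj = row[:5]
        cls_scores = row[5:]
        # Best class for this row and its combined confidence
        cls = max(range(len(cls_scores)), key=lambda k: cls_scores[k])
        conf = obj * cls_scores[cls]
        if conf >= conf_thres:
            candidates.append((*xywh_to_xyxy(x, y, w, h), conf, cls))

    # Greedy NMS: visit boxes by descending confidence and keep a box only if it
    # does not overlap an already-kept box of the same class by more than iou_thres
    candidates.sort(key=lambda d: d[4], reverse=True)
    kept = []
    for det in candidates:
        if all(k[5] != det[5] or box_iou(k[:4], det[:4]) <= iou_thres for k in kept):
            kept.append(det)
    return kept
```

The output rows follow the [xmin, ymin, xmax, ymax, confidence, class] column order shown in the tutorial's pandas output, so they can be loaded into a DataFrame with those column names. With a real model you would first convert one image's raw predictions to nested lists (for example with .tolist() on a (num_predictions, 5 + num_classes) tensor), assuming that output shape.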


Hello @glenn-jocher, I have an issue: when I run detect.py and when I load the model using torch.hub.load, with the same sample data and the same weights file, I get different detection results and different xyxy values. Why? Thank you!

@rullisubekti these two topics are separate. detect.py is a fully managed inference solution that does not use the AutoShape() wrapper. YOLOv5 PyTorch Hub models are intended for your own custom Python workflows and use the AutoShape() wrapper.

Hello everyone, I am stuck here; can anyone give me some hints?

I tried to load a custom model and get the prediction boxes as shown in the example.

This is what I have so far. It reports the number of detections in each image but doesn't show xmin, ymin, xmax and ymax.

import cv2
import torch
from PIL import Image
import glob

# model
path = "./"
# model = torch.load('./last.pt')
model = torch.hub.load('ultralytics/yolov5', 'custom', path='./best.pt')  # custom model
CUDA_VISIBLE_DEVICES = "0"
model.conf = 0.25  # confidence threshold (0-1)
model.iou = 0.45  # NMS IoU threshold (0-1)

dataset_name = 'test_1'
test_img_path = './' + dataset_name + '/*.png'
test_imgs = sorted(glob.glob(test_img_path))
print(len(test_imgs))

for img in test_imgs:
    # print(img)
    # file_name = img.split('/')[-1]
    image = cv2.imread(img)
    img1 = Image.open(img)
    # print(img)
    img2 = cv2.imread(img)[:, :, ::-1]
    imgs = [img2]
    # print(img2)
    results = model(imgs, size=640)
    results.print()
    results.xyxy[0]
    results.pandas().xyxy[0]

This is the result:

5

image 1/1: 1023x1920 4 yess

Speed: 15.6ms pre-process, 27.5ms inference, 1.3ms NMS per image at shape (1, 3, 352, 640)

image 1/1: 1023x1920 6 yess

.........

Any help would be appreciated.

Thanks a lot.

@Laudarisd in Python, if you want to see the contents of a variable you might want to print its value.
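In a script, a bare expression such as results.xyxy[0] is evaluated and then discarded, so nothing appears; wrapping it in print(), e.g. print(results.xyxy[0]) or print(results.pandas().xyxy[0]), shows the boxes. To work with the values directly you can iterate over the rows. The sketch below uses plain lists so it runs without YOLOv5; with a real result you would pass something like results.xyxy[0].tolist() and model.names. The helper name format_detections is hypothetical, and the row layout [xmin, ymin, xmax, ymax, confidence, class] is assumed from the tutorial's pandas columns.

```python
def format_detections(rows, names):
    """Return one readable line per detection row.

    Each row is assumed to be [xmin, ymin, xmax, ymax, confidence, class],
    the column order shown in the tutorial's pandas output.
    """
    lines = []
    for xmin, ymin, xmax, ymax, conf, cls in rows:
        name = names[int(cls)]  # names maps class index -> label (list or dict)
        lines.append(f"{name} {conf:.2f} ({xmin:.1f}, {ymin:.1f}, {xmax:.1f}, {ymax:.1f})")
    return lines


# Example with values taken from the tutorial's zidane.jpg output
rows = [[749.5, 43.5, 1148.0, 704.5, 0.874023, 0],
        [433.5, 433.5, 517.5, 714.5, 0.687988, 27]]
for line in format_detections(rows, {0: "person", 27: "tie"}):
    print(line)
```

This prints "person 0.87 (749.5, 43.5, 1148.0, 704.5)" followed by "tie 0.69 (433.5, 433.5, 517.5, 714.5)".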

The first simple example doesn't seem to work...

(env) zxcv > cat wtf.py

#!/usr/bin/env python3

import torch

# Model

model = torch.hub.load('ultralytics/yolov5', 'yolov5s')

# Image

img = 'https://ultralytics.com/images/zidane.jpg'

# Inference

results = model(img)

results.print()

(env) zxcv > ./wtf.py

Downloading: "https://github.com/ultralytics/yolov5/archive/master.zip" to /home/dllu/.cache/torch/hub/master.zip

Fusing layers...

Model Summary: 224 layers, 7266973 parameters, 0 gradients

Adding AutoShape...

YOLOv5 🚀 2021-5-25 torch 1.9.0.dev20210525+cu111 CUDA:0 (NVIDIA GeForce RTX 3090, 24234.625MB)

/home/dllu/zxcv/env/lib/python3.9/site-packages/torch/nn/functional.py:718: UserWarning: Named tensors and all their associated APIs are an experimental feature and subject to change. Please do not use them for anything important until they are released as stable. (Triggered internally at /pytorch/c10/core/TensorImpl.h:1260.)

return torch.max_pool2d(input, kernel_size, stride, padding, dilation, ceil_mode)

Traceback (most recent call last):

File "/home/dllu/zxcv/./wtf.py", line 13, in <module>

results.print()

File "/home/dllu/.cache/torch/hub/ultralytics_yolov5_master/models/common.py", line 344, in print

self.display(pprint=True) # print results

File "/home/dllu/.cache/torch/hub/ultralytics_yolov5_master/models/common.py", line 322, in display

str += f"{n} {self.names[int(c)]}{'s' * (n > 1)}, " # add to string

IndexError: list index out of range

EDIT: I deleted line 322 of .cache/torch/hub/ultralytics_yolov5_master/models/common.py and now it works. It seems like a bug, though.

@dllu both examples work correctly, just checked:

How to create a Minimal, Reproducible Example

When asking a question, people will be better able to provide help if you provide code that they can easily understand and use to reproduce the problem. This is referred to by community members as creating a minimum reproducible example. Your code that reproduces the problem should be:

- ✅ Minimal – Use as little code as possible that still produces the same problem

- ✅ Complete – Provide all parts someone else needs to reproduce your problem in the question itself

- ✅ Reproducible – Test the code you're about to provide to make sure it reproduces the problem

In addition to the above requirements, for Ultralytics to provide assistance your code should be:

- ✅ Current – Verify that your code is up-to-date with current GitHub master, and if necessary git pull or git clone a new copy to ensure your problem has not already been resolved by previous commits.

- ✅ Unmodified – Your problem must be reproducible without any modifications to the codebase in this repository. Ultralytics does not provide support for custom code ⚠️.

If you believe your problem meets all of the above criteria, please close this issue and raise a new one using the 🐛 Bug Report template and providing a minimum reproducible example to help us better understand and diagnose your problem.

Thank you! 😃

Hi @glenn-jocher, upon further debugging it seems to be a bug in PyTorch. Very strange; I'll dig a bit further. pytorch/pytorch#58959

@dllu Actually, I also encountered the same problem while running inference in Docker. The strange thing is that there is no problem when I run the detect code locally. My local PC runs Ubuntu 20.04. I guess this is an issue with the Python version, but I am not sure.

Hi @glenn-jocher, regarding "in Python if you want to see the contents of a variable you might want to print its value":

Could you give me some hints on how to visualize variables?

Thank you.

x = 1

print(x)

Can we run inference on a video with YOLOv5 in PyTorch Hub?

If so, can you show a brief example of that?

#open video

vid1 = cv2.VideoCapture('/path/to/video.mp4')

#Inference

results = model(vid1, size=640)

@lonnylundsten YOLOv5 PyTorch Hub inference is meant for integration into your own python workflows.

For a fully managed inference solution you can use detect.py.
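The snippet in the question passes the cv2.VideoCapture object itself to the model, whereas the tutorial's examples pass images. If you want to stay in your own Python workflow, one option is to read frames yourself and call the model per frame. This is a minimal sketch assuming opencv-python is installed: read_video_frames yields frames from cv2.VideoCapture, converted from BGR to RGB as in the tutorial's OpenCV example, and detect_on_frames calls the model on every stride-th frame. Both function names and the stride parameter are hypothetical; the model is passed in, so any callable accepting model(image, size=...) works.

```python
def detect_on_frames(model, frames, size=640, stride=1):
    """Call `model` on every `stride`-th frame; return a list of (frame_index, result)."""
    if stride < 1:
        raise ValueError("stride must be >= 1")
    outputs = []
    for i, frame in enumerate(frames):
        if i % stride == 0:
            outputs.append((i, model(frame, size=size)))
    return outputs


def read_video_frames(path):
    """Yield RGB frames from a video file using OpenCV (requires opencv-python)."""
    import cv2  # imported lazily so detect_on_frames can be used without OpenCV

    cap = cv2.VideoCapture(path)
    try:
        while True:
            ok, frame = cap.read()  # ok is False at end of stream or on read error
            if not ok:
                break
            yield frame[..., ::-1]  # BGR to RGB
    finally:
        cap.release()
```

For example, with model = torch.hub.load('ultralytics/yolov5', 'yolov5s'), calling detect_on_frames(model, read_video_frames('/path/to/video.mp4'), stride=5) runs inference on every fifth frame. Because every result is kept in memory, long videos may call for processing or discarding results inside the loop instead.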

I used the command given in the documentation to load a custom model:

model = torch.hub.load('ultralytics/yolov5', 'custom', path='/content/yolov5/runs/train/yolov5s_results3/weights/best.pt') # default

But I got the following error:

ImportError: cannot import name 'save_one_box' from 'utils.general' (/content/yolov5/utils/general.py)

I checked general.py, and the function is defined there.

Please help.

@jmayank23 👋 hi, thanks for letting us know about this problem with YOLOv5 🚀. We've created a few short guidelines below to help users provide what we need in order to get started investigating a possible problem.

How to create a Minimal, Reproducible Example

When asking a question, people will be better able to provide help if you provide code that they can easily understand and use to reproduce the problem. This is referred to by community members as creating a minimum reproducible example. Your code that reproduces the problem should be:

- ✅ Minimal – Use as little code as possible that still produces the same problem

- ✅ Complete – Provide all parts someone else needs to reproduce your problem in the question itself

- ✅ Reproducible – Test the code you're about to provide to make sure it reproduces the problem

In addition to the above requirements, for Ultralytics to provide assistance your code should be:

- ✅ Current – Verify that your code is up-to-date with current GitHub master, and if necessary git pull or git clone a new copy to ensure your problem has not already been resolved by previous commits.

- ✅ Unmodified – Your problem must be reproducible without any modifications to the codebase in this repository. Ultralytics does not provide support for custom code ⚠️.

If you believe your problem meets all of the above criteria, please close this issue and raise a new one using the 🐛 Bug Report template and providing a minimum reproducible example to help us better understand and diagnose your problem.

Thank you! 😃

Hello,

I want to train the YOLOv5 model from scratch (not using the pretrained weights) on my own dataset and classes for a task of Face Mask Detection.

I have seen that in order to train I should load:

model = torch.hub.load('ultralytics/yolov5', 'yolov5s', autoshape=False, pretrained=False) # load scratch

However, how do I actually train it? Can I use it as one layer in my model?

Thank you,

Almog

@almog-gueta see Train Custom Data tutorial:

YOLOv5 Tutorials

- Train Custom Data 🚀 RECOMMENDED

- Tips for Best Training Results ☘️ RECOMMENDED

- Weights & Biases Logging 🌟 NEW

- Supervisely Ecosystem 🌟 NEW

- Multi-GPU Training

- PyTorch Hub ⭐ NEW

- TorchScript, ONNX, CoreML Export 🚀

- Test-Time Augmentation (TTA)

- Model Ensembling

- Model Pruning/Sparsity

- Hyperparameter Evolution

- Transfer Learning with Frozen Layers ⭐ NEW

- TensorRT Deployment