HYPERPARAMETER EVOLUTION

glenn-jocher opened this issue · comments

Training hyperparameters in this repo are defined in train.py, including augmentation settings:

Lines 35 to 54 in df4f25e

We began with darknet defaults before evolving the values using the result of our hyp evolution code:

python3 train.py --data data/coco.data --weights '' --img-size 320 --epochs 1 --batch-size 64 --accumulate 1 --evolve

The process is simple: for each new generation, the prior generation with the highest fitness (out of all previous generations) is selected for mutation. All parameters are mutated simultaneously based on a normal distribution with about 20% 1-sigma:

Lines 390 to 396 in df4f25e
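A minimal sketch of the mutation step described above, assuming each value is multiplied by a gain drawn from a normal distribution centred on 1 with a 20% standard deviation. The function name `mutate`, the `sigma` parameter and the three sample values are illustrative and are not copied from train.py.

```python
import random

def mutate(hyp, sigma=0.2, rng=None):
    """Return a copy of hyp with every value scaled by a gain drawn from N(1, sigma)."""
    rng = rng or random.Random()
    # All parameters are mutated at once, each with its own random gain.
    return {k: v * rng.gauss(1.0, sigma) for k, v in hyp.items()}

parent = {'lr0': 0.002324, 'momentum': 0.97, 'weight_decay': 0.0004569}
child = mutate(parent, rng=random.Random(0))
for k in parent:
    print(f"{k}: {parent[k]:.6g} -> {child[k]:.6g}")
```

A real implementation would probably also clip the mutated values to valid ranges (momentum below 1, for example); that step is left out here.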

Fitness is defined as a weighted mAP and F1 combination at the end of epoch 0, under the assumption that better epoch 0 results correlate to better final results, which may or may not be true.

Lines 605 to 608 in bd92457
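As a rough illustration of a weighted mAP and F1 fitness, the sketch below combines the two scores and picks the fitter of two candidates. The 0.5/0.5 weights and the candidate scores are placeholders chosen for the example; the thread does not state the weights used in the repo.

```python
def fitness(map_score, f1_score, w_map=0.5, w_f1=0.5):
    """Weighted combination of mAP and F1; higher is better.

    The default weights are placeholders, not the values used in the repo.
    """
    return w_map * map_score + w_f1 * f1_score

# Hypothetical (mAP, F1) results after epoch 0 for two generations.
candidates = {'gen_a': (0.10, 0.12), 'gen_b': (0.14, 0.09)}
best = max(candidates, key=lambda name: fitness(*candidates[name]))
print(best, round(fitness(*candidates[best]), 4))
```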

An example snapshot of the results is here. Fitness is on the y axis (higher is better).

from utils.utils import *; plot_evolution_results(hyp)

I had this problem: 'shape '[16, 3, 85, 13, 13]' is invalid for input of size 56784'. The problem is located in the code here: 'p = p.view(bs, self.na, self.nc + 5, self.ny, self.nx).permute(0, 1, 3, 4, 2).contiguous() # prediction'. I'm a beginner and I'd appreciate any advice.

@YRunner this issue is dedicated only to hyperparameter evolution. Is your post in reference to this topic?

@Chida15 haha, yes, well good job, you've run two different models here, the orange points, and it's showing you the best result highlighted in blue. For this to be effective you want to evolve hundreds of mutations. So I would change the for loop here to at least 200 generations.

Line 371 in e77ca7e

ok, thanks a lot!

Hi. I am trying to plot the evolution results but get an error that hyp is not defined. I am using the latest version of the repo. Any hints? Thanks.

I solved it.

@sanazss ah yes, you need to define hyp before running: from utils.utils import *; plot_evolution_results(hyp)

momentum=0.9
decay=0.0005
angle=0
saturation = 1.5
exposure = 1.5
hue=.1
learning_rate=0.001
burn_in=1000
max_batches = 120200
policy=steps
steps=70000,100000
scales=.1,.1

I see these params in the cfg file. I would like to use the same parameters . In such case, how would the updated hyp be?

hyp = {'giou': 1.582,  # giou loss gain
       'xy': 4.688,  # xy loss gain
       'wh': 0.1857,  # wh loss gain
       'cls': 27.76,  # cls loss gain (CE should be around ~1.0)
       'cls_pw': 1.446,  # cls BCELoss positive_weight
       'obj': 21.35,  # obj loss gain
       'obj_pw': 3.941,  # obj BCELoss positive_weight
       'iou_t': 0.2635,  # iou training threshold
       'lr0': 0.002324,  # initial learning rate
       'lrf': -4.,  # final LambdaLR learning rate = lr0 * (10 ** lrf)
       'momentum': 0.97,  # SGD momentum
       'weight_decay': 0.0004569,  # optimizer weight decay
       'hsv_s': 0.5703,  # image HSV-Saturation augmentation (fraction)
       'hsv_v': 0.3174,  # image HSV-Value augmentation (fraction)
       'degrees': 1.113,  # image rotation (+/- deg)
       'translate': 0.06797,  # image translation (+/- fraction)
       'scale': 0.1059,  # image scale (+/- gain)
       'shear': 0.5768}  # image shear (+/- deg)

@varghesealex90 the hyp dictionary is pretty self-explanatory. In many cases the key names are the same as what you have above, i.e. hyp['momentum'] etc.

The parameters we do not use are angle, hue, burn_in. The LR scheduler hyps are set to reduce at 80% and 90% of total epochs with scales of 0.1 and 0.1 already.

In any case, the hyps have been evolved to their present state because they improve performance over what you have, so I would not change them unless you are experimenting.
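To make the "reduce at 80% and 90% of total epochs with scales of 0.1 and 0.1" description concrete, here is a small sketch of such a step schedule. The function name `lr_at_epoch` and its parameters are hypothetical and only show the shape of the schedule; they are not taken from train.py.

```python
def lr_at_epoch(epoch, total_epochs, lr0, milestones=(0.8, 0.9), gamma=0.1):
    """Step schedule: multiply lr0 by gamma once for each milestone already reached."""
    drops = sum(1 for m in milestones if epoch >= round(m * total_epochs))
    return lr0 * gamma ** drops

# Learning rate just before and after each drop in a 100-epoch run.
for e in (0, 79, 80, 90, 99):
    print(e, lr_at_epoch(e, total_epochs=100, lr0=0.001))
```

For comparison, the darknet cfg values quoted above (steps=70000,100000 with max_batches = 120200) place the drops at roughly 58% and 83% of training.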

I have a problem here ,

p = p.view(bs, self.na, self.nc + 5, self.ny, self.nx).permute(0, 1, 3, 4, 2).contiguous() # prediction

RuntimeError: shape '[6, 3, 10, 13, 13]' is invalid for input of size 18252

I still can't fix it, can anyone help me?

@DanielChungYi is your error reproducible in a new git clone?

@glenn-jocher I did clone the latest version of the code, but the problem still there. Please help me.

@DanielChungYi ok I see. It's likely an issue with your custom dataset, as we can not reproduce this on the coco data. Unless you can supply a minimum reproducible example on the coco dataset there is not much we can do.

@DanielChungYi also check your cfg and your number of classes, as you might have a mismatch.
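One way to check for such a mismatch is to work backwards from the numbers in the error message. The sketch below assumes the usual darknet cfg convention that the convolution feeding each [yolo] layer has filters = na * (nc + 5); the helper name `channels_per_anchor` is made up for this example.

```python
def channels_per_anchor(input_size, bs, na, ny, nx):
    """Infer how many channels per anchor the preceding layer really outputs."""
    total_channels = input_size / (bs * ny * nx)
    return total_channels / na

# Values taken from the error messages in this thread:
# (requested shape [bs, na, nc + 5, ny, nx], actual input size)
reports = [((16, 3, 85, 13, 13), 56784),
           ((6, 3, 10, 13, 13), 18252),
           ((64, 3, 8, 10, 10), 19200)]
for (bs, na, no, ny, nx), size in reports:
    actual = channels_per_anchor(size, bs, na, ny, nx)
    print(f"yolo layer expects nc + 5 = {no}, conv layer provides {actual:g} per anchor")
```

In the first report the yolo layer expects 85 values per anchor (80 classes) while the convolution provides 7 (2 classes), which is consistent with a cfg in which only one of the two places was edited.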

I am getting the same error too:

p = p.view(bs, self.na, self.nc + 5, self.ny, self.nx).permute(0, 1, 3, 4, 2).contiguous() # prediction

RuntimeError: shape '[64, 3, 8, 10, 10]' is invalid for input of size 19200

Did you solve this problem?

Hi @glenn-jocher Could you explain what "generations" and "mutations" mean? I am a bit confused as I ran my train.py with evolve for 8 epochs (Planning on training with 80 epochs) and there was 1 generation to evolve (ie., in train.py, for _ in range(1)), is that correct way to do? I got the evolve.txt though

@glenn-jocher generations is the number of generations to evolve for. For optimal results I recommend at least 100 generations, preferably 300 or more.

Generations is set at 1 due to a memory leak bug. This example shows COCO evolution code using a bash while loop as a workaround:

while true
do
  python3 train.py --weights '' --prebias --img-size 512 --batch-size 16 --accumulate 4 --evolve --epochs 27 --device 4
done

A mutation is a change to the genome of the offspring, what differentiates it from its parent. This repo uses a form of communal asexual evolution, where the best prior example of all possible ancestors is mutated to create the next offspring.
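The selection rule described here (always mutate the best result seen so far) can be sketched on a toy problem. Everything below is illustrative: `toy_fitness` stands in for "train, then measure fitness", the `history` list plays the role of evolve.txt, and the 20% sigma and 200 generations are only example settings.

```python
import random

def evolve(fitness_fn, start, generations=200, sigma=0.2, seed=0):
    """Mutation-only evolution: every child is a mutation of the best ancestor so far."""
    rng = random.Random(seed)
    history = [(fitness_fn(start), start)]
    for _ in range(generations):
        _, parent = max(history, key=lambda r: r[0])   # best of all prior results
        child = {k: v * rng.gauss(1.0, sigma) for k, v in parent.items()}
        history.append((fitness_fn(child), child))
    return max(history, key=lambda r: r[0]), history

def toy_fitness(h):
    """Toy objective with its peak at lr0=0.01, momentum=0.9 (higher is better)."""
    return -((h['lr0'] - 0.01) ** 2 * 1e4 + (h['momentum'] - 0.9) ** 2)

(best_fit, best_hyp), history = evolve(toy_fitness, {'lr0': 0.002, 'momentum': 0.5})
print(len(history), best_fit, best_hyp)
```

Because the parent is chosen from all previous results, a bad child never becomes a parent, and the best fitness can only stay level or improve from one generation to the next.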

Hi, was the yolov3.pt weight trained using the hyp below and --img-size 320?

hyp = {'giou': 1.2,  # giou loss gain
       'xy': 4.062,  # xy loss gain
       'wh': 0.1845,  # wh loss gain
       'cls': 15.7,  # cls loss gain
       'cls_pw': 3.67,  # cls BCELoss positive_weight
       'obj': 20.0,  # obj loss gain
       'obj_pw': 1.36,  # obj BCELoss positive_weight
       'iou_t': 0.194,  # iou training threshold
       'lr0': 0.00128,  # initial learning rate
       'lrf': -4.,  # final LambdaLR learning rate = lr0 * (10 ** lrf)
       'momentum': 0.95,  # SGD momentum
       'weight_decay': 0.000201,  # optimizer weight decay
       'hsv_s': 0.8,  # image HSV-Saturation augmentation (fraction)
       'hsv_v': 0.388,  # image HSV-Value augmentation (fraction)
       'degrees': 1.2,  # image rotation (+/- deg)
       'translate': 0.119,  # image translation (+/- fraction)
       'scale': 0.0589,  # image scale (+/- gain)
       'shear': 0.401}  # image shear (+/- deg)

THX.

@Walstruzz yolov3.pt is yolov3.weights, the original darknet weights, converted to pytorch format.

Hi,

Thank you for your post.

I am trying to find good hyperparameters for my own dataset, but after two repetitions I got the error below when running this command:

python3 train.py --data data/my_own.data --img-size 320 --epochs 1 --batch-size 16 --accumulate 4 --evolve --weights ''

train.py:463: RuntimeWarning: invalid value encountered in true_divide

x = (x[:n] * w.reshape(n, 1)).sum(0) / w.sum() # new parent

WARNING: non-finite loss, ending training tensor([nan, nan, nan, nan], device='cuda:0')

0%| | 0/56 [00:02<?, ?it/s]

shear translate giou hsv_s fl_gamma momentum cls scale lr0 degrees hsv_v iou_t weight_decay obj obj_pw hsv_h lrf cls_pw

nan nan nan nan nan nan nan nan nan nan nan nan nan nan 1 nan nan 1

Evolved fitness: 0 0 0 0 0 0 0

shear: nan

translate: nan

giou: nan

hsv_s: nan

fl_gamma: nan

momentum: nan

cls: nan

scale: nan

lr0: nan

degrees: nan

hsv_v: nan

iou_t: nan

weight_decay: nan

obj: nan

obj_pw: 1

hsv_h: nan

lrf: nan

cls_pw: 1

workspace/pytorch/yolov3/utils/datasets.py:711: RuntimeWarning: invalid value encountered in greater

i = (w > 4) & (h > 4) & (area / (area0 + 1e-16) > 0.1) & (ar < 10)

workspace/pytorch/yolov3/utils/datasets.py:711: RuntimeWarning: invalid value encountered in less

i = (w > 4) & (h > 4) & (area / (area0 + 1e-16) > 0.1) & (ar < 10)

I think the problem described above occurs when I pass --weights ''. (I also ran

python3 train.py --data data/coco_64img.data --img-size 320 --epochs 1 --batch-size 16 --accumulate 1 --evolve --weights ''

and it did not work either. However, it worked when I used pretrained weights.)

Should I first train on my own dataset to get my own weights, and then evolve the hyperparameters with those weights? Or is this a problem with my dataset? (I already finished training 1000 epochs on my own dataset from scratch.)

@Alchemist77 you can start your training from prior weights or not, it's up to you. Prior weights generally give you results much sooner, though we get the best results on COCO when training from scratch. If you see this error I would simply restart the evolution. It picks up where it left off, reading evolve.txt to select the best parent, so no work is lost.
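The "invalid value encountered in true_divide" warning in the log above comes from the weighted-parent line, which divides by w.sum(). One plausible cause, not confirmed in this thread, is that the weights sum to zero (for example when every candidate has the same fitness), giving 0 / 0. The sketch below shows a guarded weighted mean in plain Python; `weighted_parent` and its fallback rule are assumptions made for illustration, not the repo's code.

```python
def weighted_parent(rows, weights):
    """Weighted mean of candidate rows; falls back to the first row if all weights are zero."""
    total = sum(weights)
    if total <= 0:               # e.g. every candidate has the same fitness
        return list(rows[0])     # avoids the 0 / 0 that would produce NaN
    n_cols = len(rows[0])
    return [sum(w * r[c] for r, w in zip(rows, weights)) / total
            for c in range(n_cols)]

rows = [[0.9, 0.001], [0.95, 0.002]]
print(weighted_parent(rows, [1.0, 3.0]))   # pulled toward the second row
print(weighted_parent(rows, [0.0, 0.0]))   # degenerate case: first row returned
```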

Excellent job on this repo and the evolution algorithm! I've tried it on my weights (transfer learning from yolov3.pt on my dataset of 1200/400 pics, then converted to darknet format and back in order to reset epochs before training) and I noticed that some of the parameters are a bit off both on the graphs and in the print_mutation() output. I'm not sure, but it looks as if, for instance, lrf and GIoU have switched places in this example. These should be the results from 22 generations, AFAIK. I know I should train for longer, but I'm not sure whether there's a flaw in your algorithm in this regard. What do you think about it? Thanks in advance!

@glenn-jocher How could I reproduce memory leak bug? I could try to find a solution. I tried to increase range in the following line, but that did not result in memory leak.

Line 471 in b87bfa3

@okanlv ideally line 471 there would read for _ in range(300): to evolve for 300 generations (which roughly seems to be a good point of diminishing returns), but this causes the GPU memory to grow every generation, by maybe 1GB per generation (!), so for example if your training uses 9GB on a 2080Ti then you will get a CUDA out of memory error after only a few generations. Hence the bash while loop workaround.
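The bash while loop works around the leak because each generation runs in a fresh process, so all GPU memory is released when it exits. A Python equivalent might look like the sketch below. The flags mirror the command quoted earlier in this thread; `build_cmd`, `run_generations` and the injectable `runner` argument are hypothetical helpers, and the example only prints the commands instead of launching training.

```python
import subprocess
import sys

def build_cmd(epochs=27, device='4'):
    """Command line for one generation, mirroring the flags quoted in this thread."""
    return [sys.executable, 'train.py', '--weights', '', '--prebias',
            '--img-size', '512', '--batch-size', '16', '--accumulate', '4',
            '--evolve', '--epochs', str(epochs), '--device', device]

def run_generations(n, runner=subprocess.run):
    """Run n generations, each in its own process so memory is freed between them."""
    for _ in range(n):
        runner(build_cmd(), check=True)

if __name__ == '__main__':
    # Dry run: print the commands rather than starting real training runs.
    run_generations(2, runner=lambda cmd, check: print(' '.join(cmd)))
```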

To reproduce quickly, you could use a full size yolov3-spp.cfg on a tiny dataset like this:

python3 train.py --cfg yolov3-spp.cfg --weights '' --epochs 10 --data coco_64img.data --evolve

@rabdulatipoff we updated the evolution results plotting about a month ago to fix a bug in the plot labels. You might want to git pull and try plotting again.

@glenn-jocher Hey, I didn't get CUDA oom error with that command. I have tried some other things, but it worked without any problem. I have used the latest stable pytorch in my environment. If you are still getting that error, I could suggest a few things.

@okanlv oh! Maybe a PyTorch bug has been fixed in the latest releases. Thanks for looking, I'll try running it over here.

Hi, thanks for your work. I see that the result of your hyp evolution looks like this.

For example, the value of lr0 is 0.00146 in your image. Is this the optimal value according to plot_evolution_results(hyp)?

@DeepLearning723 yes, blue point on x axis.

@glenn-jocher I trained my model to detect students in a classroom using a top-view camera, and it works fine. But when I changed the camera position, detection was no longer as good as before. I am using YOLOv3-tiny.

This is the result for camera position while training

This is the result with changed in camera position

Please suggest how I can improve detection for the changed camera position.

I trained my YOLOv3-tiny model for 500,000 steps. I also checked at 2k and 3k steps, but it didn't work.

@tanujkamde you should include training images of any position the camera may have during inference else you are overfitting a certain camera angle and you will always get bad results like you did.

@glenn-jocher , do you know if the memory leak is fixed for evolve ?

@okanlv , what was your torch version when you didn't get the memory leak ? Also, what was the size of the dataset you were training on ?

Edit :

Started evolving and monitoring GPU vram on every training and everything seems to go well (i modified the code in train.py and didn't use bash loop):

evolution 0: 8994MiB

evolution 1: 9160MiB

evolution 2: 9146MiB

evolution 3: 9146MiB

evolution 4: 9130MiB

Thanks for reply I will try training with different camera angles also.

No, I don’t have any idea about memory leak. Will you please help me out about this.

@Ownmarc the memory leak remains a mystery. It's still present on coco2014.data training with yolov3-spp.cfg, but on smaller datasets like coco64.data I can't reproduce it. So perhaps you're good to go. In any case the two options (which are functionally identical) are in #392 (comment). If you have no memory leak you can of course keep using the python for loop in train.py. The evolution code has been updated recently to a lower mutation probability, which is more in line with common practices I believe.

For our own work we are evolving partially trained datasets. So for example if full training is 1000 epochs, we evolve based on the fitness after 100 or 200 epochs. And remember you need to ideally run several hundred generations for best results. If you supply a GCP bucket folder under --bucket you can direct multiple VMs to evolve in parallel based on the same gs://bucket/evolve.txt file, as all VMs will read from and write to the same file.

@tanujkamde your training data needs to span the entire parameter space you expect to run inference on. This means that if you expect to run inference at location A or with camera X you need to include labelled examples of those in the training data, otherwise your dataset lacks variety, regardless of the quantity.

@yoga-0125 evolve is currently only set to run for 1 generation, but you can modify this here. Ideally you want to run for several hundred generations, which should take some time. See #392 (comment) for details.

Lines 445 to 450 in 578e7f9

Hello,

How do you handle the non-determinism of the training? Just use more epoch and generation ?

Thanks

@kossolax see https://pytorch.org/docs/stable/notes/randomness.html

The repo is intended for full reproducibility, so in theory if you train twice you should end up with identical models, but in practice this rarely happens, especially across different hardware, and the different random number generators spread around numpy, python, and pytorch.
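As a hedged illustration of seeding the separate random number generators mentioned above, the helper below seeds Python's `random` and, when they are installed, numpy and torch. The name `seed_everything` is hypothetical and this is not the repo's own initialisation code; seeding alone also does not guarantee identical results across different hardware.

```python
import random

def seed_everything(seed=0):
    """Seed the RNGs that are available; numpy and torch are optional here."""
    random.seed(seed)
    try:
        import numpy as np
        np.random.seed(seed)
    except ImportError:
        pass
    try:
        import torch
        torch.manual_seed(seed)   # seeds the torch generators on all devices
    except ImportError:
        pass

seed_everything(0)
a = [random.random() for _ in range(3)]
seed_everything(0)
b = [random.random() for _ in range(3)]
print(a == b)
```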

if I use the --evolve flag the model doesn't save the weights. Is that intended or am I missing something?

Hi @glenn-jocher , I have a question about --evolve flag. I set

for _ in range(1):  # generations to evolve
    if os.path.exists('evolve.txt'):  # if evolve.txt exists: select best hyps and mutate
        # Select parent(s)
        x = np.loadtxt('evolve.txt', ndmin=2)
        parent = 'single'  # parent selection method: 'single' or 'weighted'

generations from 1 to 10, but still got only one point in the figure. Did I miss something?

@hackobi no, the weights are not saved. The purpose of --evolve is to train hundreds of times with many different variations of the hyperparameters. The fitness and hyperparameters are saved in evolve.txt, from best to worst. Once you've evolved long enough you can use the best hyperparameters to train a model normally, which will be saved.

@Ringhu the plots visualize the results in evolve.txt, so if you look there you should see 10 rows. If all of the rows are the same you are doing something wrong though.
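A quick way to run that check is to parse the rows and compare them. The sketch below works on an inline sample string rather than a real evolve.txt, and the helper names are made up for this example.

```python
def parse_rows(text):
    """Parse whitespace-separated numeric rows, skipping blank lines."""
    return [[float(v) for v in line.split()]
            for line in text.splitlines() if line.strip()]

def all_rows_identical(rows):
    """True if there are several rows and every one equals the first."""
    return len(rows) > 1 and all(r == rows[0] for r in rows[1:])

# Made-up sample in the same one-row-per-generation layout.
sample = "0.1 0.2 0.3\n0.1 0.2 0.3\n0.15 0.2 0.29\n"
rows = parse_rows(sample)
print(len(rows), all_rows_identical(rows))
```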

that makes sense @glenn-jocher thanks for the quick reply!

@glenn-jocher Thank you for your reply. I set epochs to 2 and it works: after every 2 epochs it generates a row. But how many epochs does each generation need? Is 2 epochs enough, or does each generation need 273 or more epochs?

@Ringhu there are no hard rules here. For smaller datasets (and in an ideal world) you might evolve using the full number of epochs.

For larger datasets like COCO you might evolve based on a shorter number of epochs to save time, though beware that the best hyps for short term results will not correlate 100% with the best long term hyps.

In practice for COCO we use 10% of full training to evolve, 27 epochs.

@glenn-jocher 27 epochs (10% of full training) * 300 generations means that we will need to spend about 30 times the COCO training time? Or will the training be faster here?

Thanks

@qwe3208620 yes that is correct. Of course, you can also evolve for fewer generations and arrive at a solution with slightly reduced fitness.

A genetic algorithm by definition requires multiple evaluations of the cost function.
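The cost estimate in this exchange can be written out as a short calculation. The 273-epoch figure for one full training run is taken from the question a few comments earlier and is an assumption here; the function name is illustrative.

```python
def evolution_cost(epochs_per_generation, generations, full_training_epochs):
    """Total evolution cost in epochs, and as a multiple of one full training run."""
    total_epochs = epochs_per_generation * generations
    return total_epochs, total_epochs / full_training_epochs

total, multiple = evolution_cost(27, 300, 273)
print(total, round(multiple, 1))
```

With these numbers the evolution costs 8100 epochs, a little under 30 full training runs.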

Hello, I don't understand how the hyperparameter evolution mechanism here is derived from a genetic algorithm. Could you please explain? In addition, my dataset only has 1128 pedestrians. Is it reasonable to set the number of iterations to 10 for hyperparameter evolution?

@logic03 you're probably going to want a larger dataset. There's not much point evolving hyperparameters to a tiny dataset.

But I only have one category, pedestrians. At the beginning I used the INRIA pedestrian dataset (only 614 images in the training set), and the mAP reached 0.9. However, when I ran detection on other pedestrian videos there were many misses, which I thought was because the dataset was too small and did not generalize well enough. Later I used the Hong Kong Chinese pedestrian dataset, and the mAP was only 0.8, but it worked well on my own pedestrian video. So I tried putting the two datasets together, and the mAP was 0.85, which was not as good as the second case when detecting on the video. So I wonder if it has something to do with the hyperparameters, because in my earlier training and testing the hyperparameters were always the same default initial values. Have you ever encountered this situation?

@logic03 well, for generalizability, the larger and more varied the dataset the better. On the plus side, if your dataset is small, training and evolving will be faster.

So approximately how many generations should I evolve for when I use the hyperparameter evolution mechanism?

May I ask if this hyperparametric evolution mechanism is based on the strategy proposed in this paper?

《Population Based Training of Neural Networks》

Max Jaderberg, Valentin Dalibard, Simon Osindero, Wojciech M. Czarnecki, Jeff Donahue, Ali Razavi, Oriol Vinyals, Tim Green, Iain Dunning, Karen Simonyan, Chrisantha Fernando, Koray Kavukcuoglu

(Submitted on 27 Nov 2017 (v1), last revised 28 Nov 2017 (this version, v2))

@logic03 it's a simple mutation-based genetic algorithm:

https://en.wikipedia.org/wiki/Genetic_algorithm

300 generations is a rough number to try to run, though of course if you see no changes after a certain point it's likely settled into a minimum.

Hi,

I wanted to evolve the hyperparameters after getting rid of hsv_* in the hyp dictionary (they don't make sense in my non-RGB data). I was looking into train.py and found this line:

hyp[k] = x[i + 7] * v[i] # mutate

Is this 7 a magic number so hyp and x elements do match?

EDIT: Nevermind, I'm guessing now it is because x is a line read from evolve.txt and its first 6 values correspond to some extra metainfo other than hyperparameters.

@aclapes yes that's correct! There are 7 values (3 losses and 4 metrics) followed by the hyperparameter vector in each row of evolve.txt.

PR's are welcome, thank you!
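The row layout described here (7 result values followed by the hyperparameter vector) and the quoted line hyp[k] = x[i + 7] * v[i] can be illustrated as follows. The shortened key list, the sample row and the helper name `mutate_from_row` are invented for the example.

```python
N_RESULTS = 7  # 3 losses + 4 metrics stored ahead of the hyperparameters

def mutate_from_row(row, hyp_keys, gains):
    """Rebuild a hyp dict from one evolve.txt row, scaling each value by its gain."""
    return {k: row[i + N_RESULTS] * gains[i] for i, k in enumerate(hyp_keys)}

keys = ['giou', 'cls', 'obj']   # shortened key list, for illustration only
row = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 1.582, 27.76, 21.35]
print(mutate_from_row(row, keys, gains=[1.0, 1.0, 1.0]))
```

Since values are matched to keys purely by position, an evolve.txt written before keys such as hsv_* were removed would presumably no longer line up with the shortened hyp dictionary.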

Hello,

I want to evolve my hyp. In

python3 train.py --data data/coco.data --weights '' --img-size 320 --epochs 1 --batch-size 64 --accumulate 1 --evolve

what value of --epochs should I set?

And how can I use the xy and wh losses?

@joey1616 the more --epochs the more your results will correlate to full training results, but of course the longer everything will take also. There is no right answer, but we've been using about 10% of full training as a good proxy for full training results.

If you look at most training results you will see a corner in the mAP. Before this corner the results can be pretty uncertain and don't mean quite as much, but in general once mAP turns this corner it's a fairly straight slope for the rest of training, which means your results there are a very good indicator of your final results. For the coco trainings below this happens around 50-60 epochs.

@joey1616 oh and individual xywh losses are deprecated. GIoU is a single loss that regresses the bounding boxes.

Thank you very much. My cls loss is very small: it is about 2.19e-07 in evolve when mAP@0.5 = 0.175. Can I use this cls loss in my training?

@joey1616 I don't understand your question. cls loss should not normally be that small.

Sorry, I mean that my cls loss gain is about 2.19e-07, and it was generated by evolution. It's so small that I don't know whether this parameter should be used.

If it produces a high mAP then use it, if not don't.

@ChristopherSTAN blue dot is your best result. It will be the first row in evolve.txt.

Hi, I know this question is repetitive. But I need some assistance. How do these parameters in darknet framework map with ultralytics hyperparameters? From the above, this is the information I gathered. Please do correct me. I need help with the parameters indicated as "?" Thank you so much!

darknet: ultralytics

batch: batch-size(input_param)

subdivisions: ?

width: img-size(input_param)

height: img-size(input_param)

channels: ?

momentum: momentum

decay: weight_decay

angle: degrees

saturation: hsv_s

exposure: ?

hue: hsv_h

learning_rate: lr_0

burn_in: (use lr_1)

max_batches: ?

policy: ?

steps: ?

scales: scale

Hello, which 'evolve.txt' file should we use to get higher mAP? can we create our own file?

@taylan24 evolve.txt files are created during hyperparameter evolution, they store the results of each generation.

Hello, are the values of the best result from the same combination?

@ankitahumne evolution always starts from a beginning state. You should ensure your beginning state shows acceptable performance before starting the process. In other words, garbage in, garbage out.

@glenn-jocher Thanks, that would save a lot of time. But is trial and error the only way to arrive at decent hyperparameters? Also, my precision and recall are always zero. That doesn't seem normal. Any hints to improve that?

@ankitahumne these are two separate topics. Before doing any evolution you need to train normally with default settings and make sure you see at least a minimum level of performance. If not you have a problem.

@glenn-jocher I did try with the default settings and that was pretty bad, so I am using --evolve to tune the hyperparameters. The results are slightly better in terms of P,R,mAP and F1. Looks like that's the way to proceed then - keep tuning hyperparameters. I tried a mix of changing hyp, multiscale, conf-thres, iou-thresh .

I would like to ask you about the purpose of this topic.

First of all, the default hyperparameters are set inside the train.py file.

If we want to tune the hyperparameters efficiently for our custom dataset, do we need to specify the --evolve option? And are the hyperparameters then adjusted automatically during training? Correct me if I am wrong. Thank you so much.

@leviethung2103 hyps are static while training. --evolve will evolve them over many trainings.

This is the result on training on a custom data with evolve. The values I get at the end of evolution are the same as the start. Is this normal? Doesn't look so to me :/ @glenn-jocher

This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.

@glenn-jocher Two video cards 16g, epochs set to 300, evolve iteration to 200, the result exceeds the GPU memory, how to solve this?

@glenn-jocher epochs set to 30, evolve iteration to 200, The following error:

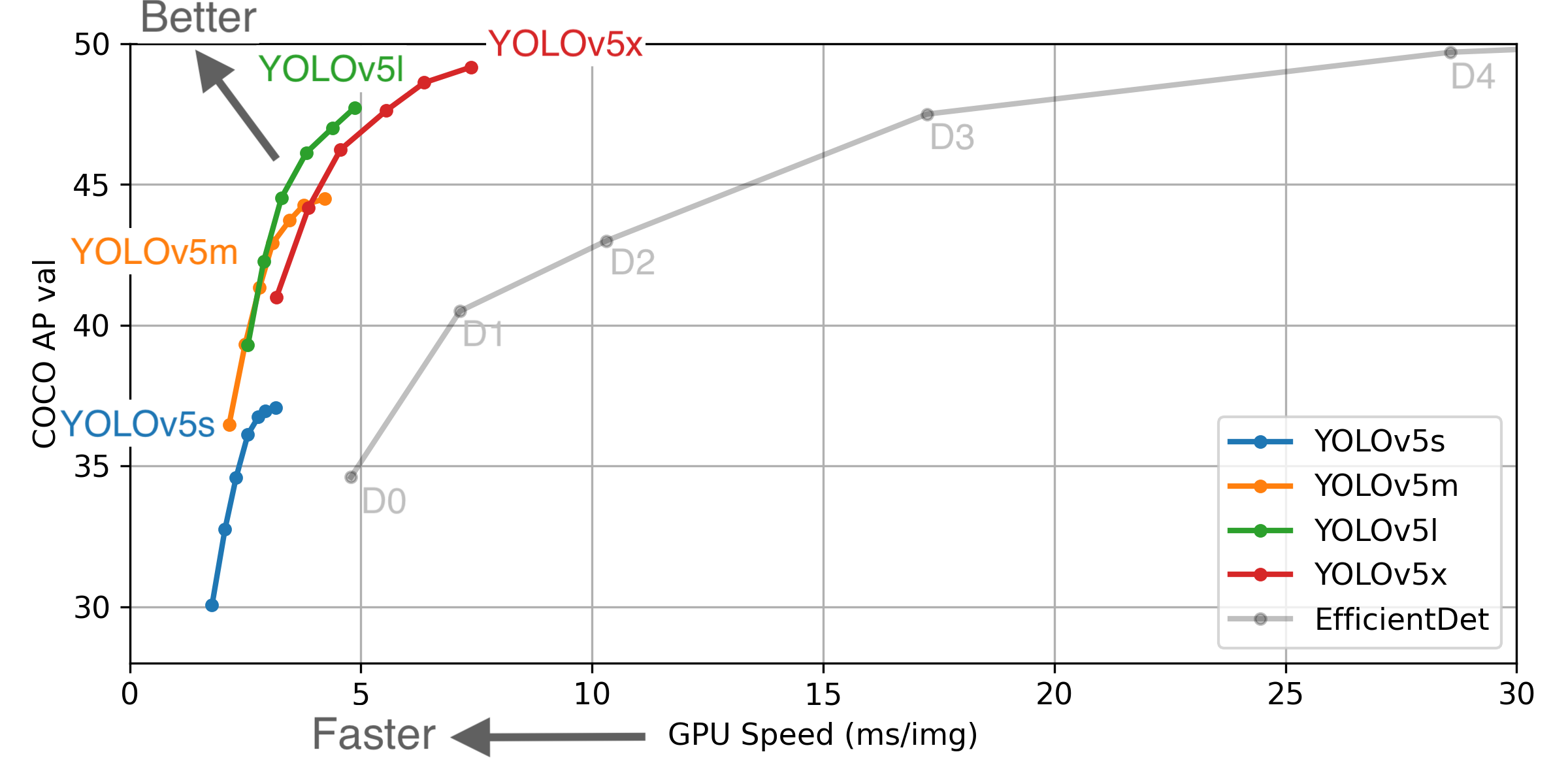

Ultralytics has open-sourced YOLOv5 at https://github.com/ultralytics/yolov5, featuring faster, lighter and more accurate object detection. YOLOv5 is recommended for all new projects.

** GPU Speed measures end-to-end time per image averaged over 5000 COCO val2017 images using a V100 GPU with batch size 32, and includes image preprocessing, PyTorch FP16 inference, postprocessing and NMS. EfficientDet data from [google/automl](https://github.com/google/automl) at batch size 8.

- August 13, 2020: v3.0 release: nn.Hardswish() activations, data autodownload, native AMP.

- July 23, 2020: v2.0 release: improved model definition, training and mAP.

- June 22, 2020: PANet updates: new heads, reduced parameters, improved speed and mAP 364fcfd.

- June 19, 2020: FP16 as new default for smaller checkpoints and faster inference d4c6674.

- June 9, 2020: CSP updates: improved speed, size, and accuracy (credit to @WongKinYiu for CSP).

- May 27, 2020: Public release. YOLOv5 models are SOTA among all known YOLO implementations.

- April 1, 2020: Start development of future compound-scaled YOLOv3/YOLOv4-based PyTorch models.

Pretrained Checkpoints

| Model | APval | APtest | AP50 | SpeedGPU | FPSGPU | params | FLOPS |
|---|---|---|---|---|---|---|---|
| YOLOv5s | 37.0 | 37.0 | 56.2 | 2.4ms | 416 | 7.5M | 13.2B |
| YOLOv5m | 44.3 | 44.3 | 63.2 | 3.4ms | 294 | 21.8M | 39.4B |
| YOLOv5l | 47.7 | 47.7 | 66.5 | 4.4ms | 227 | 47.8M | 88.1B |
| YOLOv5x | 49.2 | 49.2 | 67.7 | 6.9ms | 145 | 89.0M | 166.4B |
| YOLOv5x + TTA | 50.8 | 50.8 | 68.9 | 25.5ms | 39 | 89.0M | 354.3B |
| YOLOv3-SPP | 45.6 | 45.5 | 65.2 | 4.5ms | 222 | 63.0M | 118.0B |

** APtest denotes COCO test-dev2017 server results, all other AP results in the table denote val2017 accuracy.

** All AP numbers are for single-model single-scale without ensemble or test-time augmentation. Reproduce by python test.py --data coco.yaml --img 640 --conf 0.001

** SpeedGPU measures end-to-end time per image averaged over 5000 COCO val2017 images using a GCP n1-standard-16 instance with one V100 GPU, and includes image preprocessing, PyTorch FP16 image inference at --batch-size 32 --img-size 640, postprocessing and NMS. Average NMS time included in this chart is 1-2ms/img. Reproduce by python test.py --data coco.yaml --img 640 --conf 0.1

** All checkpoints are trained to 300 epochs with default settings and hyperparameters (no autoaugmentation).

** Test Time Augmentation (TTA) runs at 3 image sizes. Reproduce by python test.py --data coco.yaml --img 832 --augment

For more information and to get started with YOLOv5 please visit https://github.com/ultralytics/yolov5. Thank you!

@glenn-jocher How to use the parameters of evolve.txt in training?

@hande6688 see the yolov5 tutorials. A new hyp evolution yaml is created that you can point to when training yolov5: python train.py --hyp hyp.evolve.yaml

@glenn-jocher why does my evolve result look like the one from user nayyersaahil28, even though I modified the code as in fig. 1-2?

@nanhui69 your plots look like this because you have only evolved 1 generation. For the best performing and most recent evolution I would recommend the YOLOv5 hyperparameter evolution tutorial, where the default code evolves 300 generations:

YOLOv5 Tutorials

- Train Custom Data 🚀 RECOMMENDED

- Weights & Biases Logging 🌟 NEW

- Multi-GPU Training

- PyTorch Hub ⭐ NEW

- ONNX and TorchScript Export

- Test-Time Augmentation (TTA)

- Model Ensembling

- Model Pruning/Sparsity

- Hyperparameter Evolution < ---------------------------

- Transfer Learning with Frozen Layers ⭐ NEW

- TensorRT Deployment

@glenn-jocher but I have changed the code to "for _ in range(265): # generations to evolve". What's the problem?

@glenn-jocher python3 train.py --epochs 30 --cache-images --evolve & ? Do I need to specify my custom weights rather than the pretrained weights?

@nanhui69 you can evolve any base scenario, including starting from any pretrained weights. There are no constraints.

I only want to solve the problem shown in the evolve PNG above, where there is only one point. What should I do?

Oh, I will check it again.

When I follow the YOLOv5 steps, evolve.txt has only one line. Why is that?

@nanhui69 YOLOv5 evolution will create an evolve.txt file with 300 lines, one for each generation.

So what am I doing wrong? Also, when evolve.txt already exists and I run with --evolve, this error appears:

hyp[k] = x[i + 7] * v[i] # mutate

IndexError: index 18 is out of bounds for axis 0 with size 18
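Reading this error against the row layout explained earlier in the thread (7 result values, then the hyperparameters), a row of 18 values leaves room for 11 hyperparameters, so a hyp dictionary with 12 or more keys would run past the end. This is only one possible explanation, for example an evolve.txt written by a different code version. The helper `keys_without_column` and the placeholder key names below are hypothetical.

```python
N_RESULTS = 7  # leading result columns, per the explanation earlier in this thread

def keys_without_column(row_len, hyp_keys):
    """Return the hyp keys that have no matching column in a row of length row_len."""
    available = row_len - N_RESULTS
    return list(hyp_keys)[available:]

# A row of 18 values holds 7 results + 11 hyperparameters, so a 12th key
# would read index 18 and raise the IndexError quoted above.
hyp_keys = [f'h{i}' for i in range(12)]   # placeholder names for a 12-key hyp dict
print(keys_without_column(18, hyp_keys))
```

If that is the cause, starting again with a fresh evolve.txt produced by the same code version would be the obvious thing to try.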