Minimize copies

frankmcsherry opened this issue · comments

I've been trying to do a bit of review of the copies that go on in the timely and timely communication path. I think several of them can be removed, but first I thought I would try and explain what each of them are.

Let's go in order from a message received in timely communication, in BinaryReceiver::recv_loop().

-

There is almost certainly a copy in

let read = self.reader.read(&mut self.buffer[self.length..]).unwrap_or(0);

where we collect whatever data the kernel has for us. In the absence of some zero-copy interface to the networking, I think this is probably going to stick around. Though, we may have to think a bit harder about where we copy into.

-

Just a bit below, we have

target.send(slice[..h_len].to_vec()).unwrap();

This is where we peel out the bytes destined for a specific (worker, dataflow, channel) tuple and send the bytes along to that destination. Because this is a different thread with no lifetime relationship, we invoke

.to_vec()to get an owned allocation. -

The byte allocation arrives at communication's

binary::Puller, where it is not copied. This is a clever moment where we deserialize into theMessagetype, whosefrom_bytesmethod takes ownership of theVec<u8>buffer and invokes Abomonation'sdecodemethod to get references. -

This

Messagegets handed to operator code, and if the operator only needs a&[Record]then no copy needs to happen. However, if the operator needs a&mut Vec<Record>then theDerefMutimplementation will invoke aclone()on the&Vec<Record>, which will surely do some allocations. The byte buffer is dropped at this point. -

Operators can supply outputs either record-by-record, or as a ready-to-send batch of records. In either case, if they hit a data exchange channel they will need to be moved. This is essentially a copy, but it seems largely unavoidable if we want to put the records destined for remote workers into contiguous memory. This is where the "shuffle" actually needs to happen, and it seems legit.

-

If serialization is required, then

<Message as Serialize>::into_bytes()is invoked, and it will do an allocation of aVec<u8>and a copy into it. The only way we know how to turn generalVec<Record>types into bytes is using Abomonation'sencode, and this copies. In principle, we could "steal" the allocation of the vector itself, and only serialize subsequent owned data. -

The header (fixed sized struct) and bytes are sent to

BinarySender::send_loop(), in which we write both to aW: Writer. This happens to be aBufWritterwrapped around a network stream, which mean a copy into the buffer, and probably a copy out of the buffer when it eventually gets around to writing at the network stream in bulk (the second of which is intrinsic in theTcpStreamapi).

I think three of these are somewhat non-negotiable at the moment: i. the copy from kernel buffers when we read from the network stream (in 1.), ii. the copy as we do the data shuffle (in 5.), and the copy back into kernel buffers (in 7.).

This leaves us with four potential copies that could be superfluous.

-

This copy could be avoided using something like the

bytescrate, where one hands out multiple references to a common allocation, and the API ensures that the references are to disjoint ranges.This could also be avoided by doing smaller reads into independently owned allocations; each read pulls down the next payload and the subsequent header, which tells us how much to read for the next allocation (and could tell us a size and alignment). This has the potential risk that if there are many small methods we do many small reads, possibly doing lots of kernel crossings. In that case, it seems like copies are an unavoidable cost of moving many messages using few kernel crossings.

-

This wasn't actually a copy, but it has a number so we want to put it here.

-

This copy is self-inflicted, in that one could write operator code that doesn't even need a mutable reference to the source data. It isn't always natural to do this, but if your code insists on owned data with owned allocations then this is non-negotiable, as we don't control the Rust codegen that needs to work correctly with the data it is handed.

One candidate bit of cuteness is: if we are handed an owned

Vec<u8>, in conflict with the optimization for (2.), we could arrange that the data are laid out so that the same allocation can be used as the spine of theVec<Record>. This could still mean copying if these types have allocations behind them, and it is important that we got theVec<u8>as aVec<Record>because the deallocation logic is allowed to explode if we dealloc with a different size or alignment, but it could be possible for something like this to work. -

We decided that the shuffle move was non-optional, but I have to put it here to make markdown numbers work out.

-

When we go from

Vec<Record>toVec<u8>we have relatively few options. We could grab the spine of the vector and only serialize auxiliary data (perhaps to the same allocation, if there is enough space). This would mean no copies here for data without further owned memory, and in the absence of any further information we would have no choice I think (if eachRecordcontains a bunch ofStringfields and such).One alternative is something like the CapnProto builder patterns, where instead of allocating a Rust object for output you directly serialize the result at a byte buffer. This is possible, though I don't know how ergonomic it ends up being (that is, you could write the code by hand, but you probably wouldn't want to).

-

One of these copies seems unescapable (the kernel buffer copy), but the

BufWritercopy seems optional. It does some very good things for us, in terms of minimizing kernel crossings. This could be somewhat avoided if each worker produced a consolidatedVec<u8>to send to each remote process, rather than separate allocations for each channel, and each remote worker. This seems possible, though again awkward. The shuffle that happens means to colocate data for each worker, and we don't know how large each of these will be before sending them. We could commit to certain spacing (e.g. 1024 records for each worker) and start writing each worker at an offset of a common buffer for each process, with some inefficiency if there is skew of any sort. In any case, operator code currently produces output records one at a time, and we need to do something with each of these records.

One meta-point, which I don't really know that I understand, is that we may be able to absolve ourselves of copies that do not leave low level caches. Each copy that remains within the L1 cache could be viewed as pretty cheap, relative to all the other operations going on. So, deserialization code for small buffers might be relatively cheap, as compared to copying each frame into a new allocation (2.). I'm slightly making this up, but understanding "zero copy" and which costs it is really avoiding seems like a good thing to do along the way.

In fact, the copy in (6.) is especially pernicious; the into_bytes method just uses encode applied to what is initially a Vec::new():

fn into_bytes(&mut self, bytes: &mut Vec<u8>) {

unsafe { encode(&self.time, bytes).unwrap(); }

unsafe { encode(&self.from, bytes).unwrap(); }

unsafe { encode(&self.seq, bytes).unwrap(); }

let vec: &Vec<D> = self.data.deref();

unsafe { encode(vec, bytes).unwrap(); }

}This means it will double in size up to its appropriate allocation, rather than either re-using an allocated Vec<u8> or the new extent method in Abomonation 0.5 to pre-size the destination. This could plausibly also be a single struct that gets abomonated, rather than a bunch of calls in sequence, though that is less worrying than the re-allocations.

As part of experimenting with reducing copies, there are #133 and #135. The first is a prototype reusable bytes crate replacement (due to bytes not obviously supporting allocation reclamation, also easier to grok and for us to tweak if needed). The second is meant to look like a zero-cost network when just using one process: forcing serialization to shared buffers and just doing byte swaps; this should let us home in on communication overheads timely introduces.

#135 is already trying out an approach that elides one of the copies from (7), in that serialization now happens directly into a large shared buffer, rather into a personal Vec<u8> which is then copied again by the networking thread. We could totally add that back to see the effect, but adding it back should just be "allocate a Vec<u8>, serialize into it, copy out of it.

One question that came up in #128 is "what is the right metric to evaluate copy reduction?", which is a fine question that I don't know the answer to. I've been trying "throughput of exchange.rs" which will only get us so far. In profiling, there are clearly stand-out memory management operations (e.g. memmove shows up a lot as part of copies), and assessing these seem good. I'm personally at the point where I'm just trying to make sure I can account for each of them, and understand what is costing in the system, which is to say "not quite at the point of reducing the costs yet".

But, two things I've found helpful using the #135 mocking:

-

exchange 10000 10000 -w1runs an exchange benchmark with only one worker, which means that it doesn't bother to do per-record exchange logic. This mostly fills up buffers, serializes them, and then passes them along before eventually discarding them. It should be mostly overhead. -

pingpong 10000 10000 -w1runs a modified pingpong that inserts a non-trivial number of records, and repeatedly cycles them around the graph in a loop doing a data exchange as it goes. This could be a better "hands off" benchmark, in that we don't require user code to continually introduce records; we introduce just a few (the second arg) and then the same records spin in the system for many iterations.

But at the moment, I'm not entirely clear on the best way to measure this. But I'm learning!

Some more data, for concreteness:

I think I like the pingpong 10000 100000 sort of test. It seems to surface some interesting issues.

First up, it fundamentally has deserialization in it (the map_in_place) so there is probably no getting around some data copying without rewriting the program a bit.

But if you spin up the profiler with two workers (-w2) you get readouts like (edited for clarity)

Weight Self Weight Symbol Name

41.69 s 100.0% 0 s pingpong (27712)

41.69 s 99.9% 0 s thread_start

41.68 s 99.9% 650.00 ms std::sys_common::backtrace::__rust_begin_short_backtrace

27.89 s 66.9% 4.30s Subgraph::pull_internal_progres

13.51 s 32.4% 468.00 ms OperatorCore::pull_internal_progress

13.44 s 32.2% 13.44 s _platform_memmove$VARIANT$Haswell

13.04 s 31.2% 197.00 ms ProcessBinary::pre_work

10.11 s 24.2% 115.00 ms Buffer::give_content

9.72 s 23.3% 7.65 s Exchange::push

5.52 s 13.2% 1.14 s OperatorCore::pull_internal_progress

5.38 s 12.9% 35.00 ms mmap

5.35 s 12.8% 5.35 s __mmap

In here, Subgraph::pull_internal_progres is the dataflow doing work, which is about 2/3 of the time (work may be "useful compute" and may be partly "wasteful alloc/copies"). Also contained, the deallocations matching allocations from the next paragraph:

Also in here, ProcessBinary::pre_work which is the code that swings through incoming binary messages and carves out Vec<u8>s to put in operator buffers. At 30% of the time, this is pretty substantial in this computation (which admittedly doesn't do much other than exchange data). But, it seems like it might be a good example where slimming down the allocation associated with inbound data would register.

Actually, I put a bit more time into pingpong, and with the help of an ETHZ local determined that it isn't the best test. The partitioning pattern and incrementing means that each exchange channel moves all data from one worker to the other, rather than distributing them uniformly. Introducing uniform distribution makes things go a bit crazy (because there is no loop synchronization). Anyhow, it's still interesting, but it isn't 100% clear that elapsed times measure the right thing (profiling reveals the screw ups, for sure).

I've been poking around a bit with #135. I suspect nothing I'm about to say will be very surprising but I'll write it down in case it's useful.

I've been coming at this from the direction of tracking the number of allocations. I couldn't figure out how to instrument jemalloc, but I found that if I switched the allocator to libc's malloc, I could hook into a few different tools like ePBF and heaptrack.

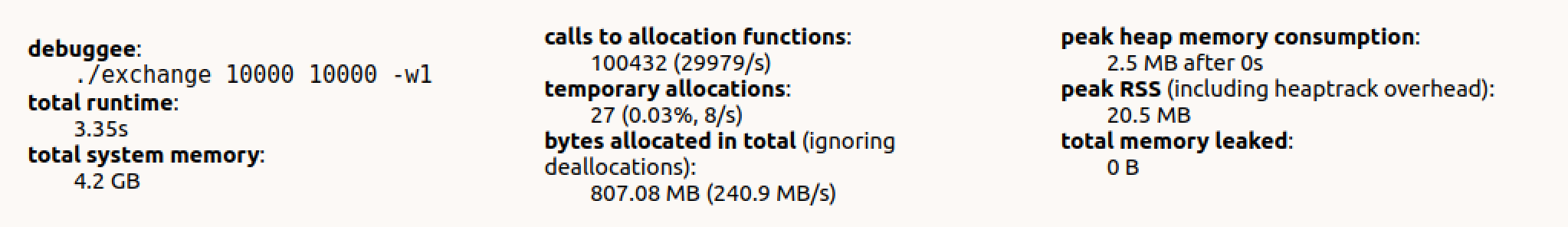

So from heaptrack, I get the following nice summary after running exchange 10000 10000 -w1

total runtime: 1.43s.

bytes allocated in total (ignoring deallocations): 807.08MB (566.37MB/s)

calls to allocation functions: 100432 (70478/s)

temporary memory allocations: 27 (18/s)

peak heap memory consumption: 2.47MB

peak RSS (including heaptrack overhead): 18.24MB

total memory leaked: 0B

Heaptrack can output a nice little flamegraph svg but I can't attach that here. Instead I'll attach some verbose text output. The takeaway is that 100,000 of the 100432 allocations occur in ProcessBinary::pre_work, as one would expect.

So not very revelatory, but with this method we can answer the question "how many allocations did this change eliminate". That's not the whole performance story but might be a useful metric.

This does sound like a useful metric, and it does seem to point the finger roughly where we might expect! As you say, maybe not surprising, but very useful nonetheless. I'm surprised it is quite such a large fraction, actually (looking at time spent in the allocator makes it look a bit more balanced, probably because the other allocations are larger; but thinning down the number is a great thing to do).

As a next step, do you want to take a swing at swapping in bytes in place of those allocations? This is for sure where it might get a bit dodgy (in that the covers come off on some Abomonation stuff), and there are perhaps unsolved issues to list and work maybe work out (the main one I can think of: if you give shared access to byte arrays, what happens if user code doesn't drop or copy out of them?).

I'm about to (Saturday) vanish for a week of vacation, if that informs your planning. But I'm up for either i. helping to swap bytes in, ii. swapping bytes in myself and explaining what happened, iii. learning more about memory profiling (no clue, really).

But, very cool! :D

I've apparently broken things in the dataplane_mockup branch; some tests are not terminating, which .. I can believe I did somehow. Now investigating.

Hrm. Can't repro locally, but travis had a 10 min timeout on one test. If you see anything similar drop me a line, but I'll assume it is travis for the moment.

I'm going to give swapping bytes in myself and see how it goes. I have limited time so I probably won't have a PR ready until after your vacation.

I'm not sure I'll be able to figure everything out on my own but I bet the attempt will be informative.

Hi,

I'm finally able to get back at this. I'm going to write down my thoughts just to help reorient myself and see if I understand/remember things correctly.

We know that when we run exchange 10000 10000 -w1 that causes 100k allocations at this point. Since exchange is doing 10,000 rounds we must be entering for recv in self.recvs

10,000 times and therefore the number of messages/to_vec() calls every time we enter that loop must be 10 (...is this a function of message size?).

So we know the number of times we call to_vec() is going to be a function of the counts of workers * messages and the total memory allocated is workers * messages * message size. But I'm not sure how beneficial switching to bytes here could be -- I don't have a good mental model of the expense of slice.to_vec() and how that differs from copying a slice into a prexisting buffer. Probably more predictable memory access patterns, right?

Another option was #2 on your initial list, here, where we read bytes from the network and route them to workers. The work here would involve switching BinaryReceiver.buffer to abytes object that we write into and recover every time through recv_loop, instead of calling slice[..h_len].to_vec().

Hello!

Coincidentally, I just started hacking on a version of the bytes changes to see what would be involved. Give me a few hours and I should have something I can push that passes tests.

The throughput numbers for exchange seemed pretty close to timely master, with this version costing an extra memcpy (serialization) and timely master having more allocations. I think the theory is that i. allocations are eventually more painful with multiple cores, NUMA regions, etc. vs serialization, and ii. for inter-process communication the serialization is necessary anyhow.

Some tests are failing at the moment, but I'll see about fixing them up and push at the dataflow_mockup branch with an explanation of what is going on.

I dropped some comments in #135. The current pushed version there passes tests, and has somewhat better performance than the branch had before (at least for exchange.rs). It should do substantially fewer allocations (a constant number, ideally).

Going to close this for now. #135 has landed and substantially reduces copies. There is certainly more work to do in the future, but enough has changed that a new analysis should probably start with fresh eyes!

Thanks very much for the help, @JosephWagner!