This repository contains the code and trained models of:

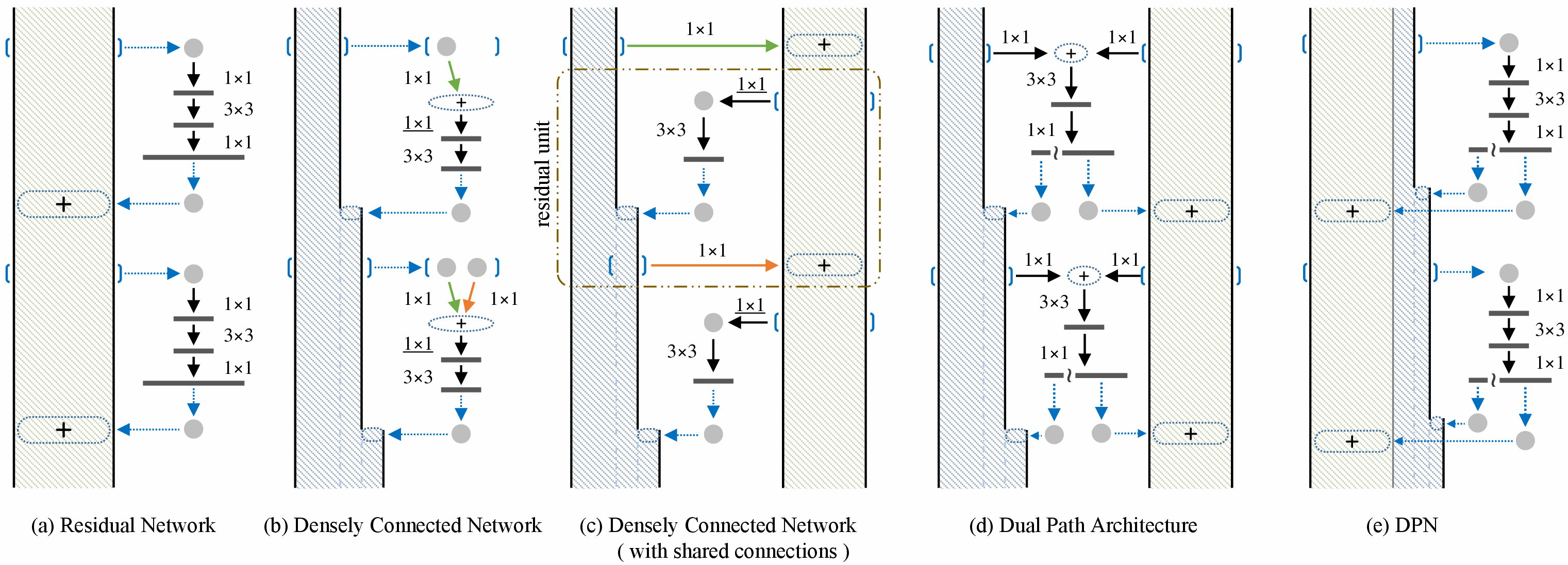

Yunpeng Chen, Jianan Li, Huaxin Xiao, Xiaojie Jin, Shuicheng Yan, Jiashi Feng. "Dual Path Networks" (arxiv).

DPNs are implemented in MXNet @92053bd.

| Method | Settings |
|---|---|
| Random Mirror | True |
| Random Crop | 8% - 100% |
| Aspect Ratio | 3/4 - 4/3 |
| Random HSL | [20,40,50] |

Note: We did not use PCA Lighting or any other advanced augmentation methods. Input images are resized by bicubic interpolation.
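The crop settings in the table can be read as constraints on a randomly sampled crop box. The sketch below shows one common way to sample such a box: an area fraction between 8% and 100% of the image and an aspect ratio between 3/4 and 4/3. The function name `sample_crop`, the retry count, and the whole-image fallback are assumptions made for illustration; the exact sampling procedure used for training is not stated here.

```python
import math
import random


def sample_crop(width, height, area_range=(0.08, 1.0),
                ratio_range=(3 / 4, 4 / 3), max_tries=10, rng=random):
    """Sample a crop box (x0, y0, w, h) inside a width x height image.

    Hypothetical helper: the retry loop and the fallback are assumptions,
    not taken from the training code.
    """
    for _ in range(max_tries):
        # Target area as a fraction of the full image area.
        area = rng.uniform(*area_range) * width * height
        # Target aspect ratio (crop width / crop height).
        ratio = rng.uniform(*ratio_range)
        w = int(round(math.sqrt(area * ratio)))
        h = int(round(math.sqrt(area / ratio)))
        # Accept the box only if it fits inside the image.
        if 0 < w <= width and 0 < h <= height:
            x0 = rng.randint(0, width - w)
            y0 = rng.randint(0, height - h)
            return x0, y0, w, h
    # Fallback after repeated failures: use the whole image.
    return 0, 0, width, height
```

The sampled region would then be resized to the training resolution (with bicubic interpolation, per the note above) and mirrored at random.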

The mean RGB = [ 124, 117, 104 ] is subtracted from the augmented input images, and the result is then multiplied by 0.0167.
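As a minimal sketch of this normalization, assuming the image is an `H x W x 3` array in RGB channel order (the function name `normalize` and the NumPy-based layout are illustrative assumptions, not the repository's actual data pipeline):

```python
import numpy as np

# Constants quoted from the text above.
MEAN_RGB = np.array([124.0, 117.0, 104.0], dtype=np.float32)
SCALE = 0.0167


def normalize(image_rgb):
    """Subtract the per-channel mean, then scale.

    image_rgb: array of shape (H, W, 3), RGB order (an assumption).
    """
    x = np.asarray(image_rgb, dtype=np.float32)
    # Broadcasting applies the mean to the last (channel) axis.
    return (x - MEAN_RGB) * SCALE
```

A pixel equal to the mean maps to zero, and a channel value 20 above its mean maps to roughly 0.334.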

Mean-Max pooling is a new technique for improving the accuracy of a trained CNN whose input size is larger than training crops. The idea is to first convert a trained CNN into a convolutional network and then insert a mean-max pooling layer, i.e. 0.5 * (global average pooling + global max pooling), just before the final softmax layer, see score.py. Mean-Max Pooling is very effective and does not require any training/fine-tuning process.

Based on our observations, Mean-Max Pooling consistently boosts the testing accuracy. We adopted this testing strategy in both LSVRC16 and LSVRC17. Please let me know if any other researchers have proposed exactly the same technique.
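The pooling formula above can be illustrated with a small NumPy sketch. It assumes the convolutionalized network produces a class score map of shape `(N, C, H, W)`; the function names and the array layout are assumptions for illustration, and this is not the code in score.py.

```python
import numpy as np


def mean_max_pool(score_map):
    """Return 0.5 * (global average pooling + global max pooling).

    score_map: array of shape (N, C, H, W), an assumed layout.
    Returns an array of shape (N, C).
    """
    avg = score_map.mean(axis=(2, 3))  # global average pooling
    mx = score_map.max(axis=(2, 3))    # global max pooling
    return 0.5 * (avg + mx)


def softmax(logits):
    """Numerically stable softmax over the class axis."""
    shifted = logits - logits.max(axis=1, keepdims=True)
    e = np.exp(shifted)
    return e / e.sum(axis=1, keepdims=True)


# Example: a 2x2 score map per class, as might arise from a larger test input.
scores = np.arange(2 * 3 * 2 * 2, dtype=np.float64).reshape(2, 3, 2, 2)
probs = softmax(mean_max_pool(scores))
```

When the score map is 1x1 (test input equal to the training crop size), the average and the max coincide, so the layer leaves the scores unchanged.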

Single Model, Single Crop Validation Error:

| Model | Size | GFLOPs | 224x224 Top 1 | 224x224 Top 5 | 320x320 Top 1 | 320x320 Top 5 | 320x320 (with mean-max pooling) Top 1 | 320x320 (with mean-max pooling) Top 5 |
|---|---|---|---|---|---|---|---|---|
| DPN-92 | 145 MB | 6.5 | 20.73 | 5.37 | 19.34 | 4.66 | 19.04 | 4.53 |
| DPN-98 | 236 MB | 11.7 | 20.15 | 5.15 | 18.94 | 4.44 | 18.72 | 4.40 |
| DPN-131 | 304 MB | 16.0 | 19.93 | 5.12 | 18.62 | 4.23 | 18.55 | 4.16 |
| DPN-107* | 333 MB | 18.3 | 19.75 | 4.94 | 18.34 | 4.19 | 18.15 | 4.03 |

*DPN-107 is trained with additional training data: Pretrained on ImageNet-5k and then fine-tuned on ImageNet-1k.

The training speed is tested based on MXNet @92053bd.

Multiple Nodes (Without specific code optimization):

| Model | CUDA / cuDNN | #Node | GPU Card (per node) | Batch Size (per GPU) | kvstore | GPU Mem (per GPU) | Training Speed* (per node) |
|---|---|---|---|---|---|---|---|
| DPN-92 | 8.0 / 5.1 | 10 | 4 x K80 (Tesla) | 32 | dist_sync | 8017 MiB | 133 img/sec |
| DPN-98 | 8.0 / 5.1 | 10 | 4 x K80 (Tesla) | 32 | dist_sync | 11128 MiB | 85 img/sec |
| DPN-131 | 8.0 / 5.1 | 10 | 4 x K80 (Tesla) | 24 | dist_sync | 11448 MiB | 60 img/sec |
| DPN-107 | 8.0 / 5.1 | 10 | 4 x K80 (Tesla) | 24 | dist_sync | 12086 MiB | 55 img/sec |

*This is the actual training speed, which includes data augmentation, forward, backward, parameter update, network communication, etc. MXNet is awesome; we observed a linear speedup, as has been shown in link.
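Because the speeds are reported per node, a rough aggregate figure follows by multiplying by the node count, which is reasonable only under the linear speedup noted above. The sketch below does that arithmetic; the helper names are hypothetical, and the ImageNet-1k training set size of 1,281,167 images is an assumption brought in from outside this document.

```python
# Assumption: ImageNet-1k training set size, not stated in this document.
IMAGENET_1K_TRAIN_IMAGES = 1_281_167


def cluster_throughput(per_node_img_per_sec, num_nodes):
    """Aggregate images/sec, assuming perfectly linear scaling across nodes."""
    return per_node_img_per_sec * num_nodes


def epoch_hours(num_images, img_per_sec):
    """Estimated wall-clock hours for one pass over num_images."""
    return num_images / img_per_sec / 3600.0


# DPN-92 row from the table: 133 img/sec per node on 10 nodes.
dpn92_total = cluster_throughput(133, 10)
dpn92_epoch = epoch_hours(IMAGENET_1K_TRAIN_IMAGES, dpn92_total)
print(f"DPN-92: {dpn92_total} img/sec, about {dpn92_epoch:.2f} h per epoch")
```

These numbers are estimates derived from the table, not measured end-to-end training times.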

| Model | Size | Dataset | MXNet Model |
|---|---|---|---|
| DPN-92 | 145 MB | ImageNet-1k | GoogleDrive |
| DPN-98 | 236 MB | ImageNet-1k | GoogleDrive |
| DPN-131 | 304 MB | ImageNet-1k | GoogleDrive |
| DPN-107* | 333 MB | ImageNet-1k | GoogleDrive |

*DPN-107 is trained with additional training data: Pretrained on ImageNet-5k and then fine-tuned on ImageNet-1k.

ImageNet-1k Training/Validation List:

- Download link: GoogleDrive

ImageNet-1k category name mapping table:

- Download link: GoogleDrive

ImageNet-5k Raw Images:

- The ImageNet-5k is a subset of ImageNet10K provided by this paper.

- Please download the ImageNet10K and then extract the ImageNet-5k by the list below.

ImageNet-5k Training/Validation List:

- It contains about 5k leaf categories from ImageNet10K. There is no category overlap between our provided ImageNet-5k and the official ImageNet-1k.

- Download link: GoogleDrive

If you use DPN in your research, please cite the paper:

@article{Chen2017,
  title={Dual Path Networks},
  author={Yunpeng Chen and Jianan Li and Huaxin Xiao and Xiaojie Jin and Shuicheng Yan and Jiashi Feng},
  journal={arXiv preprint arXiv:1707.01629},
  year={2017}
}