About PatchNCE loss

YangGangZhiQi opened this issue

Hi, thanks for your great work!

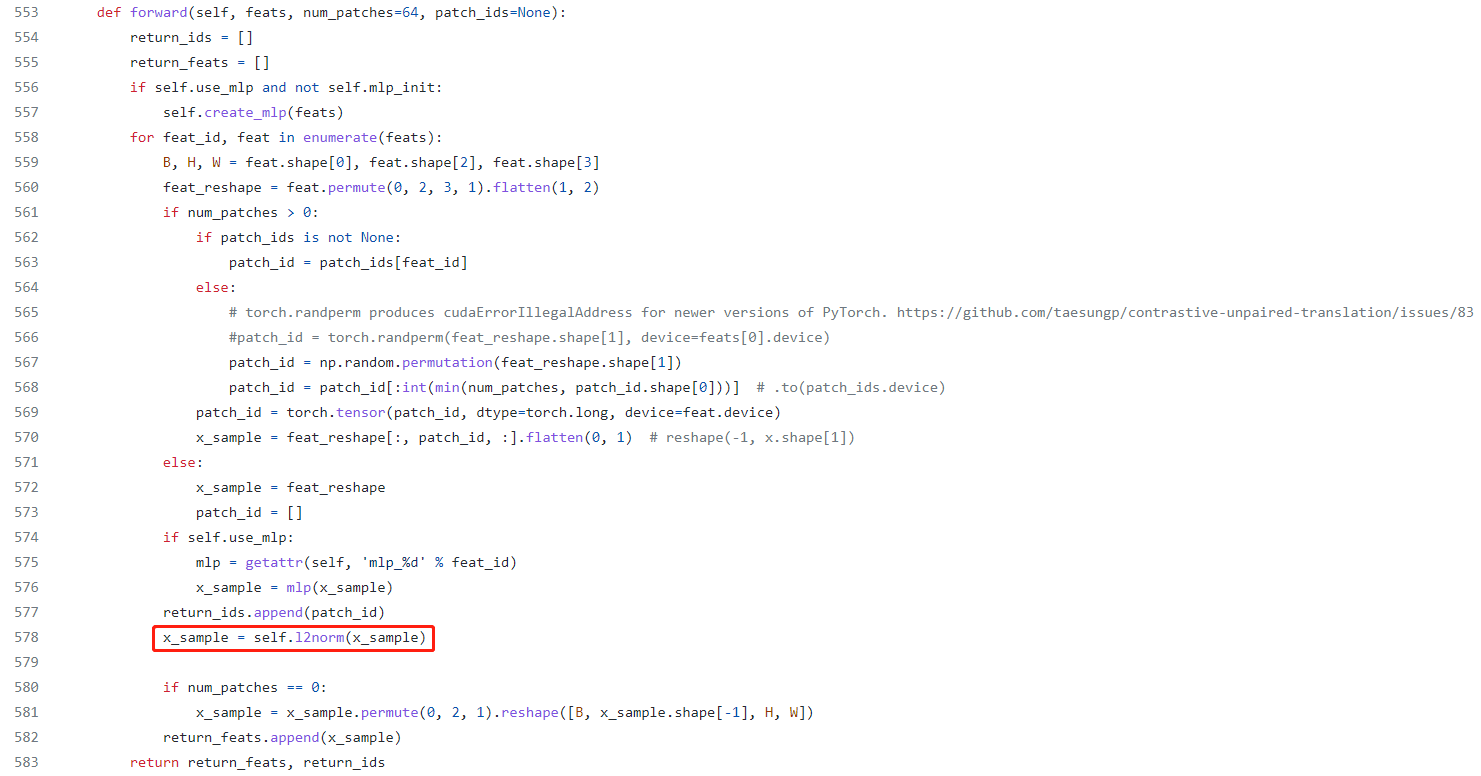

I have some questions about the PatchNCE loss. In your source code, it seems that we want the output (feat_k) to be as close as possible to the positive sample (feat_q) and as far as possible from the negative samples (feat_qT). To achieve this, we want feat_k * feat_q to be larger when optimizing the cross-entropy loss.
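For reference, here is a minimal, framework-free sketch of the kind of contrastive cross-entropy described above. It is not the repository's implementation. The names `patch_nce_loss`, `anchor`, `positive`, `negatives` and the temperature `tau=0.07` are hypothetical choices for illustration. The positive dot product goes in slot 0, the negative dot products follow, and the loss is the cross-entropy with target index 0.

```python
import math


def l2_normalize(v):
    """Scale a vector to unit length (assumes v is not all zeros)."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def dot(a, b):
    """sum(a * b): the dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def patch_nce_loss(anchor, positive, negatives, tau=0.07):
    """Hypothetical sketch: cross-entropy over [positive, *negatives] logits, target 0."""
    a = l2_normalize(anchor)
    # Logit 0 is the positive pair; the rest are the negative pairs.
    logits = [dot(a, l2_normalize(positive)) / tau]
    logits += [dot(a, l2_normalize(n)) / tau for n in negatives]
    # log-sum-exp with the max subtracted for numerical stability
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    # -log softmax(logits)[0]: small when the positive logit dominates
    return log_sum_exp - logits[0]


# Anchor aligned with its positive vs. anchor aligned with a negative
close = patch_nce_loss([1.0, 0.0], [0.9, 0.1], [[0.0, 1.0], [-1.0, 0.0]])
far = patch_nce_loss([1.0, 0.0], [0.0, 1.0], [[0.9, 0.1], [-1.0, 0.0]])
print(close < far)  # True
```

Because the softmax normalizes over all logits at once, the only way to drive this loss toward zero is to make the positive dot product large *relative to* every negative one. That is what ties "larger feat_k * feat_q" to "far from the negatives."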

My question is: why can we be sure that a larger feat_k * feat_q means feat_k is close to feat_q and far away from feat_qT?

Going a bit further: is there an alternative to the * operator? I would appreciate it if someone could help me.

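sum(feat_k * feat_q) is the dot product. When each vector has magnitude one (is L2-normalized), the dot product equals the cosine of the angle between the two vectors, so it is a measure of similarity: it reaches its maximum of 1 when the vectors point in the same direction, i.e. when they are the same as each other. Because the cross-entropy is computed over the positive logit together with the logits of all the negatives, minimizing it raises the positive dot product relative to the negative ones, which pulls the positive pair together and pushes the negatives apart.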
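A small illustration of why the unit-magnitude condition matters, assuming plain Python lists as vectors (the helper names `dot`, `normalize` and `cosine_similarity` are hypothetical). Without normalization the raw dot product rewards a larger vector even if it points in a different direction. After normalization it becomes the cosine similarity, which is capped at 1 and reaches 1 only for identical directions.

```python
import math


def dot(a, b):
    """sum(a * b) for two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def normalize(v):
    """Divide by the L2 norm so the vector has magnitude one."""
    norm = math.sqrt(dot(v, v))
    return [x / norm for x in v]


def cosine_similarity(a, b):
    """Dot product of the normalized vectors = cosine of the angle between them."""
    return dot(normalize(a), normalize(b))


a = [1.0, 0.0]
same = [1.0, 0.0]
other = [3.0, 3.0]  # different direction, larger magnitude

print(dot(a, same))                 # 1.0
print(dot(a, other))                # 3.0  (raw dot product favors magnitude)
print(cosine_similarity(a, same))   # 1.0
print(cosine_similarity(a, other))  # ~0.7071 (cos 45 degrees)
```

So cosine similarity (dot product of normalized vectors) is the natural way to read the * operator here. Normalizing the features first is what makes "larger dot product" mean "more similar."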