Workload portability is important to manufacturing customers, as it allows them to operate solutions across different environments without the need to re-architect or rewrite large sections of code. They can move workloads between the Edge and the cloud, depending on their specific requirements. It also enables them to analyze data and make real-time decisions at the source of the data, and reduces their dependency on a central location for data processing. Apache Spark's rich ecosystem of data connectors, availability in the cloud and at the Edge (Docker & Kubernetes), and thriving open source community make it an ideal candidate for portable ETL workloads.

In this sample we'll showcase an E2E data pipeline leveraging Spark's data processing capabilities.

In the Cloud version, we provision all infrastructure with Terraform.

Note: Prior to running `terraform apply`, you must ensure the wheel `./src/common_lib/dist/common_lib-*.whl` exists locally by executing `python3 -m build ./src/common_lib`.
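The wheel prerequisite can be checked before invoking Terraform. The following is a minimal sketch, assuming it is run from the repository root; the helper name `find_common_lib_wheels` is hypothetical and not part of the sample.

```python
from pathlib import Path


def find_common_lib_wheels(dist_dir="./src/common_lib/dist"):
    """Return the common_lib wheel files found in dist_dir, sorted by path."""
    # Hypothetical helper: it only mirrors the glob pattern from the note.
    return sorted(Path(dist_dir).glob("common_lib-*.whl"))


if __name__ == "__main__":
    wheels = find_common_lib_wheels()
    if wheels:
        print("Found wheel(s):", ", ".join(w.name for w in wheels))
    else:
        # Same build command as given in the note.
        print("No wheel found; run: python3 -m build ./src/common_lib")
```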

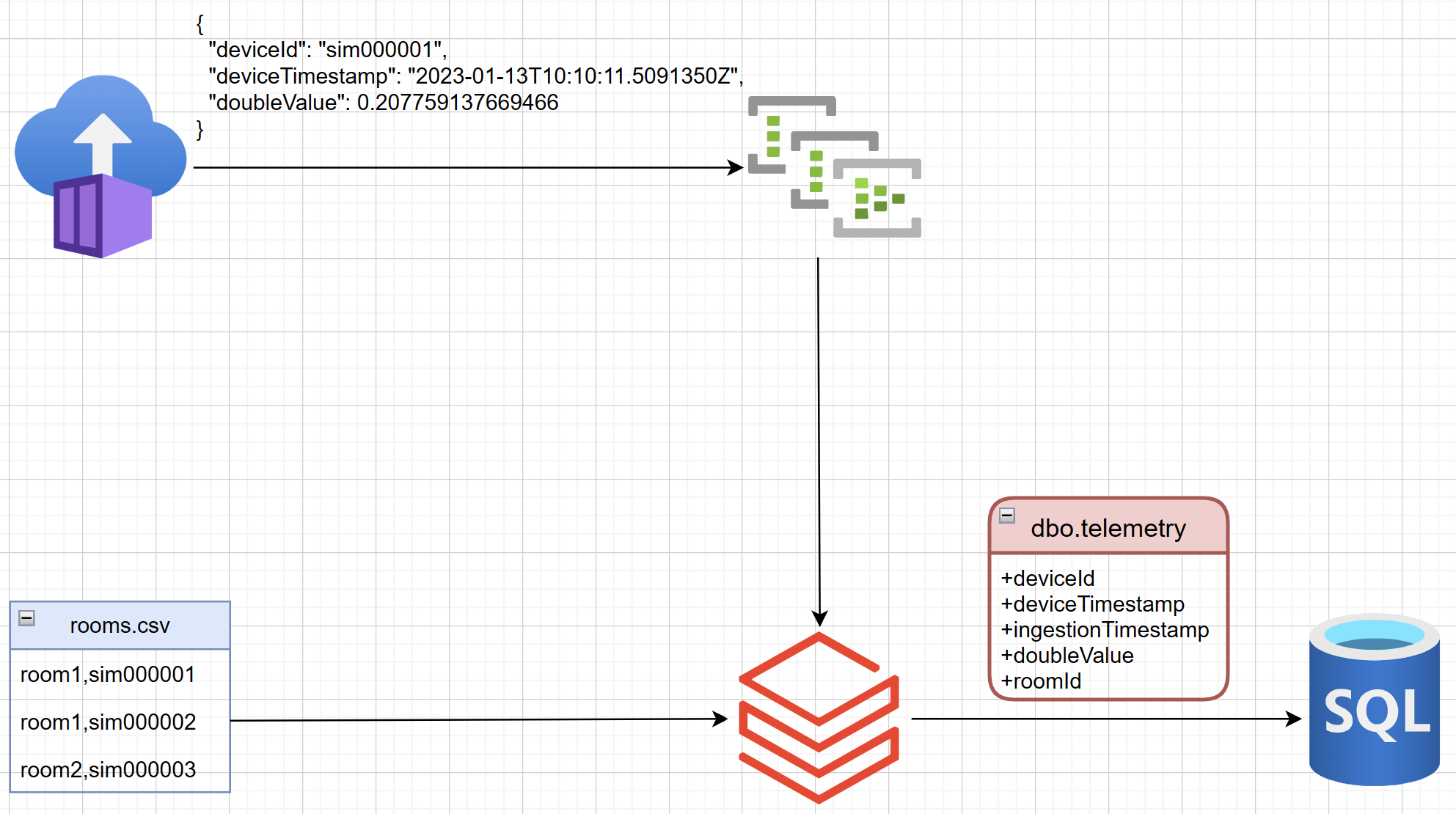

The IoT Telemetry Simulator is hosted in Azure Container Instances. It sends generated data to a Kafka broker, exposed through Azure Event Hubs. The ETL workload is represented as a Databricks Job. This job is responsible for reading and enriching the data from the sources and storing the final output in an Azure SQL DB.
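Because Event Hubs exposes a Kafka-compatible endpoint, the Spark job can read from it with the standard Kafka source. The sketch below shows one way the reader options might be assembled. The function name, its parameters, and the `startingOffsets` choice are assumptions for illustration; the sample's actual configuration is not shown here.

```python
def event_hubs_kafka_options(namespace, connection_string, topic, shaded=True):
    """Build Spark Kafka-source options for an Event Hubs Kafka endpoint.

    Hypothetical helper: namespace, connection_string and topic are
    placeholders supplied by the caller.
    """
    # Databricks runtimes bundle a shaded Kafka client, hence the class prefix.
    prefix = "kafkashaded." if shaded else ""
    jaas = (
        f"{prefix}org.apache.kafka.common.security.plain.PlainLoginModule required "
        f'username="$ConnectionString" password="{connection_string}";'
    )
    return {
        # Event Hubs serves the Kafka protocol on port 9093 over SASL_SSL.
        "kafka.bootstrap.servers": f"{namespace}.servicebus.windows.net:9093",
        "kafka.security.protocol": "SASL_SSL",
        "kafka.sasl.mechanism": "PLAIN",
        "kafka.sasl.jaas.config": jaas,
        "subscribe": topic,
        "startingOffsets": "latest",  # assumption: only new messages matter
    }


# Possible usage inside a job, given an existing SparkSession `spark`:
#   opts = event_hubs_kafka_options("<namespace>", "<connection string>", "<topic>")
#   raw = spark.readStream.format("kafka").options(**opts).load()
```

Keeping the connection details in a plain dictionary leaves the reading code itself unchanged when the broker changes, which is what makes the same job portable to a different Kafka server.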

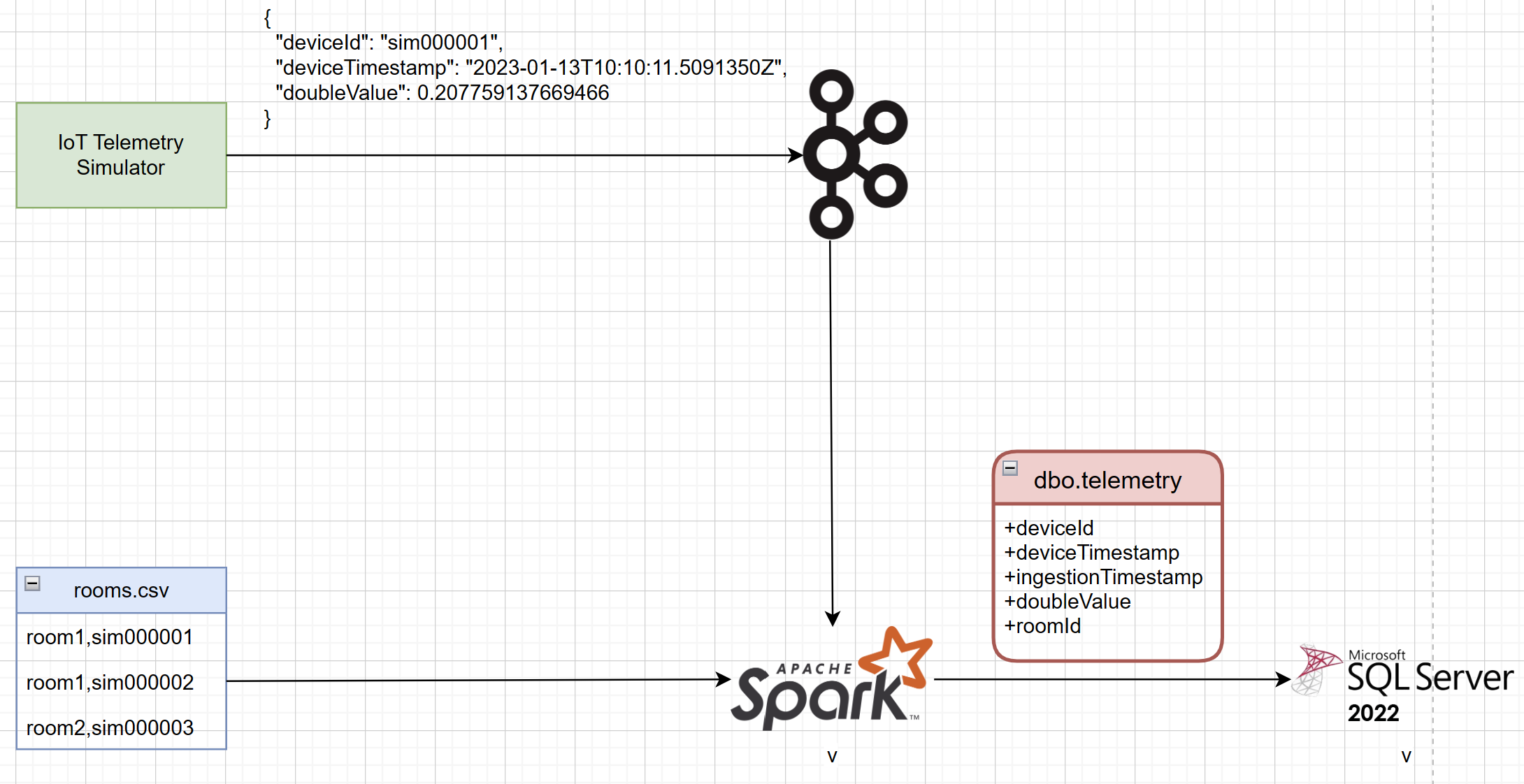

In the Edge version, we provision and orchestrate everything with Docker Compose.

Note: Please use the `docker compose` tool instead of the older `docker-compose` version.

The pipeline begins with the Azure IoT Device Telemetry Simulator sending synthetic Time Series data to a Confluent Community Kafka Server. A PySpark app then processes the Time Series, applies some metadata, and writes the enriched results to a SQL DB hosted in a SQL Server 2022 Linux container. The key point to note here is that the data processing logic is shared between the Cloud and Edge through the `common_lib` Wheel.
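The shared-logic idea can be illustrated with a small sketch: the transformation depends only on the DataFrame API, while environment-specific details such as the SQL target are passed in from outside. Everything here is hypothetical, including the function names and the `deviceId` join key, and none of it is taken from `common_lib` itself.

```python
def enrich_telemetry(telemetry_df, metadata_df, key="deviceId"):
    """Attach device metadata to each telemetry row via a left join on `key`.

    Only DataFrame.join is used, so the same function can run unchanged in
    a Databricks job and in a local PySpark container.
    """
    return telemetry_df.join(metadata_df, on=key, how="left")


def sql_server_sink_options(host, database, table, user, password, port=1433):
    """Build Spark JDBC writer options for a SQL Server or Azure SQL target."""
    return {
        "url": f"jdbc:sqlserver://{host}:{port};databaseName={database}",
        "dbtable": table,
        "user": user,
        "password": password,
        "driver": "com.microsoft.sqlserver.jdbc.SQLServerDriver",
    }


# Possible usage with batch DataFrames (a streaming job would typically
# perform the write inside foreachBatch):
#   enriched = enrich_telemetry(telemetry_df, metadata_df)
#   opts = sql_server_sink_options("<host>", "<db>", "<table>", "<user>", "<pw>")
#   enriched.write.format("jdbc").options(**opts).mode("append").save()
```

With this split, only the host name and credentials differ between the Azure SQL DB and the SQL Server container; the enrichment function is the part that would live in the shared wheel.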

- To validate that the E2E Edge pipeline is working correctly, we can execute the script `smoke-test.sh`. This script will send messages using the IoT Telemetry Simulator and then query the SQL DB to ensure the messages were processed correctly.
- Unit tests are available for the `common_lib` Wheel in PyTest.
- Both types of tests are also executed in the CI pipeline.
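A smoke test of this kind has to allow for pipeline latency between sending messages and seeing rows in the database. The sketch below shows one possible polling approach in Python. It is not the implementation of `smoke-test.sh`, and `count_rows` stands in for whatever query the caller uses to count processed rows.

```python
import time


def wait_for_row_count(count_rows, expected, timeout_s=60.0, interval_s=2.0):
    """Poll count_rows() until it returns at least `expected` or time runs out.

    Returns True on success and False on timeout. `count_rows` is a
    caller-supplied callable (hypothetical), e.g. one running a COUNT query.
    """
    deadline = time.monotonic() + timeout_s
    while True:
        if count_rows() >= expected:
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval_s)
```

Polling against a deadline avoids a fixed sleep, so the check finishes as soon as the rows arrive and still fails cleanly if they never do.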

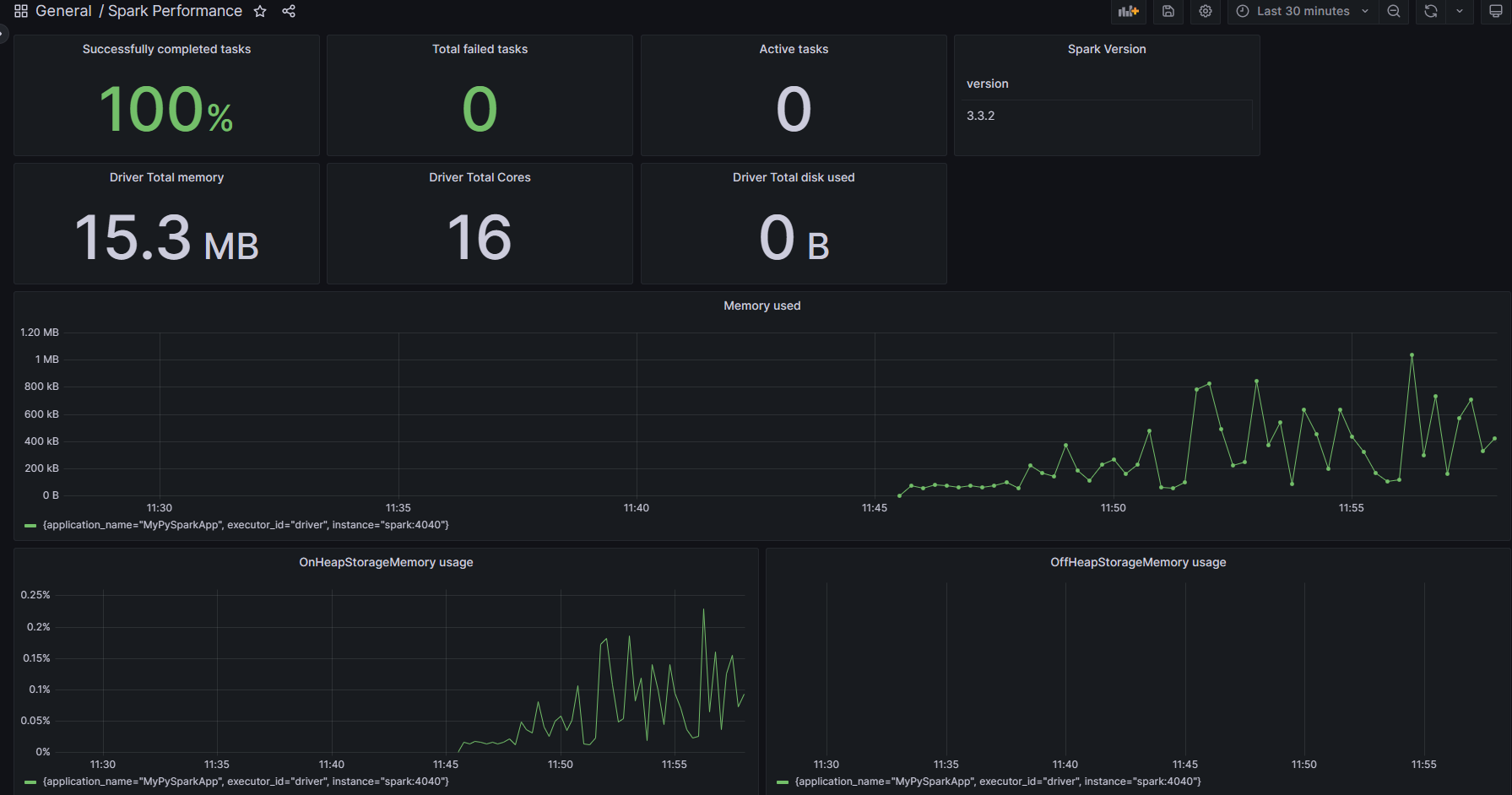

The Edge version of the solution also deploys additional containers for Prometheus and Grafana. The Grafana dashboard below relies on the Spark 3.0 metrics emitted in the Prometheus format.
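Spark 3.0 can expose metrics in the Prometheus format through its built-in `PrometheusServlet` sink and the `spark.ui.prometheus.enabled` setting. The sketch below collects these settings and turns them into `--conf` arguments. The helper names are hypothetical, and the sample's actual metrics configuration may differ.

```python
def prometheus_spark_conf(path="/metrics/prometheus"):
    """Return Spark settings that expose metrics in the Prometheus format."""
    return {
        # Executor metrics are served by the driver UI when this is enabled.
        "spark.ui.prometheus.enabled": "true",
        # Built-in servlet sink available since Spark 3.0.
        "spark.metrics.conf.*.sink.prometheusServlet.class":
            "org.apache.spark.metrics.sink.PrometheusServlet",
        "spark.metrics.conf.*.sink.prometheusServlet.path": path,
    }


def to_submit_args(conf):
    """Flatten a settings dict into spark-submit style --conf arguments."""
    args = []
    for key, value in sorted(conf.items()):
        args += ["--conf", f"{key}={value}"]
    return args
```

Prometheus would then scrape the configured path on the Spark UI port, and Grafana would read the resulting series from Prometheus.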

GitHub Codespaces is supported through VS Code Dev Containers. The minimum required machine type is 4-core.