🦄️ MOFA-Video: Controllable Image Animation via Generative Motion Field Adaptions in Frozen Image-to-Video Diffusion Model

We have released the Gradio inference code and the checkpoints for hybrid controls! Please refer to the instructions here.

Stay tuned. Feel free to raise issues for bug reports or any questions!

- (2024.05.31) Gradio demo and checkpoints for trajectory-based image animation

- (2024.06.22) Gradio demo and checkpoints for image animation with hybrid control

- Inference scripts and checkpoints for keypoint-based facial image animation

- Training scripts for trajectory-based image animation

- Training scripts for keypoint-based facial image animation

Demo gallery (animations not preserved): Trajectory + Landmark Control, Trajectory Control, Landmark Control.

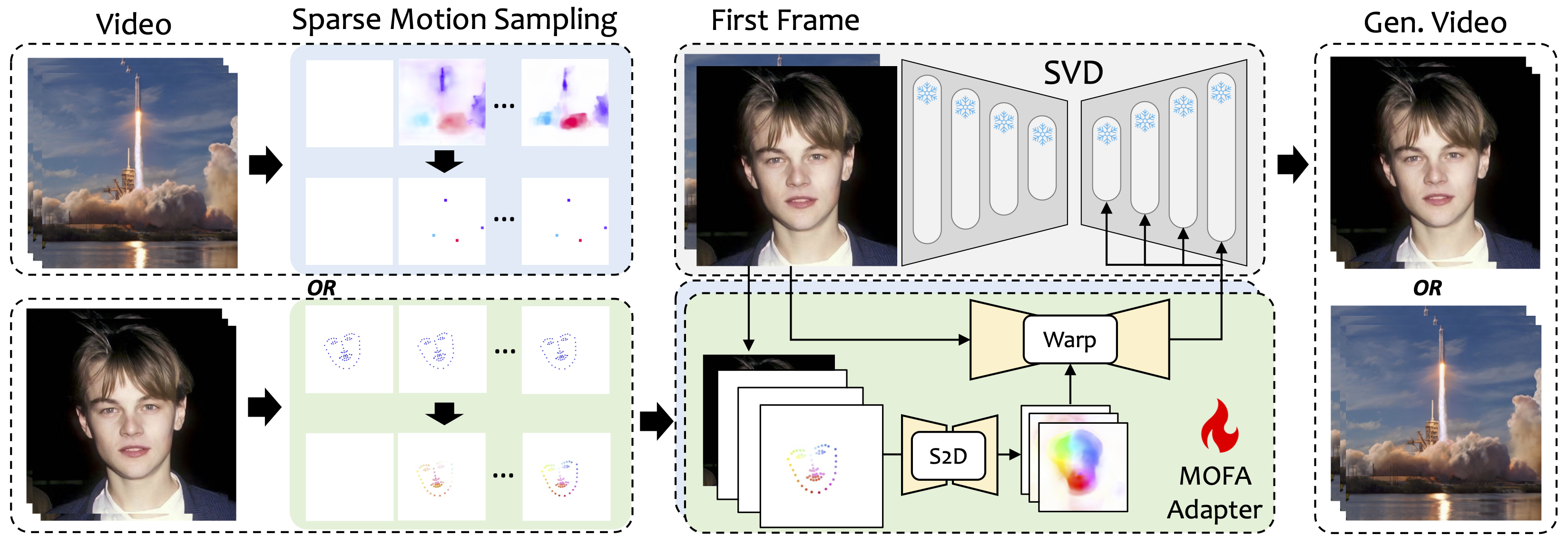

We introduce MOFA-Video, a method designed to adapt motions from different domains to a frozen video diffusion model. By employing sparse-to-dense (S2D) motion generation and flow-based motion adaptation, MOFA-Video can effectively animate a single image using various types of control signals, including trajectories, keypoint sequences, and their combinations.
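The two stages named above can be illustrated with a minimal NumPy sketch. This is not MOFA-Video's implementation. MOFA-Video learns the sparse-to-dense step with a network, and this sketch substitutes plain inverse-distance weighting. The warp uses nearest-neighbour sampling with an assumed convention: `flow[y, x]` points from each output pixel to the source pixel it reads. The function names `sparse_to_dense` and `backward_warp` are hypothetical.

```python
import numpy as np


def sparse_to_dense(points, displacements, height, width, power=2.0, eps=1e-6):
    """Densify sparse motion into an (H, W, 2) flow field.

    points: (N, 2) array of (x, y) control-point coordinates.
    displacements: (N, 2) array of (dx, dy) motion at those points.
    Assumption: inverse-distance weighting stands in for a learned S2D network.
    """
    ys, xs = np.mgrid[0:height, 0:width]
    grid = np.stack([xs, ys], axis=-1).astype(np.float64)  # grid[y, x] == (x, y)
    diff = grid[:, :, None, :] - points[None, None, :, :]  # (H, W, N, 2)
    dist = np.linalg.norm(diff, axis=-1)  # (H, W, N)
    weights = 1.0 / (dist + eps) ** power  # nearer control points dominate
    weights /= weights.sum(axis=-1, keepdims=True)  # weights sum to 1 per pixel
    return np.einsum("hwn,nc->hwc", weights, displacements)


def backward_warp(feature, flow):
    """Warp an (H, W, C) feature map with nearest-neighbour sampling.

    Assumed convention: output[y, x] = feature[y + dy, x + dx], where
    (dx, dy) = flow[y, x]; source coordinates are clamped to the image.
    """
    h, w = feature.shape[:2]
    ys, xs = np.mgrid[0:h, 0:w]
    src_x = np.clip(np.rint(xs + flow[..., 0]), 0, w - 1).astype(int)
    src_y = np.clip(np.rint(ys + flow[..., 1]), 0, h - 1).astype(int)
    return feature[src_y, src_x]


# Two control points: the top-left moves right, the bottom-right moves down.
points = np.array([[0.0, 0.0], [4.0, 4.0]])
displacements = np.array([[1.0, 0.0], [0.0, 1.0]])
flow = sparse_to_dense(points, displacements, height=5, width=5)
print(flow[2, 2])  # [0.5 0.5], since (2, 2) is equidistant from both points

feature = np.arange(25, dtype=np.float64).reshape(5, 5, 1)
warped = backward_warp(feature, flow)
```

Inverse-distance weighting reproduces each control point's motion (up to the `eps` term) and blends smoothly between points. A learned S2D network can additionally infer object-consistent motion, which this heuristic cannot.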

During the training stage, we generate sparse control signals through sparse motion sampling and then train different MOFA-Adapters to generate videos with the pre-trained Stable Video Diffusion (SVD) model. During the inference stage, different MOFA-Adapters can be combined to jointly control the frozen SVD.
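The following Python sketch illustrates both stages under stated assumptions. `sample_sparse_motion` draws control points from a dense flow with probability proportional to motion magnitude. This is a simple stand-in, and the repository's actual sampling strategy may differ. `combine_adapter_residuals` sums per-layer adapter outputs with per-adapter scales, in the style of multi-ControlNet conditioning. Both names are hypothetical. The adapters themselves are represented only by their output arrays.

```python
import numpy as np


def sample_sparse_motion(flow, k, rng):
    """Draw k distinct control points from a dense (H, W, 2) flow field.

    Assumption: points are sampled with probability proportional to motion
    magnitude, so moving regions are preferred. Returns (k, 2) arrays of
    (x, y) coordinates and the (dx, dy) displacements at those points.
    """
    h, w = flow.shape[:2]
    magnitude = np.linalg.norm(flow, axis=-1).ravel()
    probs = magnitude + 1e-8  # keep every pixel selectable, even on static frames
    probs = probs / probs.sum()
    idx = rng.choice(h * w, size=k, replace=False, p=probs)
    ys, xs = np.unravel_index(idx, (h, w))
    points = np.stack([xs, ys], axis=-1).astype(np.float64)
    return points, flow[ys, xs]


def combine_adapter_residuals(residual_sets, scales):
    """Sum matching per-layer residuals from several adapters (hypothetical helper).

    residual_sets[a][i] is adapter a's residual for backbone layer i.
    """
    assert len(residual_sets) == len(scales), "one scale per adapter"
    return [
        sum(scale * r for scale, r in zip(scales, layer))
        for layer in zip(*residual_sets)
    ]


rng = np.random.default_rng(0)
dense_flow = rng.normal(size=(8, 8, 2))
points, displacements = sample_sparse_motion(dense_flow, k=5, rng=rng)
print(points.shape, displacements.shape)  # (5, 2) (5, 2)
```

Summation is the simplest way to let several adapters steer the same frozen backbone. Each adapter only adds a correction, and the SVD weights are never modified. MOFA-Video may weight or mask the adapters differently.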

Our inference demo is based on Gradio. Please refer to the instructions here.

@article{niu2024mofa,
  title={MOFA-Video: Controllable Image Animation via Generative Motion Field Adaptions in Frozen Image-to-Video Diffusion Model},
  author={Niu, Muyao and Cun, Xiaodong and Wang, Xintao and Zhang, Yong and Shan, Ying and Zheng, Yinqiang},
  journal={arXiv preprint arXiv:2405.20222},
  year={2024}
}

We sincerely appreciate the code release of the following projects: DragNUWA, SadTalker, AniPortrait, Diffusers, SVD_Xtend, Conditional-Motion-Propagation, and Unimatch.