A Pragmatic Look at Deep Imitation Learning

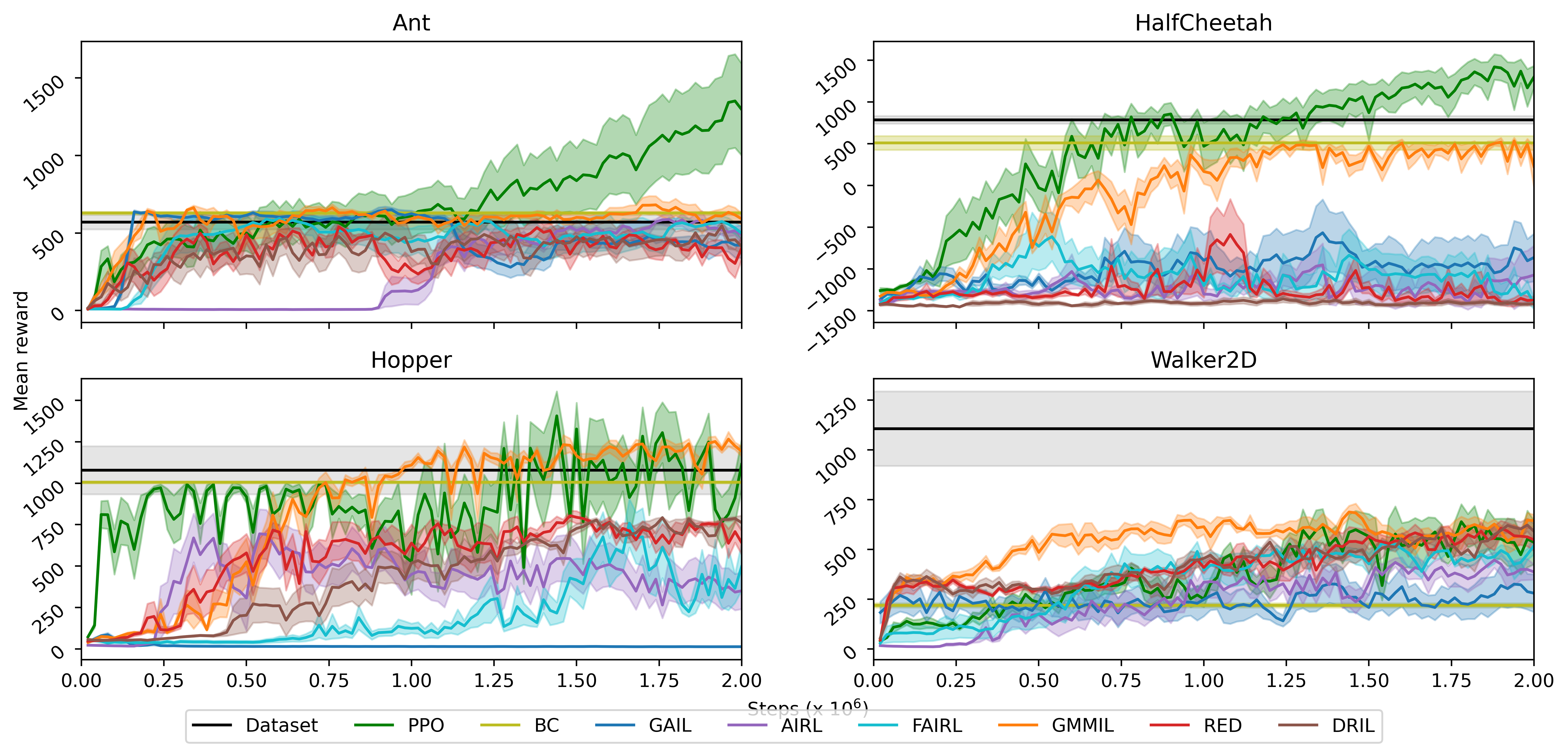

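Imitation learning algorithms (with PPO [1]):

- ABC [2]
- AIRL [3]
- BC [4]
- DRIL [5]
- FAIRL [6]
- GAIL [7]
- GMMIL [8]
- PUGAIL [9]
- PWIL [10]
- RED [11]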

Requirements

The code runs on Python 3.7 (Ax requires Python 3.7 or later). You can install most of the requirements by running:

pip install -r requirements.txt

Notable required packages are PyTorch, OpenAI Gym, D4RL-pybullet, Hydra and Ax. If you fail to install d4rl-pybullet, install it with pip directly from GitHub using:

pip install git+https://github.com/takuseno/d4rl-pybullet

Note:

For hyperparameter optimization, the code uses the Hydra Ax sweeper plugin, which is not included in requirements.txt. Ax requires a specific version of PyTorch, so installing it into an existing environment may upgrade or downgrade your PyTorch installation. The Ax sweeper can be installed with:

pip install hydra-ax-sweeper --upgrade
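Before launching a run, a small Python check like the one below can confirm that the interpreter and the notable packages are present. This is a sketch and not part of the repository. The helper names are hypothetical. The import names are assumptions about how each package exposes itself, in particular `d4rl_pybullet` for D4RL-pybullet and `hydra_plugins.hydra_ax_sweeper` for the Ax sweeper plugin.

```python
import importlib.util
import sys

# Import names are assumptions: a PyPI package name can differ from the module
# you import (e.g. d4rl-pybullet is assumed to be imported as d4rl_pybullet).
REQUIRED_MODULES = {
    "PyTorch": "torch",
    "OpenAI Gym": "gym",
    "D4RL-pybullet": "d4rl_pybullet",
    "Hydra": "hydra",
    "Ax": "ax",
    # Assumed module path of the Hydra Ax sweeper plugin (optional, only
    # needed for hyperparameter optimization).
    "Hydra Ax sweeper": "hydra_plugins.hydra_ax_sweeper",
}


def module_available(name: str) -> bool:
    """Return True if the module `name` can be located.

    Top-level modules are found without being executed; for dotted names,
    find_spec imports the parent package first and raises if it is missing.
    """
    try:
        return importlib.util.find_spec(name) is not None
    except (ImportError, ValueError):
        return False


def check_environment(min_python=(3, 7)) -> dict:
    """Report whether the interpreter version and each package are OK."""
    report = {"python_ok": sys.version_info[:2] >= min_python}
    for label, module in REQUIRED_MODULES.items():
        report[label] = module_available(module)
    return report


if __name__ == "__main__":
    for item, ok in check_environment().items():
        print(f"{item:20s} {'OK' if ok else 'MISSING'}")
```

Run it with the same interpreter you will use for main.py. A MISSING entry for the Ax sweeper only matters if you plan to run hyperparameter optimization.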

Run

Training for each imitation learning algorithm can be started with:

python main.py algorithm=ALG/ENV

where ALG is one of [AIRL|BC|DRIL|FAIRL|GAIL|GMMIL|PUGAIL|RED] and ENV is one of [ant|halfcheetah|hopper|walker2d].

Example:

python main.py algorithm=AIRL/hopper
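When scripting many runs, it helps to validate the ALG/ENV pair before building the command. The sketch below uses hypothetical helper names. Its option lists are copied from this README and may lag behind the conf/algorithm directory in the repository.

```python
from itertools import product

# Options as listed in this README (hypothetical constants, not repository code).
ALGORITHMS = ["AIRL", "BC", "DRIL", "FAIRL", "GAIL", "GMMIL", "PUGAIL", "RED"]
ENVIRONMENTS = ["ant", "halfcheetah", "hopper", "walker2d"]


def training_command(alg: str, env: str) -> str:
    """Build the `python main.py algorithm=ALG/ENV` command for one run."""
    if alg not in ALGORITHMS:
        raise ValueError(f"unknown algorithm {alg!r}; expected one of {ALGORITHMS}")
    if env not in ENVIRONMENTS:
        raise ValueError(f"unknown environment {env!r}; expected one of {ENVIRONMENTS}")
    return f"python main.py algorithm={alg}/{env}"


def all_training_commands() -> list:
    """Return one command per ALG/ENV combination (8 x 4 = 32 runs)."""
    return [training_command(a, e) for a, e in product(ALGORITHMS, ENVIRONMENTS)]


if __name__ == "__main__":
    print(training_command("AIRL", "hopper"))  # python main.py algorithm=AIRL/hopper
    print(len(all_training_commands()))  # 32
```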

Hyperparameters can be found in conf/config.yaml and conf/algorithm/ALG/ENV.yaml, with the latter containing the algorithm- and environment-specific hyperparameters that were tuned with Ax.

The resulting model will be saved in ./outputs/ENV_ALG/m-d-H-M, where the last subfolder indicates the date and time of the run (month-day-hour-minute).
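To locate a run's outputs programmatically, the folder path can be rebuilt from the ENV and ALG placeholders and the run time. This is a hypothetical helper. It assumes the m-d-H-M folder name uses zero-padded two-digit fields and a 24-hour clock. If your folders look different, check the repository's Hydra run-directory configuration.

```python
from datetime import datetime
from pathlib import Path
from typing import Optional


def run_output_dir(env: str, alg: str, when: Optional[datetime] = None) -> Path:
    """Return outputs/ENV_ALG/m-d-H-M for a run started at `when`.

    Assumes zero-padded month, day, hour (24-hour clock) and minute in local
    time; defaults to the current time when `when` is not given.
    """
    if when is None:
        when = datetime.now()
    return Path("outputs") / f"{env}_{alg}" / when.strftime("%m-%d-%H-%M")


if __name__ == "__main__":
    # Prints outputs/hopper_AIRL/11-03-14-07 on POSIX systems.
    print(run_output_dir("hopper", "AIRL", datetime(2020, 11, 3, 14, 7)))
```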

Run hyperparameter optimization

Hyperparameter optimization can be run by adding Hydra's -m (multirun) flag.

Example:

python main.py -m algorithm=AIRL/hopper hyperparam_opt=AIRL hydra/sweeper=ax

The hyperparam_opt option specifies which parameters to optimize (the default, IL, contains all parameters).

Note that the Ax sweeper must be installed for the above command to work (see the Requirements section).

Run with seeds

You can run each algorithm with multiple seeds using:

python main.py -m algorithm=AIRL/hopper seed=1,2,3,4,5

or use the provided bash script:

./scripts/run_seed_experiments.sh ALG ENV

The results will be available in the ./output/seed_sweeper_ENV_ALG folder (note: running the script twice will overwrite the previous results).
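With -m and Hydra's default (basic) sweeper, a comma-separated value is treated as a sweep, and one job is launched per value. This is also why the values must not contain spaces: the shell would otherwise split them into separate arguments. When several keys are swept, the basic sweeper takes their Cartesian product. The function below is a simplified model of that expansion, for illustration only. Its name is hypothetical, and it ignores Hydra's quoting, range and choice syntax.

```python
from itertools import product


def expand_sweep(overrides: list) -> list:
    """Expand `key=v1,v2,...` overrides into one override list per job.

    Simplified model of Hydra's basic sweeper in multirun mode: every
    comma-separated value is split out, and the jobs are the Cartesian
    product over all keys. A key with a single value appears in every job.
    """
    keys, value_lists = [], []
    for override in overrides:
        key, _, values = override.partition("=")
        keys.append(key)
        value_lists.append(values.split(","))
    return [
        [f"{k}={v}" for k, v in zip(keys, combo)]
        for combo in product(*value_lists)
    ]


if __name__ == "__main__":
    for job in expand_sweep(["algorithm=AIRL/hopper", "seed=1,2,3,4,5"]):
        print(" ".join(job))  # algorithm=AIRL/hopper seed=1 ... seed=5
```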

Options that can be modified in config include:

- State-only imitation learning: state-only: true/false
- Absorbing state indicator [12]: absorbing: true/false
- R1 gradient regularisation [13]: r1-reg-coeff: 0.5 (in each algorithm subfolder)
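The absorbing-state option follows [12]. Every state gets an extra indicator feature. An episode that ends in a true terminal state (rather than a time-limit cutoff) transitions into a dedicated absorbing state, which then loops back to itself. This lets the discriminator learn a reward for terminating instead of implicitly assigning zero. The sketch below makes several assumptions. Transitions are plain (state, next_state) lists, actions are omitted for brevity, and the function names are hypothetical rather than the repository's API.

```python
from typing import List, Sequence, Tuple

# Hypothetical representation: (state, next_state), actions omitted.
Transition = Tuple[List[float], List[float]]


def add_indicator(state: Sequence[float], absorbing: bool = False) -> List[float]:
    """Append the absorbing flag: 0.0 for real states, 1.0 for the absorbing state."""
    return list(state) + [1.0 if absorbing else 0.0]


def absorbing_state(state_dim: int) -> List[float]:
    """The absorbing state: all-zero features with the indicator set to 1."""
    return add_indicator([0.0] * state_dim, absorbing=True)


def wrap_episode(states: Sequence[Sequence[float]], terminated: bool) -> List[Transition]:
    """Turn one episode's states into indicator-augmented (s, s') pairs.

    If the episode ended in a true terminal state (terminated=True), append
    a transition into the absorbing state and an absorbing -> absorbing
    self-loop; a time-limit truncation gets neither.
    """
    dim = len(states[0])
    wrapped = [add_indicator(s) for s in states]
    transitions = list(zip(wrapped[:-1], wrapped[1:]))
    if terminated:
        absorbing = absorbing_state(dim)
        transitions.append((wrapped[-1], absorbing))
        transitions.append((absorbing, absorbing))
    return transitions
```

For example, a terminated three-state episode yields two ordinary transitions plus the two absorbing ones. A truncated episode yields only the ordinary ones.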

The state-only and absorbing options were not used for the results.
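The R1 penalty from [13] adds the squared norm of the discriminator's input gradient to the discriminator loss. The penalty is evaluated on real samples only, and in adversarial imitation learning those are the expert's samples. The sketch below uses these symbols:

- D_ψ is the discriminator output (logit).
- p_E is the expert data distribution.
- L_adv is the algorithm's usual discriminator loss.
- γ is the penalty weight.

γ is assumed here to correspond to r1-reg-coeff; the code may scale the coefficient differently, for instance by folding in the factor 1/2.

```latex
\mathcal{L}_D(\psi) = \mathcal{L}_{\text{adv}}(\psi)
  + \frac{\gamma}{2}\,\mathbb{E}_{x \sim p_E}\!\left[\left\lVert \nabla_x D_\psi(x) \right\rVert^2\right]
```

Because the penalty acts only on expert samples, it discourages the discriminator from changing sharply around the expert data. [13] shows that this kind of regularisation gives local convergence for GAN-style training.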

Results

Acknowledgements

Citation

If you find this work useful and would like to cite it, the following would be appropriate:

@misc{arulkumaran2020pragmatic,
  author = {Arulkumaran, Kai and Ogawa Lillrank, Dan},
  title = {A Pragmatic Look at Deep Imitation Learning},
  url = {https://github.com/Kaixhin/imitation-learning},
  year = {2020}
}

References

[1] Proximal Policy Optimization Algorithms

[2] Adversarial Behavioral Cloning

[3] Learning Robust Rewards with Adversarial Inverse Reinforcement Learning

[4] Efficient Training of Artificial Neural Networks for Autonomous Navigation

[5] Disagreement-Regularized Imitation Learning

[6] A Divergence Minimization Perspective on Imitation Learning Methods

[7] Generative Adversarial Imitation Learning

[8] Imitation Learning via Kernel Mean Embedding

[9] Positive-Unlabeled Reward Learning

[10] Primal Wasserstein Imitation Learning

[11] Random Expert Distillation: Imitation Learning via Expert Policy Support Estimation

[12] Discriminator-Actor-Critic: Addressing Sample Inefficiency and Reward Bias in Adversarial Imitation Learning

[13] Which Training Methods for GANs do actually Converge?