This repo is now archived. It was a fun research project and I learnt a lot, but all good things must come to an end...

- orbslam.py - toy implementation of monocular SLAM (ORB-SLAM) - INCOMPLETE

- model.py - neural network model; the architecture needs improvement and is currently just a basic ResNet

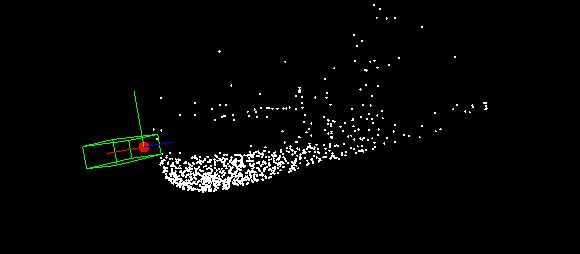

- renderer.py - Radar point cloud visualization, very slow lol


Machine Learning

- Research different ANN architectures

- Implement recurrence to capture the temporal dynamics of the scene

- Semantic Segmentation

- Look more into automatic labelling of moving vs. non-moving objects in the scene (I have my own little idea I want to implement 😄).

- Set up data trigger infrastructure for collecting data on edge cases


SLAM

- Read more of *Multiple View Geometry in Computer Vision* (Hartley & Zisserman)

- Figure out what to do after getting the camera matrix (the projection matrix?)

- Get at least a toy implementation of ORB-SLAM up and running


Misc.

- Leverage cloud GPUs for training and stuff (Google Colab)

- git gud

My final goal is to get a Level 2-3 self-driving system up and running, whilst learning the relevant concepts along the way.

- A bold-face symbol such as $\mathbf{x}$ always represents a column vector, and its transpose is the row vector $\mathbf{x}^\mathsf{T}$.

- A point in the plane is represented by the column vector $(x, y)^\mathsf{T}$, rather than its transpose, the row vector $(x, y)$.

- A line $ax + by + c = 0$ may naturally be represented by the vector $(a, b, c)^\mathsf{T}$.

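- Two vectors are equivalent if one is a nonzero multiple of the other: $k(a, b, c)^\mathsf{T} \sim (a, b, c)^\mathsf{T}$ for any $k \neq 0$. The set of equivalence classes of vectors in $\mathbb{R}^3 - (0, 0, 0)^\mathsf{T}$ forms the projective space $\mathbb{P}^2$.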

- A conic is a curve described by a second-degree equation in the plane. In Euclidean geometry, conics are of three main types: hyperbola, ellipse, and parabola.

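- The equation of a conic in inhomogeneous coordinates is $ax^2 + bxy + cy^2 + dx + ey + f = 0$, i.e. a polynomial of degree 2. “Homogenizing” this by the replacements $x \to x_1/x_3$, $y \to x_2/x_3$ (and multiplying through by $x_3^2$) gives

  $$ax_1^2 + bx_1x_2 + cx_2^2 + dx_1x_3 + ex_2x_3 + fx_3^2 = 0 \qquad (2.1)$$

  or, in matrix form, $\mathbf{x}^\mathsf{T} C \mathbf{x} = 0$, where

  $$C = \begin{bmatrix} a & b/2 & d/2 \\ b/2 & c & e/2 \\ d/2 & e/2 & f \end{bmatrix}.$$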
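The notation above can be checked numerically. Below is a minimal NumPy sketch (my own illustration, not code from this repo; all function names are hypothetical): points become homogeneous 3-vectors, a point lies on a line when $\mathbf{x}^\mathsf{T}\mathbf{l} = 0$, two lines meet at their cross product (a standard result from the same chapter), and a point lies on a conic when $\mathbf{x}^\mathsf{T} C \mathbf{x} = 0$.

```python
import numpy as np

def to_homogeneous(x, y):
    """Point (x, y) in the plane -> homogeneous column vector (x, y, 1)^T."""
    return np.array([x, y, 1.0])

def from_homogeneous(p):
    """Inverse map; any nonzero multiple k * p gives back the same (x, y)."""
    return p[:2] / p[2]

def point_on_line(p, l, tol=1e-9):
    """p lies on the line l = (a, b, c)^T exactly when p^T l = 0."""
    return abs(p @ l) < tol

def intersect(l1, l2):
    """The intersection point of two lines is their cross product."""
    return np.cross(l1, l2)

def conic_matrix(a, b, c, d, e, f):
    """Symmetric C such that x^T C x equals the left-hand side of (2.1)."""
    return np.array([
        [a,     b / 2, d / 2],
        [b / 2, c,     e / 2],
        [d / 2, e / 2, f],
    ])

def on_conic(C, p, tol=1e-9):
    """p lies on the conic C when p^T C p = 0."""
    return abs(p @ C @ p) < tol
```

For example, the unit circle $x^2 + y^2 - 1 = 0$ is `conic_matrix(1, 0, 1, 0, 0, -1)`, and `on_conic` returns `True` for `to_homogeneous(0.6, 0.8)` and for any nonzero multiple of it, which is the equivalence-class idea behind $\mathbb{P}^2$.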

Step 1: Get two images, and denote points in the first image by $\mathbf{x}$ and the corresponding points in the next image by $\mathbf{x}'$.

Step 2: Extract features (keypoints) $(u, v)$ from each image, along with a descriptor for every keypoint, using ORB.

Step 3: Use KNN on the keypoint descriptors to match points between the two images.
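KNN matching on ORB descriptors uses Hamming distance, because ORB descriptors are binary strings (256 bits, stored as 32 bytes). A brute-force sketch in NumPy follows; the function names are hypothetical, and k = 2 is chosen because the ratio test needs the two nearest neighbours. In OpenCV the same thing is usually done with `cv2.BFMatcher(cv2.NORM_HAMMING)` and its `knnMatch(des1, des2, k=2)` method.

```python
import numpy as np

def hamming_distances(desc1, desc2):
    """All pairwise Hamming distances between binary descriptors.

    desc1: (N1, B) uint8, desc2: (N2, B) uint8; ORB gives B = 32 bytes.
    Returns an (N1, N2) array counting differing bits.
    """
    xor = np.bitwise_xor(desc1[:, None, :], desc2[None, :, :])
    return np.unpackbits(xor, axis=-1).sum(axis=-1)

def knn_match(desc1, desc2, k=2):
    """Brute-force KNN: for each query descriptor in desc1, the k closest
    descriptors in desc2 as [(train_idx, distance), ...], nearest first."""
    dists = hamming_distances(desc1, desc2)
    nearest = np.argsort(dists, axis=1, kind="stable")[:, :k]
    return [[(int(j), int(dists[i, j])) for j in row]
            for i, row in enumerate(nearest)]
```

Brute force is O(N1 · N2), which is fine for a few thousand keypoints per frame; larger sets usually switch to an approximate index.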

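Step 4: Filter the matches using the ratio test: keep a match only if its descriptor distance is clearly smaller than the distance to the second-nearest candidate.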
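A sketch of the ratio test on the output of a k = 2 KNN match, in plain Python. The input format (a list of `(train_idx, distance)` pairs per query, nearest first) and the 0.75 threshold are assumptions; 0.75 is a commonly used value, and Lowe's original paper used 0.8.

```python
def ratio_test(knn_matches, ratio=0.75):
    """Lowe's ratio test.

    knn_matches[i] is [(j_best, d_best), (j_second, d_second)] for query i.
    Keep (i, j_best) only if d_best < ratio * d_second, i.e. the best match
    is clearly better than the runner-up.
    """
    good = []
    for i, neighbours in enumerate(knn_matches):
        if len(neighbours) < 2:
            continue  # no runner-up, so ambiguity cannot be judged
        (j_best, d_best), (_, d_second) = neighbours[0], neighbours[1]
        if d_best < ratio * d_second:
            good.append((i, j_best))
    return good
```

Rejecting ambiguous matches here is cheap and removes many of the outliers that RANSAC would otherwise have to deal with.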

Step 5: Estimate the fundamental matrix $F$ with the 8-point algorithm, using RANSAC to filter out outlier matches.
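A NumPy sketch of the normalized 8-point algorithm wrapped in RANSAC, assuming pixel coordinates as `(N, 2)` arrays. The Hartley normalization, the Sampson-distance inlier test, the iteration count and the threshold (in squared pixels) are my assumptions, not taken from orbslam.py; OpenCV offers the same thing through `cv2.findFundamentalMat` with the `cv2.FM_RANSAC` flag.

```python
import numpy as np

def hartley_normalize(pts):
    """Translate points (N, 2) to zero mean and scale to mean distance sqrt(2).
    Returns homogeneous points (N, 3) and the 3x3 transform T."""
    centroid = pts.mean(axis=0)
    scale = np.sqrt(2) / np.linalg.norm(pts - centroid, axis=1).mean()
    T = np.array([[scale, 0.0, -scale * centroid[0]],
                  [0.0, scale, -scale * centroid[1]],
                  [0.0, 0.0, 1.0]])
    pts_h = np.column_stack([pts, np.ones(len(pts))])
    return pts_h @ T.T, T

def eight_point(x1, x2):
    """Normalized 8-point algorithm: F with x2^T F x1 = 0 for N >= 8 pixel matches."""
    p1, T1 = hartley_normalize(x1)
    p2, T2 = hartley_normalize(x2)
    # One row of A f = 0 per match; f is F flattened row by row.
    A = np.einsum("ni,nj->nij", p2, p1).reshape(len(p1), 9)
    F = np.linalg.svd(A)[2][-1].reshape(3, 3)
    # Enforce rank 2 by zeroing the smallest singular value.
    U, S, Vt = np.linalg.svd(F)
    F = U @ np.diag([S[0], S[1], 0.0]) @ Vt
    F = T2.T @ F @ T1  # undo the normalization
    return F / np.linalg.norm(F)

def sampson_distance(F, x1, x2):
    """First-order geometric error (squared pixels) of each match under F."""
    p1 = np.column_stack([x1, np.ones(len(x1))])
    p2 = np.column_stack([x2, np.ones(len(x2))])
    Fp1 = p1 @ F.T   # row i is F @ p1[i]
    Ftp2 = p2 @ F    # row i is F.T @ p2[i]
    num = np.sum(p2 * Fp1, axis=1) ** 2
    den = Fp1[:, 0] ** 2 + Fp1[:, 1] ** 2 + Ftp2[:, 0] ** 2 + Ftp2[:, 1] ** 2
    return num / den

def ransac_fundamental(x1, x2, iters=500, thresh=1.0, seed=0):
    """Fit F on random 8-match samples, keep the model with the most inliers
    (Sampson distance < thresh), then refit on all of its inliers."""
    rng = np.random.default_rng(seed)
    best = np.zeros(len(x1), dtype=bool)
    for _ in range(iters):
        sample = rng.choice(len(x1), size=8, replace=False)
        F = eight_point(x1[sample], x2[sample])
        inliers = sampson_distance(F, x1, x2) < thresh
        if inliers.sum() > best.sum():
            best = inliers
    return eight_point(x1[best], x2[best]), best
```

Normalizing the points before building the linear system keeps it well conditioned; the unnormalized 8-point algorithm is noticeably more sensitive to noise when fed raw pixel coordinates.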

Step 6: Perform SVD on $F = U S V^\mathsf{T}$, and estimate the focal length from the singular values $s_1$ and $s_2$ of $S$ (need to explore a different method, currently unsure).

Step 7: Set the intrinsic matrix $K$ using the focal length and the center point of the pinhole image (the principal point). Need to investigate better methods for extracting the focal length and the other intrinsic parameters.
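A sketch of building $K$, assuming square pixels ($f_x = f_y = f$), zero skew, and the principal point at the image center, which matches the simple setup described above. The helper names and the 640×480 example are hypothetical.

```python
import numpy as np

def intrinsic_matrix(f, width, height):
    """Pinhole intrinsics K, assuming fx = fy = f, zero skew and the
    principal point at the image centre."""
    cx, cy = width / 2.0, height / 2.0
    return np.array([[f, 0.0, cx],
                     [0.0, f, cy],
                     [0.0, 0.0, 1.0]])

def project(K, X_cam):
    """Project 3D points in camera coordinates (N, 3) to pixels (N, 2)."""
    x = X_cam @ K.T
    return x[:, :2] / x[:, 2:3]
```

With `K = intrinsic_matrix(500.0, 640, 480)`, the camera-frame point $(1, 0.5, 5)$ projects to pixel $(420, 290)$.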

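Step 8: Repeat steps 1-4, then convert the matched image points to homogeneous coordinates, $(x, y) \to (x, y, 1)$, and normalize $\mathbf{x}$ and $\mathbf{x}'$ into $\hat{\mathbf{x}}$ and $\hat{\mathbf{x}}'$:

$$\hat{\mathbf{x}} = K^{-1}\mathbf{x}, \qquad \hat{\mathbf{x}}' = K^{-1}\mathbf{x}'$$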
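The normalization $\hat{\mathbf{x}} = K^{-1}\mathbf{x}$ as a short NumPy sketch; `normalize_points` is a hypothetical helper that expects pixel points as an `(N, 2)` array.

```python
import numpy as np

def normalize_points(pts, K):
    """Pixel points (N, 2) -> normalized camera coordinates (N, 3).

    Each (x, y) is lifted to the homogeneous (x, y, 1), then x_hat = K^-1 x.
    np.linalg.solve is used instead of forming K^-1 explicitly.
    """
    pts_h = np.column_stack([pts, np.ones(len(pts))])
    return np.linalg.solve(K, pts_h.T).T
```

Solving the linear system rather than inverting $K$ is the usual numerically safer habit, though for a 3×3 upper-triangular $K$ either works in practice.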

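Step 9: Find the essential matrix $E$ from the normalized coordinates, using the epipolar constraint $\hat{\mathbf{x}}'^\mathsf{T} E \hat{\mathbf{x}} = 0$ (when both images share the same $K$, equivalently $E = K^\mathsf{T} F K$).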
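A sketch of a linear estimate of $E$ from normalized matches: each match contributes one row of a homogeneous system, as in the 8-point algorithm, but with no pixel normalization since $\hat{\mathbf{x}}$ no longer carries pixel-scale values. The function names are hypothetical; `skew` builds $[\mathbf{t}]_\times$ so that, for camera motion $\mathbf{X}' = R\mathbf{X} + \mathbf{t}$, the true essential matrix is `skew(t) @ R`.

```python
import numpy as np

def skew(t):
    """Cross-product matrix: skew(t) @ v == np.cross(t, v)."""
    return np.array([[0.0, -t[2], t[1]],
                     [t[2], 0.0, -t[0]],
                     [-t[1], t[0], 0.0]])

def estimate_essential(xh1, xh2):
    """Linear estimate of E from N >= 8 normalized matches (N, 3),
    using one equation xh2^T E xh1 = 0 per match."""
    A = np.einsum("ni,nj->nij", xh2, xh1).reshape(len(xh1), 9)
    E = np.linalg.svd(A)[2][-1].reshape(3, 3)
    return E / np.linalg.norm(E)

def essential_from_fundamental(F, K):
    """Alternative route when both images share the intrinsics K: E = K^T F K."""
    return K.T @ F @ K
```

With noisy matches, the linear estimate generally lacks the singular-value structure of a true essential matrix, which the SVD step that follows takes care of.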

Step 10: Perform singular value decomposition on $E$, $E = U \Sigma V^\mathsf{T}$.
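A sketch of the SVD step: a valid essential matrix has two equal nonzero singular values and one zero singular value, so after decomposing $E = U \Sigma V^\mathsf{T}$ the singular values are replaced by $(1, 1, 0)$. The helper name is hypothetical.

```python
import numpy as np

def enforce_essential(E):
    """SVD E = U diag(s1, s2, s3) V^T, then rebuild with singular values
    (1, 1, 0). Up to overall scale, this is the closest matrix with the
    essential-matrix structure in the Frobenius norm. Also returns the
    original singular values (descending) for inspection."""
    U, S, Vt = np.linalg.svd(E)
    return U @ np.diag([1.0, 1.0, 0.0]) @ Vt, S
```

For an already-valid $E = [\mathbf{t}]_\times R$ with $\lVert\mathbf{t}\rVert = 1$, this returns $E$ unchanged (up to rounding).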

Step 11: Extract the rotation $R$ and translation $\mathbf{t}$ as demonstrated here: Determining R and t from E
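A NumPy sketch of the standard SVD-based decomposition described in Hartley & Zisserman, with $W = \begin{bmatrix} 0 & -1 & 0 \\ 1 & 0 & 0 \\ 0 & 0 & 1 \end{bmatrix}$: it yields two rotations, $UWV^\mathsf{T}$ and $UW^\mathsf{T}V^\mathsf{T}$, and two translations, $\pm\mathbf{u}_3$, so four candidate poses. The function name is hypothetical, and $\mathbf{t}$ is only recovered up to scale.

```python
import numpy as np

W = np.array([[0.0, -1.0, 0.0],
              [1.0,  0.0, 0.0],
              [0.0,  0.0, 1.0]])

def decompose_essential(E):
    """Return the four (R, t) candidates for the second camera, P2 = [R | t].

    R is U W V^T or U W^T V^T, and t = +/- u3 (last column of U, unit length).
    """
    U, _, Vt = np.linalg.svd(E)
    # Flip signs so both rotations are proper (det = +1). E is only defined
    # up to sign, so this does not change the epipolar geometry.
    if np.linalg.det(U) < 0:
        U = -U
    if np.linalg.det(Vt) < 0:
        Vt = -Vt
    R1 = U @ W @ Vt
    R2 = U @ W.T @ Vt
    t = U[:, 2]
    return [(R1, t), (R1, -t), (R2, t), (R2, -t)]
```

Only one of the four candidates places the scene in front of both cameras; picking it requires triangulating points.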

Step 12: Finally, extract 3D points as shown here: 3D points from image points
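A sketch of linear (DLT) triangulation with $P_1 = [I \mid 0]$ and $P_2 = [R \mid \mathbf{t}]$ on normalized points, plus the usual cheirality check that chooses, among candidate $(R, \mathbf{t})$ poses, the one putting the most points in front of both cameras. Function names are hypothetical, and because $\mathbf{t}$ from $E$ has unknown scale, the 3D points are only recovered up to that same scale.

```python
import numpy as np

def triangulate(P1, P2, xh1, xh2):
    """Linear (DLT) triangulation of matched normalized points (N, 3).

    x cross (P X) = 0 gives two independent rows per view; X is the right
    singular vector of the 4x4 system with the smallest singular value.
    Returns Euclidean points (N, 3).
    """
    X = []
    for a, b in zip(xh1, xh2):
        A = np.array([
            a[0] * P1[2] - a[2] * P1[0],
            a[1] * P1[2] - a[2] * P1[1],
            b[0] * P2[2] - b[2] * P2[0],
            b[1] * P2[2] - b[2] * P2[1],
        ])
        Xh = np.linalg.svd(A)[2][-1]
        X.append(Xh[:3] / Xh[3])
    return np.array(X)

def choose_pose(candidates, xh1, xh2):
    """Pick the (R, t) candidate that puts the most triangulated points in
    front of both cameras (positive depth). Returns ((R, t), count)."""
    P1 = np.hstack([np.eye(3), np.zeros((3, 1))])
    best, best_count = None, -1
    for R, t in candidates:
        P2 = np.hstack([R, t.reshape(3, 1)])
        X = triangulate(P1, P2, xh1, xh2)
        depth1 = X[:, 2]
        depth2 = (X @ R.T + t)[:, 2]
        count = int(np.sum((depth1 > 0) & (depth2 > 0)))
        if count > best_count:
            best, best_count = (R, t), count
    return best, best_count
```

With noisy matches a few points may land behind a camera even for the correct pose, which is why the check counts points instead of requiring all of them to pass.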