Kubernetes Bootstrap

This project facilitates bootstrapping a new Kubernetes cluster on AWS.

This is an opinionated project, based on the following tools:

- aws cli for interacting with AWS.
- Terraform is used for creating AWS resources (apart from those created by kops).
- kops is used for creating and managing the Kubernetes cluster.
- kubectl is the Kubernetes CLI, used for interacting with the cluster.
- helm is used for deploying applications.
- aws-iam-authenticator is used for authenticating to a Kubernetes cluster with AWS IAM credentials.

Everything is driven by GNU make. The targets named deploy-<X> are used to deploy each component; their corresponding clean-<X> target destroys that component.

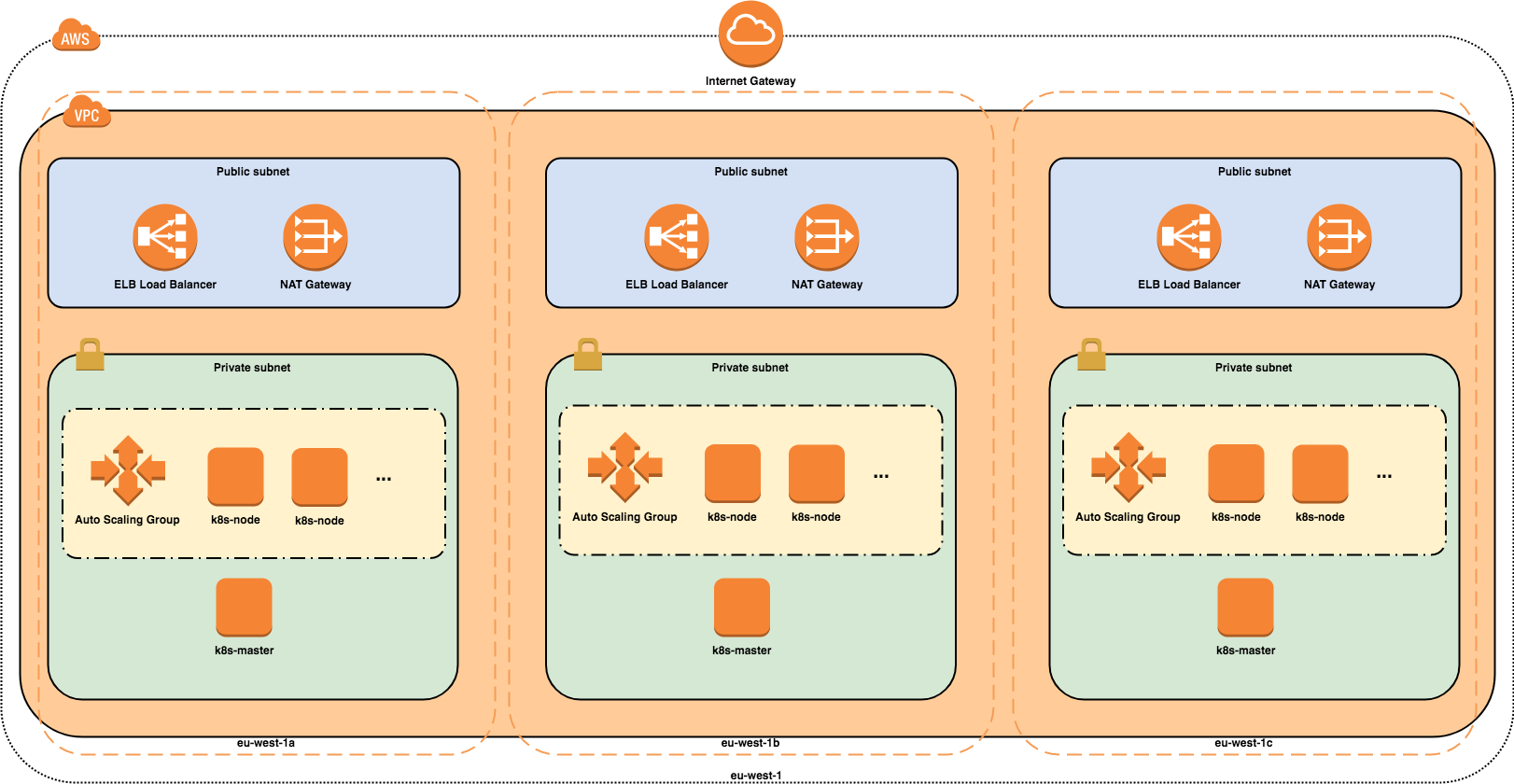

Cluster architecture

This project creates a high-availability Kubernetes cluster in which the master and worker nodes are spread across three availability zones (AZs) in a single region. A new Virtual Private Cloud (VPC) is created with both private and public subnets in each AZ. An Elastic Load Balancer (ELB) provides external access to the Kubernetes API server running on the master nodes. All instances running Kubernetes are created inside the private subnets, with outbound Internet access provided by NAT and Internet gateways. A second ELB is created by the Kubernetes Ingress Controller to route incoming traffic to applications running on the cluster. Each master node runs in its own Auto-Scaling Group (ASG) with a minimum and maximum size of 1, which ensures that the node is re-provisioned should the underlying instance go down. The worker nodes also run in an ASG, the size of which is configurable.

Pre-requisites

- Software on your local machine.

  docker, make, aws cli, terraform, kops, kubectl, helm and aws-iam-authenticator should be installed and available in the shell search path. Refer to their respective documentation for installation instructions specific to your OS.

- Configure AWS credentials. Refer to the AWS documentation. The Makefile checks that the correct AWS environment (region and account) is configured for the environment (see Customization).

- Configure IAM role. If you plan to authenticate kubectl with an IAM user (optional), you need to create an IAM role, named KubernetesAdmin, that users must assume to interact with the API server. This is done like so:

  # get your account ID
  ACCOUNT_ID=$(aws sts get-caller-identity --output text --query 'Account')

  # define a role trust policy that opens the role to users in your account (limited by IAM policy)
  POLICY=$(echo -n '{"Version":"2012-10-17","Statement":[{"Effect":"Allow","Principal":{"AWS":"arn:aws:iam::'; echo -n "$ACCOUNT_ID"; echo -n ':root"},"Action":"sts:AssumeRole","Condition":{}}]}')

  # create a role named KubernetesAdmin (will print the new role's ARN)
  aws iam create-role \
    --role-name KubernetesAdmin \
    --description "Kubernetes administrator role (for AWS IAM Authenticator for Kubernetes)." \
    --assume-role-policy-document "$POLICY" \
    --output text \
    --query 'Role.Arn'

- Configure your environment. Specify the AWS region, base FQDN and a name for your cluster. See Customization.
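Before running any target, it can help to confirm that every tool listed above is on the search path. The following is a minimal sketch, assuming a POSIX-compatible shell; check_prereqs is a hypothetical helper, not a target in this project's Makefile.

```shell
#!/usr/bin/env bash
# Report every required CLI from the pre-requisites that is missing from PATH.
# check_prereqs is a hypothetical helper, not a target in the Makefile.
check_prereqs() {
  local missing=0 tool
  for tool in docker make aws terraform kops kubectl helm aws-iam-authenticator; do
    # command -v succeeds only if the name resolves to something runnable
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "missing: $tool" >&2
      missing=$((missing + 1))
    fi
  done
  # The exit status is the number of missing tools; 0 means all were found.
  return "$missing"
}
```

For example, check_prereqs && make deploy-prereqs only starts deploying once every tool has been found; otherwise the missing names are printed on stderr.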

Before you deploy a cluster, you might want to apply IP restrictions to the external resources (to an office IP address, for instance). IP restrictions for the Kubernetes API server and the bastion host are configured in kops/values.yaml, and IP restrictions for any external-facing services you deploy are configured in helm/nginx-ingress/values.yaml.

Bring up a new Kubernetes cluster

The following steps will bring up a new Kubernetes cluster.

1. make deploy-prereqs

Use this make target to create the resources required by Terraform. This includes creating the Terraform state bucket on S3 and creating a DynamoDB table used for state locking, to prevent concurrent deploys.

2. make deploy-infra

This make target deploys the infrastructure required by kops in order to create a Kubernetes cluster: an S3 bucket in which to store the kops state, and a Route 53 hosted zone, delegated from a base zone, in which to register domain names used by the cluster.

3. make deploy-cluster

This target runs kops to create a new Kubernetes cluster. This process takes time and, as indicated by the kops output, you need to wait until the cluster is up-and-running before proceeding to deploy applications. Use the validate-cluster make target to determine whether a cluster is ready or not.

4. make validate-cluster

Simply runs kops validate cluster with the correct arguments for the state store.

5. make deploy-helm

Installs tiller (the helm agent) inside the Kubernetes cluster. Ensure that the cluster is ready (by using the validate-cluster target above) before carrying out this step.

6. make deploy-nginx-ingress

Creates an Ingress Controller, required for incoming traffic to reach applications running inside the cluster. We chose nginx as a simple Ingress Controller, but other Ingress Controllers are available. This step takes some time to complete in the background, as it creates a Classic Elastic Load Balancer on AWS.

7. make deploy-external-dns

Installs ExternalDNS, which takes care of creating DNS A records for the exposed Kubernetes services and ingresses. ExternalDNS is installed with the sync policy, which means that it will do a full synchronization of DNS records. If you don't want ExternalDNS to delete any DNS records when you delete services or ingresses in the Kubernetes cluster, change this value to upsert-only. See external-dns for details.

8. make deploy-app-echoserver

This make target packages a simple application into a helm chart and deploys that chart to the cluster. The echoserver application is a web server that displays the HTTP headers of the received request. The URL at which the application can be reached can be seen with kubectl, via kubectl get ingresses. Note, though, that the wildcard DNS entry created in the previous step must have propagated through DNS to the resolver on your local network before the application is reachable.
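Steps 3 to 5 involve waiting for the cluster to become valid before continuing. The following is a minimal sketch of a retry loop that can wrap make validate-cluster, assuming only a POSIX shell; wait_until is a hypothetical helper, not a target of this project's Makefile.

```shell
#!/usr/bin/env bash
# Retry a command until it succeeds or the attempt budget runs out.
# wait_until is a hypothetical helper, not part of the Makefile.
#   $1    maximum number of attempts
#   $2    seconds to sleep between attempts
#   $3... the command to run, e.g. make validate-cluster
wait_until() {
  local attempts=$1 delay=$2 i=1
  shift 2
  while [ "$i" -le "$attempts" ]; do
    if "$@"; then
      return 0
    fi
    # Don't sleep after the final failed attempt.
    if [ "$i" -lt "$attempts" ]; then
      echo "attempt $i/$attempts failed; retrying in ${delay}s" >&2
      sleep "$delay"
    fi
    i=$((i + 1))
  done
  return 1
}
```

For example, make deploy-cluster && wait_until 30 60 make validate-cluster && make deploy-helm keeps validating for roughly half an hour. This relies on kops validate cluster exiting with a non-zero status while the cluster is not yet healthy.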

Additional targets

- make deploy-authenticator

  Packages and installs the AWS IAM Authenticator. Ensure that Helm's tiller pod is running in the cluster before running this step. This step can be re-run whenever you change the configmap.yaml containing the IAM user to Kubernetes user mapping.

- make deploy-kube2iam

  Deploys kube2iam, which enables the use of individual IAM roles per pod. This is recommended if a pod requires access to AWS resources. Additional configuration (not yet in this project) is required to make ExternalDNS and the cluster autoscaler run with kube2iam.

- make deploy-autoscaler

  Deploys the cluster autoscaler, which automatically adjusts the size of the Kubernetes cluster (within the limits specified in the cluster spec) based on resource needs. A good explanation of how the autoscaler works can be found in the cluster autoscaler FAQ.

- make deploy-metrics-server

  Deploys the metrics server, which enables the kubectl top command (shows pod metrics).

- make deploy-prometheus

  Deploys Prometheus, which stores metrics that can be visualized with Grafana. The default configuration has an ingress enabled; this can be modified in the values file.

- make deploy-grafana

  Deploys Grafana, which is used for visualizing platform metrics. The default configuration has no ingress enabled; this can be modified in the values file.
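The configmap.yaml used by deploy-authenticator maps IAM identities to Kubernetes users. The sketch below prints such a mapping for the KubernetesAdmin role from the pre-requisites. It assumes the file follows the server config layout documented by aws-iam-authenticator (mapRoles entries with roleARN, username and groups). The username kubernetes-admin, the system:masters group and the function name render_role_mapping are illustrative assumptions, so compare the output with the project's own configmap.yaml before using it.

```shell
#!/usr/bin/env bash
# Print an aws-iam-authenticator role mapping for the KubernetesAdmin role.
# Assumption: configmap.yaml uses the authenticator's server config layout;
# render_role_mapping, the username and the group are illustrative only.
render_role_mapping() {
  local account_id=$1
  # AWS account IDs are 12 digits; refuse anything else.
  if ! printf '%s' "$account_id" | grep -Eq '^[0-9]{12}$'; then
    echo "expected a 12-digit AWS account ID, got '$account_id'" >&2
    return 1
  fi
  cat <<EOF
server:
  mapRoles:
  - roleARN: arn:aws:iam::${account_id}:role/KubernetesAdmin
    username: kubernetes-admin
    groups:
    - system:masters
EOF
}
```

For example: render_role_mapping "$(aws sts get-caller-identity --output text --query Account)".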

Updating / upgrading the cluster

In kops terminology, updating refers to changing cluster parameters such as the number or type of virtual machines used for the instance groups; upgrading refers to upgrading the version of Kubernetes running on the cluster. Both of these procedures are, however, achieved in the same way.

When upgrading the Kubernetes version, it's also necessary to check whether the cluster-autoscaler needs to be updated, since each cluster-autoscaler release targets a specific Kubernetes version; see the version compatibility table in the cluster-autoscaler documentation.

When updating or upgrading the cluster, take note of deployments that use a PodDisruptionBudget with minAvailable set to 1 while only one replica is running; nginx-ingress is such a deployment. During the upgrade, Kubernetes is not able to drain nodes on which such Pods are running, and the upgrade will hang until it times out. The solution is to use kubectl to manually delete the Pod, causing it to be re-scheduled to an alternative, available node.
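To find the budgets that can block a drain before starting an update, the kubectl output can be filtered. This is a minimal sketch: the function name pdbs_min_available_one is hypothetical, and it expects the three columns produced by the custom-columns command shown in its comment.

```shell
#!/usr/bin/env bash
# Print namespace/name of every PodDisruptionBudget whose minAvailable is 1.
# Expects on stdin the output of:
#   kubectl get pdb --all-namespaces --no-headers \
#     -o custom-columns=NS:.metadata.namespace,NAME:.metadata.name,MIN:.spec.minAvailable
# Budgets without minAvailable show <none> in the third column and are skipped.
pdbs_min_available_one() {
  awk '$3 == "1" { print $1 "/" $2 }'
}
```

Once a blocking budget is found, its pod can be located with kubectl get pods -n <namespace> -o wide and deleted with kubectl delete pod <pod> -n <namespace>, as described above.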

1. Update the values file

2. make update-cluster

Tear-down

1. make clean-cluster

Destroys the Kubernetes cluster along with helm and any installed charts or applications.

2. make clean-infra

Removes the infrastructure required by kops.

3. make clean-prereqs

Removes the infrastructure required by Terraform.

4. Remove local terraform state file

Remove the local Terraform state file (terraform/infra/.terraform/terraform.tfstate). Otherwise, creating a new cluster with the same parameters may fail due to stale local state.
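The tear-down steps can be scripted so that they run in order, stop at the first failure and only remove the local state file once everything else has succeeded. This is a minimal sketch, meant to be run from the repository root. teardown is a hypothetical helper, and MAKE (defaulting to make) is parameterized only so the sequence can be exercised with a stub.

```shell
#!/usr/bin/env bash
# Run the clean-* targets in reverse order of deployment, stopping at the
# first failure, then delete the stale local Terraform state file.
# teardown is a hypothetical helper; run it from the repository root.
teardown() {
  local make_cmd=${MAKE:-make} target
  for target in clean-cluster clean-infra clean-prereqs; do
    # make_cmd is intentionally unquoted so it may hold a command and flags.
    $make_cmd "$target" || { echo "tear-down stopped at $target" >&2; return 1; }
  done
  rm -f terraform/infra/.terraform/terraform.tfstate
}
```

ENVIRONMENT and the other Makefile parameters are passed through unchanged, so the same overrides used for deployment apply here.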

Bastion

If you need to SSH into one of the master or node instances, you'll need to launch a bastion host first. The Kubernetes cluster template defines a bastion instance group; however, we set the instance count to 0, so it's not enabled by default.

Do the following to launch a bastion host:

# edit the kops/values.yaml file, set bastionCount value to 1

$ make update-cluster

Once the bastion host is running, then run the following commands to SSH into the bastion host with a forwarded SSH agent.

# if you don't have the private key on your machine, copy it from the kops state bucket

$ aws s3 cp s3://<kops-state-bucket>/ssh-key/<ssh-key> .

# verify you have an SSH agent running.

$ ssh-add -l

# if you need to add the private key to your agent

$ ssh-add path/to/private/key

# now you can SSH into the bastion

$ ssh -A admin@bastion.<cluster-fqdn>

Once you have SSHed into the bastion host, you'll be able to SSH into any of the cluster's master or node instances by using their private IP address.
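As an alternative to forwarding the agent with -A, OpenSSH 7.3 and later can hop through the bastion in a single step with -J (ProxyJump). The connection to the target is tunnelled end to end, so the private key never has to be exposed to the bastion. This is a minimal sketch, assuming the login user is admin on the nodes as well as on the bastion. ssh_via_bastion is a hypothetical helper, and SSH can be overridden (for example SSH=echo) to print the command instead of running it.

```shell
#!/usr/bin/env bash
# SSH to a master or node by private IP, jumping through the bastion host.
# Assumption: the login user is "admin" on both the bastion and the target.
#   $1    cluster FQDN (the bastion is bastion.<cluster-fqdn>)
#   $2    private IP address of the target instance
#   $3... optional remote command
ssh_via_bastion() {
  local cluster_fqdn=$1 private_ip=$2
  shift 2
  ${SSH:-ssh} -J "admin@bastion.${cluster_fqdn}" "admin@${private_ip}" "$@"
}
```

For example: ssh_via_bastion <cluster-fqdn> <private-ip>.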

More details can be found here: https://github.com/kubernetes/kops/blob/master/docs/bastion.md

Metrics

Memory and CPU utilization metrics for both nodes and pods are available via Kubernetes' metrics pipeline.

This requires the Kubernetes Metrics Server to be deployed in the cluster, which is done via the make target deploy-metrics-server. It is then possible to see information about either pods or nodes with kubectl top pods or kubectl top nodes respectively.
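The output of kubectl top nodes can be post-processed, for instance to spot nodes running hot before the autoscaler reacts. This is a minimal sketch: busy_nodes is a hypothetical helper, and it assumes kubectl top's default column order (NAME, CPU(cores), CPU%, MEMORY(bytes), MEMORY%) with a header line.

```shell
#!/usr/bin/env bash
# Print the names of nodes whose CPU% is above a threshold (default 80).
# Reads the table printed by `kubectl top nodes` on stdin; skips the header.
busy_nodes() {
  local threshold=${1:-80}
  awk -v t="$threshold" 'NR > 1 {
    cpu = $3
    sub(/%$/, "", cpu)          # "95%" -> "95"
    if (cpu + 0 > t + 0) print $1
  }'
}
```

For example: kubectl top nodes | busy_nodes 80.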

Customization

The Makefile is parameterized. The following parameters can be customized either by overriding their value on the make command line or by exporting them in the shell. Using direnv can make this quite frictionless.

- AWS_REGION

  The AWS region in which common resources, such as the Terraform and kops state storage buckets, are created.

- BASE_FQDN

  The domain name under which the cluster resources will be created. A hosted zone for this domain must already exist in Route 53, in the same account.

- ENVIRONMENT

  A name for the environment, such as lab, dev, test, prod-eu, stage-sony-us or even your name, e.g. john-does-cluster, for a personal cluster.

Consult the Makefile for the default values.

The ENVIRONMENT parameter is used to separate clusters within the same account.

The Makefile validates known ENVIRONMENT values against a particular AWS account and region. This is to protect against accidental deployment of infrastructure to the wrong account or region. Experience has shown that it is too easy to have production AWS credentials (or profile) activated in the shell environment and to deploy the development infrastructure, or vice versa.

To enable validation, put known environments and associated account ids and regions in a file called environments.mk as in the example below.

# Put two entries for each environment in this file using the convention

# <myenv>_aws_account=nnnn and <myenv>_aws_region=region

lab_aws_account=123456789

lab_aws_region=eu-west-2

You can also override ordinary environment variables such as ENVIRONMENT in this file to set your active environment.
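The validation described above can be sketched in shell to show the idea. This is not the Makefile's implementation. It assumes simple key=value lines in environments.mk, as in the example above. The names lookup_mk_var and check_environment are hypothetical. Environments that are not listed pass with a warning, mirroring the statement that only known values are validated.

```shell
#!/usr/bin/env bash
# Look up "key=value" in a make include file; prints the value or nothing.
lookup_mk_var() {
  awk -F '[ \t]*=[ \t]*' -v key="$1" '$1 == key {
    v = $2; sub(/[ \t\r]+$/, "", v); print v; exit
  }' "$2"
}

# Compare the account and region in use with <env>_aws_account/_aws_region.
#   $1 environment name, $2 actual account ID, $3 actual region,
#   $4 path to environments.mk (default: ./environments.mk)
check_environment() {
  local env=$1 account=$2 region=$3 file=${4:-environments.mk}
  local want_account want_region
  want_account=$(lookup_mk_var "${env}_aws_account" "$file")
  want_region=$(lookup_mk_var "${env}_aws_region" "$file")
  if [ -z "$want_account" ] && [ -z "$want_region" ]; then
    echo "warning: '$env' is not listed in $file; not validated" >&2
    return 0
  fi
  if [ "$account" != "$want_account" ] || [ "$region" != "$want_region" ]; then
    echo "'$env' expects account $want_account in $want_region," \
      "but the shell is using $account in $region" >&2
    return 1
  fi
}
```

For example: check_environment "$ENVIRONMENT" "$(aws sts get-caller-identity --output text --query Account)" "$AWS_REGION".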

As an example, to create a 'lab' cluster for individual experimentation the ENVIRONMENT parameter may be overridden when calling make:

$ make deploy-prereqs ENVIRONMENT=lab

or simply run make deploy-prereqs if you have overridden ENVIRONMENT in the environments.mk file. Alternatively, if direnv is installed, export the parameters from an .envrc file, as below:

$ cat .envrc

export AWS_PROFILE=lab

export AWS_REGION=eu-west-2

export BASE_FQDN=lab.sonymobile.com

export ENVIRONMENT=lab

$ direnv allow .

direnv: loading .envrc

direnv: export +AWS_PROFILE +AWS_REGION +BASE_FQDN +ENVIRONMENT

The individual values of the Kubernetes cluster spec used by kops can be customized for a particular installation through the file kops/values-${ENVIRONMENT}.yaml. For example:

$ cat kops/values-lab.yaml
master:
  machineType: t2.medium
node:
  machineType: m5.large
  useSpotMarket: true
  maxPrice: 0.50

Using the same principle, you can customize values of any helm chart by creating a values-${ENVIRONMENT}.yaml file in that chart. For example (to try out a specific nginx-ingress image in the lab setup):

$ cat helm/nginx-ingress/values-lab.yaml
controller:
  image:
    tag: "0.23.0"
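Layering an environment file over a chart's values.yaml maps naturally onto helm's repeated -f (--values) option, where the right-most file takes precedence on conflicting keys and maps are merged, so values-lab.yaml above only replaces controller.image.tag. This is a minimal sketch, assuming both files are passed this way. helm_values_args is hypothetical; consult the Makefile for what it actually does.

```shell
#!/usr/bin/env bash
# Print the -f arguments for a chart: values.yaml first, then
# values-<env>.yaml if it exists, so the environment file wins on conflicts.
#   $1 chart directory, $2 environment name
helm_values_args() {
  local chart_dir=$1 env=$2
  local args="-f $chart_dir/values.yaml"
  if [ -f "$chart_dir/values-$env.yaml" ]; then
    args="$args -f $chart_dir/values-$env.yaml"
  fi
  printf '%s\n' "$args"
}
```

For example: helm upgrade --install nginx-ingress helm/nginx-ingress $(helm_values_args helm/nginx-ingress "$ENVIRONMENT"). The unquoted substitution relies on word splitting, so it assumes chart paths without spaces.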

Misc utilities

To set the environment variables needed for commands such as kops validate cluster to work, run:

$ $(make vars)
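With $(make vars), the shell word-splits the output of make vars and runs it as one command, which breaks as soon as a value contains spaces or quotes. A slightly more robust sketch uses eval, assuming make vars prints one shell assignment per line (for example export KOPS_STATE_STORE=...). load_make_vars is hypothetical. Because eval executes whatever the Makefile prints, only use it with a Makefile you trust.

```shell
#!/usr/bin/env bash
# Load the shell assignments printed by `make vars` into the current shell.
# Assumption: make vars prints lines such as: export KOPS_STATE_STORE=s3://...
load_make_vars() {
  local output
  # -s keeps make from echoing recipe lines into the captured output.
  output=$(${MAKE:-make} -s vars) || return 1
  eval "$output"
}
```

Run it in the interactive shell (after sourcing the function) so the exported variables persist for later kops and kubectl commands.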

If you would like to manage a cluster that was not originally set up on your machine, you can use make export-kubecfg to create a kube context that lets you use kubectl against that cluster. Be sure to set the environment variables to match the target environment, i.e. ENVIRONMENT and BASE_FQDN.

Final notes

While this project brings up a high-availability Kubernetes cluster, it is not entirely production ready: it needs some hardening here and there. For instance, Tiller is deployed with an insecure 'allow unauthenticated users' policy. There are many public resources describing the steps to take to make a Kubernetes cluster production ready.

Authors

License

This project is licensed under the Apache License, Version 2.0 - see the LICENSE file for details