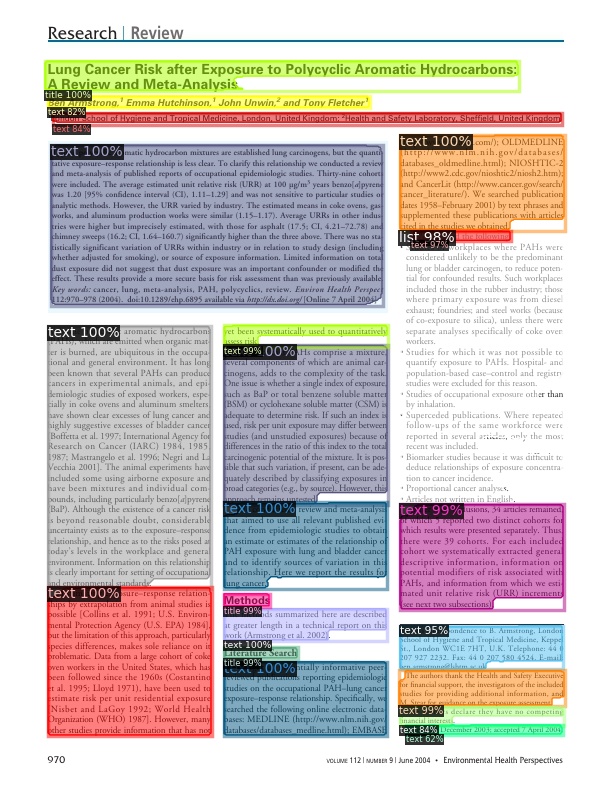

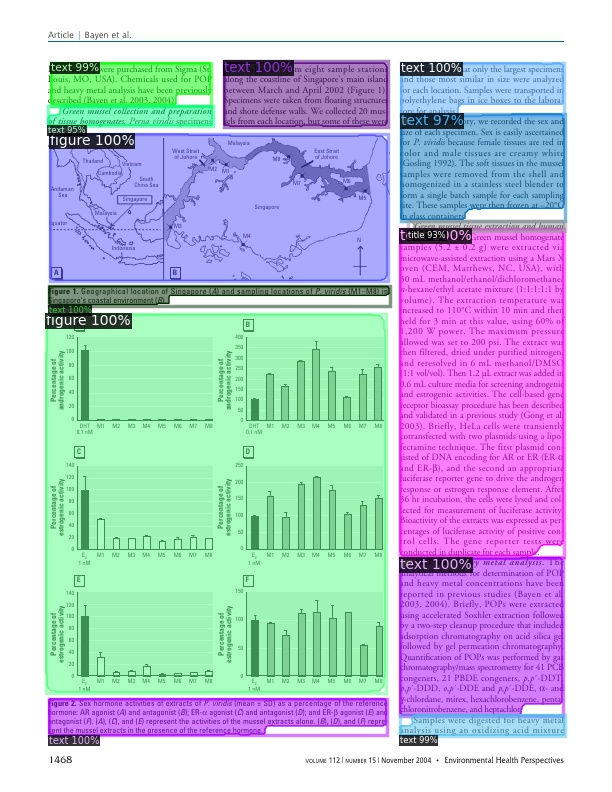

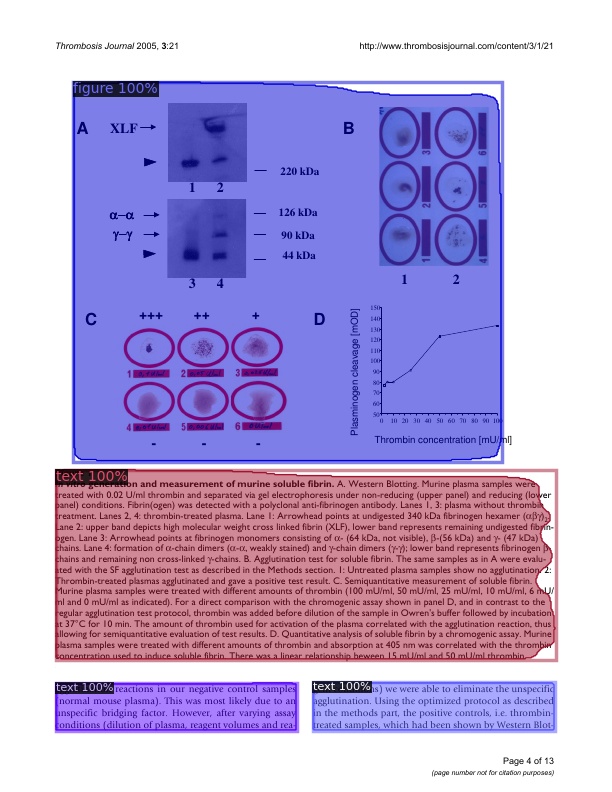

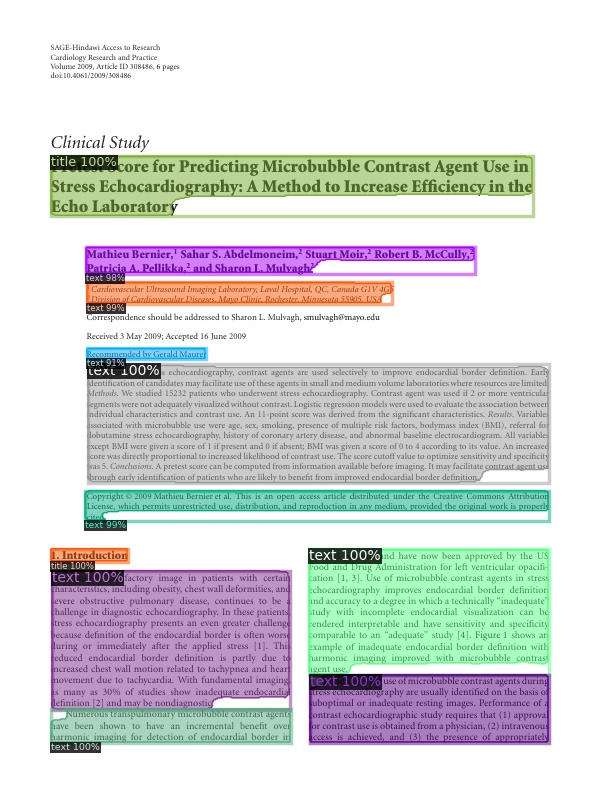

Detectron2 trained on PubLayNet dataset

This repo contains the training configurations, code, and trained models for the PubLayNet dataset, built on the Detectron2 implementation.

PubLayNet is a very large dataset for document layout analysis (document segmentation). It can be used to train semantic segmentation and object detection models.
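PubLayNet ships COCO-style JSON annotations with five layout categories. The sketch below shows how those categories become the `thing_classes` list used later in this README: sort the category ids and map them to contiguous 0-based class indices, which is what Detectron2's COCO loader does with the ids it reads. The category list is written out by hand and assumes PubLayNet's ids 1 to 5 in the order text, title, list, table, figure; `build_category_mapping` is a hypothetical helper, not part of this repo or of Detectron2.

```python
from typing import Dict, List, Tuple

# Assumption: PubLayNet's "categories" entries use ids 1-5 in this order.
PUBLAYNET_CATEGORIES = [
    {"id": 1, "name": "text"},
    {"id": 2, "name": "title"},
    {"id": 3, "name": "list"},
    {"id": 4, "name": "table"},
    {"id": 5, "name": "figure"},
]


def build_category_mapping(categories: List[dict]) -> Tuple[List[str], Dict[int, int]]:
    """Return (thing_classes, id_map), where id_map sends each dataset
    category id to a contiguous 0-based class index (hypothetical helper)."""
    # Sort by id so the class order is deterministic, mirroring how
    # Detectron2's COCO loader orders the category ids it reads.
    ordered = sorted(categories, key=lambda c: c["id"])
    thing_classes = [c["name"] for c in ordered]
    id_map = {c["id"]: idx for idx, c in enumerate(ordered)}
    return thing_classes, id_map


if __name__ == "__main__":
    classes, id_map = build_category_mapping(PUBLAYNET_CATEGORIES)
    print(classes)  # ['text', 'title', 'list', 'table', 'figure']
    print(id_map)   # {1: 0, 2: 1, 3: 2, 4: 3, 5: 4}
```

The resulting order matches the `thing_classes` list registered for `dla_val` in the demo instructions, which is why label names line up with predicted class indices.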

NOTE

- Models are trained on a portion of the dataset (train-0.zip, train-1.zip, train-2.zip, train-3.zip)

- Trained on a total of 191,832 images

- Models are evaluated on dev.zip (~11,000 images)

- A backbone pretrained on the COCO dataset is used, but the model itself is trained from scratch on the PubLayNet dataset

- Trained using an NVIDIA GTX 1080 Ti (11 GB)

- Trained on Windows 10

- Install the latest Detectron2 from https://github.com/facebookresearch/detectron2

- Copy the config files (`DLA_*`) from this repo to the installed Detectron2

- Download the relevant model from the Benchmarking section. If you downloaded the model using `wget`, refer to hpanwar08#22

- Add the code below to `main` in `demo/demo.py` to get confidence scores along with label names

from detectron2.data import MetadataCatalog

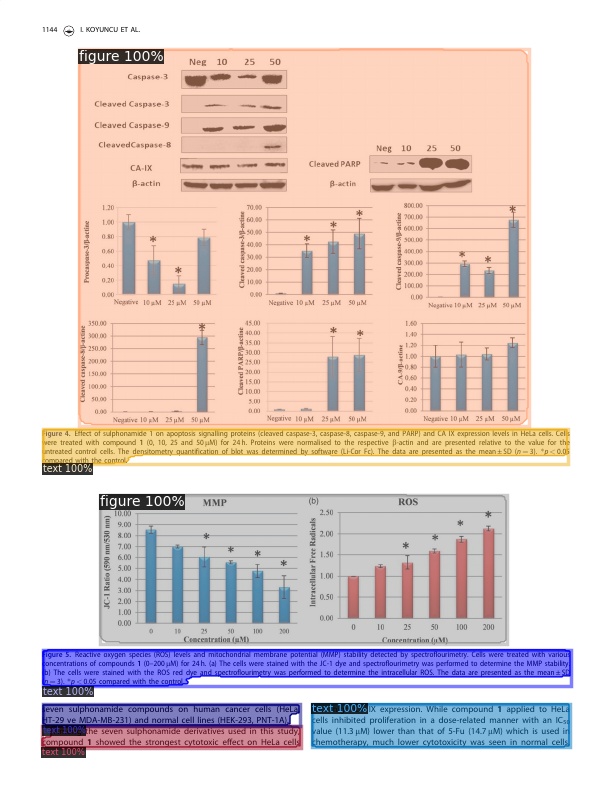

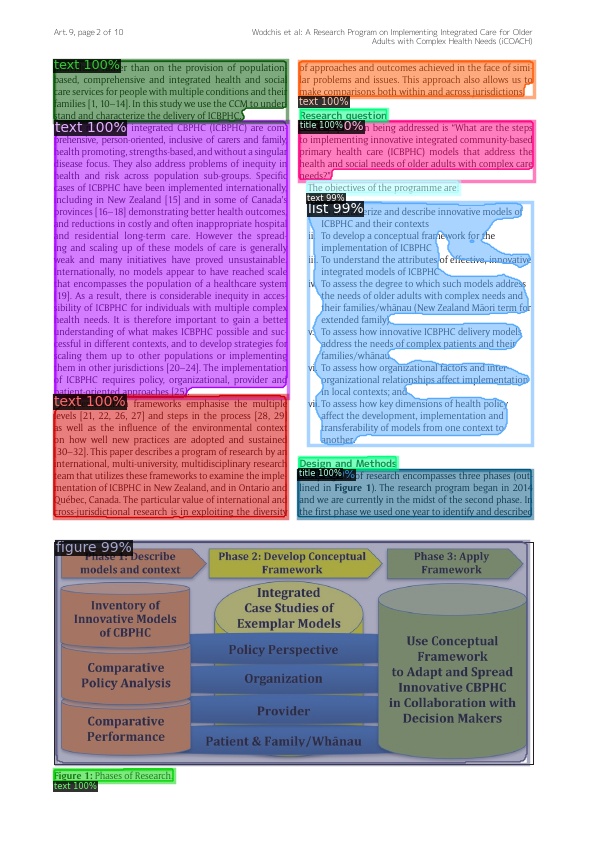

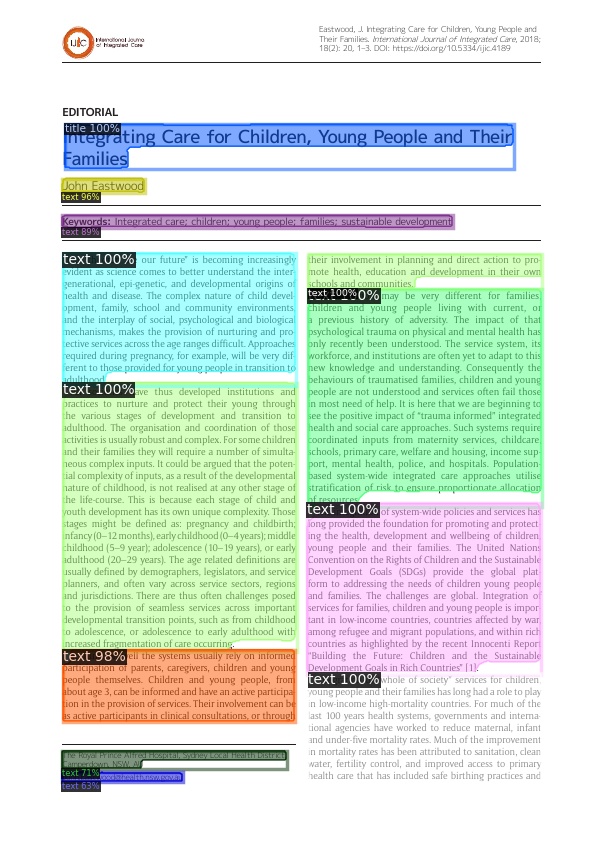

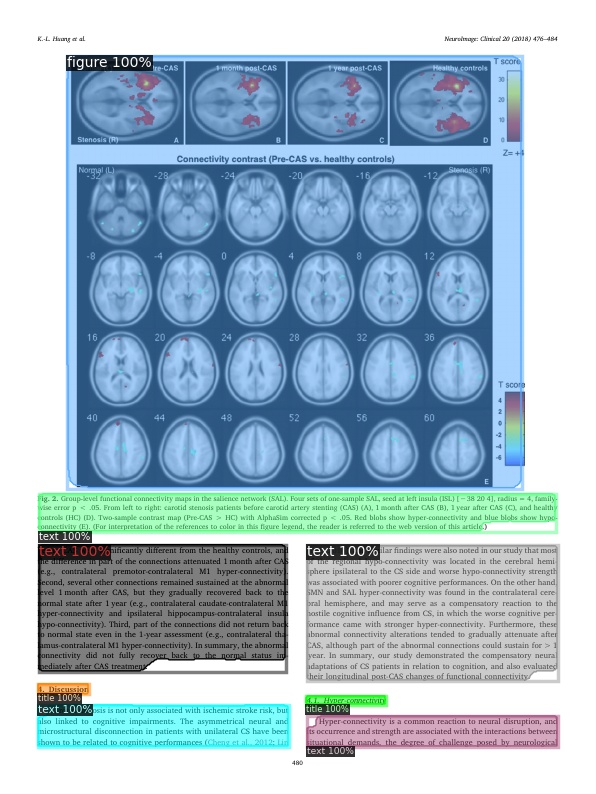

MetadataCatalog.get("dla_val").thing_classes = ['text', 'title', 'list', 'table', 'figure']

- Then run the command below to predict on a single image (change the config file to match the model)

```bash
python demo/demo.py --config-file configs/DLA_mask_rcnn_X_101_32x8d_FPN_3x.yaml --input "<path to image.jpg>" --output <path to save the predicted image> --confidence-threshold 0.5 --opts MODEL.WEIGHTS <path to model_final_trimmed.pth> MODEL.DEVICE cpu
```

- For local Docker deployment for testing, use Docker DLA
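The demo command can also be reproduced from Python. This is a minimal sketch, assuming Detectron2 and OpenCV are installed and that the copied `DLA_*` config already defines the five PubLayNet classes. It uses Detectron2's standard `get_cfg` and `DefaultPredictor` APIs. `predict_layout` and `format_labels` are hypothetical helper names; `format_labels` approximates how the demo's visualizer turns class indices and scores into captions such as `text 98%` once `thing_classes` is registered.

```python
from typing import List, Sequence

# Same order as the thing_classes registered for "dla_val".
CLASS_NAMES = ["text", "title", "list", "table", "figure"]


def format_labels(classes: Sequence[int], scores: Sequence[float],
                  class_names: Sequence[str] = CLASS_NAMES) -> List[str]:
    """Turn predicted class indices and scores into 'name NN%' captions."""
    return [f"{class_names[c]} {s * 100:.0f}%" for c, s in zip(classes, scores)]


def predict_layout(config_file: str, weights: str, image_path: str,
                   threshold: float = 0.5, device: str = "cpu") -> List[str]:
    """Run one image through a trained model (requires detectron2 and OpenCV)."""
    # Imported lazily so format_labels stays usable without detectron2.
    import cv2
    from detectron2.config import get_cfg
    from detectron2.engine import DefaultPredictor

    cfg = get_cfg()
    cfg.merge_from_file(config_file)      # e.g. configs/DLA_mask_rcnn_R_50_FPN_3x.yaml
    cfg.MODEL.WEIGHTS = weights           # path to model_final_trimmed.pth
    cfg.MODEL.DEVICE = device
    # Plays the role of --confidence-threshold for Mask R-CNN models.
    cfg.MODEL.ROI_HEADS.SCORE_THRESH_TEST = threshold
    predictor = DefaultPredictor(cfg)

    image = cv2.imread(image_path)        # BGR, the default input format
    if image is None:
        raise FileNotFoundError(image_path)
    instances = predictor(image)["instances"].to("cpu")
    return format_labels(instances.pred_classes.tolist(), instances.scores.tolist())
```

For example, `format_labels([0, 3], [0.981, 0.5])` returns `['text 98%', 'table 50%']`. Keeping the Detectron2 imports inside `predict_layout` lets the formatting logic be checked on a machine without a Detectron2 install.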

| Architecture | No. images | AP | AP50 | AP75 | AP Small | AP Medium | AP Large | Model size full | Model size trimmed |
|---|---|---|---|---|---|---|---|---|---|
| MaskRCNN Resnext101_32x8d FPN 3X | 191,832 | 90.574 | 97.704 | 95.555 | 39.904 | 76.350 | 95.165 | 816M | 410M |
| MaskRCNN Resnet101 FPN 3X | 191,832 | 90.335 | 96.900 | 94.609 | 36.588 | 73.672 | 94.533 | 480M | 240M |
| MaskRCNN Resnet50 FPN 3X | 191,832 | 87.219 | 96.949 | 94.385 | 38.164 | 72.292 | 94.081 | 168M | |
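The AP columns follow the COCO evaluation protocol that Detectron2 reports: AP averages over IoU thresholds from 0.50 to 0.95 in steps of 0.05, AP50 and AP75 use a single IoU threshold, and AP Small/Medium/Large restrict evaluation to objects whose annotated area is below 32², between 32² and 96², or above 96² pixels. The sketch below shows only those two building blocks, box IoU and the area bucket, for boxes in `(x0, y0, x1, y1)` form. It is not the full COCO AP computation, it uses box area as a stand-in for the annotated area, and the function names are hypothetical.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x0, y0, x1, y1) in pixels


def box_iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix0, iy0 = max(a[0], b[0]), max(a[1], b[1])
    ix1, iy1 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix1 - ix0) * max(0.0, iy1 - iy0)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def coco_size_bucket(area: float) -> str:
    """Area ranges behind AP Small / AP Medium / AP Large.
    Boundary values are an edge case; this sketch puts exactly 32**2 in medium."""
    if area < 32 ** 2:
        return "small"
    if area < 96 ** 2:
        return "medium"
    return "large"


# IoU thresholds averaged for the AP column: 0.50, 0.55, ..., 0.95
IOU_THRESHOLDS = [round(0.5 + 0.05 * i, 2) for i in range(10)]
```

For example, a prediction `(0, 0, 10, 8)` against a ground-truth box `(0, 0, 10, 10)` has IoU 0.8, so it counts as a match at both AP50 and AP75, while `(5, 0, 15, 10)` has IoU 1/3 and matches at neither. The low AP Small scores in the table reflect how few pixels small layout elements give the model to work with.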

| Architecture | Config file | Training Script |
|---|---|---|
| MaskRCNN Resnext101_32x8d FPN 3X | configs/DLA_mask_rcnn_X_101_32x8d_FPN_3x.yaml | ./tools/train_net_dla.py |
| MaskRCNN Resnet101 FPN 3X | configs/DLA_mask_rcnn_R_101_FPN_3x.yaml | ./tools/train_net_dla.py |
| MaskRCNN Resnet50 FPN 3X | configs/DLA_mask_rcnn_R_50_FPN_3x.yaml | ./tools/train_net_dla.py |
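Training with these scripts writes full checkpoints, while the benchmark table above also lists trimmed files that are about half the size (816M to 410M, 480M to 240M). A plausible explanation, which this README does not confirm, is that trimming drops the optimizer and scheduler state and keeps only the model weights: SGD with momentum stores one buffer per parameter, which roughly doubles the file. The sketch below assumes Detectron2's checkpoint layout, a dict with a `"model"` entry next to keys such as `"optimizer"`, `"scheduler"`, and `"iteration"`; `trim_checkpoint` and `trim_checkpoint_file` are hypothetical names, not the author's tooling.

```python
from typing import Any, Dict

# Assumption: only the weights are needed for inference.
KEEP_KEYS = ("model",)


def trim_checkpoint(checkpoint: Dict[str, Any]) -> Dict[str, Any]:
    """Return a copy of a checkpoint dict without optimizer/scheduler state."""
    if "model" not in checkpoint:
        raise KeyError("checkpoint has no 'model' entry")
    return {k: v for k, v in checkpoint.items() if k in KEEP_KEYS}


def trim_checkpoint_file(src: str, dst: str) -> None:
    """Load a .pth checkpoint, trim it, and save the result (requires PyTorch)."""
    import torch  # imported lazily so trim_checkpoint works without PyTorch

    # On recent PyTorch releases you may need weights_only=False here if the
    # checkpoint stores non-tensor objects in its optimizer/scheduler state.
    checkpoint = torch.load(src, map_location="cpu")
    torch.save(trim_checkpoint(checkpoint), dst)
```

Usage would look like `trim_checkpoint_file("output/model_final.pth", "output/model_final_trimmed.pth")`, where the paths are placeholders. A trimmed file is enough for `MODEL.WEIGHTS` at inference time but cannot be used to resume training, since the optimizer state is gone.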


- Train MaskRCNN resnet50


Detectron2 is Facebook AI Research's next-generation software system that implements state-of-the-art object detection algorithms. It is a ground-up rewrite of the previous version, Detectron, and it originates from maskrcnn-benchmark.

- It is powered by the PyTorch deep learning framework.

- It includes more features, such as panoptic segmentation, DensePose, Cascade R-CNN, rotated bounding boxes, etc.

- It can be used as a library to support different projects built on top of it. We'll open-source more research projects in this way.

- It trains much faster.

See our blog post for more demos and to learn about detectron2.

For installation, see INSTALL.md.

To get started, see GETTING_STARTED.md or the Colab Notebook.

Learn more at our documentation, and see projects/ for some projects built on top of detectron2.

We provide a large set of baseline results and trained models available for download in the Detectron2 Model Zoo.

Detectron2 is released under the Apache 2.0 license.

If you use Detectron2 in your research or wish to refer to the baseline results published in the Model Zoo, please use the following BibTeX entry.

```
@misc{wu2019detectron2,
  author =       {Yuxin Wu and Alexander Kirillov and Francisco Massa and
                  Wan-Yen Lo and Ross Girshick},
  title =        {Detectron2},
  howpublished = {\url{https://github.com/facebookresearch/detectron2}},
  year =         {2019}
}
```