Existing GAN inversion methods fail to provide latent codes for reliable reconstruction and flexible editing simultaneously. This paper presents a transformer-based image inversion and editing model for pretrained StyleGAN which is not only with less distortions, but also of high quality and flexibility for editing. The proposed model employs a CNN encoder to provide multi-scale image features as keys and values. Meanwhile it regards the style code to be determined for different layers of the generator as queries. It first initializes query tokens as learnable parameters and maps them into

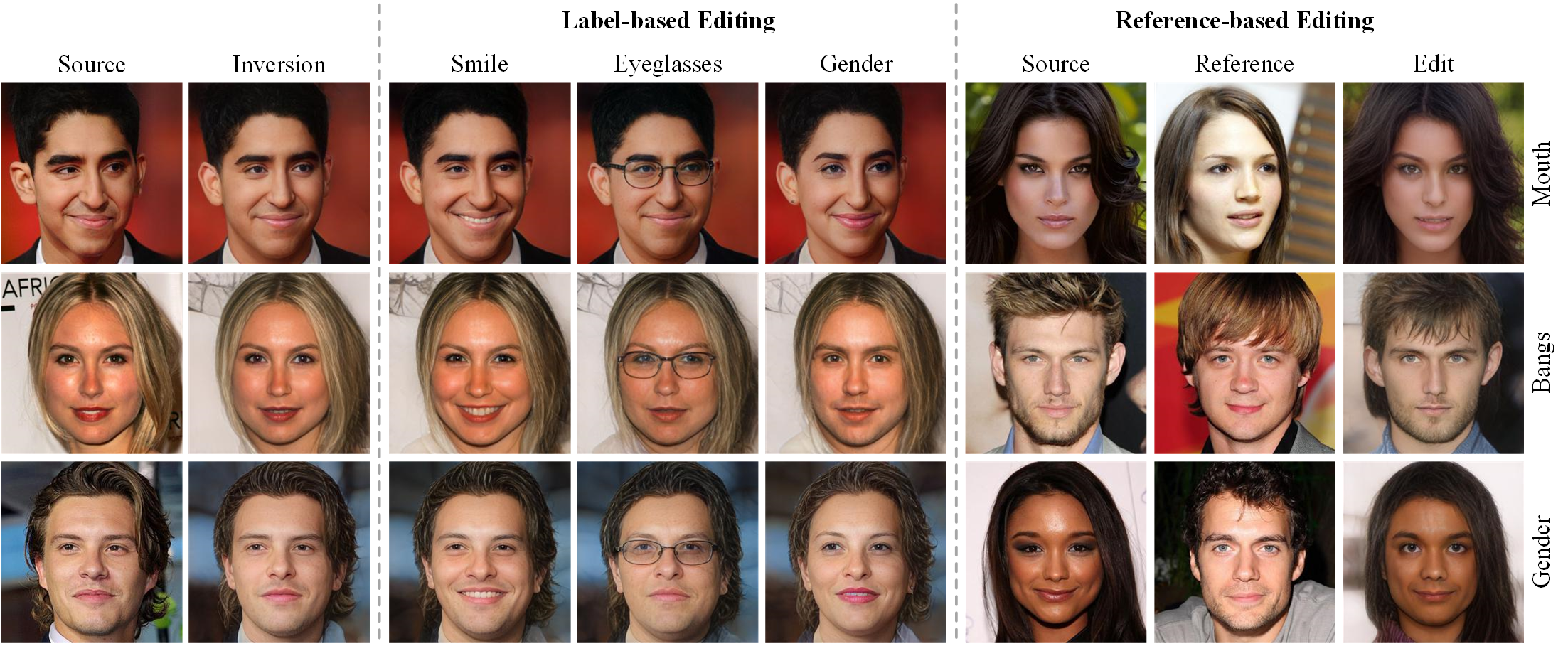

$W^+$ space. Then the multi-stage alternate self- and cross-attention are utilized, updating queries with the purpose of inverting the input by the generator. Moreover, based on the inverted code, we investigate the reference- and label-based attribute editing through a pretrained latent classifier, and achieve flexible image-to-image translation with high quality results. Extensive experiments are carried out, showing better performances on both inversion and editing tasks within StyleGAN.
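The inversion pipeline described above can be sketched in plain Python. This is a minimal, hypothetical illustration, not the repository's implementation. The helper names (`attention`, `style_stage`, `invert`) are ours, and the queries and multi-scale features are random stand-ins. We assume one stage per feature scale, ordered coarse to fine purely for illustration. The learned projection matrices, normalization layers, feed-forward blocks and the initial mapping of the queries into $W^+$ are all omitted.

```python
import math
import random

def attention(queries, keys, values):
    """Scaled dot-product attention softmax(Q K^T / sqrt(d)) V on lists of row vectors."""
    d = len(queries[0])
    out = []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in keys]
        m = max(scores)  # subtract the max for numerical stability
        exps = [math.exp(s - m) for s in scores]
        total = sum(exps)
        weights = [e / total for e in exps]
        out.append([sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))])
    return out

def add(a, b):
    """Element-wise residual connection."""
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]

def style_stage(queries, features):
    """One (hypothetical) stage: self-attention among style queries, then
    cross-attention where encoder features act as keys and values."""
    queries = add(queries, attention(queries, queries, queries))
    queries = add(queries, attention(queries, features, features))
    return queries

def invert(queries, feature_pyramid):
    """Run one stage per feature scale; the result holds one style vector per generator layer."""
    for features in feature_pyramid:
        queries = style_stage(queries, features)
    return queries

random.seed(0)
DIM = 8          # toy width; StyleGAN2 style vectors are 512-dimensional
NUM_STYLES = 18  # one query per W+ entry of a 1024px StyleGAN2 generator
queries = [[random.gauss(0, 1) for _ in range(DIM)] for _ in range(NUM_STYLES)]
# Hypothetical encoder outputs at three scales (2x2, 4x4, 8x8 maps), flattened to tokens x DIM.
feature_pyramid = [[[random.gauss(0, 1) for _ in range(DIM)] for _ in range(n)] for n in (4, 16, 64)]
w_plus = invert(queries, feature_pyramid)
print(len(w_plus), len(w_plus[0]))  # 18 8
```

Because the queries only ever receive residual updates, each stage refines the same set of per-layer style codes rather than producing a new set.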

Our style transformer introduces a novel multi-stage transformer in $W^+$ space to invert images accurately. We also divide image editing in StyleGAN into label-based and reference-based editing, and use a non-linear classifier to generate the editing vector.
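The editing step can be illustrated with a toy latent classifier. This sketch assumes the editing vector is the negative gradient of a binary cross-entropy loss on the selected attributes. Label-based editing uses hard 0/1 targets. Reference-based editing uses the classifier's predictions on a reference code as soft targets.

The following are all hypothetical choices for illustration, not the paper's exact formulation:

* the classifier `f(w) = sigmoid(V tanh(A w))`
* the finite-difference gradient, which stands in for autograd
* the step size and iteration count
* every helper name

A real $W^+$ code is also far higher-dimensional than the 4-dimensional vectors used here.

```python
import math
import random

def make_classifier(dim, hidden, num_attrs, seed=0):
    """Hypothetical toy latent classifier f(w) = sigmoid(V tanh(A w)); one probability per attribute."""
    rng = random.Random(seed)
    A = [[rng.gauss(0, 1 / math.sqrt(dim)) for _ in range(dim)] for _ in range(hidden)]
    V = [[rng.gauss(0, 1 / math.sqrt(hidden)) for _ in range(hidden)] for _ in range(num_attrs)]

    def classify(w):
        h = [math.tanh(sum(a * x for a, x in zip(row, w))) for row in A]
        return [1 / (1 + math.exp(-sum(v * y for v, y in zip(row, h)))) for row in V]

    return classify

def edit_loss(classify, w, targets):
    """Binary cross-entropy over the selected attributes; targets maps attribute index -> target in [0, 1]."""
    probs = classify(w)
    eps = 1e-7  # guards against log(0)
    return -sum(t * math.log(probs[k] + eps) + (1 - t) * math.log(1 - probs[k] + eps)
                for k, t in targets.items())

def editing_vector(classify, w, targets, h=1e-5):
    """Negative gradient of edit_loss w.r.t. w, estimated with central finite differences."""
    direction = []
    for i in range(len(w)):
        up, down = list(w), list(w)
        up[i] += h
        down[i] -= h
        slope = (edit_loss(classify, up, targets) - edit_loss(classify, down, targets)) / (2 * h)
        direction.append(-slope)
    return direction

def edit(classify, w, targets, step=0.05, iters=50):
    """Repeatedly move the latent code along the (hypothetical) editing vector."""
    for _ in range(iters):
        direction = editing_vector(classify, w, targets)
        w = [x + step * d for x, d in zip(w, direction)]
    return w

classify = make_classifier(dim=4, hidden=8, num_attrs=3)
rng = random.Random(1)
w_src = [rng.gauss(0, 1) for _ in range(4)]  # stand-in for an inverted latent code
w_ref = [rng.gauss(0, 1) for _ in range(4)]  # stand-in for a reference image's code

# Label-based editing: request that attribute 0 be present.
w_label = edit(classify, w_src, {0: 1.0})

# Reference-based editing: copy attributes 1 and 2 from the reference's predictions.
ref_probs = classify(w_ref)
ref_targets = {1: ref_probs[1], 2: ref_probs[2]}
w_ref_edit = edit(classify, w_src, ref_targets)
print(classify(w_src)[0], "->", classify(w_label)[0])
```

Sharing one loss between the two modes keeps the sketch short. The only difference is where the targets come from. Because the classifier is non-linear, the editing vector depends on the current code instead of being a single fixed direction.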

- Ubuntu 16.04

- NVIDIA GPU + CUDA cuDNN

- Python 3

We provide pretrained inversion models for the face and car domains.

Update configs/paths_config.py with the necessary data paths and model paths for training and inference.

```python
dataset_paths = {
    'train_data': '/path/to/train/data',
    'test_data': '/path/to/test/data',
}
```

We use rosinality's StyleGAN2 implementation.

You can download the 256px pretrained model from the project and put it in the pretrained_models/ directory.

Moreover, following pSp, we use several pretrained models to initialize the encoder and to compute the ID loss. You can download them from here and put them in the pretrained_models/ directory.

```bash
python scripts/train.py \
--dataset_type=ffhq_encode \
--exp_dir=results/train_style_transformer \
--batch_size=8 \
--test_batch_size=8 \
--val_interval=5000 \
--save_interval=10000 \
--stylegan_weights=pretrained_models/stylegan2-ffhq-config-f.pt
```

```bash
python scripts/inference.py \
--exp_dir=results/infer_style_transformer \
--checkpoint_path=results/train_style_transformer/checkpoints/best_model.pt \
--data_path=/test_data \
--test_batch_size=8
```

If you use this code for your research, please cite our paper:

```bibtex
@inproceedings{hu2022style,
  title={Style Transformer for Image Inversion and Editing},
  author={Hu, Xueqi and Huang, Qiusheng and Shi, Zhengyi and Li, Siyuan and Gao, Changxin and Sun, Li and Li, Qingli},
  booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},
  pages={11337--11346},
  year={2022}
}
```