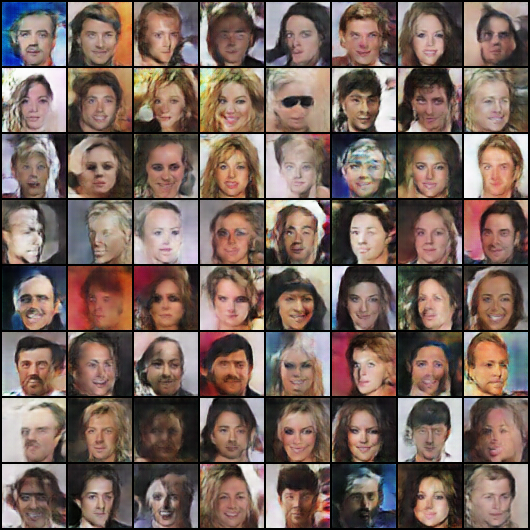

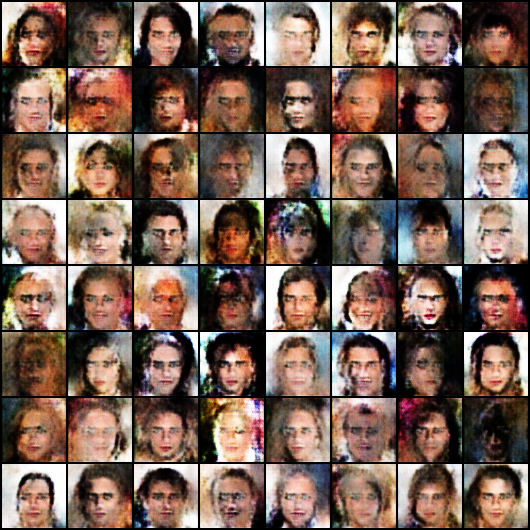

CelebA experiments

sanghoon opened this issue

Notes

- These runs are meant only to show some sample outputs. Different random seeds can lead to completely different outcomes, so a fair comparison of the two GAN methods requires looking at outputs from repeated trials.

- For faster training, I used only 50k images from CelebA, resized to 64x64.
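As a rough illustration of the repeated-trial comparison suggested above, the sketch below enumerates one run per method, learning rate, and seed, and draws a reproducible 50k-image subset. It is not the script used for these results: the seed list, the function names `pick_subset` and `build_runs`, and the choice to sample the subset uniformly at random are all assumptions made for the example.

```python
import itertools
import random

CELEBA_SIZE = 202_599                  # images in the full CelebA dataset
SUBSET_SIZE = 50_000                   # subset size mentioned in the notes
LEARNING_RATES = [1e-2, 1e-4, 1e-5]    # large, medium, small settings in this issue
METHODS = ["vanilla", "prediction"]    # the two DCGAN variants being compared
SEEDS = [0, 1, 2]                      # hypothetical: number of repeats is an assumption


def pick_subset(seed, total=CELEBA_SIZE, k=SUBSET_SIZE):
    """Return a sorted, reproducible sample of k distinct image indices."""
    rng = random.Random(seed)
    return sorted(rng.sample(range(total), k))


def build_runs():
    """Enumerate one configuration per (method, learning rate, seed)."""
    return [
        {"method": method, "lr": lr, "seed": seed}
        for method, lr, seed in itertools.product(METHODS, LEARNING_RATES, SEEDS)
    ]


if __name__ == "__main__":
    # Keep the data subset fixed so that only the training seed varies.
    subset = pick_subset(seed=1234)
    runs = build_runs()
    print(f"{len(subset)} images, {len(runs)} runs")
    for run in runs[:3]:
        print(run)
```

Fixing the subset with its own seed keeps the data identical across trials, so differences between runs come from initialization and batch order rather than from which images were selected.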

Large learning rate (0.01)

Vanilla DCGAN

| After 2 epochs | After 10 epochs | After 25 epochs |
|---|---|---|
| | | |

DCGAN w/ prediction

| After 2 epochs | After 10 epochs | After 25 epochs |
|---|---|---|
| | | |

Medium learning rate (0.0001)

Vanilla DCGAN

| After 2 epochs | After 10 epochs | After 25 epochs |
|---|---|---|
| | | |

DCGAN w/ prediction

| After 2 epochs | After 10 epochs | After 25 epochs |
|---|---|---|
| | | |

Small learning rate (1e-5)

Vanilla DCGAN

| After 2 epochs | After 10 epochs | After 25 epochs |
|---|---|---|
| | | |

DCGAN w/ prediction

| After 2 epochs | After 10 epochs | After 25 epochs |
|---|---|---|
| | | |