PyTorch implementation of "One-Sided Unsupervised Domain Mapping" (arXiv). The implementation is based on the architectures of both DiscoGAN and CycleGAN.

Download a dataset [edges2shoes, edges2handbags, facescrub]: python datasets/download.py $DATASET_NAME. Use ./datasets/combine_A_and_B.py to create the handbags2shoes dataset.

Download separately: the celebA dataset, the car dataset used in Deep Visual Analogy-Making, and the head/face dataset. Then extract them into the ./datasets folder.

Male to Female: python ./discogan_arch/distance_gan_model.py --task_name='celebA' --style_A='Male'

Blond to Black hair: python ./discogan_arch/distance_gan_model.py --task_name='celebA' --style_A='Blond_Hair' --style_B='Black_Hair' --constraint='Male' --constraint_type=-1

Eyeglasses to Without Eyeglasses: python ./discogan_arch/distance_gan_model.py --task_name='celebA' --style_A='Eyeglasses' --constraint='Male' --constraint_type=1

Edges to Shoes: python ./discogan_arch/distance_gan_model.py --task_name='edges2shoes' --num_layers=3

Edges to Handbags: python ./discogan_arch/distance_gan_model.py --task_name='edges2handbags' --num_layers=3

Shoes to Handbags: python ./discogan_arch/distance_gan_model.py --task_name='handbags2shoes' --starting_rate=0.5

Car to Car: python ./discogan_arch/distance_gan_angle_pairing_model.py --task_name='car2car'

Head/Face to Head/Face: python ./discogan_arch/distance_gan_angle_pairing_model.py --task_name='face2face'

Car to Head/Face: python ./discogan_arch/distance_gan_angle_pairing_model.py --task_name='car2face'

Add the following flags to the python command as needed:

To train from A to B only: --model_arch=distance_A_to_B. To train from B to A only: --model_arch=distance_B_to_A.

To add reconstruction/cycle loss to distance loss: --use_reconst_loss.

To use self distance instead of regular distance: --use_self_distance.

To avoid normalizing distances: --unnormalized_distances.

To change the number of items used for the expectation and std calculation: --max_items=NUM.
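The distance constraint that these flags configure can be sketched in a few lines. This is a minimal, stdlib-only illustration, not the repository's PyTorch code: it assumes images are flattened lists of floats, treats the generator as any Python callable, and all names (`l1_distance`, `distance_stats`, `distance_loss`, `double`) are hypothetical. Following the idea in the paper, the L1 distance between two samples of domain A, standardized by a mean and standard deviation, should match the standardized distance between their mappings in domain B; `max_items` caps how many samples are used to estimate those statistics, and `normalize=False` plays the role of --unnormalized_distances.

```python
import itertools
import math
from typing import Callable, List, Sequence, Tuple

# Hypothetical representation: an image flattened into a list of pixel values.
Image = List[float]
Stats = Tuple[float, float]


def l1_distance(x: Sequence[float], y: Sequence[float]) -> float:
    """L1 distance between two flattened images, averaged over pixels."""
    return sum(abs(a - b) for a, b in zip(x, y)) / len(x)


def distance_stats(samples: Sequence[Image], max_items: int) -> Stats:
    """Mean and (population) std of pairwise distances among the first max_items samples."""
    subset = samples[:max_items]
    dists = [l1_distance(a, b) for a, b in itertools.combinations(subset, 2)]
    mean = sum(dists) / len(dists)
    std = math.sqrt(sum((d - mean) ** 2 for d in dists) / len(dists))
    return mean, std


def distance_loss(batch_a: Sequence[Image],
                  generator: Callable[[Image], Image],
                  stats_a: Stats,
                  stats_b: Stats,
                  normalize: bool = True) -> float:
    """Mean absolute mismatch between pairwise distances in A and in G(A)."""
    mapped = [generator(x) for x in batch_a]
    (mu_a, sd_a), (mu_b, sd_b) = stats_a, stats_b
    losses = []
    for i, j in itertools.combinations(range(len(batch_a)), 2):
        d_a = l1_distance(batch_a[i], batch_a[j])
        d_b = l1_distance(mapped[i], mapped[j])
        if normalize:
            # Standardize each distance with the statistics of its own domain.
            d_a = (d_a - mu_a) / sd_a
            d_b = (d_b - mu_b) / sd_b
        losses.append(abs(d_a - d_b))
    return sum(losses) / len(losses)


def double(x: Image) -> Image:
    """Toy 'generator' that scales every pixel (and hence every distance) by 2."""
    return [2 * v for v in x]


domain_a = [[0.0, 0.0], [1.0, 1.0], [2.0, 2.0]]
domain_b = [double(x) for x in domain_a]
stats_a = distance_stats(domain_a, max_items=3)
stats_b = distance_stats(domain_b, max_items=3)
print(distance_loss(domain_a, double, stats_a, stats_b))                   # normalized
print(distance_loss(domain_a, double, stats_a, stats_b, normalize=False))  # raw distances
```

Because `double` scales every distance by the same factor, the standardized distances agree and the normalized loss is essentially zero, while the unnormalized loss is not; absorbing this kind of scale difference between domains is what the normalization is for. The sketch divides by the standard deviation, so it assumes the sampled distances are not all identical.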

Additional options can be found in ./discogan_arch/discogan_arch_options/options.py
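The self-distance variant (--use_self_distance) compares the two halves of a single image rather than two different images, so it does not need pairs within a batch. The sketch below is a stdlib-only illustration under stated assumptions: images are lists of rows, each image is split into left and right halves as described in the paper, and every function name (`halves`, `self_distance`, `self_distance_stats`, `self_distance_loss`, `double`) is hypothetical rather than taken from this repository.

```python
import math
from typing import Callable, List, Sequence, Tuple

# Hypothetical representation: an image as a list of rows of pixel values.
Image = List[List[float]]
Stats = Tuple[float, float]


def halves(img: Image) -> Tuple[List[float], List[float]]:
    """Flattened left and right halves; the middle column of an odd-width image is dropped."""
    w = len(img[0]) // 2
    left = [v for row in img for v in row[:w]]
    right = [v for row in img for v in row[len(row) - w:]]
    return left, right


def self_distance(img: Image) -> float:
    """L1 distance between the left and right halves, averaged over pixels."""
    left, right = halves(img)
    return sum(abs(a - b) for a, b in zip(left, right)) / len(left)


def self_distance_stats(samples: Sequence[Image], max_items: int) -> Stats:
    """Mean and (population) std of self distances over the first max_items samples."""
    dists = [self_distance(x) for x in samples[:max_items]]
    mean = sum(dists) / len(dists)
    std = math.sqrt(sum((d - mean) ** 2 for d in dists) / len(dists))
    return mean, std


def self_distance_loss(batch_a: Sequence[Image],
                       generator: Callable[[Image], Image],
                       stats_a: Stats,
                       stats_b: Stats,
                       normalize: bool = True) -> float:
    """Mean absolute mismatch between each image's self distance and that of its mapping."""
    (mu_a, sd_a), (mu_b, sd_b) = stats_a, stats_b
    losses = []
    for x in batch_a:
        d_a = self_distance(x)
        d_b = self_distance(generator(x))
        if normalize:
            # Standardize with the statistics of each domain, as in the pairwise case.
            d_a = (d_a - mu_a) / sd_a
            d_b = (d_b - mu_b) / sd_b
        losses.append(abs(d_a - d_b))
    return sum(losses) / len(losses)


def double(img: Image) -> Image:
    """Toy 'generator' that scales every pixel by 2."""
    return [[2 * v for v in row] for row in img]


domain_a = [[[0.0, 1.0]], [[0.0, 2.0]], [[0.0, 3.0]]]  # three 1x2 images
domain_b = [double(x) for x in domain_a]
stats_a = self_distance_stats(domain_a, max_items=3)
stats_b = self_distance_stats(domain_b, max_items=3)
print(self_distance_loss(domain_a, double, stats_a, stats_b))
```

Since each term involves only one image, the self-distance loss can be computed even for a batch of size one, which is a practical difference from the pairwise version.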

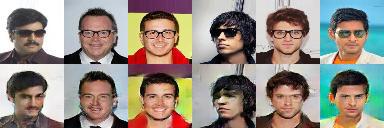

Male to Female (first row is input, second row is output):

Blond to Black Hair:

With to Without Eyeglasses:

Edges to Shoes:

Shoes to Edges:

Handbags to Shoes:

Car to Car:

Car to Head:

Dataset: python datasets/download.py horse2zebra

Train: python train.py --dataroot ./datasets/horse2zebra --name horse2zebra_distancegan --model distance_gan

Test: python test.py --dataroot ./datasets/horse2zebra --name horse2zebra_distancegan --model distance_gan --phase test

Results are saved in ./results/horse2zebra_distancegan/latest_test/index.html. For loss results and plots, run 'python -m visdom.server' and navigate to http://localhost:8097 (for other options, see pytorch-CycleGAN-and-pix2pix).

To train from A to B only: --A_to_B.

To train from B to A only: --B_to_A.

To add reconstruction/cycle loss to distance loss: --use_cycle_loss.

To change weights of distance loss: --lambda_distance_A=NUM, --lambda_distance_B=NUM.

--use_self_distance, --unnormalized_distances and --max_items=NUM are as above.

Additional options can be found in ./cyclegan_arch/cyclegan_based_options.
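How the direction and weighting flags above might combine into a single generator objective can be sketched as a weighted sum. This is an assumption made for illustration, not a transcription of this repository's training code: the function name, the dictionary keys, and the pairing of lambda_distance_A with the A-to-B direction (and lambda_distance_B with B-to-A) are hypothetical, and the individual adversarial, distance and cycle terms are taken as already computed.

```python
from typing import Dict


def generator_objective(losses: Dict[str, float],
                        lambda_distance_a: float = 1.0,
                        lambda_distance_b: float = 1.0,
                        use_cycle_loss: bool = False,
                        a_to_b: bool = True,
                        b_to_a: bool = True) -> float:
    """Hypothetical weighted sum of per-direction generator losses.

    `losses` holds precomputed terms: 'gan_ab', 'dist_ab', 'cycle_a' for the
    A->B generator and 'gan_ba', 'dist_ba', 'cycle_b' for the B->A generator.
    """
    if use_cycle_loss and not (a_to_b and b_to_a):
        # Reconstructing A requires both mappings, so a one-sided run cannot use it.
        raise ValueError("cycle loss needs both mapping directions")
    total = 0.0
    if a_to_b:  # corresponds to keeping the A->B direction (e.g. --A_to_B)
        total += losses["gan_ab"] + lambda_distance_a * losses["dist_ab"]
        if use_cycle_loss:
            total += losses["cycle_a"]
    if b_to_a:  # corresponds to keeping the B->A direction (e.g. --B_to_A)
        total += losses["gan_ba"] + lambda_distance_b * losses["dist_ba"]
        if use_cycle_loss:
            total += losses["cycle_b"]
    return total


terms = {"gan_ab": 0.5, "dist_ab": 0.2, "cycle_a": 0.1,
         "gan_ba": 0.4, "dist_ba": 0.3, "cycle_b": 0.1}
print(generator_objective(terms, lambda_distance_a=2.0, b_to_a=False))  # A->B only
print(generator_objective(terms, use_cycle_loss=True))                  # both directions
```

Treating the distance weight as a per-direction multiplier keeps the one-sided case simple: dropping a direction removes its adversarial and distance terms together.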

python ./cyclegan_arch/mnist_to_svhn/main.py --use_distance_loss=True --use_reconst_loss=False --use_self_distance=False

Change the above flags as required.

Horse to Zebra:

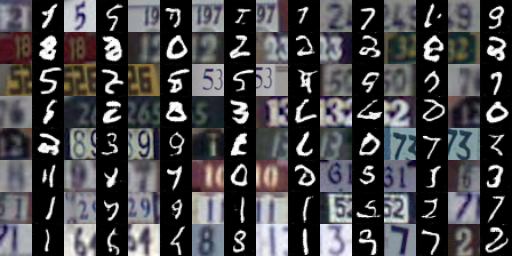

SVHN to MNIST:

If you find this code useful, please cite the following paper:

@inproceedings{Benaim2017OneSidedUD,
  title={One-Sided Unsupervised Domain Mapping},
  author={Sagie Benaim and Lior Wolf},
  booktitle={NIPS},
  year={2017}
}

This project has received funding from the European Research Council (ERC) under the European Union's Horizon 2020 research and innovation programme (grant ERC CoG 725974).