On Interaction Between Augmentations and Corruptions in Natural Corruption Robustness

This repository provides the code for the paper "On Interaction Between Augmentations and Corruptions in Natural Corruption Robustness". The paper studies how perceptual similarity between a set of training augmentations and a set of test corruptions affects test error on those corruptions, and shows that common augmentation schemes often generalize poorly to perceptually dissimilar corruptions.

The repository is divided into three parts. First, the Jupyter notebook minimal_sample_distance.ipynb illustrates how to calculate the measure of distance between augmentations and corruptions proposed in the paper. Second, imagenet_c_bar/ provides code to generate or test on the datasets CIFAR-10-C-bar and ImageNet-C-bar, which are algorithmically chosen to be dissimilar from CIFAR-10/ImageNet-C and are used to study generalization. Finally, experiments/ provides code to reproduce the experiments in the paper. Usage of the latter two is described in their respective READMEs.

This paper:

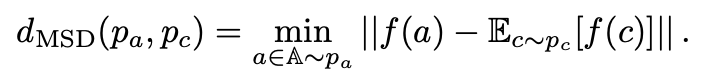

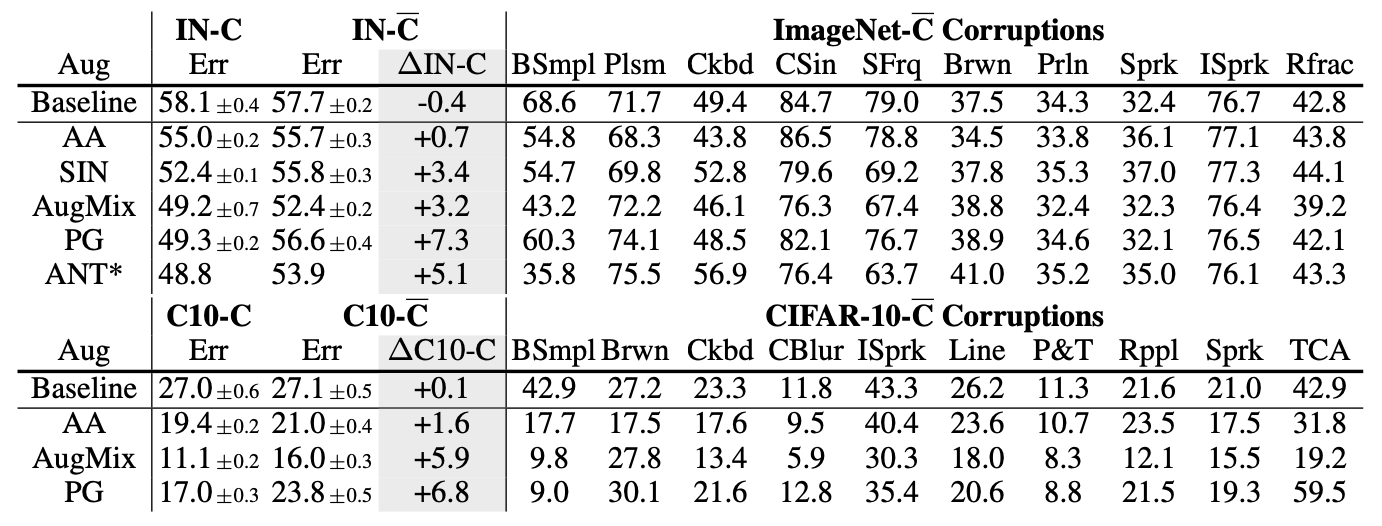

- Defines the minimal sample distance, a measure of similarity between augmentations and corruptions on a perceptual feature space f(t) extracted using a pre-trained neural network. This measure is asymmetric to account for the fact that augmentation distributions are typically broader than any one corruption distribution but can still lead to low corruption error if they produce augmentations that are perceptually similar to the corruption.

- Shows that perceptual similarity between train-time augmentations and test-time corruptions is often predictive of corruption error, across several common corruptions and augmentations. A large set of artificial augmentation schemes, called the augmentation powerset, is also introduced to better analyze the correlation:

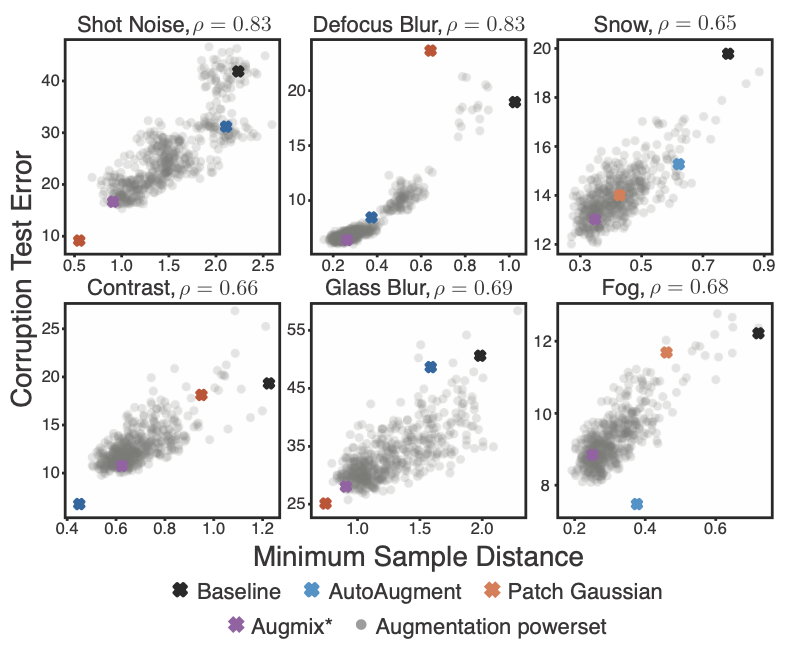

- Introduces a new set of corruptions designed to be perceptually dissimilar from the common benchmarks CIFAR-10/ImageNet-C. These new corruptions are chosen algorithmically from a set of 30 natural, human-interpretable corruptions using the perceptual feature space defined above.

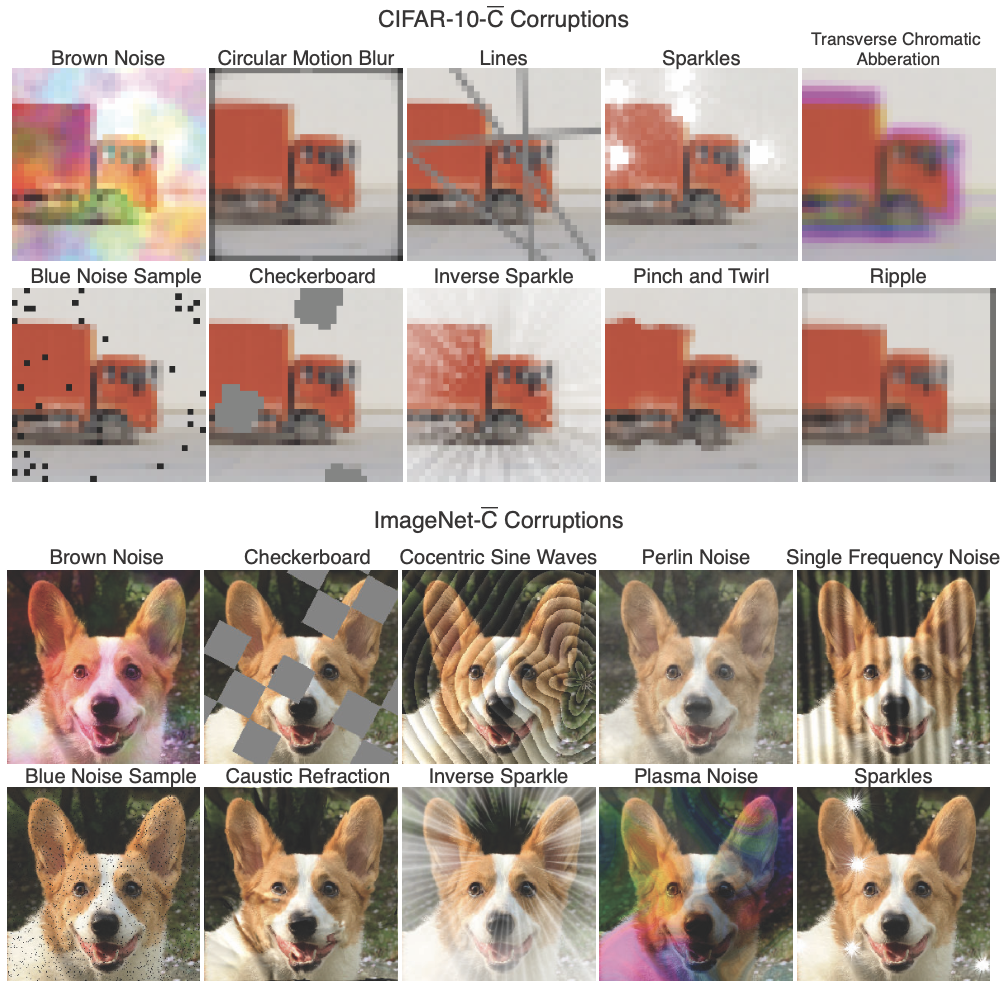

- Shows that several common data augmentation schemes that improve corruption robustness perform worse on the new dataset, suggesting that generalization to dissimilar corruptions is often poor. Here AutoAugment, Stylized-ImageNet, AugMix, Patch Gaussian, and ANT3x3 are studied.

* Base example images copyright Sehee Park and Chenxu Han.
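
As a rough illustration of the asymmetry of the minimal sample distance described in the first item above, the sketch below takes one plausible reading of the measure: the minimum, over a set of sampled augmentations, of the distance between each augmentation's feature vector and the mean feature vector of the corruption. The function name `minimal_sample_distance`, the array shapes, and the choice of Euclidean norm are assumptions made for this example and are not taken from the repository; minimal_sample_distance.ipynb remains the reference for the actual calculation.

```python
import numpy as np


def minimal_sample_distance(aug_features, corr_features):
    """Hypothetical sketch of an asymmetric augmentation-to-corruption distance.

    aug_features:  (n_aug, d) array, one feature vector per sampled augmentation.
    corr_features: (n_corr, d) array, one feature vector per sampled corruption.
    """
    aug_features = np.asarray(aug_features, dtype=float)
    corr_features = np.asarray(corr_features, dtype=float)
    # Summarize the corruption distribution by its mean feature vector.
    corr_mean = corr_features.mean(axis=0)
    # Euclidean distance from every augmentation sample to that mean
    # (the norm is an assumption of this sketch).
    dists = np.linalg.norm(aug_features - corr_mean, axis=1)
    # Only the closest augmentation sample matters, so a broad augmentation
    # distribution is not penalized for its far-away samples.
    return float(dists.min())


# Toy 2-D features: a broad set of augmentations and a narrow corruption.
augs = np.array([[0.0, 0.0], [3.0, 4.0], [10.0, 10.0]])
corr = np.array([[3.0, 3.0], [3.0, 5.0]])

d_aug_to_corr = minimal_sample_distance(augs, corr)
d_corr_to_aug = minimal_sample_distance(corr, augs)
print(d_aug_to_corr, d_corr_to_aug)
```

In this toy case one augmentation sample coincides with the mean corruption feature, so the distance from augmentations to the corruption is zero, while swapping the two arguments gives a strictly positive value. That is the sense in which the measure is asymmetric.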

License

augmentation-corruption is released under the MIT license. Please see the LICENSE file for more information.

Contributing

We actively welcome your pull requests! Please see CONTRIBUTING.md and CODE_OF_CONDUCT.md for more info.