Requirements

- PyTorch 1.8

- Python 3.6

- transformers>=4.4.0

- datasets

- scipy

Dataset

- download link

- PERSONA-CHAT: 1 positive and 19 negative candidates per example (both provided with the dataset)

- FoCus: 1 positive (provided), 2 negatives from context negative sampling, and 17 negatives from random negative sampling

Data and model paths

- ./dataset/FoCus

- ./dataset/personachat

- ./model/NP_focus/roberta-base/model.bin

- ./model/NP_persona/roberta-base/model.bin

- ./model/NP_persona/roberta-large/model.bin

- ./model/prompt_finetuning/roberta-{size}_{personachat_type}/model.bin
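The FoCus candidate construction listed under Dataset (1 positive, 2 context negatives, 17 random negatives) can be sketched as follows. This is a minimal illustration, not the repository's preprocessing code: the function name is hypothetical, and it assumes context negatives are drawn from earlier utterances of the same dialogue and random negatives from a corpus-wide response pool.

```python
import random
from typing import List, Sequence, Tuple


def build_focus_candidates(
    gold: str,
    context: Sequence[str],
    response_pool: Sequence[str],
    n_context: int = 2,
    n_random: int = 17,
    seed: int = 0,
) -> List[Tuple[str, int]]:
    """Hypothetical helper: 1 positive + n_context context negatives + n_random random negatives."""
    rng = random.Random(seed)
    # Context negatives: distinct earlier utterances of the same dialogue (assumption).
    ctx_pool = [u for u in dict.fromkeys(context) if u != gold]
    ctx_negs = rng.sample(ctx_pool, min(n_context, len(ctx_pool)))
    # If the history is too short, top up with extra random negatives to keep the set size fixed.
    n_random += n_context - len(ctx_negs)
    # Random negatives: distinct corpus responses not already used (raises ValueError if too few).
    used = set(ctx_negs) | {gold}
    rand_pool = [r for r in dict.fromkeys(response_pool) if r not in used]
    rand_negs = rng.sample(rand_pool, n_random)
    # Label 1 marks the gold response; it is kept at index 0 here.
    return [(gold, 1)] + [(c, 0) for c in ctx_negs + rand_negs]


if __name__ == "__main__":
    history = ["hi there", "i love hiking", "where do you live?"]
    pool = [f"random response {i}" for i in range(100)]
    cands = build_focus_candidates("i live near the alps", history, pool)
    print(len(cands), sum(label for _, label in cands))  # 20 1
```

Keeping the gold response at a fixed index makes evaluation simple; a real pipeline may shuffle candidates and store the gold position instead.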

Standard response selection model for FoCus

cd NP_focus

python3 train.py --model_type roberta-{size} --epoch 10

- size: base or large

Standard response selection model for PERSONA-CHAT

cd NP_persona

python3 train.py --model_type roberta-{size} --persona_type {persona_type} --epoch 10

- size: base or large

- persona_type: original or revised
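The {size} and {persona_type} placeholders in the PERSONA-CHAT training command expand to four runs. The sketch below only builds the command strings from the values listed here; the helper name is hypothetical, and nothing is executed.

```python
import itertools
from typing import List

# Placeholder values listed in this README for the NP_persona training command.
SIZES = ["base", "large"]
PERSONA_TYPES = ["original", "revised"]


def persona_train_commands(epochs: int = 10) -> List[str]:
    """Expand the {size}/{persona_type} placeholders into concrete command lines."""
    template = (
        "python3 train.py --model_type roberta-{size} "
        "--persona_type {persona_type} --epoch {epochs}"
    )
    return [
        template.format(size=s, persona_type=p, epochs=epochs)
        for s, p in itertools.product(SIZES, PERSONA_TYPES)
    ]


if __name__ == "__main__":
    for cmd in persona_train_commands():
        print(cmd)  # run each from inside NP_persona/
```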

Zero-shot baseline

- test_focus.py: evaluation on FoCus

- test_perchat.py: evaluation on PERSONA-CHAT

cd SoP

python3 test_perchat.py --model_type roberta-{size} --persona_type {persona_type} --persona simcse --weight {weight} --agg max

(Use test_focus.py instead of test_perchat.py to evaluate on FoCus.)

- size: base or large

- persona_type: original or revised (has no effect on FoCus)

- weight: 0.5 for the original persona type and for FoCus; 0.05 for the revised persona type
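The README does not spell out how --weight and --agg max enter the zero-shot baseline score. One plausible reading, used here only for illustration and not taken from the SoP code, is: final score = context score + weight × max over persona-sentence scores. The function names are hypothetical, and the "mean" option is included only for comparison (the README only shows --agg max).

```python
from typing import Sequence, Tuple


def combined_score(
    context_score: float,
    persona_scores: Sequence[float],
    weight: float,
    agg: str = "max",
) -> float:
    """Assumed rule: context score plus weight * aggregated persona score."""
    if not persona_scores:
        return context_score
    if agg == "max":
        persona_term = max(persona_scores)
    elif agg == "mean":  # hypothetical alternative, not a documented flag value
        persona_term = sum(persona_scores) / len(persona_scores)
    else:
        raise ValueError(f"unsupported agg: {agg}")
    return context_score + weight * persona_term


def select_response(
    candidates: Sequence[Tuple[float, Sequence[float]]], weight: float, agg: str = "max"
) -> int:
    """Return the index of the candidate with the highest combined score."""
    scores = [combined_score(c, p, weight, agg) for c, p in candidates]
    return max(range(len(scores)), key=scores.__getitem__)


if __name__ == "__main__":
    # (context_score, [score against each persona sentence]) per candidate.
    cands = [(0.70, [0.10, 0.20]), (0.65, [0.90, 0.30])]
    print(select_response(cands, weight=0.5))   # 1: persona evidence outweighs context
    print(select_response(cands, weight=0.05))  # 0: context score dominates
```

Under this reading, a small weight such as 0.05 keeps the baseline close to the context-only ranking, while 0.5 lets a strongly matching persona sentence change the top candidate.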

Fine-tuned P5 model

- train.py: the main training script described in the paper

- train_no_ground: ablation without persona grounding

- train_no_question: ablation without the prompt question

- test_zeroshot.py: evaluation with no persona at test time

cd prompt_finetuning

python3 train.py --model_type roberta-{size} --data_type {data_type} --persona_type {persona_type} --persona {persona} --num_of_persona {num_of_persona} --reverse

- size: base or large

- data_type: personachat or focus

- persona_type: original or revised (has no effect on FoCus)

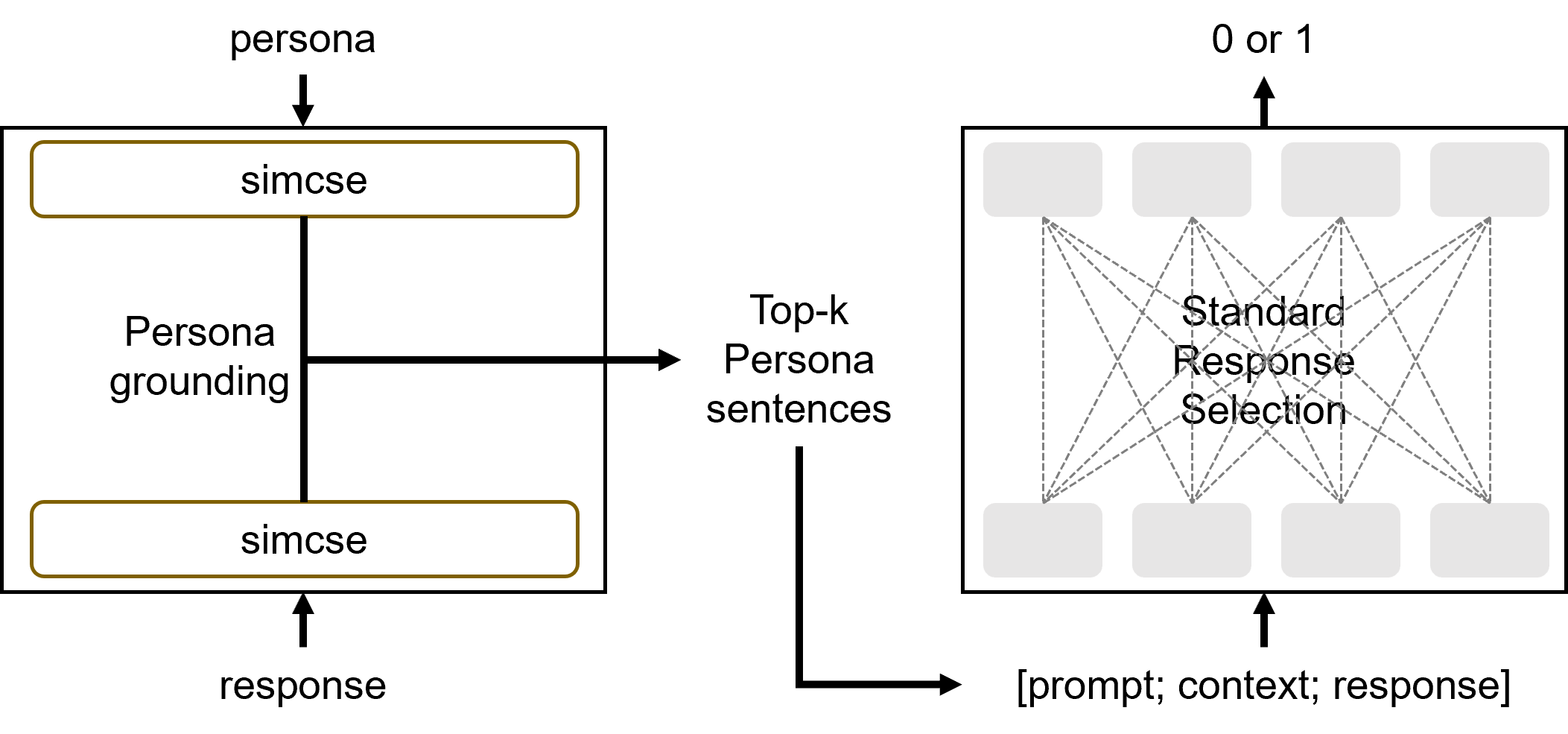

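- persona: method used to select persona sentences, one of simcse, nli, or bertscore (recommended: simcse)

- num_of_persona: number of persona sentences to use, 1 to 5 (recommended: 2)

- reverse: optional flag that reverses the order of the persona sentences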
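The persona, num_of_persona, and reverse options can be pictured as "rank persona sentences by similarity, keep the top k, optionally flip their order". The sketch below assumes embeddings are already computed (e.g. by a SimCSE encoder) and uses cosine similarity against a query embedding; what the repository compares against (context or candidate response) and the exact effect of --reverse are assumptions, and the function names are hypothetical.

```python
import math
from typing import List, Sequence


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv) if nu and nv else 0.0


def select_persona(
    persona_sents: Sequence[str],
    persona_embs: Sequence[Sequence[float]],
    query_emb: Sequence[float],
    num_of_persona: int = 2,
    reverse: bool = False,
) -> List[str]:
    """Pick the num_of_persona sentences most similar to the query embedding."""
    ranked = sorted(
        range(len(persona_sents)),
        key=lambda i: cosine(persona_embs[i], query_emb),
        reverse=True,
    )
    chosen = [persona_sents[i] for i in ranked[:num_of_persona]]
    # Assumed --reverse behaviour: most similar sentence placed last instead of first.
    return chosen[::-1] if reverse else chosen


if __name__ == "__main__":
    sents = ["i like cats", "i am a nurse", "i live in paris"]
    embs = [[1.0, 0.0], [0.0, 1.0], [0.7, 0.7]]  # toy 2-d embeddings
    print(select_persona(sents, embs, [1.0, 0.1], num_of_persona=2))
```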

Zero-shot P5 model

- test.py: the main test script described in the paper

- test_no_ground.py: ablation without persona grounding

- test_no_question.py: ablation without the prompt question

- test_other_question.py: a variant of the prompt question

- test_random_question.py: a random prompt question

- dd: denotes variants in which the standard response selection (SRS) model is trained on DailyDialog

cd prompt_persona_context

python3 test.py --model_type roberta-{size} --data_type {data_type} --persona_type {persona_type} --persona {persona} --num_of_persona {num_of_persona} --reverse

- size: base or large

- data_type: personachat or focus

- persona_type: original or revised (has no effect on FoCus)

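- persona: method used to select persona sentences, one of simcse, nli, or bertscore (recommended: simcse)

- num_of_persona: number of persona sentences to use, 1 to 5 (recommended: 2)

- reverse: optional flag that reverses the order of the persona sentences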
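The test scripts rank candidate sets like those described under Dataset (for PERSONA-CHAT, 1 positive and 19 negatives). The README does not state which metric they report; the sketch below shows Recall@k (R@1 for k=1), a common choice for this setup, assuming the gold response sits at a known index in every candidate list. The function name is hypothetical.

```python
from typing import Sequence


def recall_at_k(
    all_scores: Sequence[Sequence[float]], gold_index: int = 0, k: int = 1
) -> float:
    """Fraction of examples whose gold candidate ranks in the top k.

    Python's sort is stable, so tied scores keep their original order
    (a tie with an earlier candidate ranks the gold lower).
    """
    hits = 0
    for scores in all_scores:
        ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
        hits += gold_index in ranked[:k]
    return hits / len(all_scores)


if __name__ == "__main__":
    # Three toy examples with 20 candidate scores each; gold is candidate 0.
    scores = [
        [0.9] + [0.1] * 19,             # gold ranked 1st
        [0.2, 0.8] + [0.0] * 18,        # gold ranked 2nd
        [0.5, 0.6, 0.4] + [0.0] * 17,   # gold ranked 2nd
    ]
    print(recall_at_k(scores, k=1), recall_at_k(scores, k=2))
```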

Citation

@article{lee2023p5,
  title={P5: Plug-and-Play Persona Prompting for Personalized Response Selection},
  author={Lee, Joosung and Oh, Minsik and Lee, Donghun},
  journal={arXiv preprint arXiv:2310.06390},
  year={2023}
}