Update: Docker image (thanks to @avasalya) is here

(with zoom-in/out, go back into scenes, load dataset from GUI, change number of keypoints, keyboard shortcuts, and more features...)

Code for the paper:

Rapid Pose Label Generation through Sparse Representation of Unknown Objects (ICRA 2021)

Rohan P. Singh, Mehdi Benallegue, Yusuke Yoshiyasu, Fumio Kanehiro

This is a tool for rapid generation of labeled training datasets, primarily for training keypoint detector networks for full pose estimation of a rigid, non-articulated 3D object in RGB images.

We provide a GUI to fetch minimal user input. Using this software, we have been able to generate large, accurately labeled training datasets consisting of multiple objects in different scenes (environments with varying background conditions, illumination, clutter, etc.) using just a handheld RGB-D sensor in only a few hours, including the time spent capturing the raw dataset. We then used the generated dataset to train a bounding-box detector (YOLOv3) and a keypoint detector network (ObjectKeypointTrainer).

The code in this repository forms Part-1 of the full software:

Links to other parts:

- Part-2: ObjectKeypointTrainer

- Part-3: Not-yet-available

All or several parts of the Python 3.7.4 code in this repository depend on the following:

- PyQt5

- OpenCV

- open3d

- transforms3d

We recommend satisfying the above dependencies to be able to use all scripts, though it should be possible to bypass some requirements depending on the use case. We recommend working in a conda environment.

For pre-processing of the raw dataset (extracting frames if you have a ROS bagfile, and dense 3D reconstruction) we rely on the following applications:

- bag-to-png

- png-to-klg

- ElasticFusion

We assume that, using bag-to-png, png-to-klg and ElasticFusion, the user is able to generate a dataset directory tree that looks as follows:

    dataset_dir/
    ├── wrench_tool_data/
    │   ├── 00/
    │   │   ├── 00.ply
    │   │   ├── associations.txt
    │   │   ├── camera.poses
    │   │   ├── rgb/
    │   │   └── depth/
    │   ├── 01/
    │   ├── 02/
    │   ├── 03/
    │   ├── 04/
    │   └── camera.txt
    ├── object_1_data/...
    └── object_2_data/...

where camera.poses and 00.ply are the camera trajectory and the dense scene generated by ElasticFusion, respectively. Ideally, the user has collected raw datasets for different scenes/environments in directories 00, 01, 02, and so on. camera.txt contains the camera intrinsics as fx fy cx cy.
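
Before launching the GUI, it can help to sanity-check the layout. The sketch below is not part of this repository: `parse_intrinsics`, `load_intrinsics` and `list_scenes` are hypothetical helpers, and it assumes camera.txt holds the four values fx fy cx cy separated by whitespace.

```python
from pathlib import Path


def parse_intrinsics(text):
    """Parse 'fx fy cx cy' into a 3x3 pinhole camera matrix (nested lists)."""
    values = [float(tok) for tok in text.split()]
    if len(values) != 4:
        raise ValueError(f"expected 4 values (fx fy cx cy), got {len(values)}")
    fx, fy, cx, cy = values
    return [[fx, 0.0, cx],
            [0.0, fy, cy],
            [0.0, 0.0, 1.0]]


def load_intrinsics(object_dir):
    """Read camera.txt from an object directory such as dataset_dir/wrench_tool_data."""
    return parse_intrinsics((Path(object_dir) / "camera.txt").read_text())


def list_scenes(object_dir):
    """Return names of scene sub-directories (00, 01, ...) that hold the expected entries."""
    required = ("associations.txt", "camera.poses", "rgb", "depth")
    scenes = []
    for scene in sorted(p for p in Path(object_dir).iterdir() if p.is_dir()):
        # A scene is listed only if every expected file or folder is present.
        if all((scene / name).exists() for name in required):
            scenes.append(scene.name)
    return scenes
```

For example, `list_scenes("dataset_dir/wrench_tool_data")` would return the scene folders that are complete enough to load, and `load_intrinsics` on the same path gives the camera matrix.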

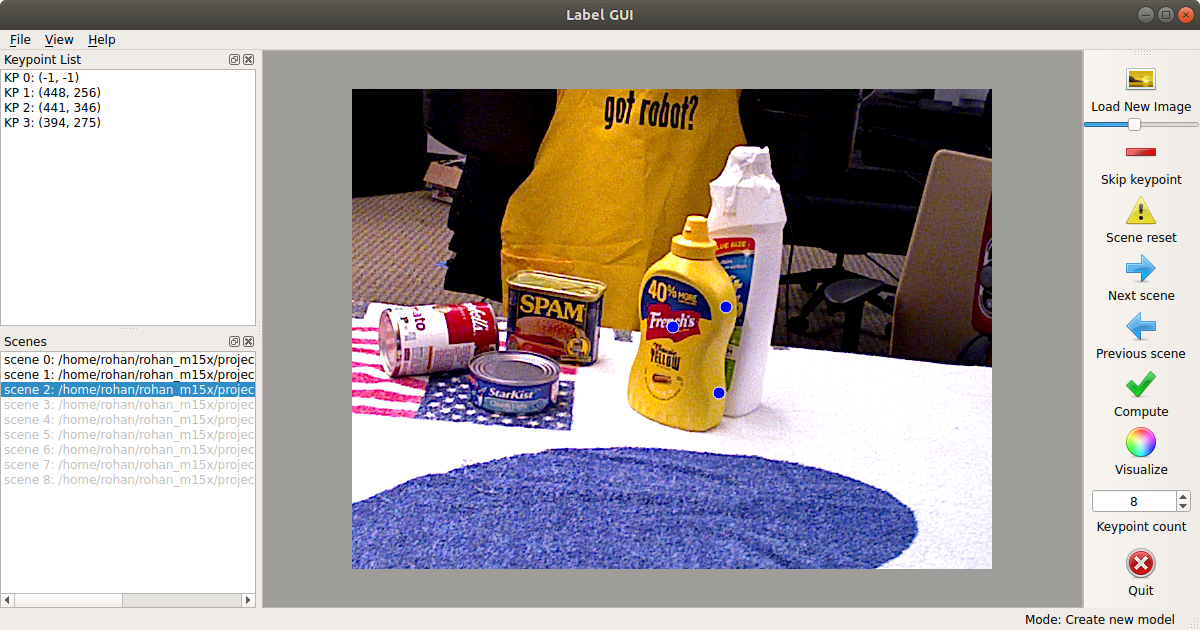

This should bring up the main GUI:

$ python main.py

In the case where the user has no model of any kind for their object, the first step is to generate a sparse model using the GUI and then build a rough dense model using the join.py script.

To create the sparse model, first choose about 6-10 points on the object. Since you need to click on these points in RGB images later, make sure the points are uniquely identifiable. Also make sure the points are well distributed around the object, so a few points are visible if you look at the object from any view. Remember the order and location of the chosen points and launch the GUI, setting the --keypoints argument equal to the number of chosen keypoints.

1. Go to 'File' and 'Load Dataset'.

2. Click on 'Next scene' to load the first scene.

3. Click on 'Load New Image' and manually label all chosen keypoints that are visible.

4. Click on 'Skip keypoint' if a keypoint is not visible (keypoint labeling is order-sensitive).

5. To shuffle, click on 'Load New Image' again or press the spacebar.

6. Click on 'Next scene' when you have clicked on as many points as you can see.

7. Repeat Steps 2-5 for each scene.

8. Click on 'Compute'.
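
To see why the order matters, here is a small illustration of order-sensitive labeling. It is a hypothetical representation, not the GUI's internal data format: each click list has one entry per keypoint, in the order the keypoints were chosen, and `None` stands for a skipped keypoint.

```python
def clicks_to_labels(clicks):
    """Map an ordered list of clicks to {keypoint_id: (u, v)}.

    `clicks` has one entry per keypoint, in the order the keypoints were
    chosen; None marks a keypoint skipped because it was not visible.
    """
    return {kp_id: tuple(uv) for kp_id, uv in enumerate(clicks) if uv is not None}


# Keypoint 1 is hidden in this image, so it is skipped explicitly.
labels = clicks_to_labels([(412, 230), None, (388, 301)])
print(labels)
```

Without the explicit skip, the third click would be attributed to keypoint 1 instead of keypoint 2, which is why a hidden keypoint should be skipped rather than ignored.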

If manual labeling was done while maintaining the constraints described in the paper, the optimization step should succeed and produce sparse_model.txt and saved_meta_data.npz in the output directory. The saved_meta_data.npz archive holds the relative scene transformations and the manually clicked points with their IDs (important for generating the actual labels using generate.py and for evaluation with respect to ground truth, if available).
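
If you want to check what was written, the archive can be inspected with NumPy. The sketch below makes no assumption about the array names stored inside saved_meta_data.npz; it only lists whatever is there. `summarize_npz` is a hypothetical helper, not part of this repository.

```python
import numpy as np


def summarize_npz(path):
    """Return {array_name: (shape, dtype)} for every array stored in an .npz archive."""
    summary = {}
    # allow_pickle=True is only needed if the archive stores Python objects;
    # enable it only for files you created yourself.
    with np.load(path, allow_pickle=True) as archive:
        for name in archive.files:
            array = archive[name]
            summary[name] = (array.shape, str(array.dtype))
    return summary
```

Calling `summarize_npz("<path-to-saved-meta-data-npz>")` and printing the result shows the stored array names with their shapes, which is a quick way to confirm that every labeled scene made it into the file.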

The user can now generate a dense model for their object like so:

$ python join.py --dataset <path-to-dataset-dir> --sparse <path-to-sparse-model.txt> --meta <path-to-saved-meta-data>

The script ends by opening the Open3D interactive visualization window. Perform manual cropping if required and save the result as dense.ply.

If you already have a model file for your object (generated through CAD, scanners etc.), follow these steps to obtain sparse_model.txt:

- Open the model file in Meshlab.

- Click on PickPoints (Edit > PickPoints).

- Pick 6-10 keypoints on the object arbitrarily.

- "Save" as sparse_model.txt.

Once sparse_model.txt has been generated for a particular object, it is easy to generate labels for any scene. This requires the user to uniquely localize at least 3 points defined in the sparse model in the scene.

- Launch the GUI.

- Go to 'File' and 'Load Dataset'. Then 'Load Model'.

- Choose a previously generated sparse_model.txt (a Meshlab *.pp file can also be used in this step).

- Click on 'Load New Image'.

- Label at least 3 keypoints (that exist in the sparse model file).

- Click on 'Skip keypoint' if a keypoint is not visible (keypoint labeling is order-sensitive).

- Click on 'Compute'.

"Compute" tries to solve an orthogonal Procrustes problem on the given manual clicks and the input sparse model file. This will generate the saved_meta_data.npz again for the scenes for which labeling was done.
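
For intuition, the orthogonal Procrustes problem for rigid alignment can be solved in closed form with an SVD (the Kabsch algorithm). The sketch below is a generic version and not necessarily the implementation used by the GUI. It assumes the clicked pixels have already been lifted to 3D scene points and paired with the matching sparse-model points; `fit_rigid_transform` is a hypothetical name.

```python
import numpy as np


def fit_rigid_transform(model_pts, scene_pts):
    """Least-squares R, t such that scene is approximately R @ model + t."""
    model = np.asarray(model_pts, dtype=float)
    scene = np.asarray(scene_pts, dtype=float)
    if model.shape != scene.shape or model.ndim != 2 or model.shape[1] != 3:
        raise ValueError("expected two (N, 3) arrays of matching points")
    if model.shape[0] < 3:
        raise ValueError("at least 3 point correspondences are required")

    model_mean = model.mean(axis=0)
    scene_mean = scene.mean(axis=0)
    # Cross-covariance of the centered point sets.
    H = (model - model_mean).T @ (scene - scene_mean)
    U, _, Vt = np.linalg.svd(H)
    # Flip the last axis if needed so that R is a proper rotation (det = +1).
    d = 1.0 if np.linalg.det(Vt.T @ U.T) >= 0 else -1.0
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = scene_mean - R @ model_mean
    return R, t
```

Three non-collinear correspondences are the minimum that fixes a rigid transform in 3D, which matches the requirement of labeling at least 3 keypoints. Additional points help average out clicking errors.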

Once (1) sparse model file, (2) dense model file and (3) scene transformations (in saved_meta_data.npz) are available, run the following command:

$ python generate.py --sparse <path-to-sparse-model> --dense <path-to-dense-model> --meta <path-to-saved-meta-data-npz> --dataset <path-to-dataset-dir> --output <path-to-output-directory> --visualize

That is it. This should be enough to generate keypoint labels for stacked-hourglass training as described in ObjectKeypointTrainer, mask labels for training pixel-wise segmentation, and bounding-box labels for training a generic object detector. Other types of labels are possible too; please create an issue or pull request :)
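
As an illustration of how such labels relate to the camera intrinsics, the sketch below projects 3D points, already expressed in a frame's camera coordinates, to pixels with the pinhole model and takes their bounding box. This is a simplified, assumed pipeline and not a description of what generate.py does internally; `project_points` and `bounding_box` are hypothetical helpers.

```python
def project_points(points_cam, fx, fy, cx, cy):
    """Project 3D points in the camera frame (Z forward) to pixel coordinates."""
    pixels = []
    for x, y, z in points_cam:
        if z <= 0:
            raise ValueError("point is behind the camera")
        pixels.append((fx * x / z + cx, fy * y / z + cy))
    return pixels


def bounding_box(pixels, width, height):
    """Axis-aligned box (xmin, ymin, xmax, ymax) around pixels, clipped to the image."""
    us = [u for u, _ in pixels]
    vs = [v for _, v in pixels]
    xmin = max(0.0, min(us))
    ymin = max(0.0, min(vs))
    xmax = min(width - 1.0, max(us))
    ymax = min(height - 1.0, max(vs))
    return xmin, ymin, xmax, ymax
```

Projecting the sparse model points gives keypoint labels, while projecting the dense model points and taking their extent gives a bounding box for the same frame.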

You would probably need to convert the format of the generated labels to suit your requirements.

If you would like to train our pose estimator, you can directly use ObjectKeypointTrainer.

If you would like to train Mask-RCNN for instance segmentation, refer to this script to convert the binary masks to contour-points in JSON format.

Pull requests are welcome. For major changes, please open an issue first to discuss what you would like to change.

If you find this work useful in your own research, please consider citing:

@inproceedings{singh2021rapid,
  title={Rapid Pose Label Generation through Sparse Representation of Unknown Objects},
  author={Singh, Rohan P and Benallegue, Mehdi and Yoshiyasu, Yusuke and Kanehiro, Fumio},
  booktitle={2021 IEEE International Conference on Robotics and Automation (ICRA)},
  pages={10287--10293},
  year={2021},
  organization={IEEE}
}