Data Science Internship Assignment

Assignment for candidates


Data

- Time series. I have chosen this dataset, the [provided file](<data/raw/Learning Catalogue.csv>), and treated the provided file as a process fluctuating in time.

Task

- Assign skills to learning units: build a word-level model that predicts which skills should be assigned to each learning unit.

Working with files

- LearningUnitSkillAssignment.ipynb - contains the full analysis, including both models.

At the end of the notebook, you can enter a new text description and see the assigned skills.

For convenience, you can also use the Colab notebook directly to check the results and the approach (link).

Caution: when using Colab, you have to upload the dataset through the Files section in the left-hand sidebar.

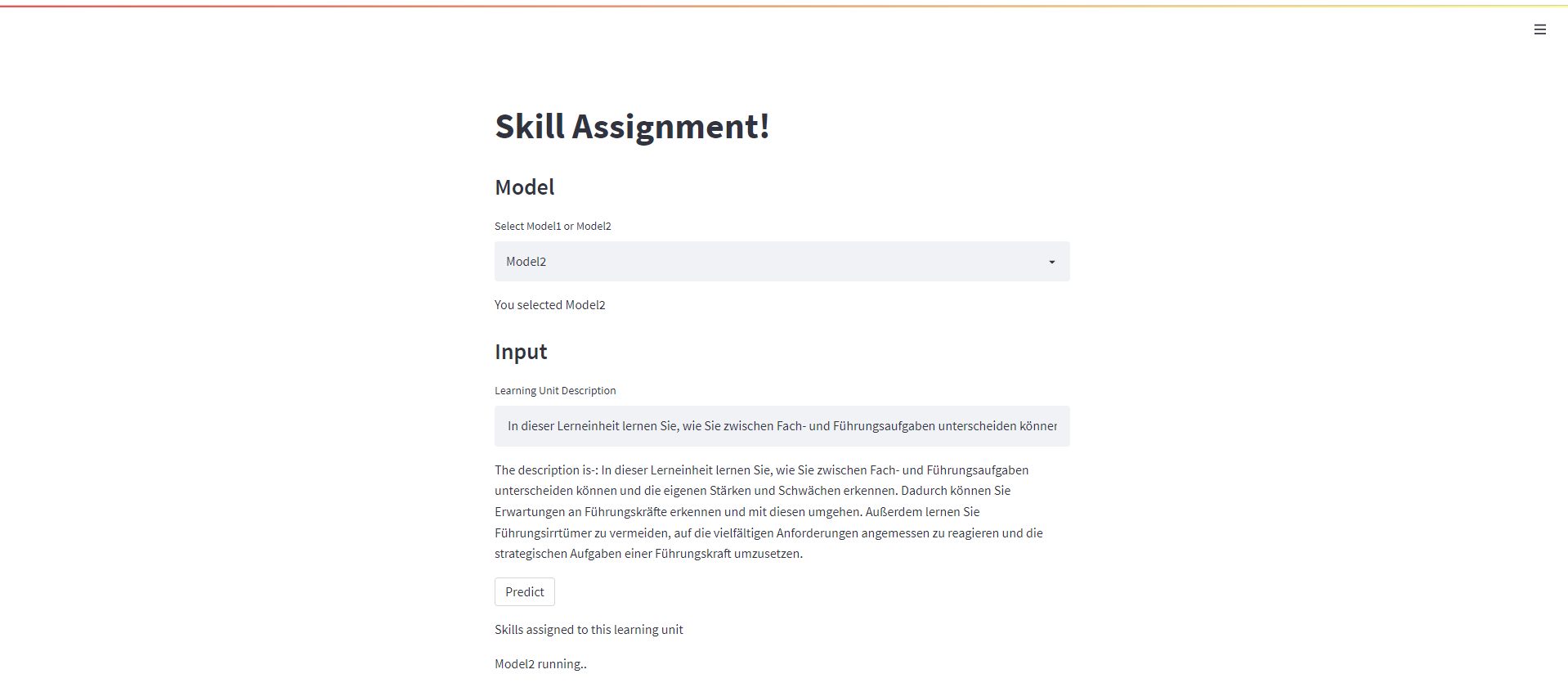

- app.py - a simple Streamlit app for using the trained model.

A glimpse of the app:

- predict.py - predicts the skills for a new test input; you only have to call one function.

- requirements.txt - lists all the requirements for running this project.

How to execute the files/code

- Either go through/run the Python notebook LearningUnitSkillAssignment.ipynb directly.

- Or run app.py (a Streamlit app is launched with `streamlit run app.py`) and use the model of your choice.

Modelling 🚀

- Create embeddings with the Universal Sentence Encoder (link), using its multilingual model.

- Word-based model: embeddings are created for each word in the description and for each skill.

- Compute the similarity between each word of the description and each skill.

- Keep the skills whose similarity to a word crosses a chosen threshold, count how often each skill crosses it across the description's words, and assign the highest-count skills to the description.
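The assignment steps above can be sketched in Python as follows. This is a minimal, hypothetical sketch, not the notebook's code: `assign_skills`, `cosine`, `threshold` and `top_k` are illustrative names and values, and the `embed` argument stands in for the multilingual Universal Sentence Encoder (in practice, a function returning one vector per word or skill). A tiny hand-made `toy_embed` replaces the real model so the example runs offline.

```python
import math
from collections import Counter
from typing import Callable, List, Sequence

Vector = Sequence[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def assign_skills(
    description: str,
    skills: List[str],
    embed: Callable[[str], Vector],  # assumption: wraps the sentence encoder
    threshold: float = 0.5,          # hypothetical threshold value
    top_k: int = 1,                  # hypothetical number of skills to return
) -> List[str]:
    """Hypothetical word-level skill assignment.

    Each word of the description is compared with each skill; a skill gets
    one vote per word whose similarity crosses `threshold`. The `top_k`
    skills with the most votes are returned.
    """
    skill_vecs = {skill: embed(skill) for skill in skills}
    votes: Counter = Counter()
    for word in description.lower().split():
        word_vec = embed(word)
        for skill, skill_vec in skill_vecs.items():
            if cosine(word_vec, skill_vec) > threshold:
                votes[skill] += 1
    return [skill for skill, _ in votes.most_common(top_k)]


# Toy 2-D "embeddings" standing in for the real model (illustration only).
toy_vectors = {
    "python": [1.0, 0.0],
    "programming": [0.9, 0.1],
    "excel": [0.0, 1.0],
    "spreadsheets": [0.1, 0.9],
}


def toy_embed(text: str) -> Vector:
    # Unknown words map to a zero vector, so they never cross the threshold.
    return toy_vectors.get(text.lower(), [0.0, 0.0])


print(assign_skills("Python programming for beginners",
                    ["Programming", "Spreadsheets"], toy_embed))
# ['Programming']
```

Each word casts at most one vote per skill, so skills matching many words of a description win. If no word crosses the threshold, the returned list is empty and no skill is assigned.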

Further development

I have trained two models: one after removing simple German stop words, and a second after removing German stop words plus the words that occur most often in our dataset.
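The two preprocessing variants can be sketched as below. Assumptions: `GERMAN_STOP_WORDS` is a deliberately tiny hypothetical list (a real run would use a full German stop-word list), and "words occurring most often in our dataset" is interpreted here as words appearing in at least a fraction `min_doc_fraction` of the descriptions; the cutoff actually used for the second model is not stated.

```python
from collections import Counter
from typing import Iterable, List, Set

# Hypothetical, deliberately tiny German stop-word list (illustration only).
GERMAN_STOP_WORDS: Set[str] = {
    "der", "die", "das", "und", "in", "mit", "für", "ein", "eine", "zu",
}


def tokenize(text: str) -> List[str]:
    return text.lower().split()


def frequent_words(docs: Iterable[str], min_doc_fraction: float) -> Set[str]:
    """Words appearing in at least `min_doc_fraction` of the documents."""
    docs = list(docs)
    doc_freq: Counter = Counter()
    for doc in docs:
        doc_freq.update(set(tokenize(doc)))  # count each word once per doc
    cutoff = min_doc_fraction * len(docs)
    return {word for word, count in doc_freq.items() if count >= cutoff}


def clean(text: str, stop_words: Set[str]) -> List[str]:
    return [w for w in tokenize(text) if w not in stop_words]


docs = [
    "Einführung in das Projektmanagement",
    "Projektmanagement mit Scrum und Kanban",
    "Grundlagen der Projektmanagement Methoden",
]

# Variant 1: remove simple German stop words only.
variant1 = [clean(d, GERMAN_STOP_WORDS) for d in docs]

# Variant 2: also remove words found in at least 60% of the documents.
extended = GERMAN_STOP_WORDS | frequent_words(docs, min_doc_fraction=0.6)
variant2 = [clean(d, extended) for d in docs]

print(variant1[0])  # ['einführung', 'projektmanagement']
print(variant2[0])  # ['einführung']
```

Removing corpus-frequent words such as "projektmanagement" stops them from matching the same skill in almost every description, at the cost of discarding words that may be genuinely informative.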

With more time, the following could be done:

- Try different embeddings and compare their metric scores.

- Because the dataset is unlabelled, supervised learning cannot be applied. With more data, we could apply self-learning to generate labels and then use supervised learning.