This repository implements classic deep reinforcement learning algorithms. Its aim is to provide clear code for people who want to learn these algorithms.

In the future, more algorithms will be added and the existing code will continue to be maintained.

Requirements

- tensorflow 1.6

- tensorboardX

- gym 0.10

- pytorch 0.4

Installation

- Install PyTorch

Please go to the official website to install it: https://pytorch.org/

We recommend using an Anaconda virtual environment to manage your packages.

- Install openai-baselines (openai-baselines is updated very frequently, so please use the older version shown below; this will be resolved in the future.)

# clone the openai baselines

git clone https://github.com/openai/baselines.git

cd baselines

git checkout 366f486

pip install -e .

DQN

Here I have uploaded two DQN models, trained on CartPole and MountainCar.

Tips for MountainCar-v0

The reward in MountainCar-v0 is very sparse: the agent gets no useful signal until the car reaches the top of the mountain. As a result, if no trajectory that reaches the top is sampled during training, the agent basically cannot learn. One remedy is to change the reward, for example by making it positively related to the car's current position. A more advanced alternative is inverse reinforcement learning (using a GAN).
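The reward change suggested above could look roughly like the sketch below. It is not the code used in this repository: the function name `shaped_reward` and the `scale` parameter are hypothetical, and the position bounds are assumptions based on the usual MountainCar-v0 observation (position, velocity) with the goal at position 0.5.

```python
# Assumed MountainCar-v0 geometry (not taken from this repository).
MIN_POSITION = -1.2   # left boundary of the track
GOAL_POSITION = 0.5   # position of the flag at the top of the mountain


def shaped_reward(observation, env_reward, scale=1.0):
    """Add a bonus that grows linearly with the car's position.

    observation: (position, velocity) as returned by the environment
    env_reward:  the original reward from the environment
    scale:       hypothetical weight of the position bonus
    """
    position = observation[0]
    # Normalise the position to 0 at the left wall and 1 at the goal.
    progress = (position - MIN_POSITION) / (GOAL_POSITION - MIN_POSITION)
    return env_reward + scale * progress
```

In a training loop, one would call `shaped_reward(next_state, reward)` right after `env.step(action)` and store the shaped value in the replay buffer instead of the raw reward.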

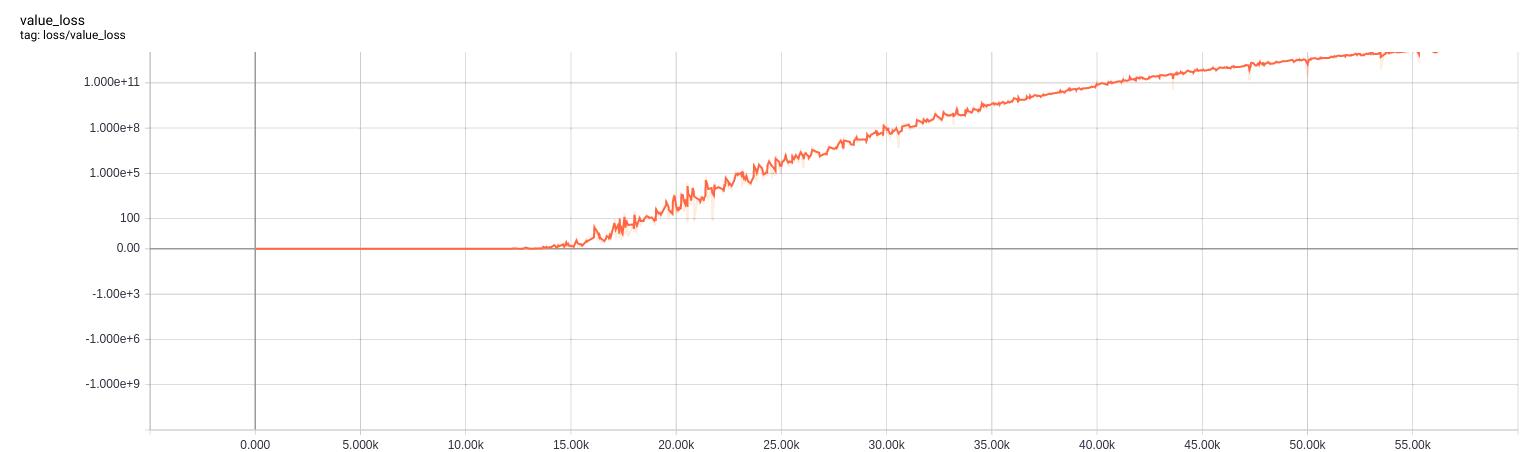

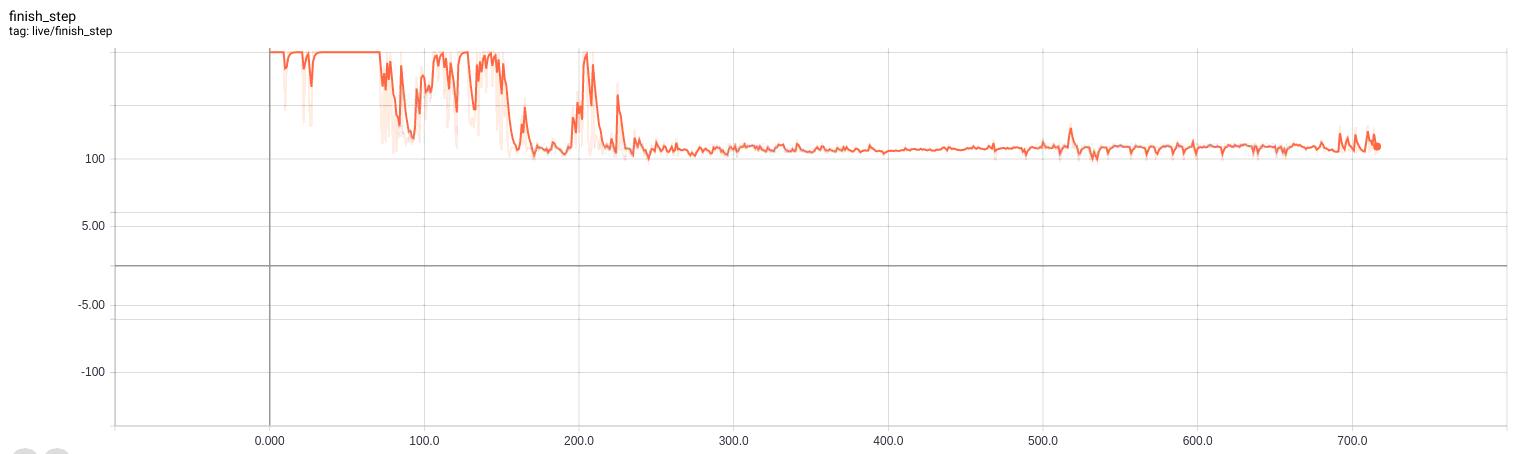

This is the value loss for DQN. The loss increases to about 1e13, yet the network still works well. As training goes on, target_net and act_net become very different, so the calculated loss becomes very large. The loss was small earlier because the reward was very sparse, which resulted in only small updates to the two networks.
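To make the scale of this loss concrete, here is a minimal sketch of a squared one-step TD error, assuming the usual DQN target of reward plus discounted maximum target-network Q-value. The function `td_loss` and the toy Q-values are hypothetical and are not taken from this repository's training code.

```python
def td_loss(q_act, q_target_next, action, reward, done, gamma=0.99):
    """Squared one-step TD error for a single transition.

    q_act:         Q-values from the acting network for the current state
    q_target_next: Q-values from the target network for the next state
    """
    # No bootstrapping from the next state once the episode has ended.
    bootstrap = 0.0 if done else gamma * max(q_target_next)
    target = reward + bootstrap
    return (q_act[action] - target) ** 2


# When the two networks roughly agree, the loss is small.
small = td_loss([1.0, 2.0], [2.0, 3.0], action=1, reward=-1.0, done=False, gamma=1.0)

# When the target network's estimate is far from the acting network's,
# the squared error grows to roughly 9e12.
large = td_loss([1.0, 2.0], [2.0, 3.0e6], action=1, reward=-1.0, done=False, gamma=1.0)
```

Because the error is squared, a gap of a few million between the two estimates is already enough to produce a loss in the 1e13 range.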

Papers Related to DQN

- Playing Atari with Deep Reinforcement Learning [arxiv] [code]

- Deep Reinforcement Learning with Double Q-learning [arxiv] [code]

- Dueling Network Architectures for Deep Reinforcement Learning [arxiv] [code]

- Prioritized Experience Replay [arxiv] [code]

- Noisy Networks for Exploration [arxiv] [code]

- A Distributional Perspective on Reinforcement Learning [arxiv] [code]

- Rainbow: Combining Improvements in Deep Reinforcement Learning [arxiv] [code]

- Distributional Reinforcement Learning with Quantile Regression [arxiv] [code]

- Hierarchical Deep Reinforcement Learning: Integrating Temporal Abstraction and Intrinsic Motivation [arxiv] [code]

- Neural Episodic Control [arxiv] [code]

Thanks to higgsfield!

Policy Gradient

Thanks to Luckysneed for the help!

Use the following command to run a saved model

python Run_Model.py

Use the following command to train the model

python pytorch_MountainCar-v0.py

policyNet.pkl

This is a model that I have trained.

Actor-Critic

Actor-Critic is an algorithmic framework. The classic REINFORCE method is also stored under Actor-Critic.

PPO

Proximal-Policy-Optimization

A2C

Advantage Actor-Critic (A2C), an advantage policy gradient method. A paper from 2017 pointed out that there is no clear difference in performance between A2C and A3C.

The Asynchronous Advantage Actor Critic method (A3C) has been very influential since the paper was published. The algorithm combines a few key ideas:

- An updating scheme that operates on fixed-length segments of experience (say, 20 timesteps) and uses these segments to compute estimators of the returns and advantage function.

- Architectures that share layers between the policy and value function.

- Asynchronous updates.
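The first idea in the list above, estimating returns and advantages from a fixed-length segment, can be sketched as follows. This is an illustrative example under stated assumptions, not the implementation in this repository: the function names, the `dones` flags, and the `bootstrap_value` argument (the critic's value estimate for the state after the segment) are all hypothetical.

```python
def n_step_returns(rewards, dones, bootstrap_value, gamma=0.99):
    """Discounted returns for one fixed-length segment of experience.

    rewards:         rewards collected in the segment, in time order
    dones:           True where the episode ended at that step
    bootstrap_value: value estimate of the state following the segment
    """
    returns = []
    running = bootstrap_value
    # Walk backwards so each step reuses the return of the step after it.
    for reward, done in zip(reversed(rewards), reversed(dones)):
        # Do not carry value across an episode boundary.
        running = reward + (0.0 if done else gamma * running)
        returns.append(running)
    returns.reverse()
    return returns


def advantages(returns, values):
    """Advantage estimate: segment return minus the critic's value estimate."""
    return [ret - value for ret, value in zip(returns, values)]
```

For a three-step segment with reward 1 at every step, no terminal state, a bootstrap value of 0, and `gamma=0.5`, the returns are 1.75, 1.5, and 1.0.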

A3C

Original paper: https://arxiv.org/abs/1602.01783

AlphaGo Zero

I will reproduce AlphaGo Zero in a wargame.

Timeline

- 2018/11/01 Released the Resnet15Dense1 version, which converges poorly, but its winning rate can reach 85%.

- 2018/11/12 Released the Resnet12Dense3 version, the first to converge with a stable trend, with a winning rate of 92%.

- 2018/11/13 Released the Resnet12Dense3-v2 version. This version is very stable and its winning rate is gradually increasing. Performance is still to be observed.

- 2018/11/15 After 3 days of training, released the Wargame AI version named Gou Chen. Its hit rate is stable at over 90%.

- 2018/11/16 Gou Chen's winning rate reaches 96%.

- 2018/11/25 Gou Chen v2 and v3 released.

Papers Related to Deep Reinforcement Learning

[1] A Brief Survey of Deep Reinforcement Learning

[2] The Beta Policy for Continuous Control Reinforcement Learning

[3] Playing Atari with Deep Reinforcement Learning

[4] Deep Reinforcement Learning with Double Q-learning

[5] Dueling Network Architectures for Deep Reinforcement Learning

[6] Continuous control with deep reinforcement learning

[7] Continuous Deep Q-Learning with Model-based Acceleration

[8] Asynchronous Methods for Deep Reinforcement Learning

[9] Trust Region Policy Optimization

[10] Proximal Policy Optimization Algorithms

[11] Scalable trust-region method for deep reinforcement learning using Kronecker-factored approximation

TO DO

- DDPG

- ACER

- TRPO

- DPPO