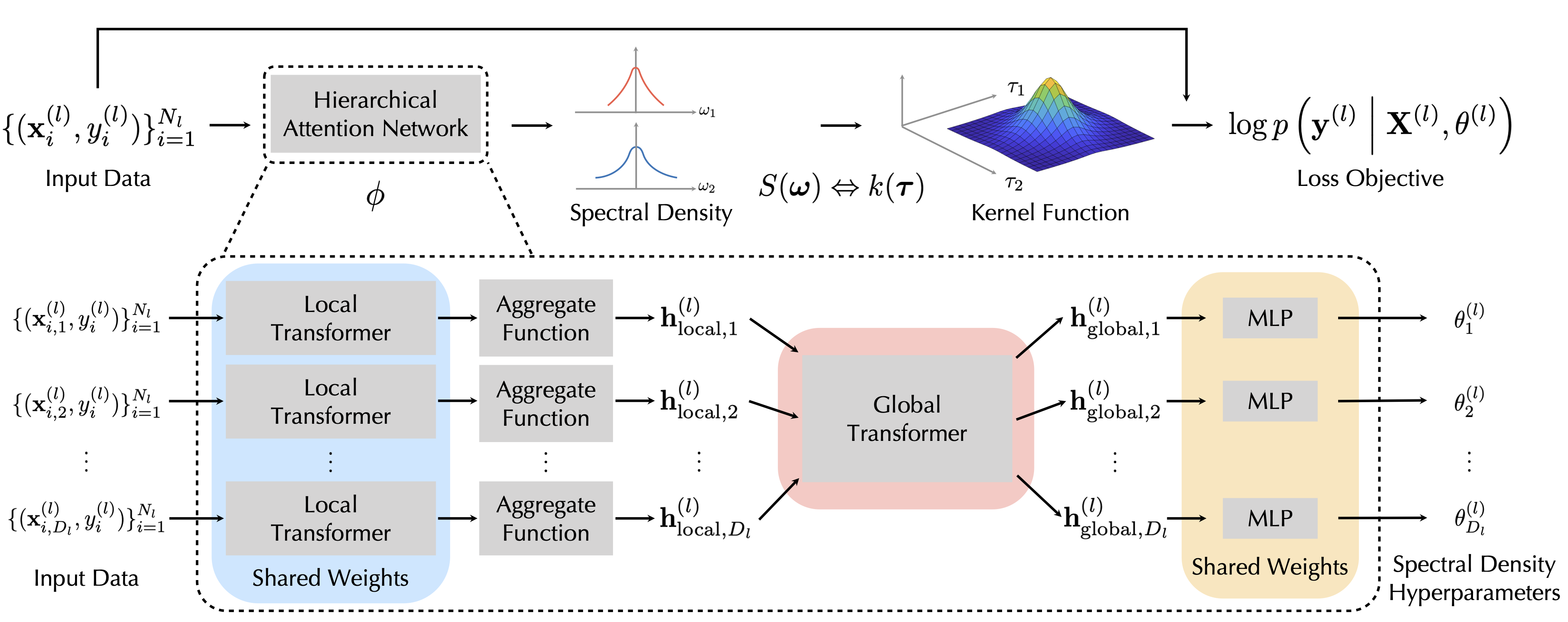

This repository contains code and pretrained models for the task-agnostic amortized inference of GP hyperparameters (AHGP) proposed in our NeurIPS 2020 paper. AHGP is a method for lightweight amortized inference of GP hyperparameters using a pretrained neural model.

The repository also includes a pip-installable package with the essential components of AHGP, so that you can use AHGP through simple function calls.

If you find this repository helpful, please cite our NeurIPS 2020 paper:

@inproceedings{liu2020ahgp,
  title={Task-Agnostic Amortized Inference of Gaussian Process Hyperparameters},
  author={Liu, Sulin and Sun, Xingyuan and Ramadge, Peter J. and Adams, Ryan P.},
  booktitle={Advances in Neural Information Processing Systems},
  year={2020}
}

The essential components of AHGP are packaged in ahgp/, which you can install from PyPI:

pip install amor-hyp-gp

amor-hyp-gp has the following dependencies:

- python >= 3.6
- pytorch >= 1.3.0
- tensorboardX
- easydict
- PyYAML

Usage examples are included in examples/.

example_ahgp_inference.py contains an example of full GP inference, which uses amortized GP hyperparameter inference and full GP prediction implemented in PyTorch.
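For readers unfamiliar with the prediction step, the following is a minimal NumPy sketch of exact GP regression via a Cholesky factorization. It is not the code in example_ahgp_inference.py, which uses PyTorch and takes its hyperparameters from the pretrained model; here a squared-exponential kernel with fixed, hand-picked hyperparameters is assumed, and the names `rbf_kernel` and `gp_predict` are hypothetical.

```python
import numpy as np


def rbf_kernel(a, b, lengthscale=1.0, variance=1.0):
    """Squared-exponential kernel between inputs a (n, d) and b (m, d)."""
    sq_dist = np.sum(a**2, axis=1)[:, None] + np.sum(b**2, axis=1)[None, :] - 2.0 * a @ b.T
    # Clip tiny negative distances caused by floating-point round-off.
    return variance * np.exp(-0.5 * np.maximum(sq_dist, 0.0) / lengthscale**2)


def gp_predict(x_train, y_train, x_test, kernel, noise_var=1e-2):
    """Exact GP posterior mean and latent-function variance at x_test."""
    k_xx = kernel(x_train, x_train) + noise_var * np.eye(len(x_train))
    k_sx = kernel(x_test, x_train)
    chol = np.linalg.cholesky(k_xx)  # k_xx = L L^T
    alpha = np.linalg.solve(chol.T, np.linalg.solve(chol, y_train))  # K^{-1} y
    mean = k_sx @ alpha
    v = np.linalg.solve(chol, k_sx.T)  # L^{-1} k(X, x*)
    var = np.diag(kernel(x_test, x_test)) - np.sum(v**2, axis=0)
    return mean, var


# Toy data: noisy samples of sin(x) on [-3, 3].
rng = np.random.default_rng(0)
x = rng.uniform(-3.0, 3.0, size=(20, 1))
y = np.sin(x[:, 0]) + 0.1 * rng.standard_normal(20)
x_star = np.linspace(-2.0, 2.0, 5)[:, None]
mean, var = gp_predict(x, y, x_star, rbf_kernel)
```

In AHGP the fixed `lengthscale` and `variance` above would be replaced by the kernel hyperparameters inferred by the neural model.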

example_ahgp_hyperparameters.py contains an example that outputs the GP hyperparameters (the means and variances of the Gaussian mixture used to model the spectral density).
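To illustrate how such hyperparameters define a kernel, here is a sketch of the standard one-dimensional spectral mixture kernel, k(τ) = Σ_q w_q exp(−2π²τ²v_q) cos(2πτμ_q), whose spectral density is a mixture of Gaussians with means μ_q and variances v_q. The mixture weights w_q and the example values are assumptions for illustration; AHGP's exact parametrization (for example, how multi-dimensional inputs or normalization are handled) may differ.

```python
import math


def spectral_mixture_kernel(tau, weights, means, variances):
    """1-D spectral mixture kernel evaluated at lag tau = x - x'.

    Each component corresponds to a Gaussian in the spectral domain with the
    given mean and variance; the weights are an assumed extra parameter.
    """
    return sum(
        w * math.exp(-2.0 * math.pi**2 * tau**2 * v) * math.cos(2.0 * math.pi * tau * mu)
        for w, mu, v in zip(weights, means, variances)
    )


# Hypothetical two-component mixture, for illustration only.
weights, means, variances = [0.7, 0.3], [0.0, 0.5], [0.05, 0.01]
k0 = spectral_mixture_kernel(0.0, weights, means, variances)  # equals the sum of weights
```

Because the kernel depends only on the lag τ, it is stationary, which matches the stationary GP priors used to train the pretrained model.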

config/model.yaml provides an example configuration file for the pretrained model used for amortized inference.

In pretrained_model/, we provide the pretrained model used in our paper, which was trained on 5000 synthetic datasets of relatively small size generated from stationary GP priors. We also encourage you to try training your own model for your specific problem!

An example of generating synthetic training data from GP priors with stationary kernels is provided in get_data_gp.py.
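The idea behind such synthetic training data can be sketched as follows, assuming a zero-mean GP prior with an RBF kernel on one-dimensional inputs plus Gaussian observation noise. The function name `sample_gp_dataset`, the input range, the dataset size, and the hyperparameter ranges are illustrative choices, not the settings used in get_data_gp.py or for the released model.

```python
import numpy as np


def sample_gp_dataset(n_points, lengthscale, variance, noise_var, rng):
    """Draw one synthetic regression dataset from a zero-mean GP prior with an
    RBF (stationary) kernel plus Gaussian observation noise."""
    x = np.sort(rng.uniform(-1.0, 1.0, size=n_points))
    diff = x[:, None] - x[None, :]
    k = variance * np.exp(-0.5 * diff**2 / lengthscale**2)
    # Small jitter keeps the Cholesky factorization numerically stable.
    chol = np.linalg.cholesky(k + 1e-6 * np.eye(n_points))
    f = chol @ rng.standard_normal(n_points)  # f ~ N(0, K)
    y = f + np.sqrt(noise_var) * rng.standard_normal(n_points)
    return x, y


rng = np.random.default_rng(0)
datasets = []
for _ in range(10):
    # Kernel hyperparameters are drawn per dataset (ranges are illustrative).
    ls = rng.uniform(0.1, 1.0)
    var = rng.uniform(0.5, 2.0)
    datasets.append(sample_gp_dataset(50, ls, var, 0.01, rng))
```

Drawing fresh hyperparameters for every dataset is what exposes a neural model to many different kernels, so that it can later infer hyperparameters for unseen data.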

To run the experiments, you will need the following extra dependencies:

- GPy
- emukit

The UCI regression benchmark datasets are stored in data/regression_datasets. The Bayesian optimization functions are implemented in utils/bo_function.py. The Bayesian quadrature functions are imported from the emukit package.

Training model

To train a neural model for amortized inference, you can run:

python run_exp.py -c config/train.yaml

In config/train.yaml, you can define the configurations for the neural model and the training hyperparameters.

Regression experiments

To run the regression benchmark experiments with the pretrained neural model, use:

python run_exp.py -c config/regression.yaml -t

In config/regression.yaml, you can define the configurations of the pretrained model and the regression experiment.

Bayesian optimization experiments

To run the Bayesian optimization experiments with the pretrained neural model, use:

python run_exp_bo.py -c config/bo.yaml

In config/bo.yaml, you can define the configurations of the pretrained model and the BO experiment.
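This README does not state which acquisition function run_exp_bo.py uses. As a hedged illustration of how a GP posterior drives BO, here is the common expected-improvement criterion for minimization, computed from a posterior mean and standard deviation. The function name and the `xi` exploration parameter are assumptions, not part of this repository.

```python
import math


def expected_improvement(mean, std, best_y, xi=0.0):
    """Expected improvement (minimization) of a point whose GP posterior is
    N(mean, std**2), relative to the best observed value best_y."""
    improvement = best_y - mean - xi
    if std <= 0.0:
        return max(improvement, 0.0)
    z = improvement / std
    pdf = math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)  # standard normal density
    cdf = 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))  # standard normal CDF
    return improvement * cdf + std * pdf


# Hypothetical posterior (mean, std) pairs at three candidate points.
candidates = [(0.5, 0.1), (0.2, 0.05), (0.4, 0.5)]
best_y = 0.3
scores = [expected_improvement(m, s, best_y) for m, s in candidates]
next_index = max(range(len(candidates)), key=lambda i: scores[i])
```

The third candidate wins despite its higher mean because its large posterior uncertainty gives it more potential for improvement; better GP hyperparameters translate directly into better-calibrated `mean` and `std` here.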

Bayesian quadrature experiments

To run the Bayesian quadrature experiments with the pretrained neural model, use:

python run_exp_bq.py -c config/bq.yaml

In config/bq.yaml, you can define the configurations of the pretrained model and the BQ experiment.
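The BQ experiments rely on emukit. Independently of that package, the sketch below shows the core computation of vanilla Bayesian quadrature under assumed settings: a zero-mean GP prior with an RBF kernel, integration over an interval [a, b] with respect to the Lebesgue measure, and closed-form kernel means via erf. The function name `bq_integral_estimate` and its defaults are hypothetical.

```python
import math

import numpy as np


def bq_integral_estimate(x, y, a, b, lengthscale=1.0, variance=1.0, jitter=1e-8):
    """Posterior-mean estimate of the integral of f over [a, b] under a
    zero-mean GP prior with an RBF kernel, given observations y = f(x)."""
    diff = x[:, None] - x[None, :]
    k = variance * np.exp(-0.5 * diff**2 / lengthscale**2) + jitter * np.eye(len(x))
    s = math.sqrt(2.0) * lengthscale
    # Kernel mean z_i = integral over [a, b] of k(t, x_i) dt, in closed form.
    z = np.array([
        variance * lengthscale * math.sqrt(math.pi / 2.0)
        * (math.erf((b - xi) / s) - math.erf((a - xi) / s))
        for xi in x
    ])
    # Integral estimate: z^T K^{-1} y.
    return float(z @ np.linalg.solve(k, y))


# Integrate sin(x) over [0, pi]; the exact value is 2.
x = np.linspace(0.0, math.pi, 10)
estimate = bq_integral_estimate(x, np.sin(x), 0.0, math.pi)
```

The estimate is simply the integral of the GP posterior mean, so its quality depends on the kernel hyperparameters, which is where amortized inference comes in.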

Please reach out to us with any questions!