Cloning human driving behavior using a deep learning model implemented in Keras.

- The training data for the autonomous driving agent consists of camera images taken from 3 (simulated) cameras mounted on the (simulated) car.

- The images act as the features for the deep learning model, whereas the labels are the control outputs - in this case, the steering angle.

- The data can either be generated with the driving simulator created by Udacity, available [here](https://github.com/udacity/self-driving-car-sim), or be downloaded from one of the project GitHub repos [here](https://d17h27t6h515a5.cloudfront.net/topher/2016/December/584f6edd_data/data.zip).

- A CSV log file contains the training entries, i.e. the 3 camera image filenames (the features) and their corresponding outputs (the labels).

- `model.py` has the main code to prepare the data, and create and train the Keras model.

  - This will save the trained model as `model.h5`
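As an illustration of the log format described above, here is a minimal sketch of reading such a CSV file with the standard library. The header names (`center`, `left`, `right`, `steering`) and the sample rows are assumptions made for the example, not taken from the actual log; a real log may also contain further columns, which `csv.DictReader` simply carries along unused.

```python
import csv
import io

# Hypothetical two-row excerpt of the log; header names are an assumption.
SAMPLE_LOG = """center,left,right,steering
IMG/center_001.jpg,IMG/left_001.jpg,IMG/right_001.jpg,0.0
IMG/center_002.jpg,IMG/left_002.jpg,IMG/right_002.jpg,-0.15
"""


def read_log(handle):
    """Return (center, left, right, steering) tuples from a CSV log."""
    records = []
    for row in csv.DictReader(handle):
        records.append((
            row["center"].strip(),     # feature: center camera filename
            row["left"].strip(),       # feature: left camera filename
            row["right"].strip(),      # feature: right camera filename
            float(row["steering"]),    # label: steering angle
        ))
    return records


# An in-memory file stands in for the real log on disk.
records = read_log(io.StringIO(SAMPLE_LOG))
```

To read a log from disk instead, the same function can be passed an open file handle, e.g. `open(path, newline="")`.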

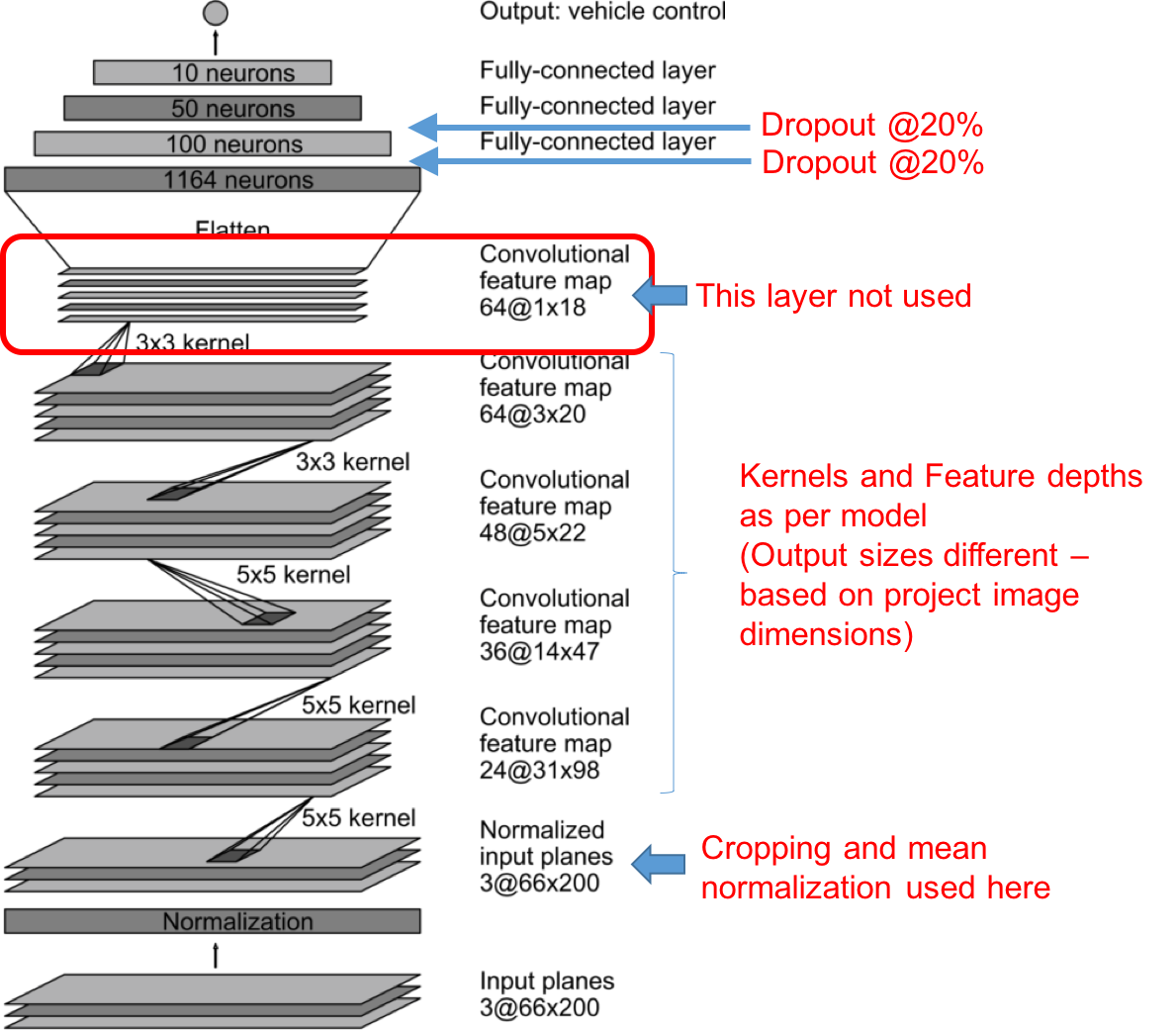

The initial development and analysis of various data augmentation and preprocessing techniques was done using LeNet. Once these steps yielded successively improved results, the final refinements were done using the NVIDIA model (minus one final convolutional layer).

- The first layer crops the image to the area of interest. Adding the layer into the Keras model itself ensures the image is cropped both during training and prediction.

- The next layer is normalization (scaled to 0-1 and mean centered).

- This is followed by 4 convolutional + max-pooling layers: 3 with 5x5 filters and feature depth increasing from 24 to 36 to 48, followed by 1 with 3x3 filters and depth 64. (These are the same as in the NVIDIA model, minus its last convolutional layer.)

- The data is then flattened and fed to fully connected layers of 1164, 100, 50 and 10 neurons.

- Dropout of 0.2 is applied between the 1164- and 100-neuron layers, and between the 100- and 50-neuron layers.

- The final output layer has a single output (the predicted steering angle), which is compared to the recorded value for regression.
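The cropping and normalization steps can be sketched in plain Python as follows. This is only an illustration on a tiny nested-list "image". In the project these steps run as layers inside the Keras model. The helper names, the crop amounts and the reading of "mean centered" as subtracting 0.5 are assumptions.

```python
def crop_rows(image, top, bottom):
    """Drop `top` rows from the top and `bottom` rows from the bottom."""
    return image[top:len(image) - bottom]


def normalize(image):
    """Scale 8-bit pixel values to 0-1, then shift them to be centered on 0."""
    return [[pixel / 255.0 - 0.5 for pixel in row] for row in image]


# Tiny 4x3 grayscale "image" (hypothetical values) standing in for a camera frame.
frame = [
    [0, 0, 0],
    [255, 255, 255],
    [51, 102, 204],
    [0, 0, 0],
]

# Keep only the two middle rows (the "area of interest"), then normalize them.
processed = normalize(crop_rows(frame, top=1, bottom=1))
```

After this step every value lies in the range -0.5 to 0.5, which is the kind of input range the subsequent layers are trained on.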

The model uses the MSE loss function and the Adam optimizer, with 20% of the data used for validation.
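To get a feel for how the convolutional stack shrinks the image before the fully connected layers, the feature-map sizes can be traced with a few lines of arithmetic. This is a rough sketch under stated assumptions, none of which come from the description above: 160x320 input frames, 50 rows cropped from the top and 20 from the bottom, stride-1 unpadded convolutions, and 2x2 max pooling.

```python
def conv_out(size, kernel, stride=1):
    """Output length of a 'valid' (unpadded) convolution along one axis."""
    return (size - kernel) // stride + 1


def pool_out(size, pool=2):
    """Output length of non-overlapping max pooling along one axis."""
    return size // pool


# ASSUMPTIONS: 160x320 frames, 50 rows cropped from the top, 20 from the bottom.
height, width = 160 - 50 - 20, 320

# (kernel size, feature depth) for the four conv + max-pooling stages.
stages = [(5, 24), (5, 36), (5, 48), (3, 64)]

shapes = []
for kernel, depth in stages:
    height = pool_out(conv_out(height, kernel))
    width = pool_out(conv_out(width, kernel))
    shapes.append((height, width, depth))

# Number of values handed to the first fully connected layer.
flattened = height * width * depth

# Fully connected layer sizes from the description, ending in the single output.
dense_sizes = [1164, 100, 50, 10, 1]
```

Under these assumptions the last feature map is 2x17x64, so the flattened vector feeding the 1164-neuron layer has 2176 values. Different crop amounts, strides or padding would change these numbers.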

- With the LeNet model, overfitting (an increasing trend in validation error) occurs after 6 epochs. For the final NVIDIA model, it occurs after 3 epochs. Further, Keras Dropout layers were used in the final NVIDIA model to prevent overfitting.

- Dropout of 20% was attempted at 3 layers, with the rationale of introducing dropout in the "densest" interconnections:

  - Between the flattened input and the first layer of 1164 neurons

  - Between the first layer of 1164 neurons and the second layer of 100 neurons

  - Between the second layer of 100 neurons and the third layer of 50 neurons

- After experimentation, the first dropout layer was eliminated and the remaining 2 were kept, since with all three in place the model started to underfit less-represented turn patterns (such as a "sharp left with a dirt boundary").

- The final model with dropout was trained for 2 epochs and gave good performance. The model was tested by running it through the simulator and ensuring that the vehicle stayed on the track.

In addition to network sophistication, the results are impacted most significantly by data augmentation and preprocessing.

- Each center image is augmented with its mirror image (and, correspondingly, the negative of its steering measurement).

- It's important to simulate and train for "recovery driving", meaning helping the model learn what to do when the car is drifting off track. This can be done either by forcing recovery driving in the simulator, or by using the left and right camera images as if they were center images. The latter means you need to adjust (increase/decrease) their steering measurements. This gives good additional data, which also teaches the model to recover!

- The first correction parameter value that generalized to the full lap was 0.3, though this caused some "swerving" on straight segments. The final value chosen for the correction was 0.25.

- Starting with 8036 training records, the augmented data set has 32144 images, of which 25715 are used for training and 6429 for validation.
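The augmentation steps above can be sketched as a function that expands one log record into four training samples, which is consistent with 8036 records becoming 32144 images. This is a plain-Python illustration, not the code in `model.py`. The function names, the toy "images" and the sign convention for the correction (added for the left camera, subtracted for the right) are assumptions.

```python
CORRECTION = 0.25  # final steering correction value reported above


def flip_horizontal(image):
    """Mirror an image stored as a list of pixel rows."""
    return [row[::-1] for row in image]


def augment(center, left, right, steering, correction=CORRECTION):
    """Expand one log record into four (image, steering) samples."""
    return [
        (center, steering),
        # Mirror image of the center frame, with the steering sign negated.
        (flip_horizontal(center), -steering),
        # ASSUMPTION: the left camera steers further right (+correction),
        # the right camera further left (-correction).
        (left, steering + correction),
        (right, steering - correction),
    ]


# Hypothetical 1x3 "images" standing in for the three camera frames.
samples = augment([[1, 2, 3]], [[4, 5, 6]], [[7, 8, 9]], steering=0.1)

# Data set sizes: four samples per record, then an 80/20 split.
total = 8036 * len(samples)
n_train = total * 8 // 10   # 80% for training, rounded down
n_valid = total - n_train   # the remainder for validation
```

With these numbers the split reproduces the 25715 training and 6429 validation images stated above.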

Getting the final smooth output requires many elements working together - data augmentation, suitable network complexity, correct dropout, and preprocessing (normalization, cropping the image to the relevant region of interest, etc).

- `drive.py` is a helpful script provided by Udacity to use the simulator to drive the car in autonomous mode. It uses the trained Keras model file `model.h5` described above.

  - This generates a stream of images 'seen' by the car in autonomous mode, which will then be stitched into a video with the script `video.py`.

- `run2.mp4` is the output of a successful autonomous run around the lap.