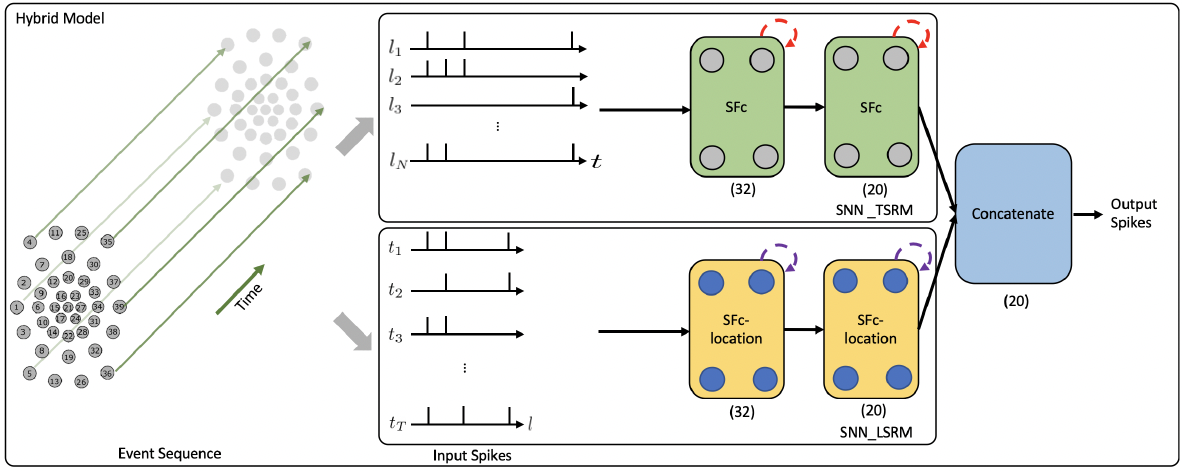

This package is a PyTorch implementation of the paper "Event-Driven Tactile Learning with Location Spiking Neurons".

Kang, Peng and Banerjee, Srutarshi and Chopp, Henry and Katsaggelos, Aggelos and Cossairt, Oliver. "Event-Driven Tactile Learning with Location Spiking Neurons". In 2022 International Joint Conference on Neural Networks (IJCNN 2022).

@inproceedings{kangTactile,
  title={Event-Driven Tactile Learning with Location Spiking Neurons},
  author={Kang, Peng and Banerjee, Srutarshi and Chopp, Henry and Katsaggelos, Aggelos and Cossairt, Oliver},
  booktitle={2022 International Joint Conference on Neural Networks (IJCNN)},
  pages={1--8},
  year={2022},
  organization={IEEE}
}

The code requires Python 3, slayerPytorch, and the packages listed in `requirements.txt`.

The project has been tested with a single RTX 3090 GPU on Ubuntu 20.04 and Ubuntu 22.04. Training and testing should each complete within minutes.

- Install slayerPytorch by following its requirements and installation instructions; see `slayerPytorch/README.md`.
- Install any other necessary packages listed in `requirements.txt` with `pip install` or `conda install`.
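To confirm the installation before training, a small pre-flight check can help. The following is a hypothetical helper, not part of this repository. It assumes the import names `torch` (PyTorch) and `slayerSNN` (the module name used in slayerPytorch's own examples) and only reports whether they can be found and whether PyTorch sees a CUDA device.

```python
import importlib.util

# Import names are assumptions: "torch" for PyTorch and "slayerSNN" for
# slayerPytorch. Edit this tuple if your installation differs.
REQUIRED_MODULES = ("torch", "slayerSNN")


def check_environment(modules=REQUIRED_MODULES):
    """Map each module name to True if it can be imported, else False."""
    # find_spec returns None for a top-level module that is not installed.
    return {name: importlib.util.find_spec(name) is not None for name in modules}


def cuda_available():
    """Return True if PyTorch is installed and reports a usable CUDA device."""
    if importlib.util.find_spec("torch") is None:
        return False
    import torch  # imported lazily so the check also runs without PyTorch

    return torch.cuda.is_available()


if __name__ == "__main__":
    for name, found in check_environment().items():
        print(f"{name}: {'found' if found else 'MISSING'}")
    print(f"CUDA available: {cuda_available()}")
```

`find_spec` locates a module without executing it, so the check stays cheap even when a package is heavy to import.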

- Download the preprocessed data here.
- Save the preprocessed data for Objects, Containers, and Slip Detection in `datasets/preprocessed`.
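Before launching a long run, it may be worth verifying that the data actually landed where the commands expect it. A minimal sketch of such a check, assuming only the `datasets/preprocessed` location named above; it does not assume any particular file names inside that folder, and the function name `check_preprocessed` is hypothetical.

```python
from pathlib import Path

# Default location given in this README; pass another path if the data
# lives elsewhere. No particular file layout inside it is assumed.
DEFAULT_DATA_DIR = Path("datasets/preprocessed")


def check_preprocessed(data_dir=DEFAULT_DATA_DIR):
    """Return sorted entry names in data_dir; raise if it is missing or empty."""
    data_dir = Path(data_dir)
    if not data_dir.is_dir():
        raise FileNotFoundError(f"{data_dir} is missing or not a directory")
    entries = sorted(p.name for p in data_dir.iterdir())
    if not entries:
        raise FileNotFoundError(f"{data_dir} is empty; download the preprocessed data first")
    return entries


if __name__ == "__main__":
    for name in check_preprocessed():
        print(name)
```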

`python locsnn/train_location_snn.py --epoch 500 --lr 0.001 --sample_file 1 --batch_size 8 --fingers both --data_dir <preprocessed data dir> --hidden_size 32 --loss NumSpikes --mode location --network_config <network_config>/container_weight_location.yml --task cw --checkpoint_dir <checkpoint dir>`

- The hybrid model with the whorl-like location order:

`python locsnn/train_location_snn.py --epoch 500 --lr 0.001 --sample_file 1 --batch_size 8 --fingers both --data_dir <preprocessed data dir> --hidden_size 32 --loss NumSpikes --mode location_cat_whorl --network_config <network_config>/container_weight_location.yml --task cw --checkpoint_dir <checkpoint dir>`

- Location Tactile SNN:

`python locsnn/train_location_snn.py --epoch 500 --lr 0.001 --sample_file 1 --batch_size 8 --fingers both --data_dir <preprocessed data dir> --hidden_size 32 --loss NumSpikes --mode only_location --network_config <network_config>/container_weight_location_only.yml --task cw --checkpoint_dir <checkpoint dir>`
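The three training commands for the Containers task (`--task cw`) differ only in `--mode` and `--network_config`. A hypothetical driver script (not included in the repository) could build and launch them in sequence. It reuses exactly the flags shown in those commands; the `<...>` placeholders must still be replaced with real paths.

```python
import shlex
import subprocess

# Flags and values copied from the training commands in this README.
COMMON_ARGS = {
    "--epoch": "500",
    "--lr": "0.001",
    "--sample_file": "1",
    "--batch_size": "8",
    "--fingers": "both",
    "--hidden_size": "32",
    "--task": "cw",
}

# --mode value -> network config file used with it in this README.
MODE_CONFIGS = {
    "location": "container_weight_location.yml",
    "location_cat_whorl": "container_weight_location.yml",
    "only_location": "container_weight_location_only.yml",
}


def build_command(mode, data_dir, config_dir, checkpoint_dir, loss="NumSpikes"):
    """Return the argv list for one run of locsnn/train_location_snn.py."""
    argv = ["python", "locsnn/train_location_snn.py"]
    for flag, value in COMMON_ARGS.items():
        argv += [flag, value]
    argv += [
        "--data_dir", data_dir,
        "--loss", loss,
        "--mode", mode,
        "--network_config", f"{config_dir}/{MODE_CONFIGS[mode]}",
        "--checkpoint_dir", checkpoint_dir,
    ]
    return argv


def run_all(data_dir, config_dir, checkpoint_dir, dry_run=True):
    """Print (and, unless dry_run, execute) one training run per mode."""
    for mode in MODE_CONFIGS:
        argv = build_command(mode, data_dir, config_dir, checkpoint_dir)
        print(shlex.join(argv))
        if not dry_run:
            subprocess.run(argv, check=True)  # stop at the first failing run
```

With `dry_run=True` the script only prints the commands. `build_command("location", ..., loss="WeightedLocationNumSpikes")` yields the weighted-loss run. Passing an argument list instead of a shell string to `subprocess.run` avoids quoting problems with paths that contain spaces.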

- $\lambda$ tuning in the weighted loss function: the value of $\lambda$ can be changed in `slayerPytorch/src/spikeLoss.py`; reinstall slayerPytorch afterwards so that the change takes effect.

`python locsnn/train_location_snn.py --epoch 500 --lr 0.001 --sample_file 1 --batch_size 8 --fingers both --data_dir <preprocessed data dir> --hidden_size 32 --loss WeightedLocationNumSpikes --mode location --network_config <network_config>/container_weight_location.yml --task cw --checkpoint_dir <checkpoint dir>`

- Confusion matrices on Containers:

`python confusion/confusion_location.py --runs <checkpoint dir>/cw_location_1`

- Timestep-wise inference:

`python timestep_inference/inference_timestep.py --runs <checkpoint dir>/cw_location_1 --save <timestep inference dir>`

- Download the models from https://drive.google.com/drive/folders/1XBzpbk5Vt7E7qevlOW06GvFY0N_N8ymU?usp=sharing.

- Save the models in the `history` folder.
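The confusion-matrix and timestep-wise inference scripts evaluate a trained or downloaded run. Their internals are not described in this README, so the following is only an illustration of a common spike-count readout, not the repository's implementation. It assumes that the predicted class is the output neuron with the most spikes (the quantity a spike-count loss such as `NumSpikes` targets) and that `spikes[t][c]` holds the spike count of class `c` at timestep `t` for one sample. It computes the prediction after every timestep, the per-timestep accuracy, and a confusion matrix.

```python
from typing import List, Sequence


def timestep_predictions(spikes: Sequence[Sequence[int]]) -> List[int]:
    """Predicted class after each timestep from cumulative spike counts.

    Ties are broken toward the lowest class index.
    """
    num_classes = len(spikes[0])
    counts = [0] * num_classes
    preds = []
    for step in spikes:
        for c, n in enumerate(step):
            counts[c] += n
        # max() returns the first index with the largest cumulative count.
        preds.append(max(range(num_classes), key=counts.__getitem__))
    return preds


def accuracy_per_timestep(all_spikes, labels) -> List[float]:
    """Fraction of samples classified correctly after each timestep."""
    per_sample = [timestep_predictions(s) for s in all_spikes]
    num_steps = len(per_sample[0])
    return [
        sum(p[t] == y for p, y in zip(per_sample, labels)) / len(labels)
        for t in range(num_steps)
    ]


def confusion_matrix(labels: Sequence[int], preds: Sequence[int], num_classes: int) -> List[List[int]]:
    """cm[i][j] counts samples of true class i predicted as class j."""
    cm = [[0] * num_classes for _ in range(num_classes)]
    for y, p in zip(labels, preds):
        cm[y][p] += 1
    return cm
```

For example, `timestep_predictions([[0, 1], [2, 0], [1, 0]])` gives `[1, 0, 0]`: class 1 leads after the first step, then class 0 overtakes it. Feeding the last entry of each sample's predictions into `confusion_matrix` gives the end-of-sequence confusion matrix.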

If the scripts cannot find the `locsnn` module, run the following in the repository root directory:

`export PYTHONPATH=.`
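When the scripts are launched from another Python program instead of an interactive shell, the same effect can be obtained by passing a modified environment to the child process. A minimal sketch using only the standard library; the helper name `env_with_repo_on_path` is hypothetical.

```python
import os
import subprocess
import sys


def env_with_repo_on_path(repo_root="."):
    """Return a copy of os.environ with repo_root prepended to PYTHONPATH.

    Unlike `export PYTHONPATH=.`, existing PYTHONPATH entries are kept.
    """
    env = dict(os.environ)
    root = os.path.abspath(repo_root)  # absolute, so the child's cwd does not matter
    existing = env.get("PYTHONPATH")
    env["PYTHONPATH"] = os.pathsep.join([root, existing]) if existing else root
    return env


if __name__ == "__main__":
    # Run from the repository root: checks that `locsnn` can be imported.
    subprocess.run(
        [sys.executable, "-c", "import locsnn"],
        env=env_with_repo_on_path("."),
        check=True,
    )
```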

The code of this work is based on slayerPytorch and VT-SNN.