Model Preparation is a stage in a DL developer's workflow in which users create or re-train models based on a given dataset. This package provides various Model Preparation Algorithms (MPA) in the form of Transfer Learning Recipes (TL-Recipe).

Given datasets and base models as inputs, TL-Recipe offers model adaptation schemes across data domains, model tasks, and NN architectures.

This package consists of

- Custom core modules which implement various TL algorithms

- Training configurations specifying module selection & hyper-parameters

- Tools and APIs to run transfer learning on given datasets & models

TL ( RECIPE, MODEL, DATA[, H-PARAMS] ) -> MODEL

- Model: NN arch + params to be transfer-learned

  - Explicitly enabled models only

  - No model input -> train default model from scratch

- Recipe: Custom modules & configs defining transfer learning schemes / modes

  - Defined up to NN arch (e.g. Incremental learning recipe for FasterRCNN, SSD, etc.)

  - Expose & accept controllable hyper-params w/ default value
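The `TL ( RECIPE, MODEL, DATA[, H-PARAMS] ) -> MODEL` signature above can be read as an ordinary function. The following is only a minimal sketch of that contract, not the package's API: `Recipe`, `Model`, and `transfer_learn` are hypothetical names, and the "training" step is a placeholder that just records what a real run would have used.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, Optional, Sequence


@dataclass
class Recipe:
    """Hypothetical stand-in for a TL recipe: a name plus default hyper-params."""
    name: str
    default_hparams: Dict[str, Any] = field(default_factory=dict)


@dataclass
class Model:
    """Hypothetical stand-in for 'NN arch + params'."""
    arch: str
    params: Dict[str, Any] = field(default_factory=dict)


def transfer_learn(recipe: Recipe,
                   model: Optional[Model],
                   data: Sequence[Any],
                   hparams: Optional[Dict[str, Any]] = None) -> Model:
    """Illustrates TL(RECIPE, MODEL, DATA[, H-PARAMS]) -> MODEL."""
    # Recipe defaults apply unless the caller overrides them.
    effective = {**recipe.default_hparams, **(hparams or {})}
    # No model input -> fall back to a default model trained from scratch.
    base = model if model is not None else Model(arch="default")
    # Placeholder for real training: record what the run would have used.
    new_params = dict(base.params)
    new_params["trained_with"] = {
        "recipe": recipe.name,
        "hparams": effective,
        "num_samples": len(data),
    }
    return Model(arch=base.arch, params=new_params)


recipe = Recipe("class_incremental", {"lr": 0.01, "epochs": 10})
new_model = transfer_learn(recipe, None, data=[1, 2, 3], hparams={"lr": 0.001})
```

Here the caller overrides only `lr`, so `epochs` keeps the recipe's default value, and passing `None` as the model selects the default architecture.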

TL-Recipe uses PyTorch as its base training framework and adopts MMCV as its modular configuration framework. Recipes in this package are implemented using config and module libraries that are also based on MMCV.
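To illustrate what "modular configuration" means in practice, here is a small sketch of the registry-plus-config pattern in plain Python. It only mimics the general idea and is not MMCV's code or API: `MODULES`, `register`, `build`, and `ToyClassifier` are names invented for this example.

```python
from typing import Any, Callable, Dict

# Minimal registry: maps a type name to the class that implements it.
MODULES: Dict[str, Callable[..., Any]] = {}


def register(cls):
    """Register a class under its own name so configs can refer to it."""
    MODULES[cls.__name__] = cls
    return cls


def build(cfg: Dict[str, Any]) -> Any:
    """Instantiate the class named by cfg['type'] with the remaining keys."""
    args = dict(cfg)  # copy so the caller's config is not mutated
    cls = MODULES[args.pop("type")]
    return cls(**args)


@register
class ToyClassifier:
    # Hypothetical module used only for this illustration.
    def __init__(self, num_classes: int, backbone: str = "resnet18"):
        self.num_classes = num_classes
        self.backbone = backbone


# A config is plain data: it selects a module and sets its hyper-parameters.
model_cfg = {"type": "ToyClassifier", "num_classes": 5}
model = build(model_cfg)
```

Because the config is plain data, swapping modules or changing hyper-parameters does not require touching the training code, which is the property recipes rely on.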

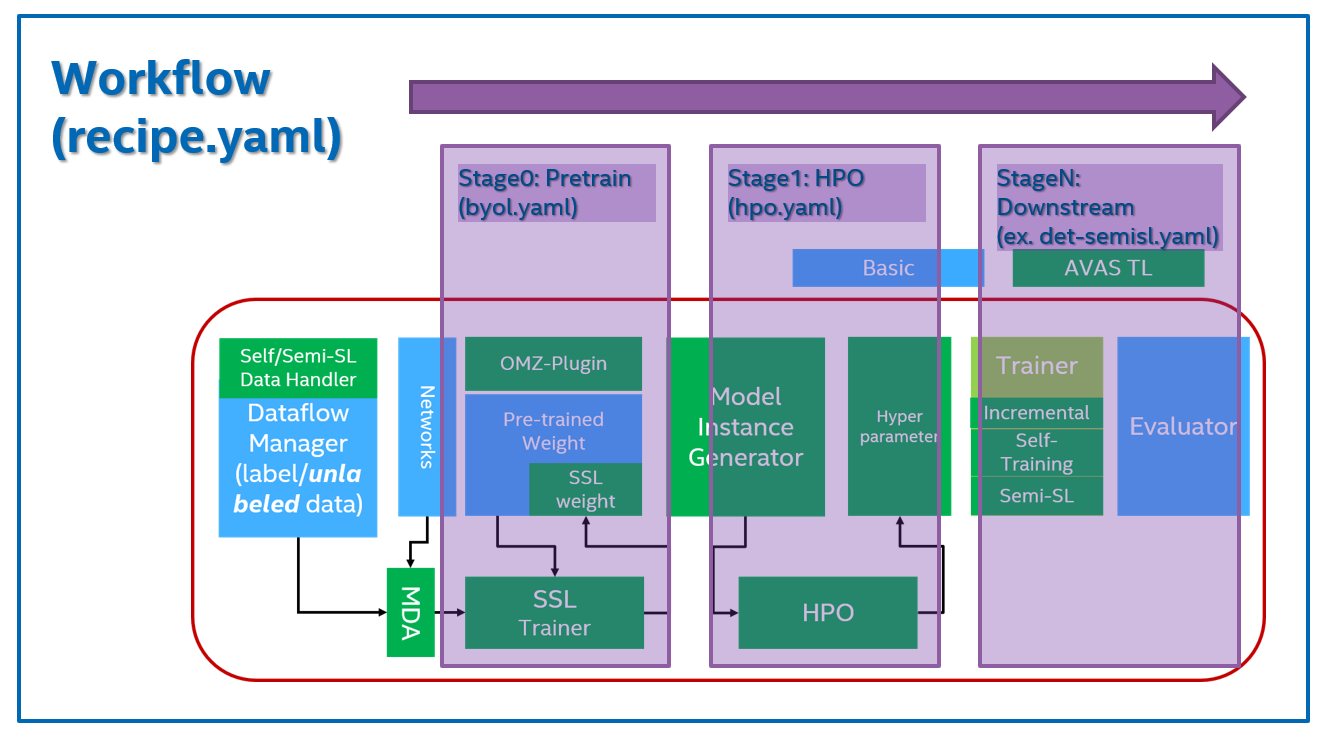

On top of the above framework and libraries, we designed a Multi-stage Workflow Framework:

- Workflow: Model training w/ series of sub-stages (RECIPE)

- Stage: Individual (pre)train tasks (SUB-RECIPE)
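The workflow/stage relationship can be sketched as a loop that runs stages in order and hands each stage's output to the next. This is an illustrative sketch only: `run_workflow`, `pretrain`, and `finetune` are hypothetical names, and the dictionary "state" stands in for real model weights and artifacts.

```python
from typing import Any, Callable, Dict, List, Tuple

# A stage takes the current state and returns the updated state.
Stage = Callable[[Dict[str, Any]], Dict[str, Any]]


def run_workflow(stages: List[Tuple[str, Stage]],
                 state: Dict[str, Any]) -> Dict[str, Any]:
    """Run stages in order, feeding each stage's output to the next one."""
    for name, stage in stages:
        # Keep a record of which stages have run so far.
        history = state.get("history", []) + [name]
        state = {**stage(state), "history": history}
    return state


def pretrain(state: Dict[str, Any]) -> Dict[str, Any]:
    # Hypothetical pre-training stage.
    return {**state, "weights": "pretrained"}


def finetune(state: Dict[str, Any]) -> Dict[str, Any]:
    # Hypothetical fine-tuning stage that starts from the previous weights.
    return {**state, "weights": state["weights"] + "+finetuned"}


result = run_workflow([("pretrain", pretrain), ("finetune", finetune)], {})
```

Each stage corresponds to a sub-recipe, and the ordered list of stages corresponds to the recipe as a whole.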

TL-Recipe supports various TL methods for Computer Vision tasks and their related NN model architectures.

- Classification

- Detection

- Segmentation

  - (WIP) Instance segmentation

  - Semantic segmentation

  - (TBD) Panoptic segmentation

Train / Infer / Evaluate / Export operations are supported for each task.

The following TL methods are supported:

- Class-Incremental Learning

- Self-Supervised Learning (available soon!)

- Semi-Supervised Learning (available soon!)

TL-Recipe supports transfer learning for a subset of:

- OTE (OpenVINO Training Extensions) models

- Some custom PyTorch models

For a detailed list, please refer to: OTE Model Preparation Algorithms

Users could use TL-Recipe to retrain their models via

Please refer to: TBD

- Ubuntu 16.04 / 18.04 / 20.04 LTS x64

- Intel Core/Xeon

- (optional) NVIDIA GPUs

  - tested with

    - GTX 1080Ti

    - RTX 3080

    - RTX 3090

    - Tesla V100

To use MPA on your system, there are two different ways to set up the development environment:

- Set up the environment on your host machine using virtual environments (`virtualenv` or `conda`)

- Set up the environment using `Docker`

To contribute code to the MPA project, all of your changes must pass linting. We use Flake8 for this, and we recommend running it through a Git hook before committing your changes.

- how to install: please go to the link below to install on your development environment

- For example, you can install `Flake8` and `pre-commit` using `Anaconda`:

  ```bash
  # install flake8 to your development environment (Anaconda)
  $ conda install flake8=3.9 -y
  # install pre-commit module
  $ conda install pre-commit -c conda-forge -y
  # install pre-commit hook to the local git repo
  $ pre-commit install
  ```

Note: After installing and setting up `Flake8` using the instructions above, your commit will be rejected if your changes (staged files) have any lint issues.

We provide a shell script, `init_venv.sh`, that creates a virtual environment for MPA using `virtualenv`.

```bash
# init submodules
...mpa$ git submodule update --init --recursive
# create a virtual environment at the path ./venv, named 'mpa'
...mpa$ ./init_venv.sh ./venv
# activate the created virtualenv
...mpa$ source ./venv/bin/activate
# run the mpa cli to get the help message
(mpa)...mpa$ python -m tools.cli -h
```

Note: This script assumes that a suitable version of CUDA (10.2 or 11.1) for MPA is installed on your system.
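Since the setup assumes CUDA 10.2 or 11.1, a quick check before training can save some debugging. The sketch below is not part of MPA: `is_supported_cuda` and `detect_cuda_version` are hypothetical helpers. It also reads `torch.version.cuda`, which reports the CUDA version PyTorch was built against rather than the toolkit installed on the system, so treat it as a rough sanity check.

```python
from typing import Optional

SUPPORTED_CUDA = ("10.2", "11.1")  # versions named in the setup note


def is_supported_cuda(version: Optional[str]) -> bool:
    """Return True if the version string matches a supported major.minor."""
    if version is None:  # CPU-only build, or PyTorch is not installed
        return False
    major_minor = ".".join(version.split(".")[:2])
    return major_minor in SUPPORTED_CUDA


def detect_cuda_version() -> Optional[str]:
    """Read the CUDA version PyTorch was built with, if PyTorch is available."""
    try:
        import torch
    except ImportError:
        return None
    return torch.version.cuda  # None on CPU-only builds


if __name__ == "__main__":
    found = detect_cuda_version()
    print(f"CUDA: {found}, supported: {is_supported_cuda(found)}")
```

Run it inside the activated virtual environment so that it inspects the same PyTorch installation MPA will use.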

Refer to the OTE CLI guide.