This work proposes a novel transformer-based approach for estimating the transfer entropy (TE) for stationary processes, incorporating the state-of-the-art capabilities of transformers.

TREET (Transfer Entropy Estimation via Transformers) is an attention-based neural estimator of TE over continuous spaces. The approach expresses TE through the Donsker-Varadhan representation and analyzes the estimation and approximation stages to prove the estimator's consistency. A detailed implementation is given, including the modifications made to the attention mechanism and an estimation algorithm. In addition, a joint estimation and optimization algorithm is presented, with a Neural Distribution Generator (NDG) as an auxiliary model.
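For reference, the Donsker-Varadhan representation is the variational formula below, shown next to the standard (Schreiber) definition of TE as a conditional mutual information. The history lengths k and l and the time indexing follow the common textbook convention and are an assumption here; the paper's exact notation may differ.

```latex
% Donsker-Varadhan representation of the KL divergence.
% The supremum is over functions T for which both expectations are finite.
D_{\mathrm{KL}}(P \,\|\, Q) = \sup_{T} \; \mathbb{E}_{P}[T] - \log \mathbb{E}_{Q}\!\left[e^{T}\right]

% Transfer entropy from X to Y as a conditional mutual information
% (standard convention; the history lengths k and l are assumptions).
\mathrm{TE}_{X \to Y}(k, l) = I\!\left(Y_t ;\, X_{t-l}^{t-1} \,\middle|\, Y_{t-k}^{t-1}\right)
```

A neural estimator replaces T with a parametric network and the expectations with sample means; in TREET that network is attention-based.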

We demonstrate the algorithms on several sequence-related tasks: estimating channel coding capacity (and proving its relation to TE), highlighting the memory capabilities of the estimator, and presenting a feature analysis on the Apnea dataset.

TREET: TRansfer Entropy Estimation via Transformers Paper

All results are presented in our paper.

1. Estimation

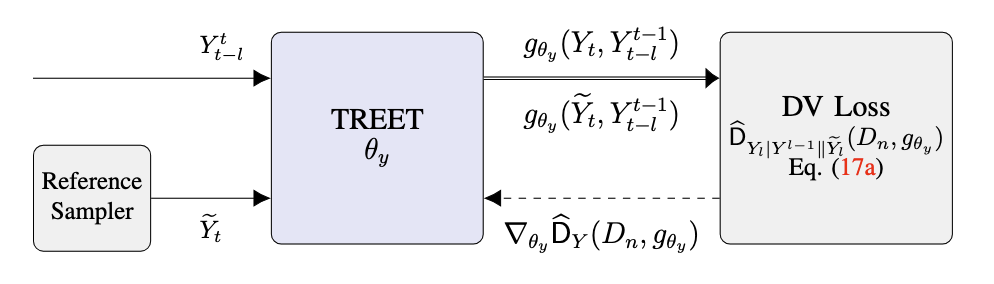

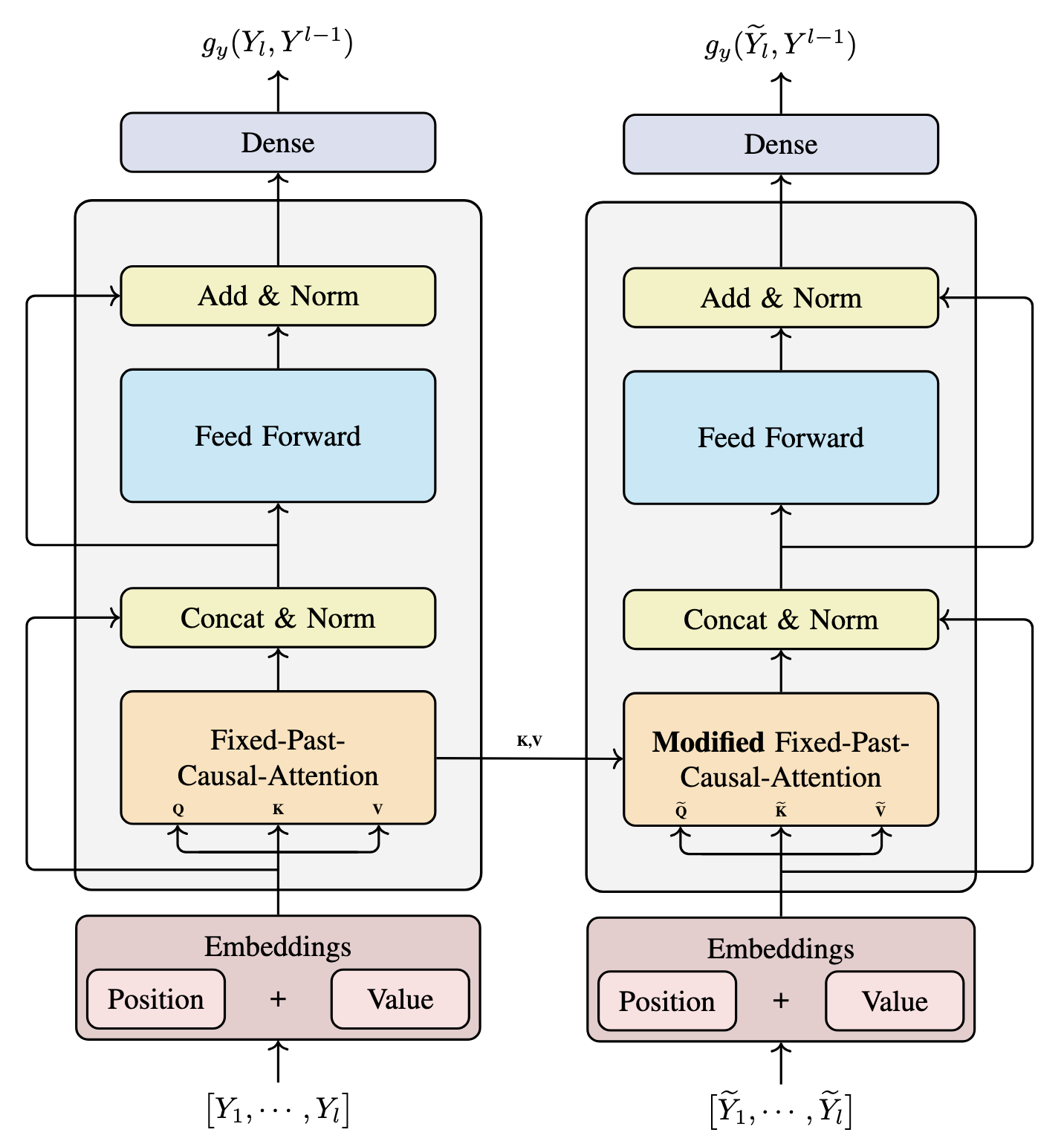

We present a novel algorithm to estimate TE for a given memory parameter l,

Figure 1. Overall scheme for estimation process (TE first part).

Figure 2. Overall architecture for estimation (TE first part).
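The estimator rests on the Donsker-Varadhan bound, which can be illustrated on a toy problem in plain Python. This is a minimal sketch, not the TREET implementation: the critic is a closed-form function rather than a transformer, the two distributions are 1-D Gaussians chosen so the true KL divergence is known, and the names `dv_bound` and `demo` are hypothetical.

```python
import math
import random


def dv_bound(critic, samples_p, samples_q):
    """Empirical Donsker-Varadhan value: mean_P[T] - log mean_Q[exp(T)]."""
    mean_p = sum(critic(x) for x in samples_p) / len(samples_p)
    mean_exp_q = sum(math.exp(critic(x)) for x in samples_q) / len(samples_q)
    return mean_p - math.log(mean_exp_q)


def demo(n=20000, seed=0):
    """Evaluate the bound for P = N(1, 1) and Q = N(0, 1), where KL(P||Q) = 0.5."""
    rng = random.Random(seed)
    samples_p = [rng.gauss(1.0, 1.0) for _ in range(n)]
    samples_q = [rng.gauss(0.0, 1.0) for _ in range(n)]
    # The optimal critic is the log density ratio, log dP/dQ = x - 0.5,
    # so this value should land close to the true KL of 0.5.
    optimal = dv_bound(lambda x: x - 0.5, samples_p, samples_q)
    # Any other critic yields a looser bound (population value 0.375 here).
    loose = dv_bound(lambda x: 0.5 * x, samples_p, samples_q)
    return optimal, loose


optimal, loose = demo()
print(f"optimal critic: {optimal:.3f}, loose critic: {loose:.3f}")
```

Training a neural estimator amounts to searching over critics to push this value up; a more expressive critic, such as an attention-based network, can get closer to the supremum.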

2. Optimization

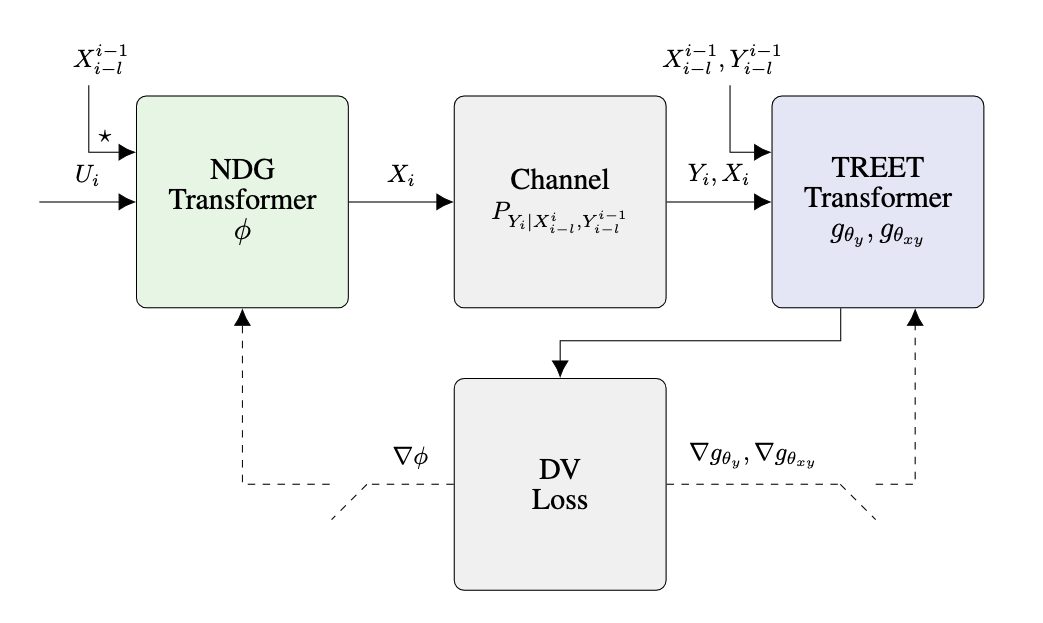

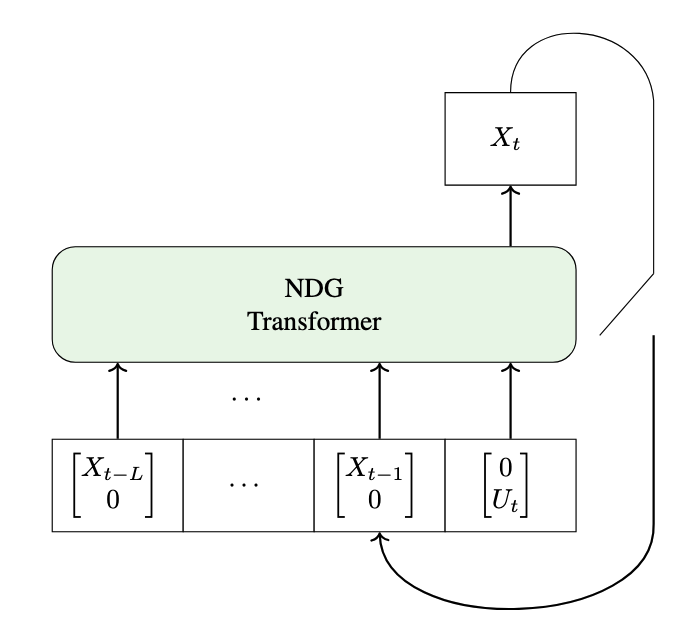

In addition, we use Neural Distribution Generator (NDG) for optimizing TE, while estimating it, with alternate learning procedure,

Figure 3. Overall scheme for optimization and estimation process (TE first part).

Figure 4. NDG architecture for estimation (without feedback).
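The alternating schedule can be sketched with scalar parameters. This is only an illustration of the update pattern, under stated assumptions: `theta` stands in for the estimator weights, `phi` for the NDG weights, and a simple concave function with analytic gradients stands in for the estimated TE. None of these names or step counts come from the repository.

```python
def alternate_training(theta, phi, grad_theta, grad_phi,
                       rounds=300, est_steps=5, gen_steps=1, lr=0.1):
    """Alternate gradient-ascent updates between two parameter groups."""
    for _ in range(rounds):
        # Estimator phase: tighten the estimate while the generator is frozen.
        for _ in range(est_steps):
            theta += lr * grad_theta(theta, phi)
        # Generator phase: improve the objective while the estimator is frozen.
        for _ in range(gen_steps):
            phi += lr * grad_phi(theta, phi)
    return theta, phi


# Stand-in objective (an assumption for illustration):
# f(theta, phi) = -(theta - phi)^2 - (phi - 2)^2, maximized at theta = phi = 2.
def grad_theta(theta, phi):
    return -2.0 * (theta - phi)


def grad_phi(theta, phi):
    return 2.0 * (theta - phi) - 2.0 * (phi - 2.0)


theta, phi = alternate_training(0.0, 0.0, grad_theta, grad_phi)
print(f"theta={theta:.4f}, phi={phi:.4f}")
```

Running several estimator steps per generator step is one common way to keep the estimate reasonably tight before the generator moves; the ratio used here is arbitrary.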

- Install Python 3.8.10, PyTorch 2.0.1 and all requirements from the `requierments.txt` file.
- (Optional) Download data via script: `./datasets/apnea/downloader.sh`. Check here for further details about the Apnea dataset.
- Train the model. We provide the experiment scripts of all benchmarks under the folder `./config`. You can reproduce the experiment results by: `python run.py --config <file>.json5`

If you find this repo useful, please cite our paper.

@misc{luxembourg2024treet,
  title={TREET: TRansfer Entropy Estimation via Transformer},
  author={Omer Luxembourg and Dor Tsur and Haim Permuter},
  year={2024},
  eprint={2402.06919},
  archivePrefix={arXiv},
  primaryClass={cs.IT}
}

If you have any questions or want to use the code, please contact omerlux@post.bgu.ac.il.

We appreciate the following sources for code base and datasets:

- Repository - https://github.com/thuml/Autoformer
- Apnea Data - https://physionet.org/content/santa-fe/1.0.0/
- Logo making - https://openai.com/dall-e/